FIRST Robotics Competition Control System

Welcome to the FIRST® Robotics Competition Control System Documentation! This site contains everything you need to know for programming a competition robot!

Community translations can be found in a variety of languages in the bottom-left menu.

Returning Teams

If you are a returning team, please check out the overview of changes from 2021 to 2022 and the cards below for a refresher.

New Teams

The Zero-to-Robot tutorial will guide you through preparation, wiring and programming a basic robot!

Hardware Overview

An overview of the hardware components available to teams.

Software Overview

An overview of the software components and tools available to teams.

Programming Basics

Documentation that is useful throughout a team’s programming process.

Advanced Programming

Documentation that is suited to veteran teams. This includes content such as Path Planning and Kinematics.

Hardware

Hardware tutorials and content available for teams.

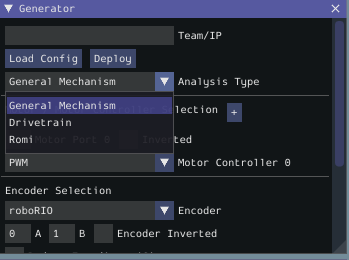

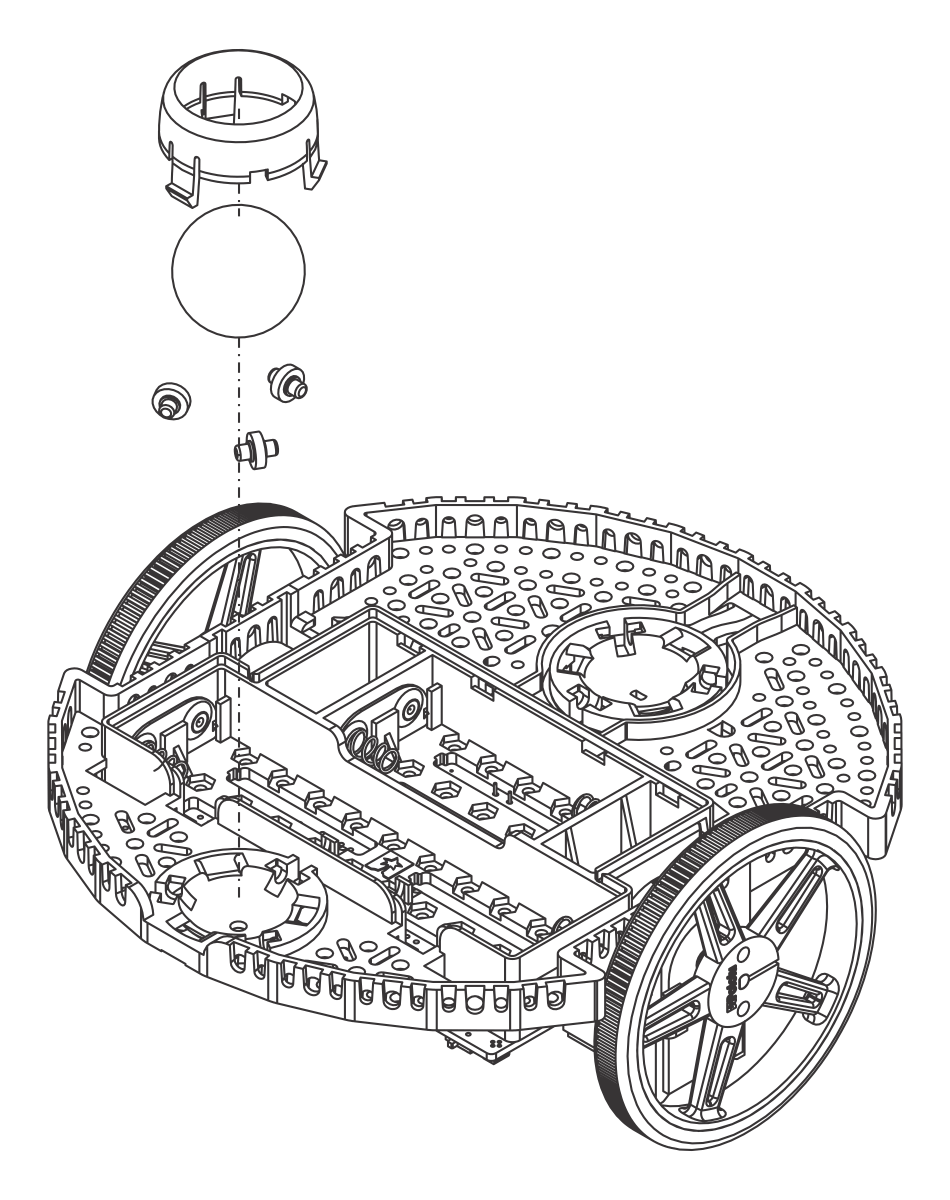

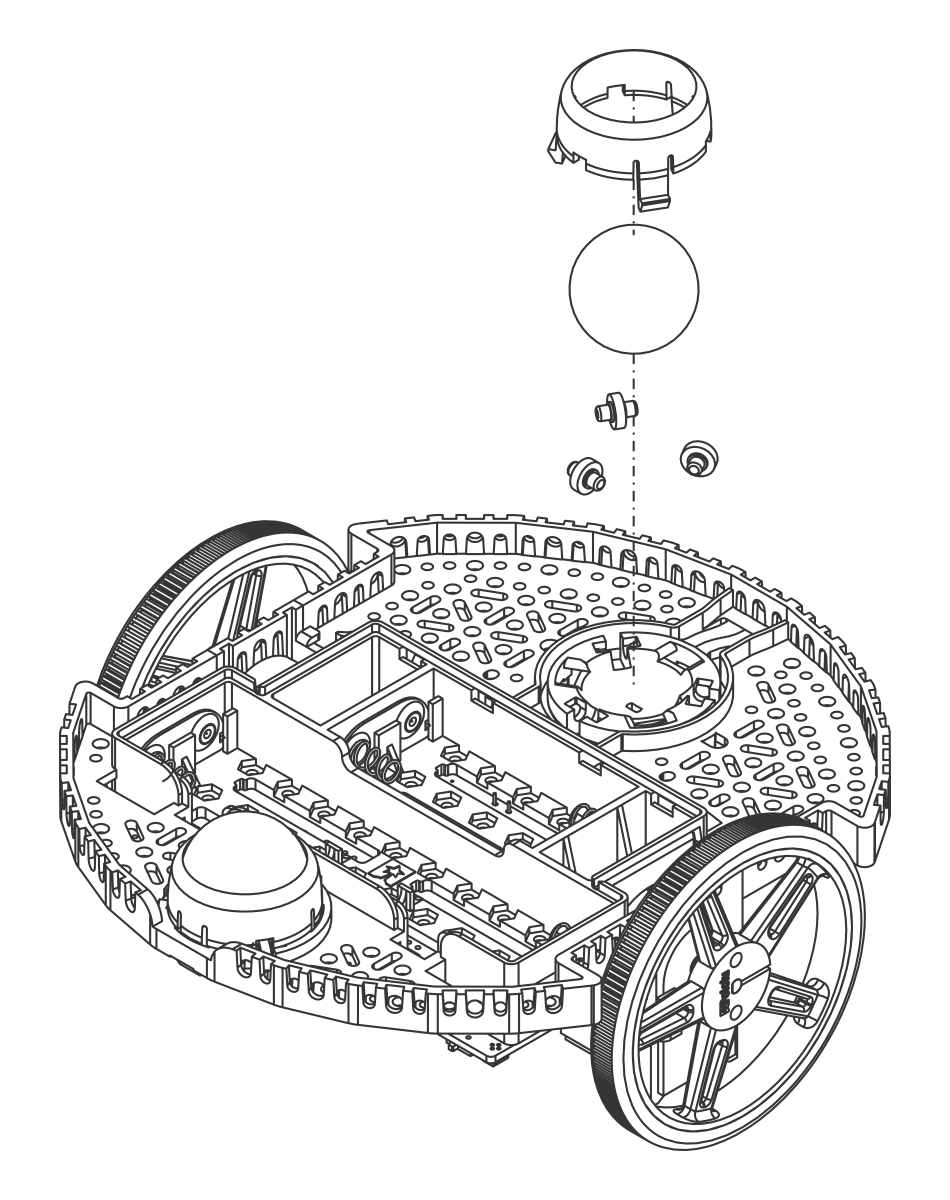

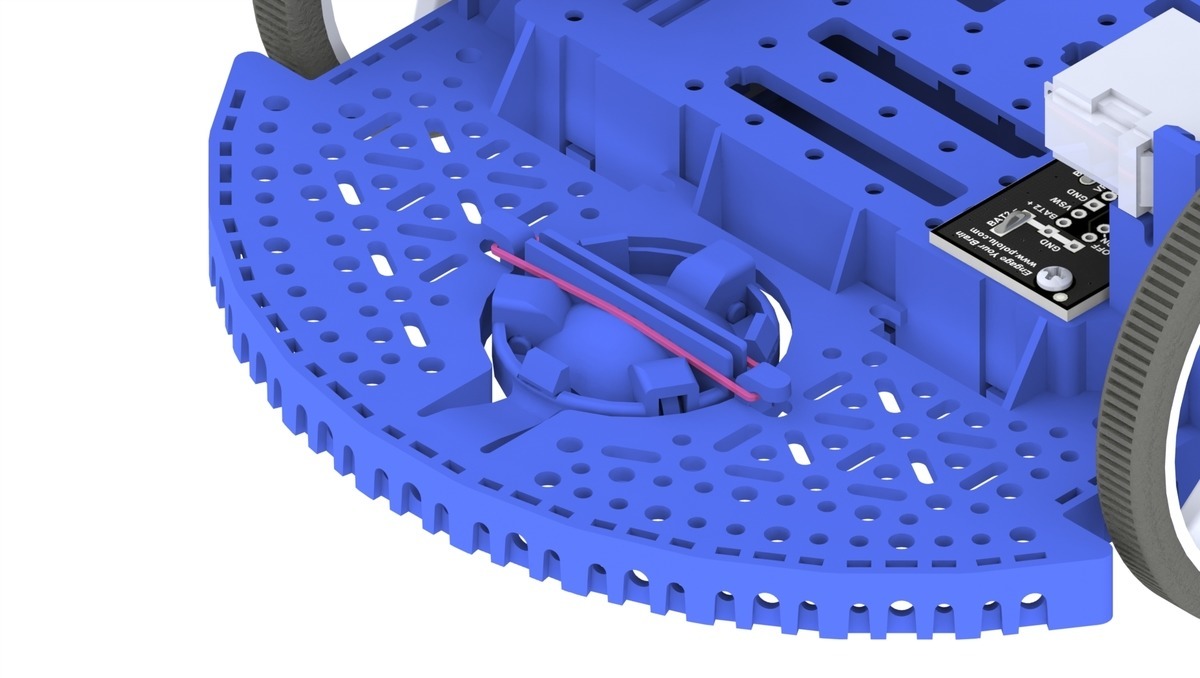

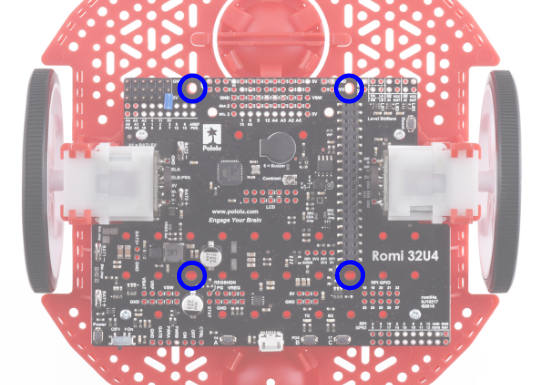

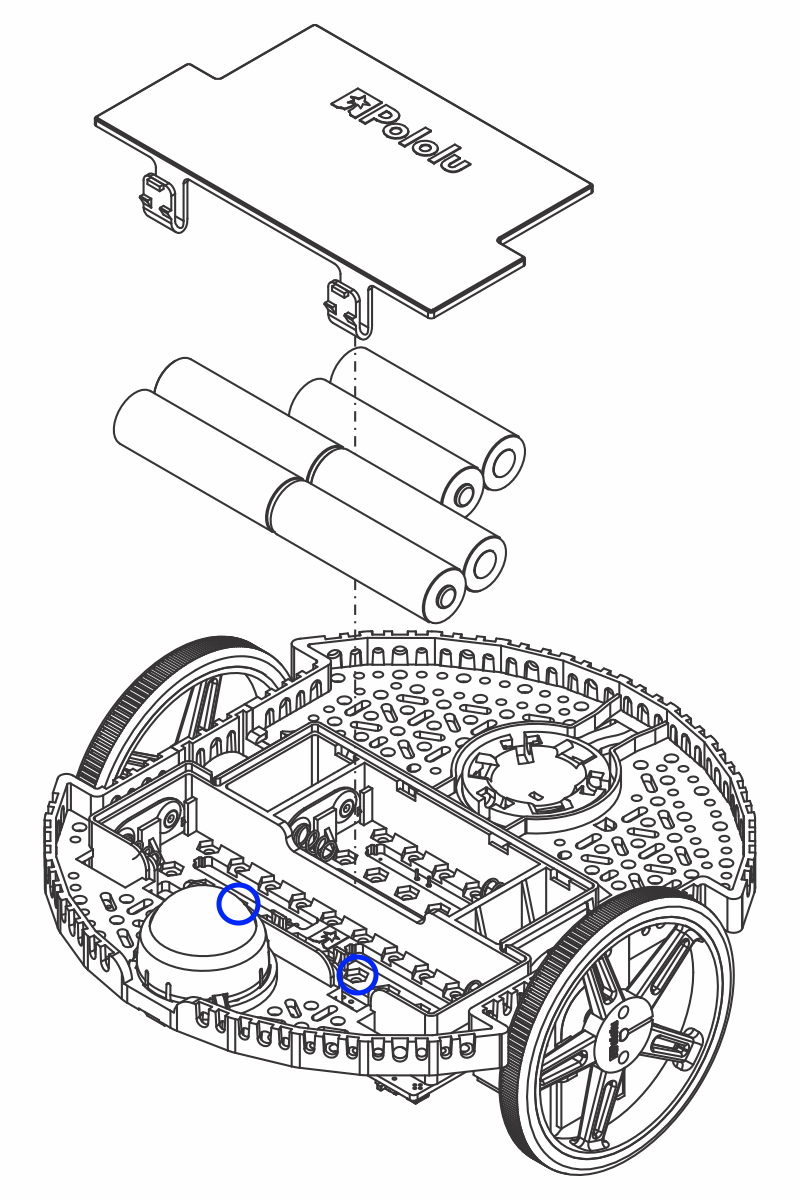

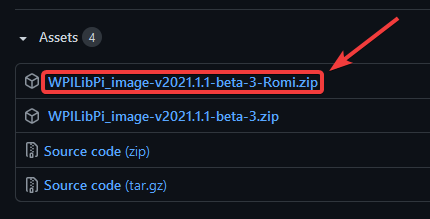

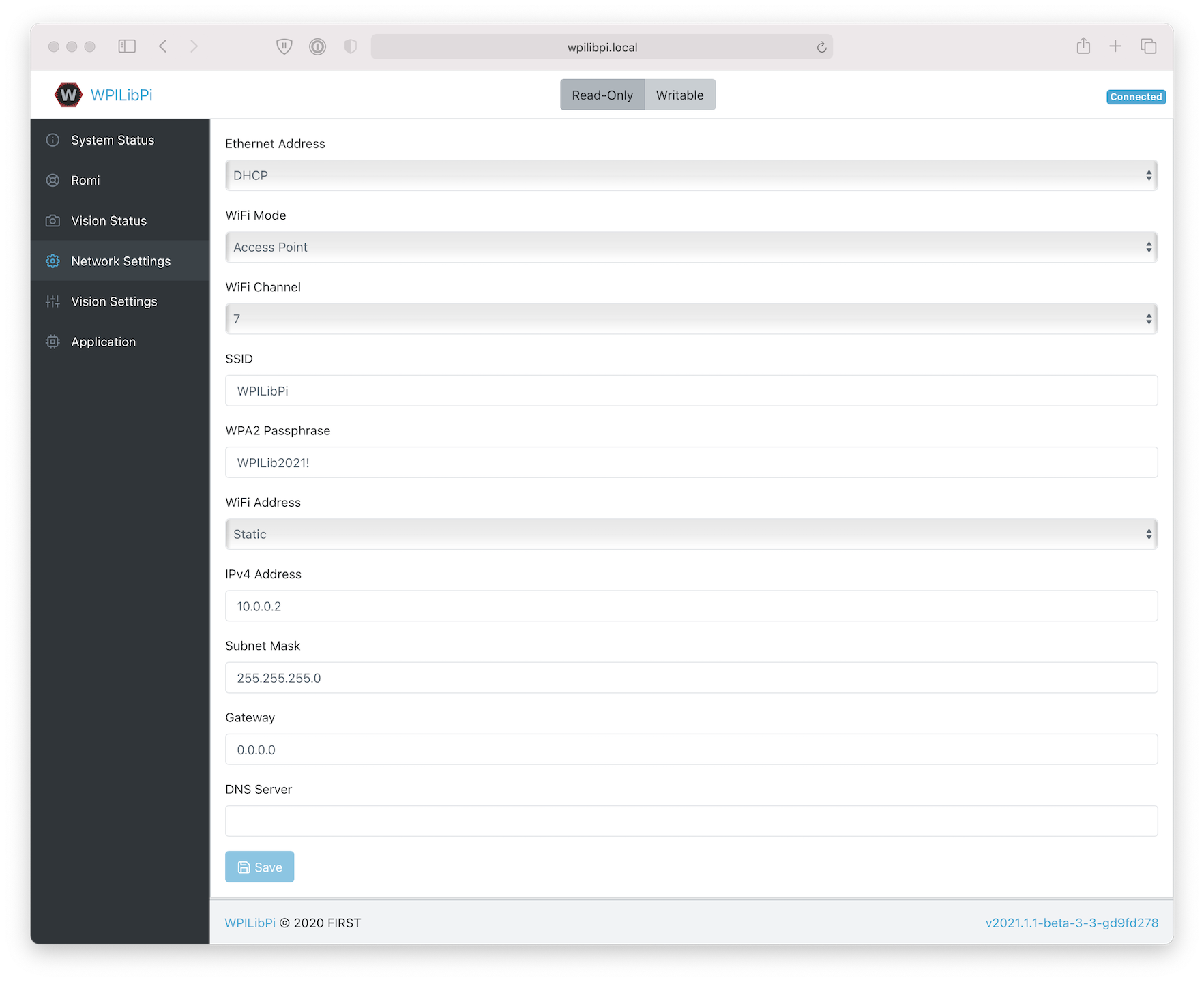

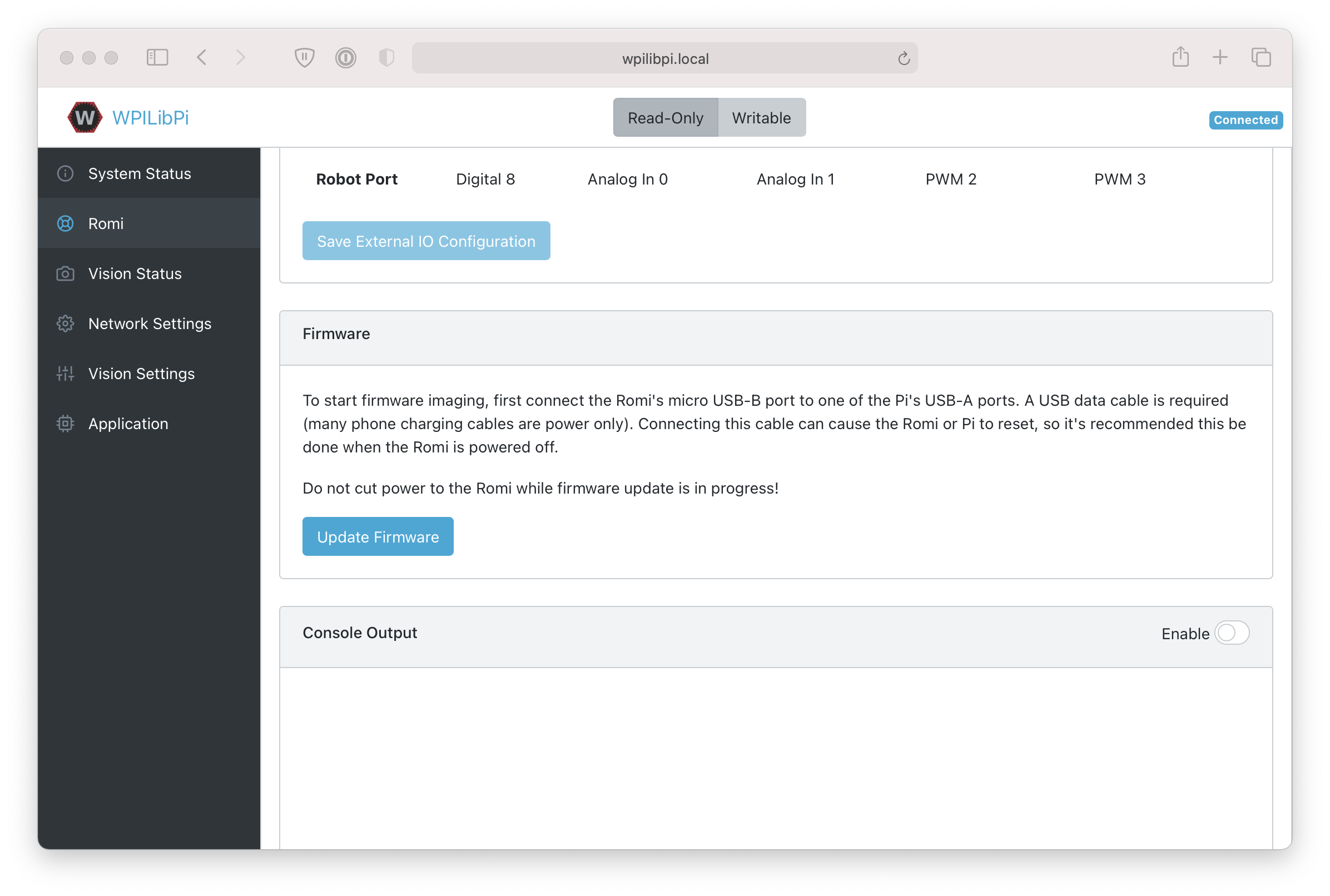

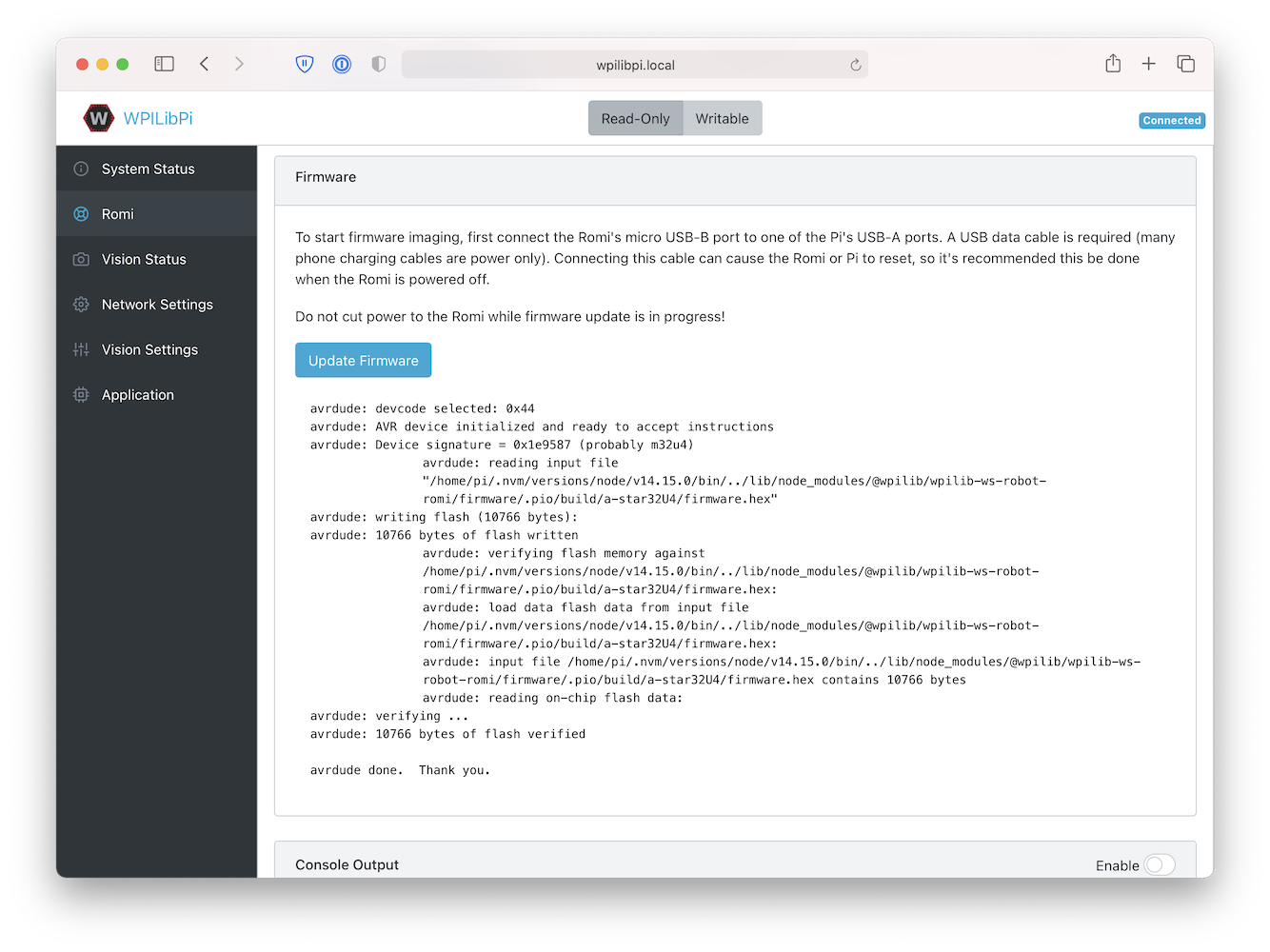

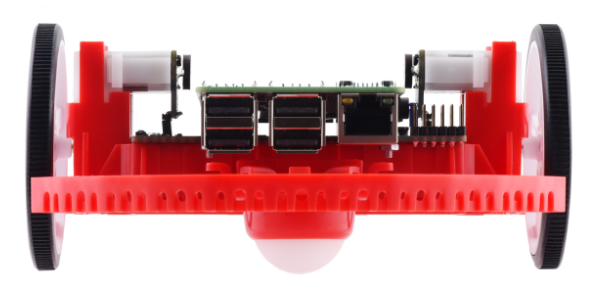

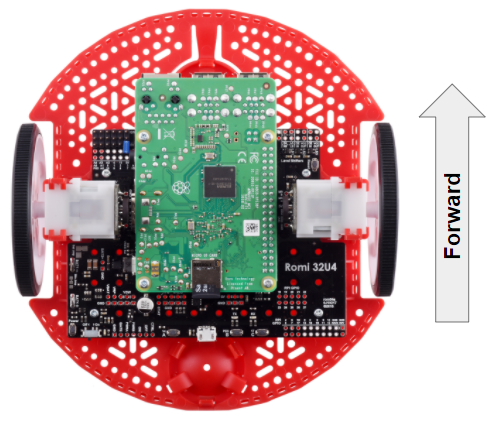

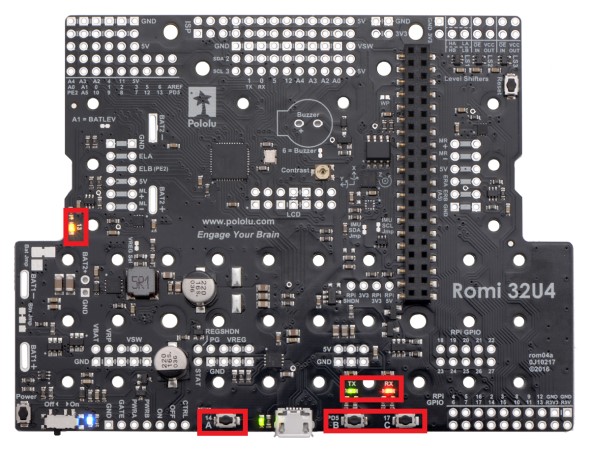

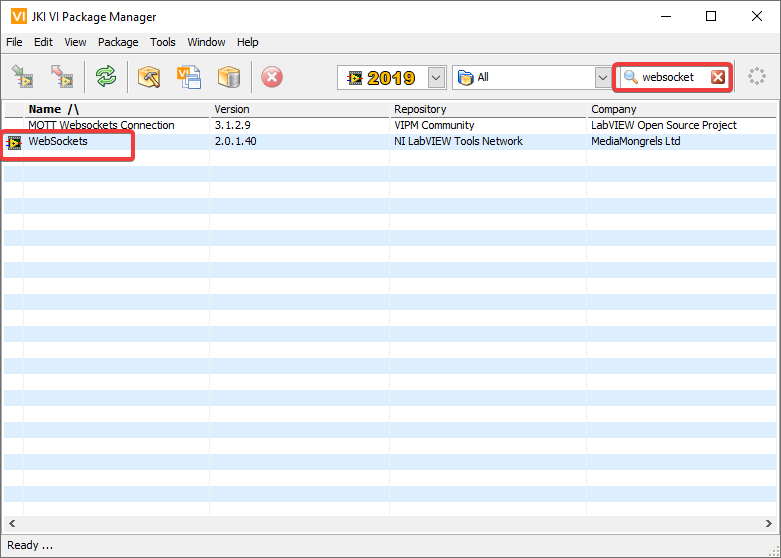

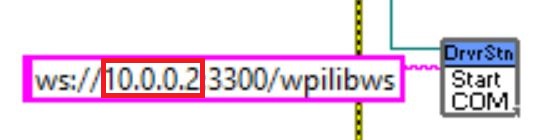

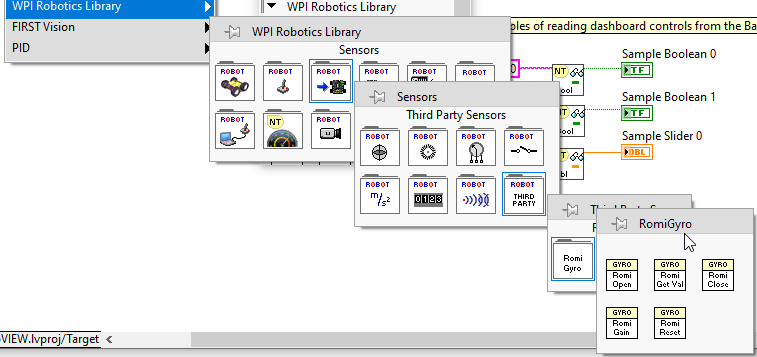

Romi Robot

The Romi Robot is a low-cost, Raspberry Pi-based platform for practicing WPILib programming.

API Documentation

Java and C++ class documentation.

Software Tools

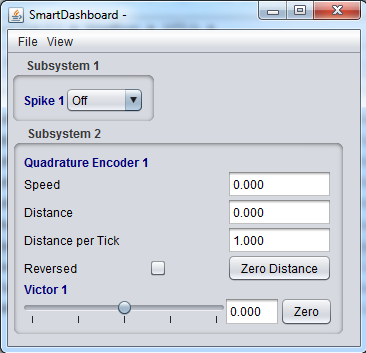

Essential tooling such as FRC Driver Station, Dashboards, roboRIO Imaging Tool and more.

Example Projects

This section showcases the available example projects that teams can reference in VS Code.

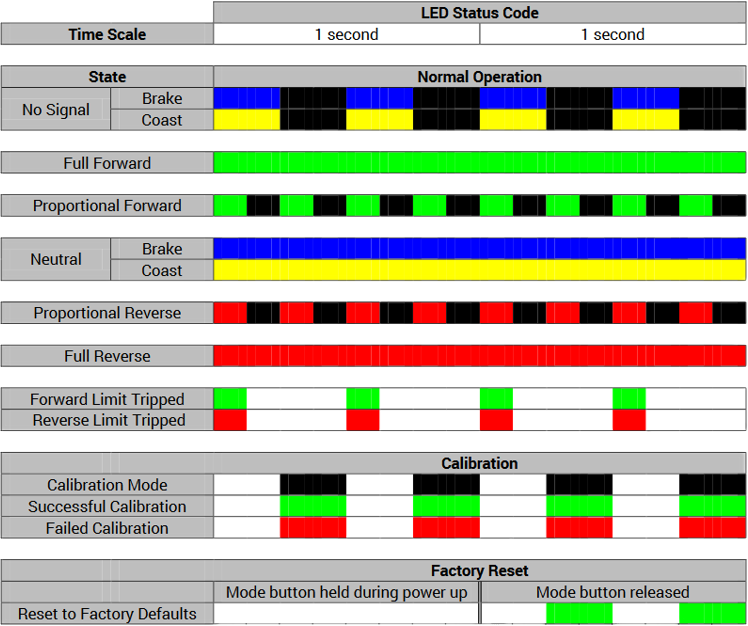

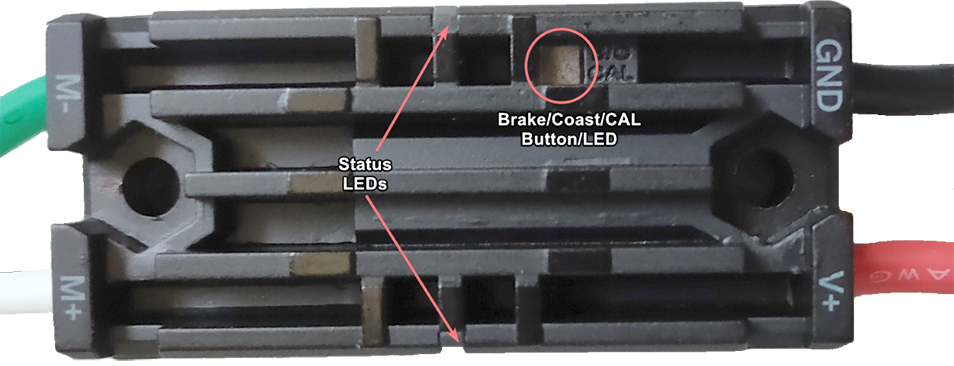

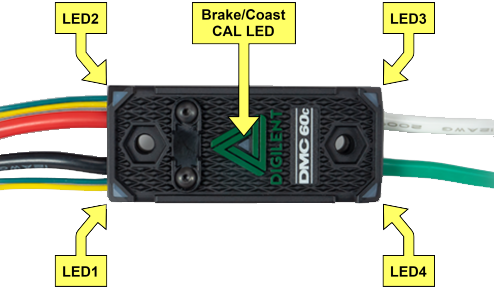

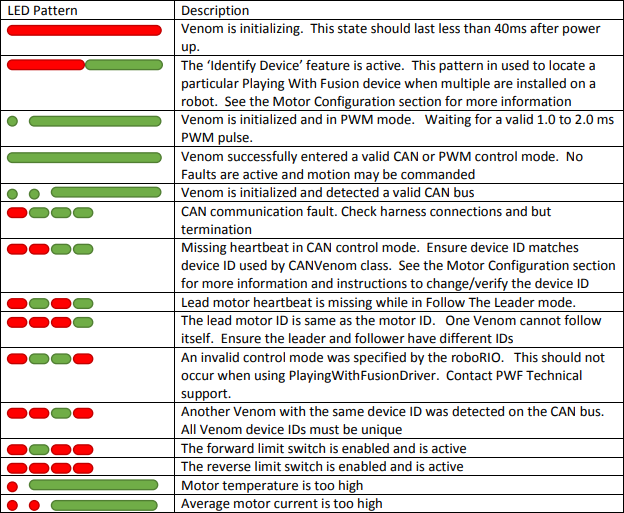

Status Light Quick Reference

Quick reference guide for the status lights on a variety of FRC hardware.

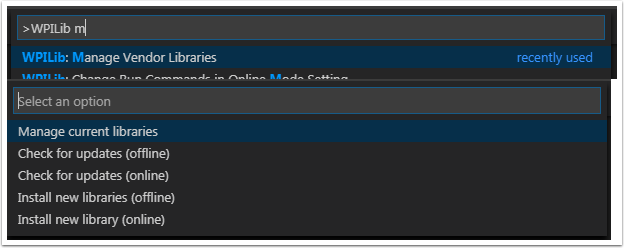

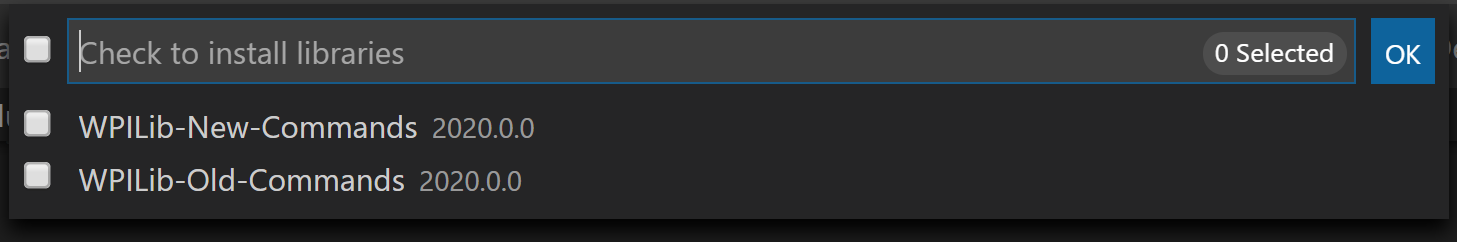

3rd Party Libraries

Tutorial on adding 3rd party libraries such as CTRE and REV to your robot project.

Introduction

Welcome to the official documentation home for the FIRST® Robotics Competition Control System and WPILib software packages. This site is the primary resource documenting the use of the FRC® Control System (including wiring, configuration and software) as well as the WPILib libraries and tools.

New to Programming?

These pages cover the specifics of the WPILib libraries and the FRC Control System and do not describe the basics of using the supported programming languages. If you would like resources on learning the supported programming languages, check out the recommendations below:

Note

You can continue with this Zero-to-Robot section to get a functioning basic robot without knowledge of the programming language. To go beyond that, you will need to be familiar with the language you choose to program in.

Java

Head First Java 2nd Edition is a very beginner-friendly introduction to programming in Java (ISBN-10: 0596009208).

C++

Programming: Principles and Practice Using C++ 2nd Edition is an introduction to C++ by the creator of the language himself (ISBN-10: 0321992784).

C++ Primer Plus 6th Edition (ISBN-10: 0321776402).

LabVIEW

Zero to Robot

The remaining pages in this tutorial are designed to be completed in order to go from zero to a working basic robot. The documents will walk you through wiring your robot, installation of all needed software, configuration of hardware, and loading a basic example program that should allow your robot to operate. When you complete a page, simply click Next to navigate to the next page and continue with the process. When you’re done, you can click Next to continue to an overview of WPILib in C++/Java or jump back to the home page using the logo at the top left to explore the rest of the content.

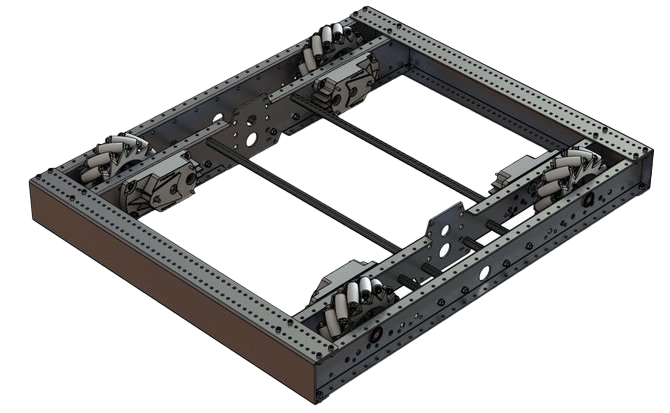

Step 1: Building your Robot

An overview of the available control system hardware can be found here.

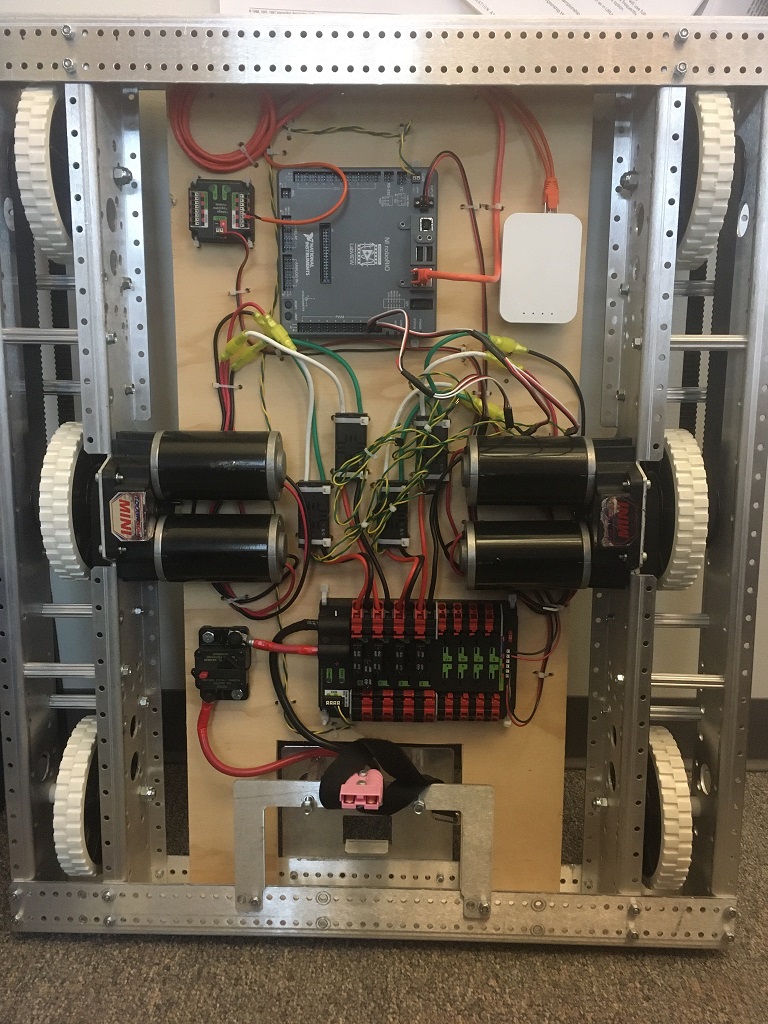

How to Wire an FRC Robot

Note

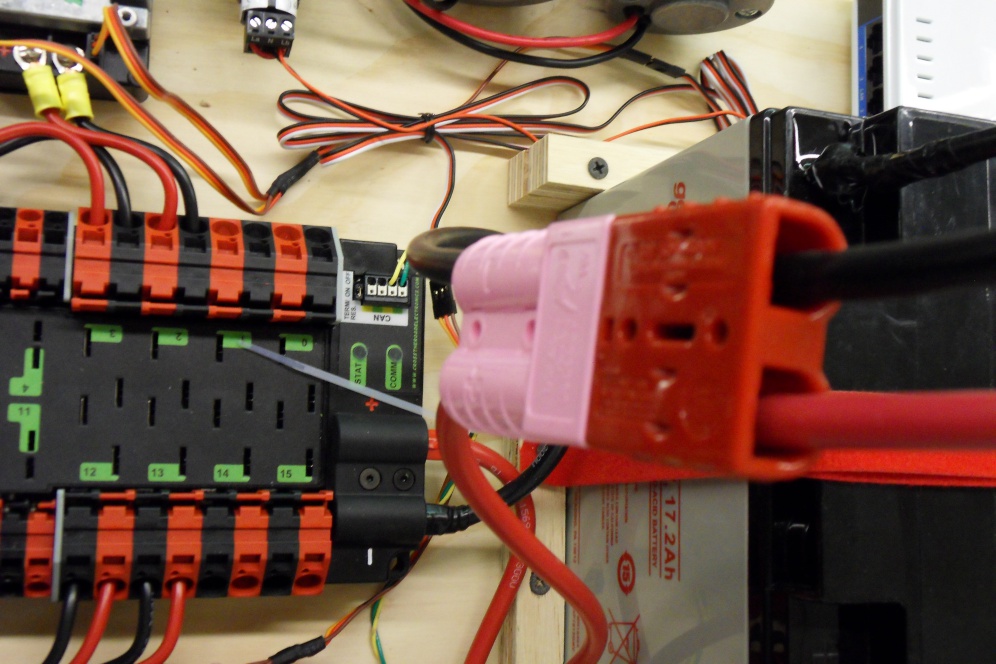

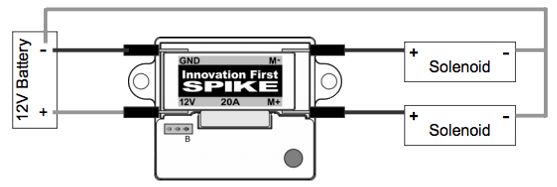

This document details the wiring of a basic electronics board for bench-top testing.

Some images shown in this section reflect the setup for a Robot Control System using Victor SPX Motor Controllers. Wiring diagram and layout should be similar for other motor controllers. Where appropriate, two sets of images are provided to show connections using controllers with and without integrated wires.

Gather Materials

Locate the following control system components and tools:

Kit Materials:

Power Distribution Panel (PDP)

roboRIO

Pneumatics Control Module (PCM)

Voltage Regulator Module (VRM)

OpenMesh radio (with power cable and Ethernet cable)

Robot Signal Light (RSL)

4x SPARK MAX or other motor controllers

2x PWM y-cables

120A Circuit breaker

4x 40A Circuit breaker

6 AWG (16 \(mm^2\)) Red wire

10 AWG (6 \(mm^2\)) Red/Black wire

18 AWG (1 \(mm^2\)) Red/Black wire

22 AWG (0.5 \(mm^2\)) Yellow/Green twisted CAN cable

16x 10-12 AWG (4 - 6 \(mm^2\)) (Yellow) ring terminals (8x quick disconnect pairs if using integrated wire controllers)

2x Anderson SB50 battery connectors

6 AWG (16 \(mm^2\)) Terminal lugs

12V Battery

Red/Black Electrical tape

Dual Lock material or fasteners

Zip ties

1/4” or 1/2” (6-12 mm) plywood
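The metric sizes in parentheses are common metric wire sizes close to each AWG gauge rather than exact conversions. As a hedged illustration of where the numbers come from, the sketch below applies the standard AWG diameter definition, d = 0.127 mm * 92^((36 - n) / 39), and the circle area pi * d^2 / 4. The class name `AwgToMetric` is hypothetical, and the formula assumes a solid round conductor.

```java
/**
 * Hypothetical helper: converts American Wire Gauge (AWG) sizes to
 * conductor cross-sectional area, assuming a solid round conductor.
 * Uses the standard AWG definition d = 0.127 mm * 92^((36 - n) / 39).
 */
final class AwgToMetric {
    private AwgToMetric() {}

    /** Diameter in millimetres of a solid conductor of the given gauge. */
    static double diameterMm(int awg) {
        return 0.127 * Math.pow(92.0, (36.0 - awg) / 39.0);
    }

    /** Cross-sectional area in square millimetres (pi * d^2 / 4). */
    static double areaMm2(int awg) {
        double d = diameterMm(awg);
        return Math.PI * d * d / 4.0;
    }

    public static void main(String[] args) {
        // Gauges that appear in the Kit Materials list.
        for (int awg : new int[] {6, 10, 12, 18, 22}) {
            System.out.printf("%2d AWG -> %.2f mm^2%n", awg, areaMm2(awg));
        }
    }
}
```

This gives roughly 13.3, 5.26, 3.31, 0.82 and 0.33 mm² for 6, 10, 12, 18 and 22 AWG, which sit near the rounded metric sizes used in the list.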

Tools Required:

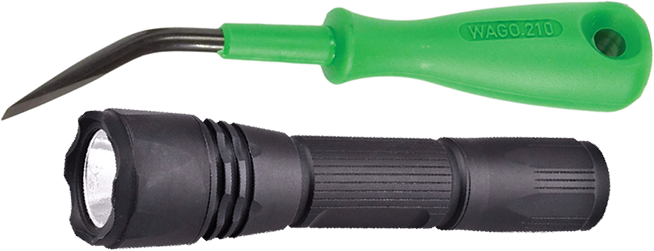

Wago Tool or small flat-head screwdriver

Very small flat-head screwdriver (eyeglass repair size)

Phillips head screwdriver

5 mm Hex key (3/16” may work if metric is unavailable)

1/16” Hex key

Wire cutters, strippers, and crimpers

7/16” (11 mm may work if imperial is unavailable) box end wrench or nut driver

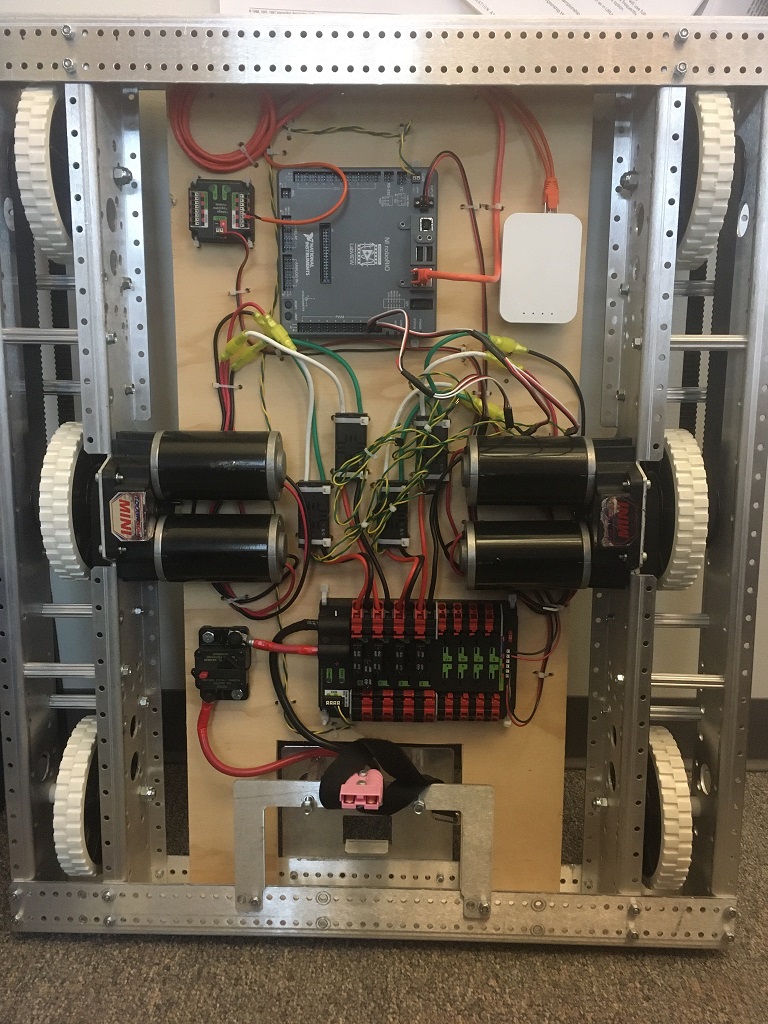

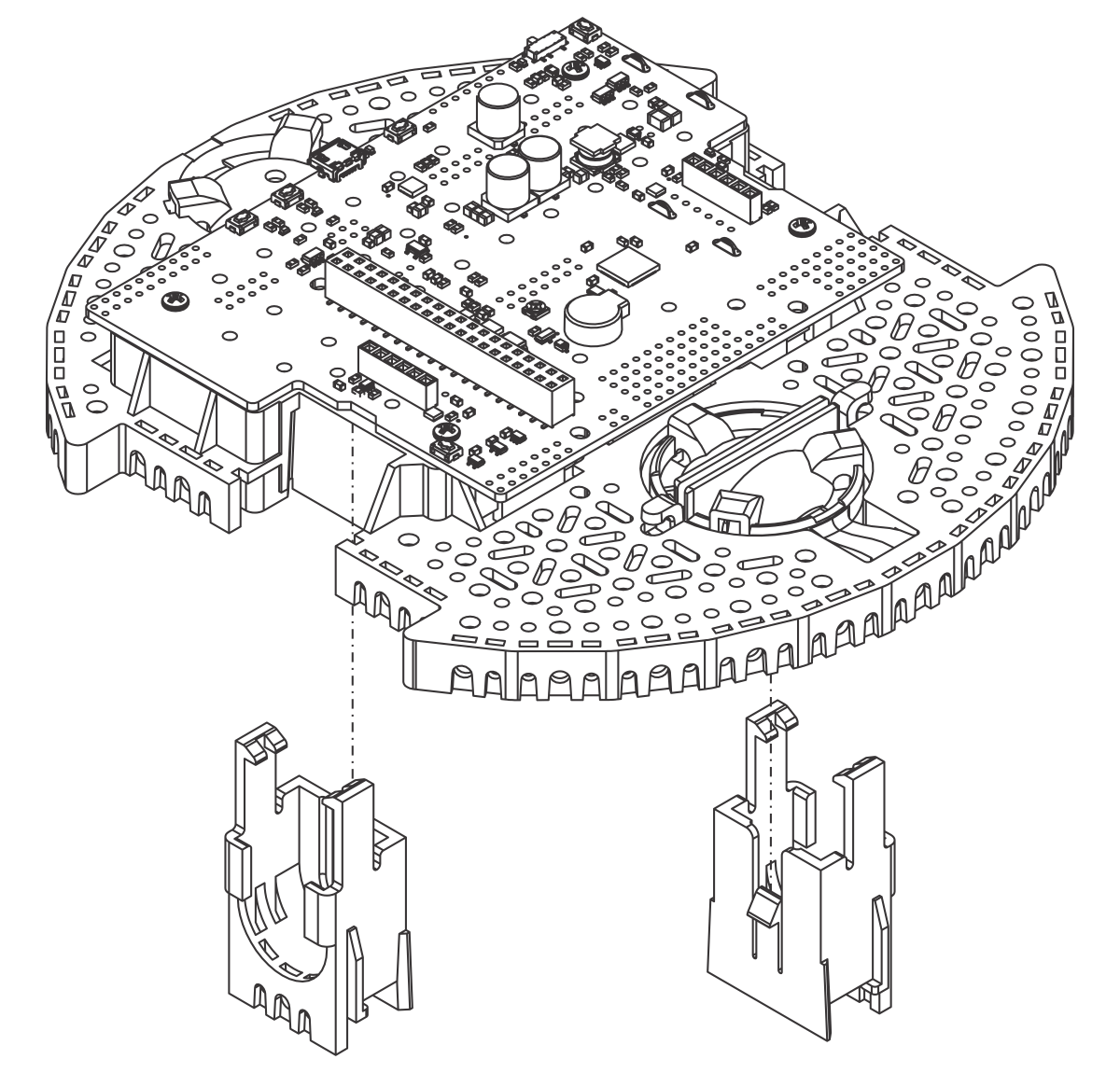

Create the Base for the Control System

For a benchtop test board, cut a piece of 1/4” or 1/2” (6-12 mm) material (wood or plastic) approximately 24” x 16” (60 x 40 cm). For a Robot Quick Build control board, see the supporting documentation for the proper size board for the chosen chassis configuration.

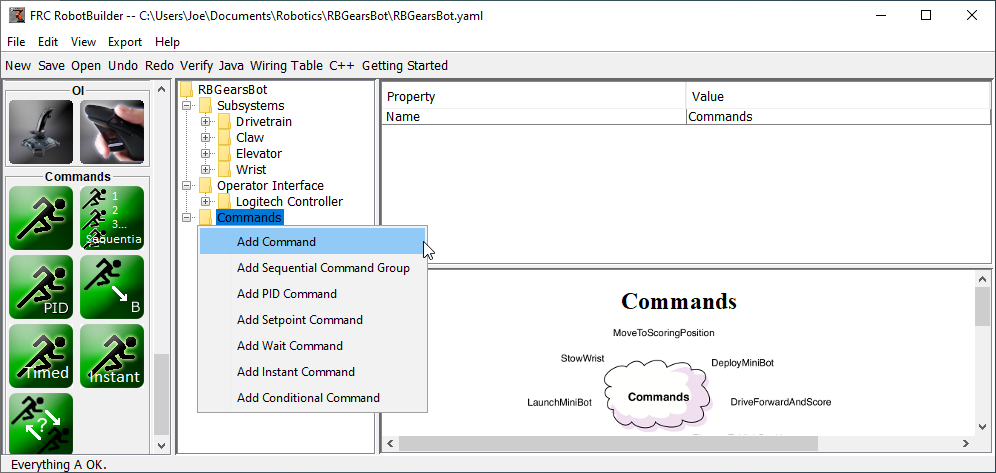

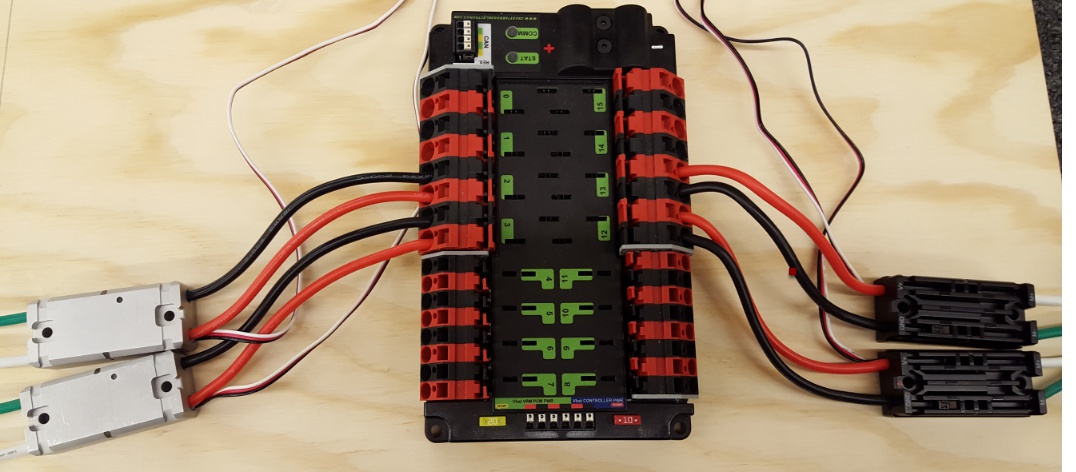

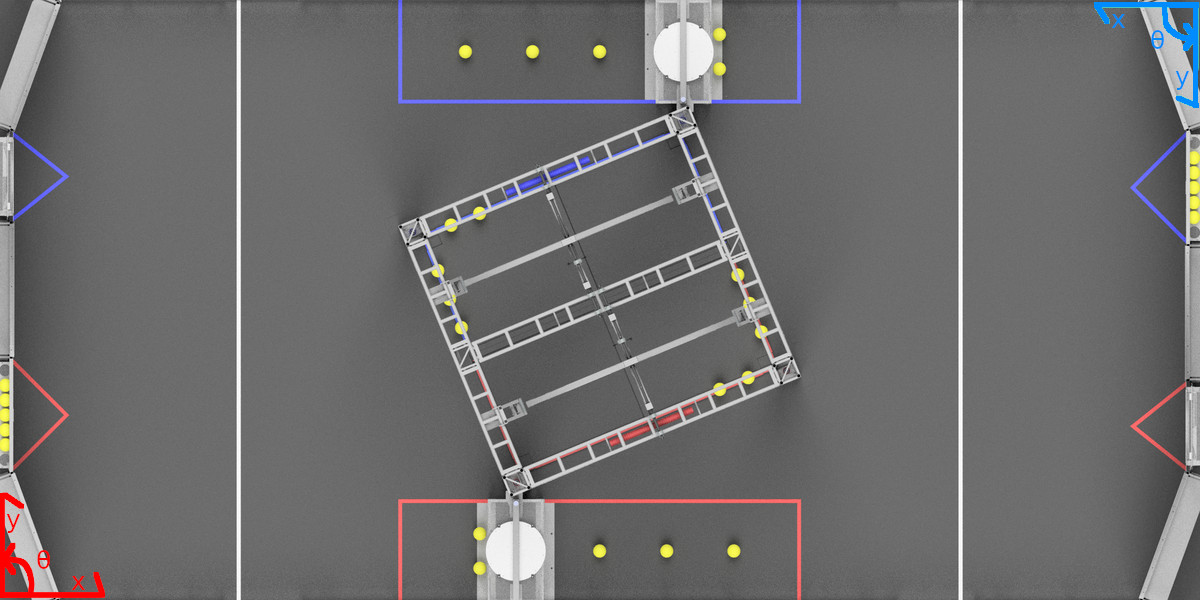

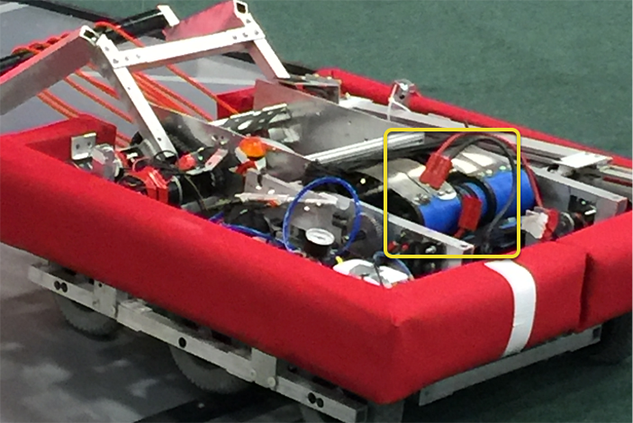

Lay Out the Core Control System Components

Lay out the components on the board. An example layout is shown in the image above.

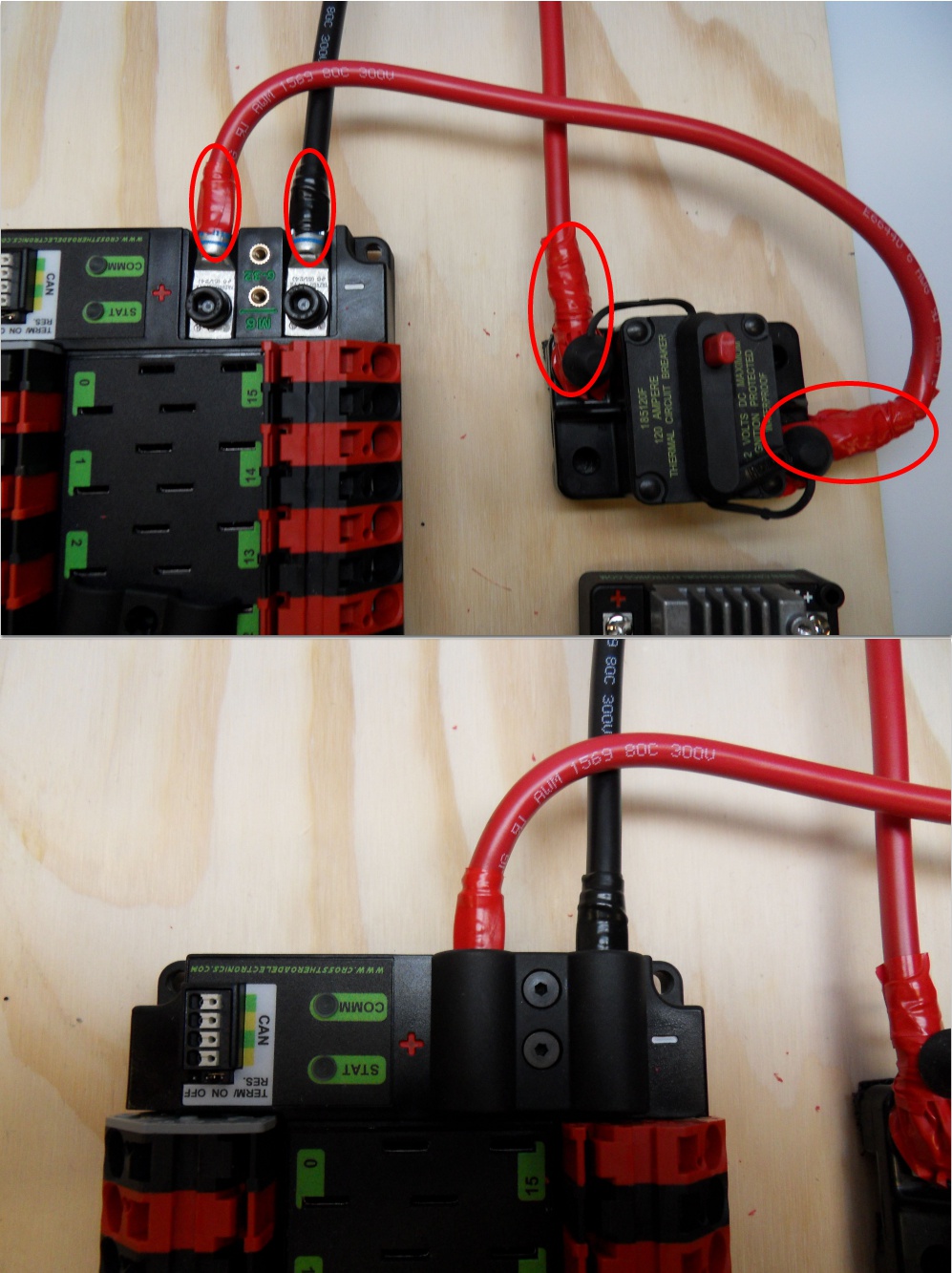

Fasten Components

Using the Dual Lock or hardware, fasten all components to the board. Note that in many FRC games robot-to-robot contact may be substantial and Dual Lock alone is unlikely to stand up as a fastener for many electronic components. Teams may wish to use nut and bolt fasteners or (as shown in the image above) cable ties, with or without Dual Lock to secure devices to the board.

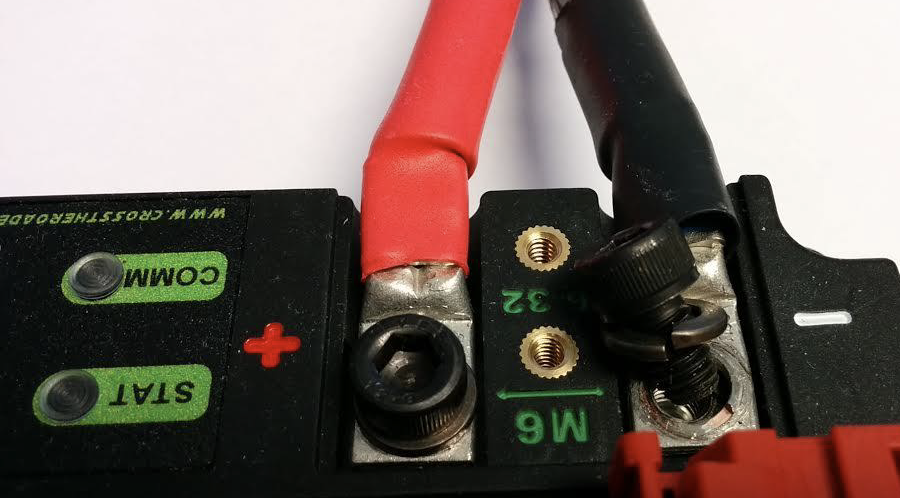

Attach Battery Connector to PDP

Requires: Battery Connector, 6 AWG (16 \(mm^2\)) terminal lugs, 1/16” Allen, 5 mm Allen, 7/16” (11 mm) Box end

Attach terminal lugs to battery connector:

Using a 1/16” Allen wrench, remove the two screws securing the PDP terminal cover.

Using a 5 mm Allen wrench (3/16”), remove the negative (-) bolt and washer from the PDP and fasten the negative terminal of the battery connector.

Using a 7/16” (11 mm) box end wrench, remove the nut on the “Batt” side of the main breaker and secure the positive terminal of the battery connector.

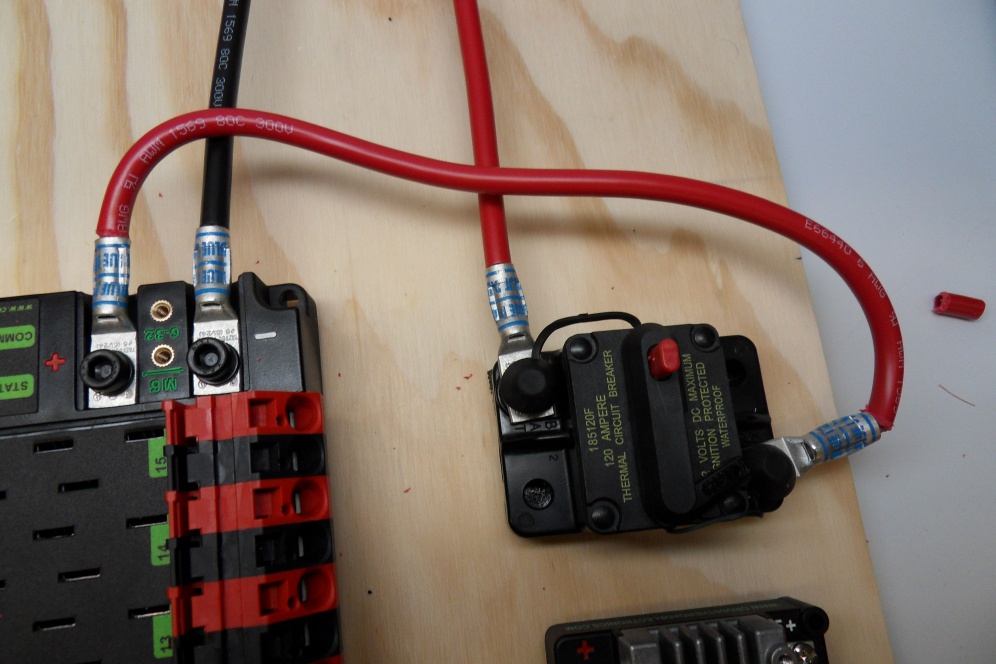

Wire Breaker to PDP

Requires: 6 AWG (16 \(mm^2\)) red wire, 2x 6 AWG (16 \(mm^2\)) terminal lugs, 5 mm Allen, 7/16” (11 mm) box end

Secure one terminal lug to the end of the 6 AWG (16 \(mm^2\)) red wire. Using the 7/16” (11 mm) box end, remove the nut from the “AUX” side of the 120A main breaker and place the terminal over the stud. Loosely secure the nut (you may wish to remove it shortly to cut, strip, and crimp the other end of the wire). Measure out the length of wire required to reach the positive terminal of the PDP.

Cut, strip, and crimp the terminal to the 2nd end of the red 6 AWG (16 \(mm^2\)) wire.

Using the 7/16” (11 mm) box end, secure the wire to the “AUX” side of the 120A main breaker.

Using the 5 mm Allen wrench, secure the other end to the PDP positive terminal.

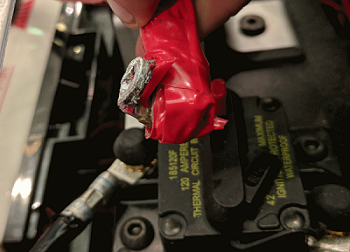

Insulate PDP connections

Requires: 1/16” Allen, Electrical tape

Using electrical tape, insulate the two connections to the 120A breaker. Also insulate any part of the PDP terminals which will be exposed when the cover is replaced. One method for insulating the main breaker connections is to wrap the stud and nut first, then use the tape wrapped around the terminal and wire to secure the tape.

Using the 1/16” Allen wrench, replace the PDP terminal cover.

Wago connectors

The next step will involve using the Wago connectors on the PDP. To use the Wago connectors, insert a small flat-blade screwdriver into the rectangular hole at a shallow angle, then angle the screwdriver upwards as you continue to press in to actuate the lever, opening the terminal. Two sizes of Wago connector are found on the PDP:

Small Wago connector: Accepts 10 - 24 AWG (0.25 - 6 \(mm^2\)), strip 11-12 mm (~7/16”)

Large Wago connector: Accepts 6 - 12 AWG (4 - 16 \(mm^2\)), strip 12-13 mm (~1/2”)

To maximize pullout force and minimize connection resistance, wires should not be tinned (and ideally not twisted) before inserting into the Wago connector.

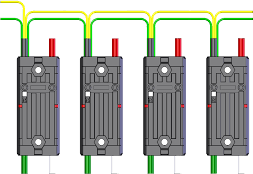

Motor Controller Power

Requires: Wire Stripper, Small Flat Screwdriver, 10 or 12 AWG (4 - 6 \(mm^2\)) wire, 10 or 12 AWG (4 - 6 \(mm^2\)) fork/ring terminals (terminal controllers only), wire crimper

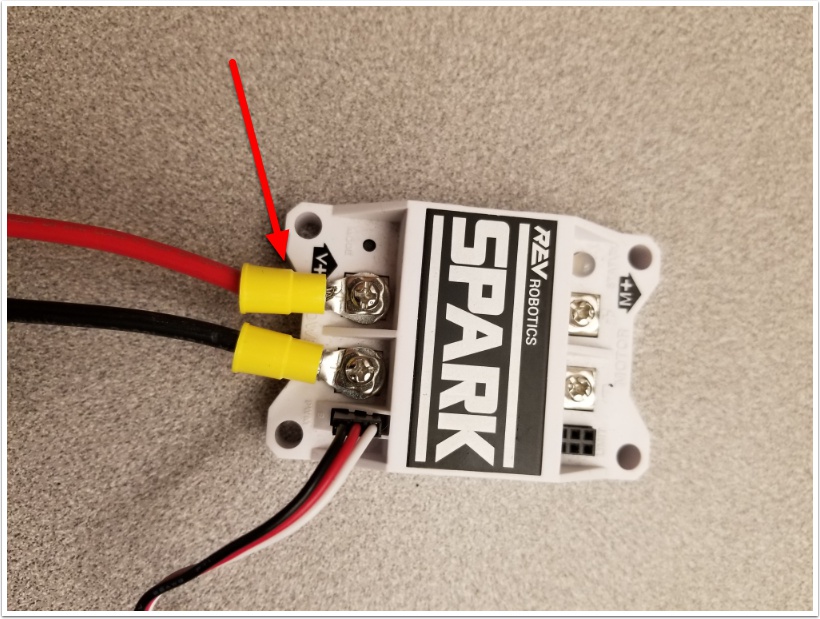

For SPARK MAX or other wire integrated motor controllers (top image):

Cut and strip the red and black power input wires, then insert into one of the 40A (larger) Wago terminal pairs.

For terminal motor controllers (bottom image):

Cut red and black wire to the appropriate length to reach from one of the 40A (larger) Wago terminal pairs to the input side of the motor controller (with a little extra for the length that will be inserted into the terminals on each end).

Strip one end of each of the wires, then insert into the Wago terminals.

Strip the other end of each wire, and crimp on a ring or fork terminal

Attach the terminal to the motor controller input terminals (red to +, black to -)

Weidmuller Connectors

Warning

The correct strip length is ~5/16” (~8 mm), not the 5/8” (~16 mm) mentioned in the video.

A number of the CAN and power connectors in the system use a Weidmuller LSF series wire-to-board connector. There are a few things to keep in mind when using this connector for best results:

Wire should be 16 AWG (1.5 \(mm^2\)) to 24 AWG (0.25 \(mm^2\)) (consult rules to verify required gauge for power wiring)

Wire ends should be stripped approximately 5/16” (~8 mm)

To insert or remove the wire, press down on the corresponding “button” to open the terminal

After making the connection check to be sure that it is clean and secure:

Verify that there are no “whiskers” outside the connector that may cause a short circuit

Tug on the wire to verify that it is seated fully. If the wire comes out and is the correct gauge, it needs to be inserted further and/or stripped back further.
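The gauge limits and strip lengths for the Wago and Weidmuller connectors can be captured in a small lookup table. This is a minimal sketch: the `Connector` enum and its constant names are hypothetical, the values are copied from the text above, and it only checks what physically fits each connector, not which gauge the rules require for a given circuit.

```java
/** Hypothetical lookup of the connector limits described above. */
enum Connector {
    // Lower AWG numbers are thicker wire, so each range runs thickest..thinnest.
    WAGO_SMALL(10, 24, "11-12 mm (~7/16 in)"),
    WAGO_LARGE(6, 12, "12-13 mm (~1/2 in)"),
    WEIDMULLER_LSF(16, 24, "~8 mm (~5/16 in)");

    final int thickestAwg;
    final int thinnestAwg;
    final String stripLength;

    Connector(int thickestAwg, int thinnestAwg, String stripLength) {
        this.thickestAwg = thickestAwg;
        this.thinnestAwg = thinnestAwg;
        this.stripLength = stripLength;
    }

    /** True if a wire of the given AWG falls inside this connector's accepted range. */
    boolean accepts(int awg) {
        return awg >= thickestAwg && awg <= thinnestAwg;
    }

    public static void main(String[] args) {
        int awg = 18; // e.g. the roboRIO and VRM power wire
        for (Connector c : values()) {
            String verdict = c.accepts(awg) ? "fits, strip " + c.stripLength : "does not fit";
            System.out.println(c + ": " + verdict);
        }
    }
}
```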

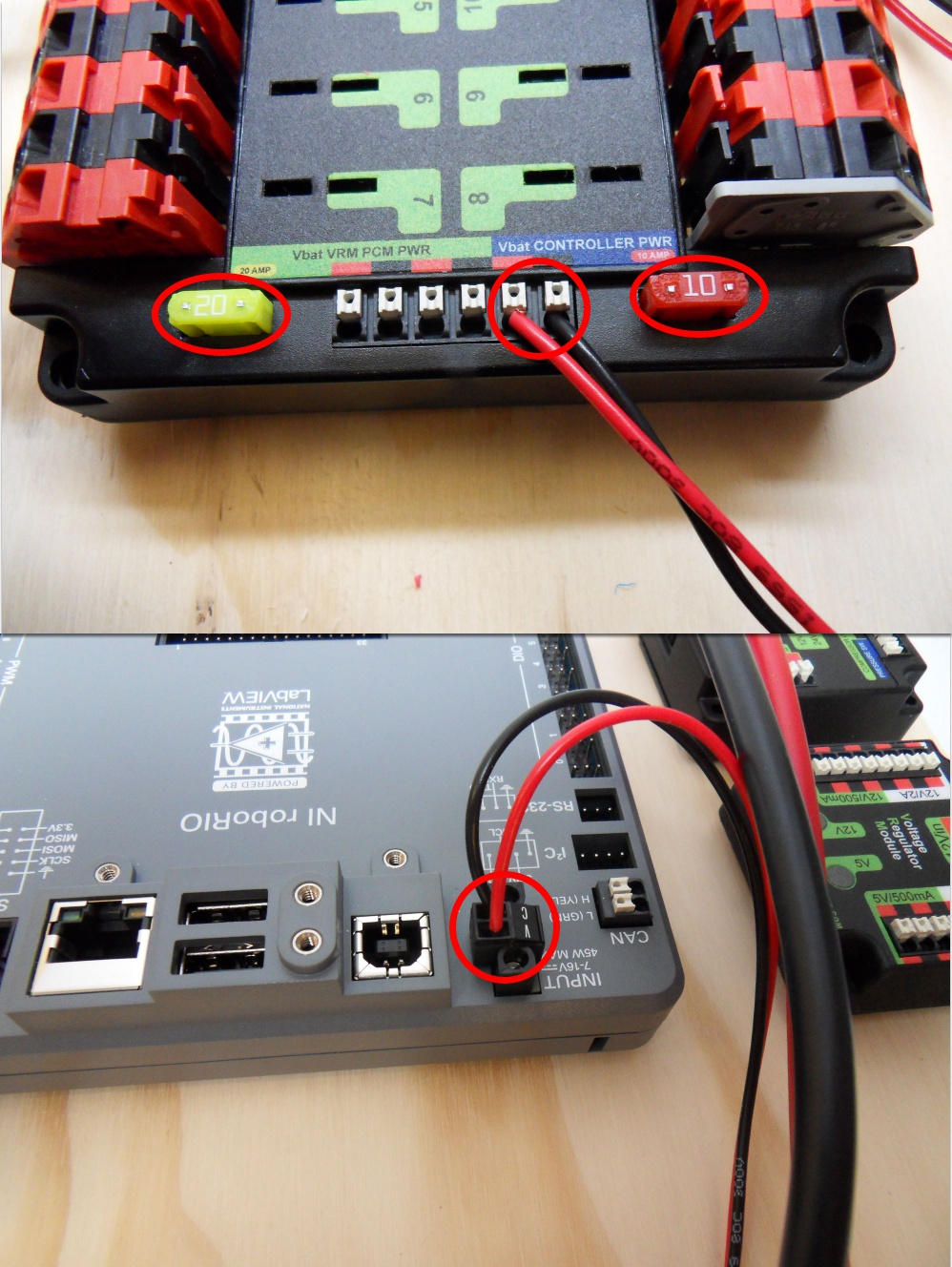

roboRIO Power

Requires: 10A/20A mini fuses, Wire stripper, very small flat screwdriver, 18 AWG (1 \(mm^2\)) Red and Black

Insert the 10A and 20A mini fuses in the PDP in the locations shown on the silk screen (and in the image above).

Strip ~5/16” (~8 mm) on both the red and black 18 AWG (1 \(mm^2\)) wire and connect to the “Vbat Controller PWR” terminals on the PDP.

Measure the required length to reach the power input on the roboRIO. Take care to leave enough length to route the wires around any other components such as the battery and to allow for any strain relief or cable management.

Cut and strip the wire.

Using a very small flat screwdriver connect the wires to the power input connector of the roboRIO (red to V, black to C). Also make sure that the power connector is screwed down securely to the roboRIO.

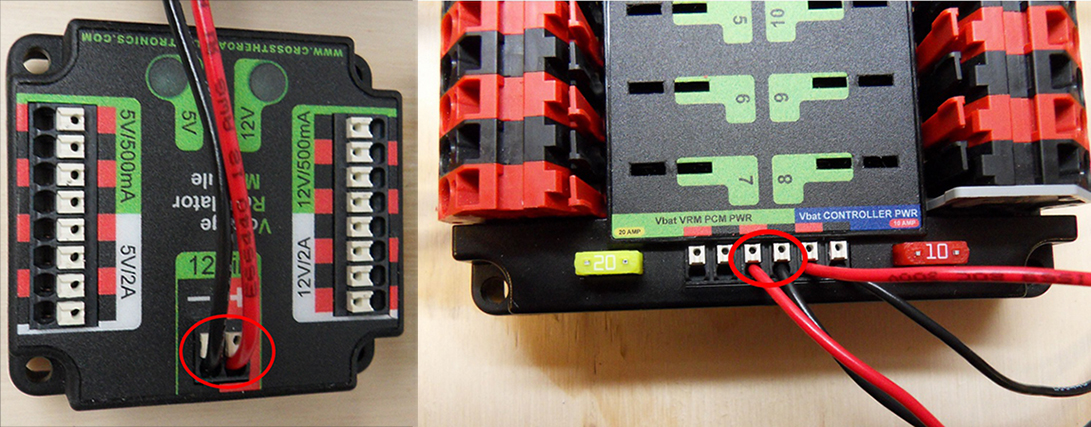

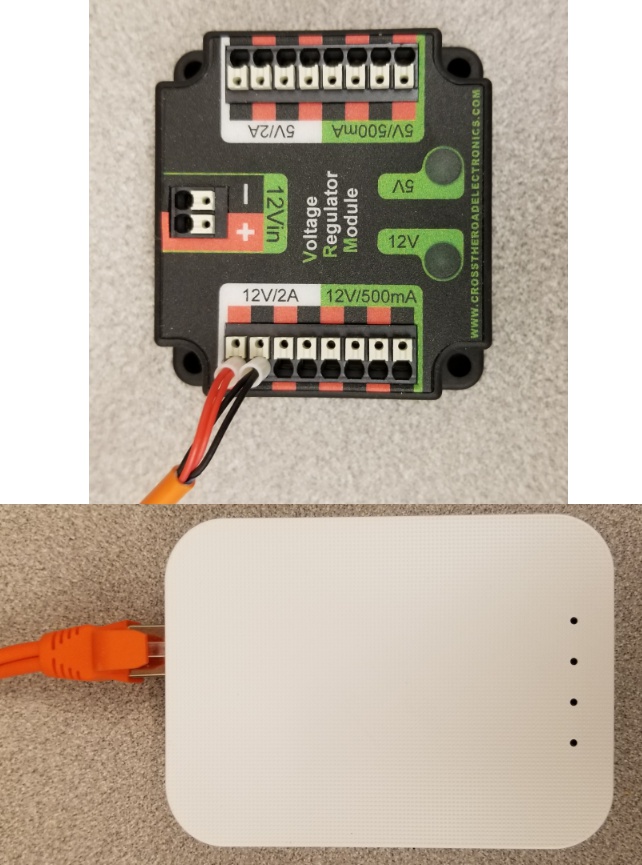

Voltage Regulator Module Power

Requires: Wire stripper, small flat screwdriver (optional), 18 AWG (1 \(mm^2\)) red and black wire

Strip ~5/16” (~8 mm) on the end of the red and black 18 AWG (1 \(mm^2\)) wire.

Connect the wire to one of the two terminal pairs labeled “Vbat VRM PCM PWR” on the PDP.

Measure the length required to reach the “12Vin” terminals on the VRM. Take care to leave enough length to route the wires around any other components such as the battery and to allow for any strain relief or cable management.

Cut and strip ~5/16” (~8 mm) from the end of the wire.

Connect the wire to the VRM 12Vin terminals.

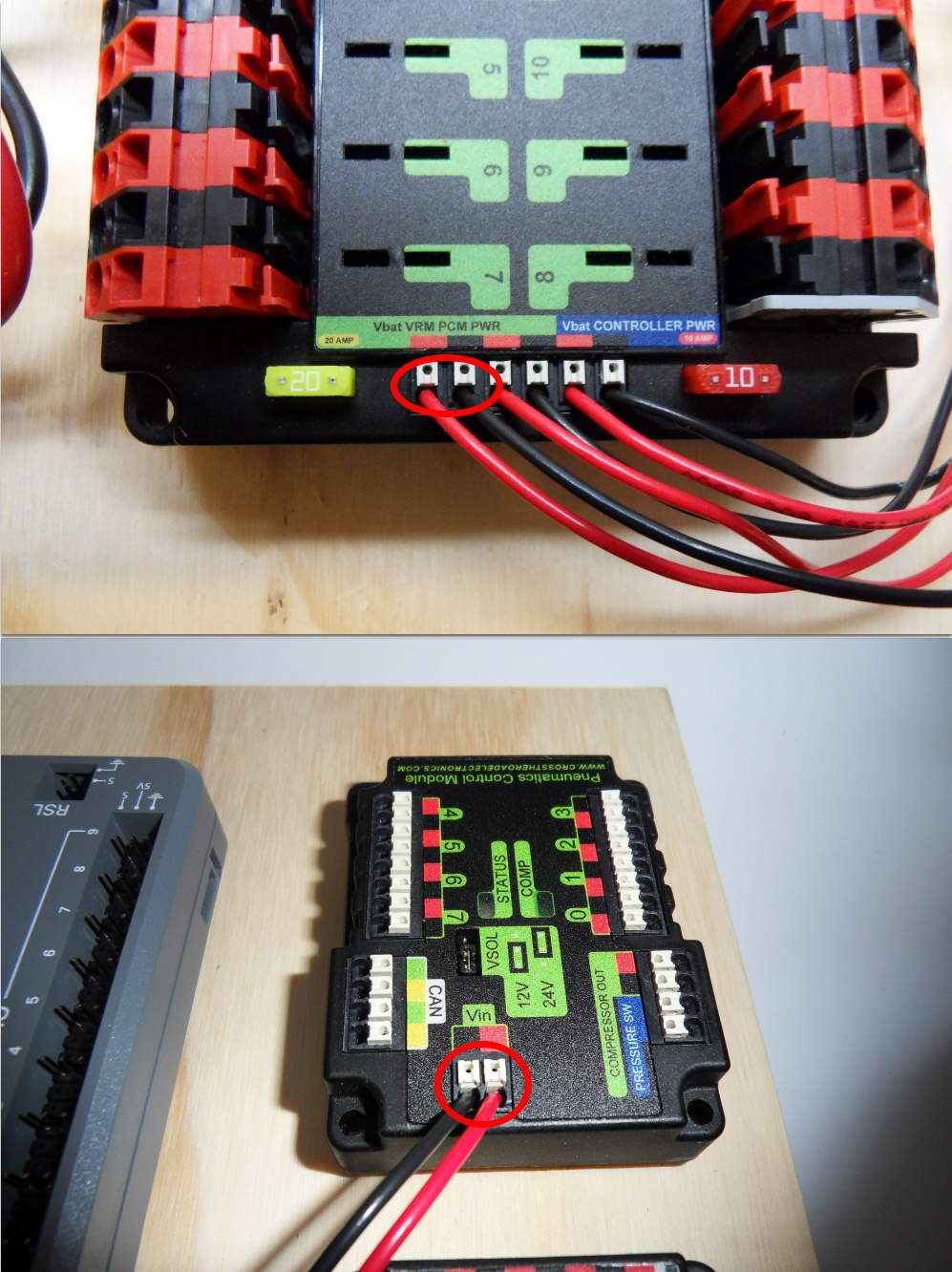

Pneumatics Control Module Power (Optional)

Requires: Wire stripper, small flat screwdriver (optional), 18 AWG (1 \(mm^2\)) red and black wire

Strip ~5/16” (~8 mm) on the end of the red and black 18 AWG (1 \(mm^2\)) wire.

Connect the wire to one of the two terminal pairs labeled “Vbat VRM PCM PWR” on the PDP.

Measure the length required to reach the “Vin” terminals on the PCM. Take care to leave enough length to route the wires around any other components such as the battery and to allow for any strain relief or cable management.

Cut and strip ~5/16” (~8 mm) from the end of the wire.

Connect the wire to the PCM Vin terminals.

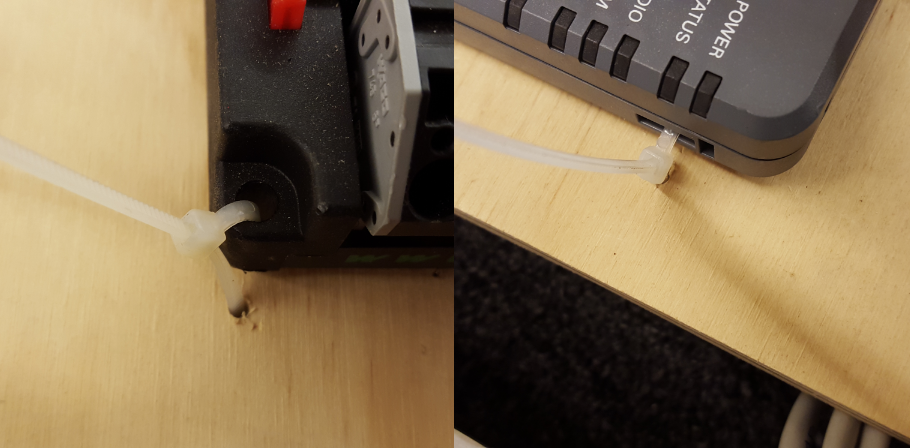

Radio Power and Ethernet

Warning

DO NOT connect the Rev passive POE injector cable directly to the roboRIO. The roboRIO MUST connect to the female end of the cable using an additional Ethernet cable as shown in the next step.

Requires: Small flat screwdriver (optional), Rev radio PoE cable

Insert the ferrules of the passive PoE injector cable into the corresponding colored terminals on the 12V/2A section of the VRM.

Connect the male RJ45 (Ethernet) end of the cable into the Ethernet port on the radio closest to the barrel connector (labeled 18-24v POE).

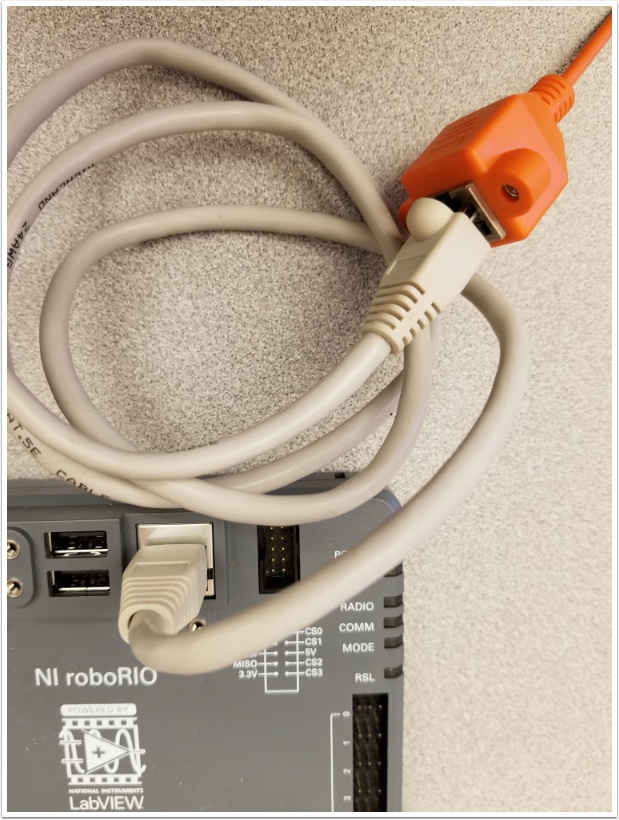

roboRIO to Radio Ethernet

Requires: Ethernet cable

Connect an Ethernet cable from the female RJ45 (Ethernet) port of the Rev Passive POE cable to the RJ45 (Ethernet) port on the roboRIO.

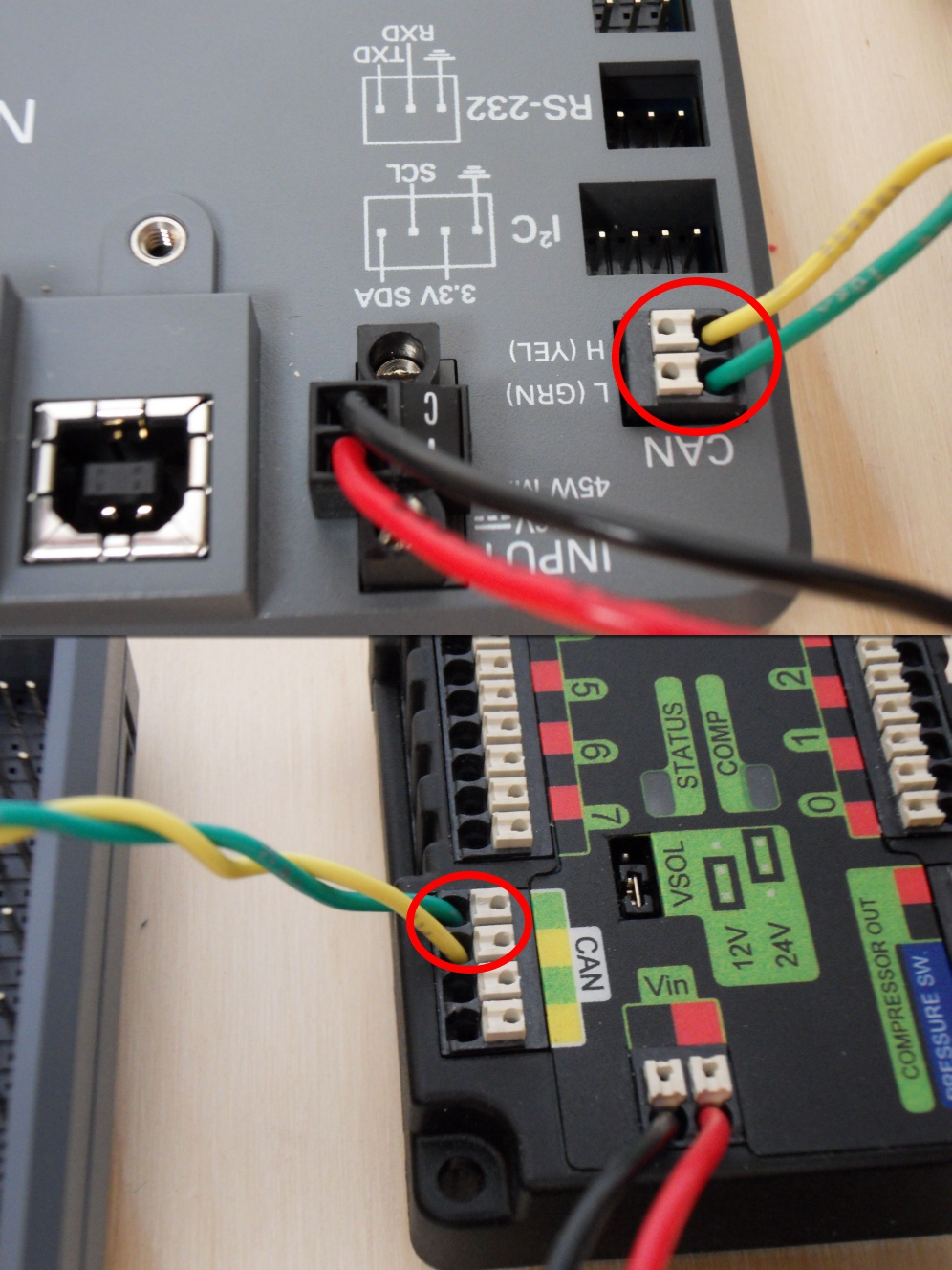

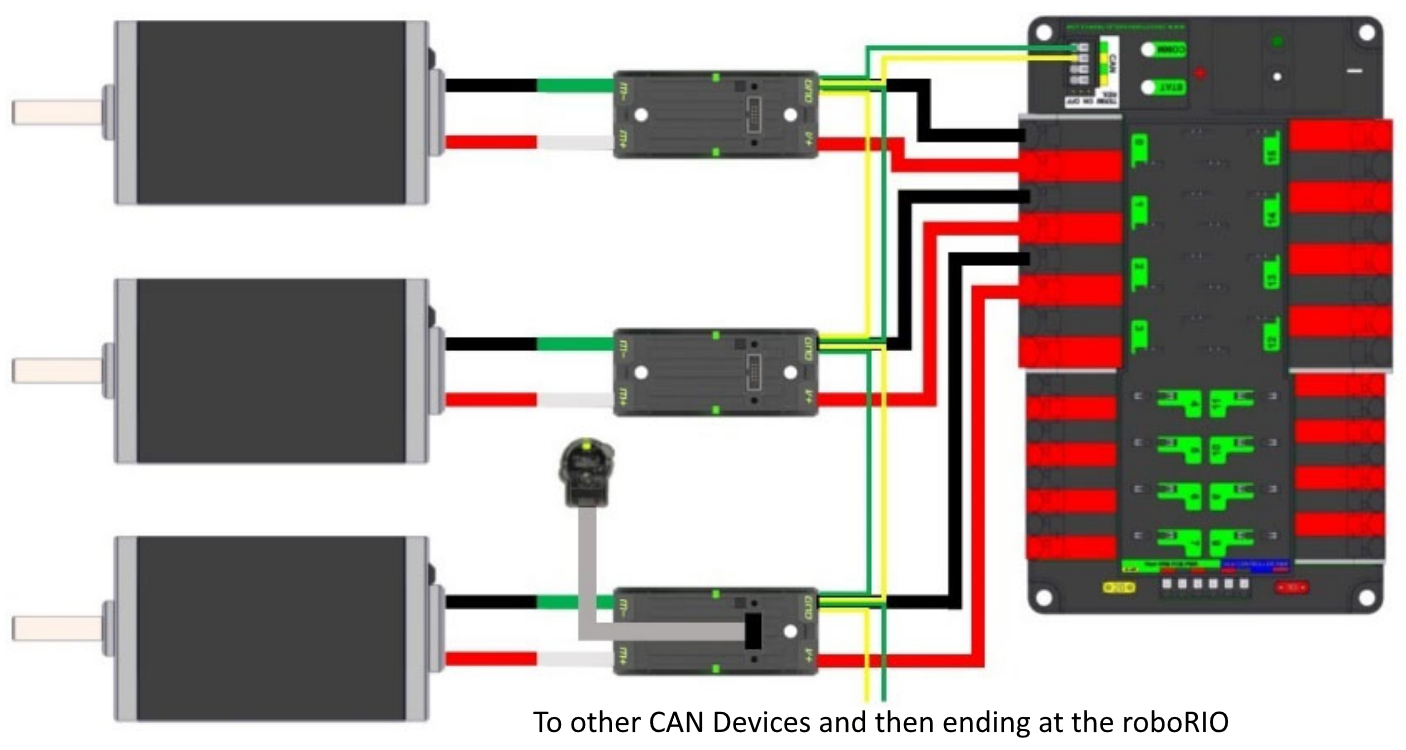

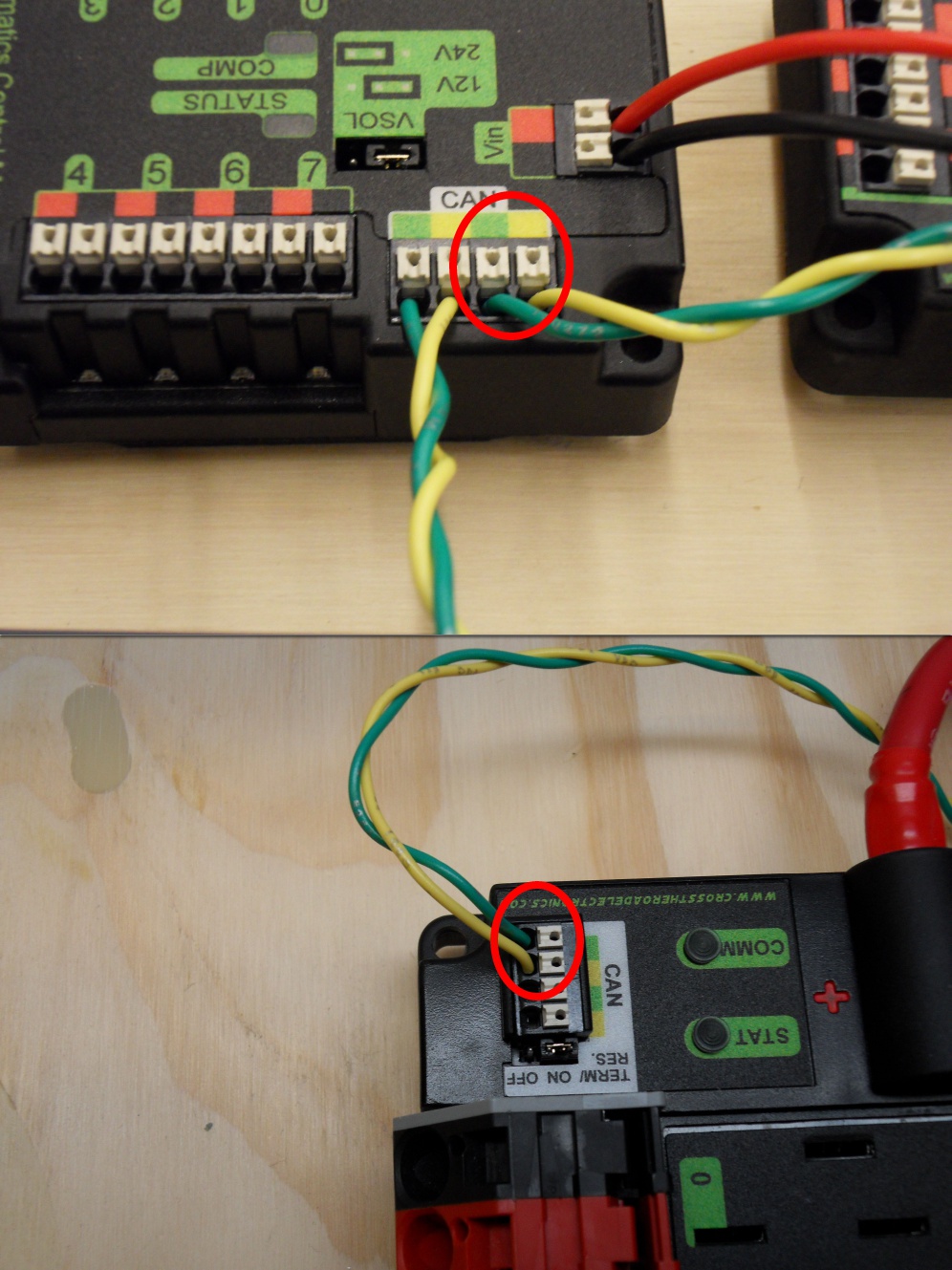

CAN Devices

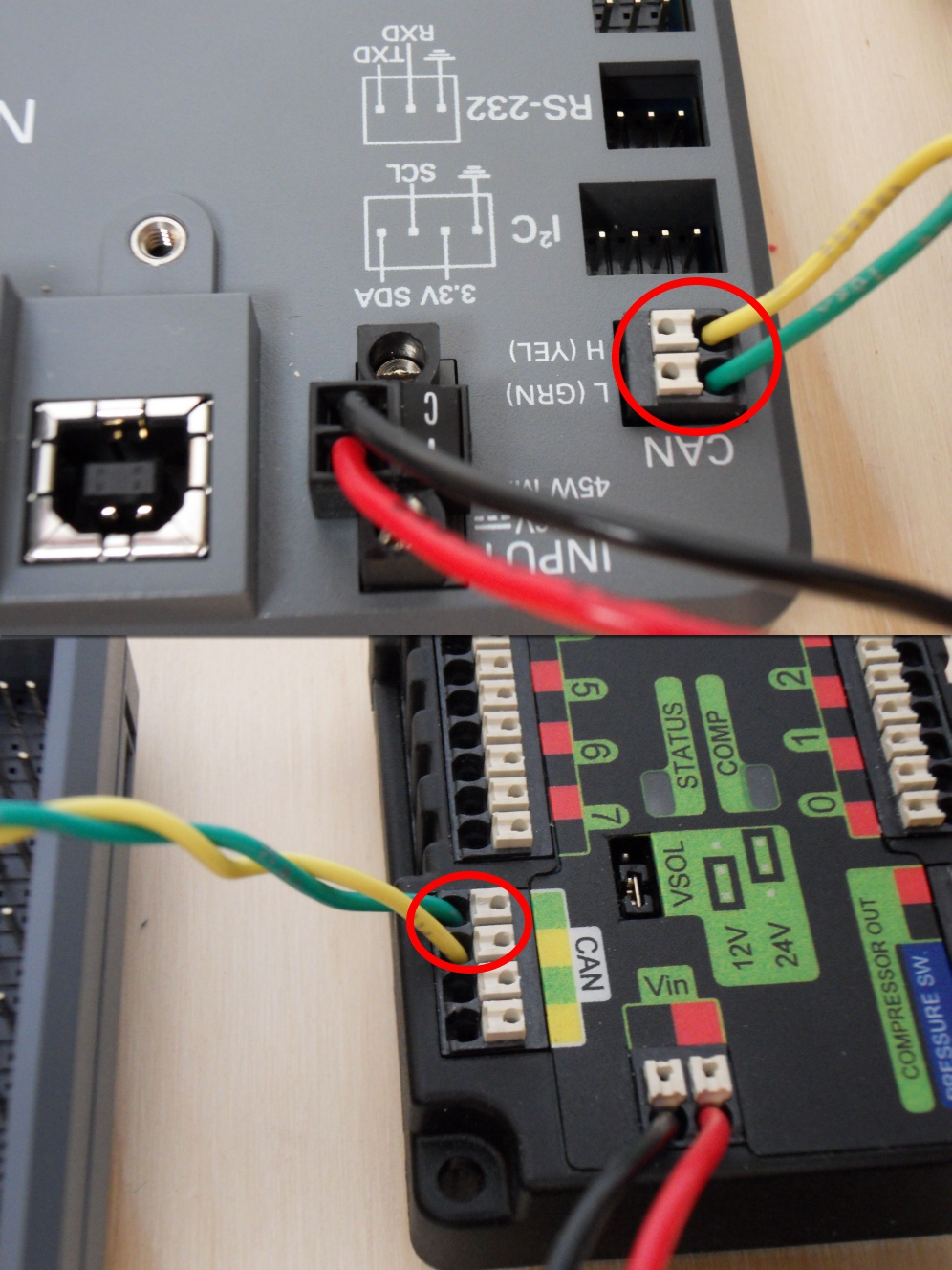

roboRIO to PCM CAN

Requires: Wire stripper, small flat screwdriver (optional), yellow/green twisted CAN cable

Strip ~5/16” (~8 mm) off of each of the CAN wires.

Insert the wires into the appropriate CAN terminals on the roboRIO (Yellow->YEL, Green->GRN).

Measure the length required to reach the CAN terminals of the PCM (either of the two available pairs). Cut and strip ~5/16” (~8 mm) off this end of the wires.

Insert the wires into the appropriate color-coded CAN terminals on the PCM. You may use either of the Yellow/Green terminal pairs on the PCM; there is no defined in or out.

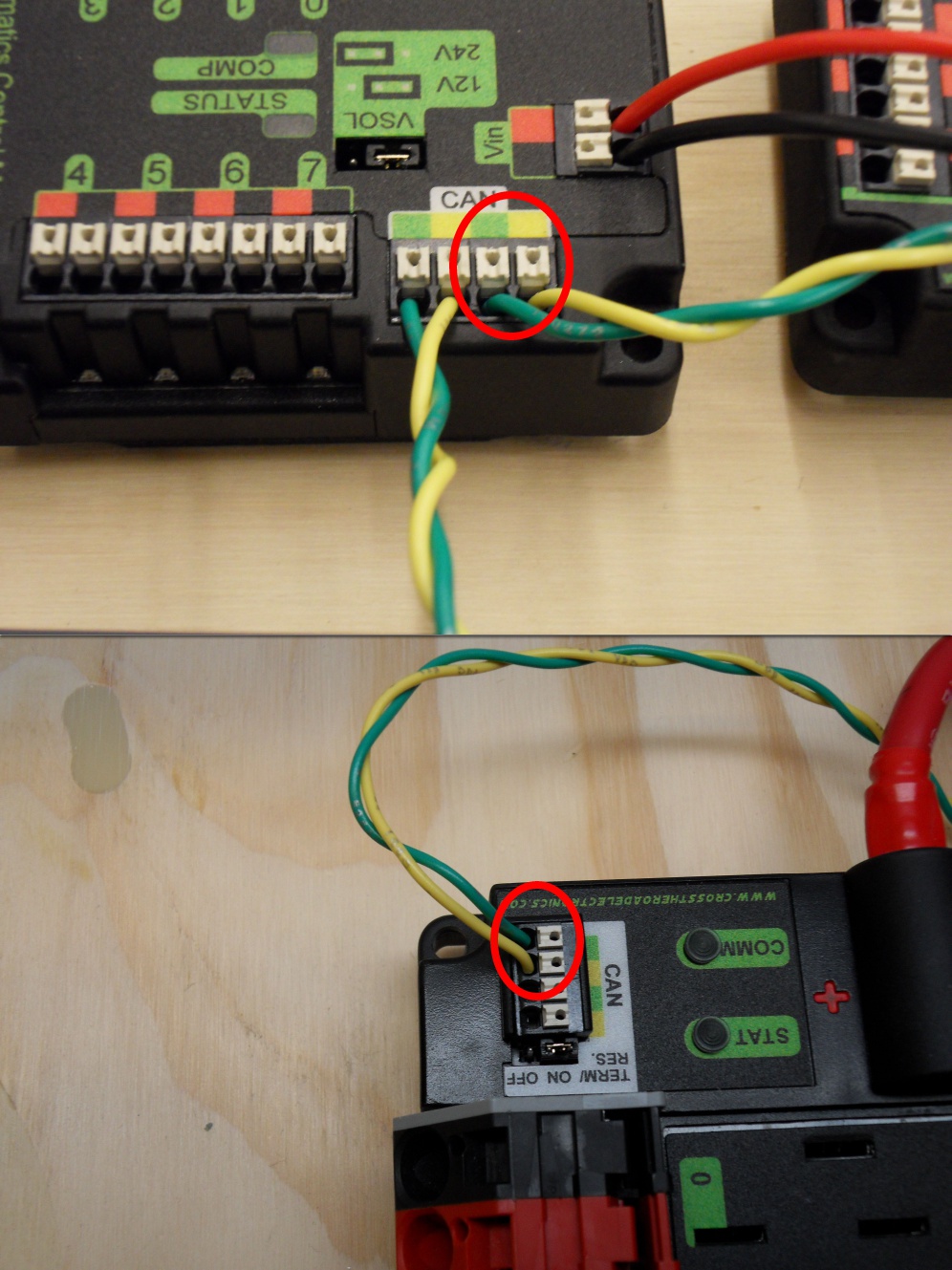

PCM to PDP CAN

Requires: Wire stripper, small flat screwdriver (optional), yellow/green twisted CAN cable

Strip ~5/16” (~8 mm) off of each of the CAN wires.

Insert the wires into the appropriate CAN terminals on the PCM.

Measure the length required to reach the CAN terminals of the PDP (either of the two available pairs). Cut and strip ~5/16” (~8 mm) off this end of the wires.

Insert the wires into the appropriate color-coded CAN terminals on the PDP. You may use either of the Yellow/Green terminal pairs on the PDP; there is no defined in or out.

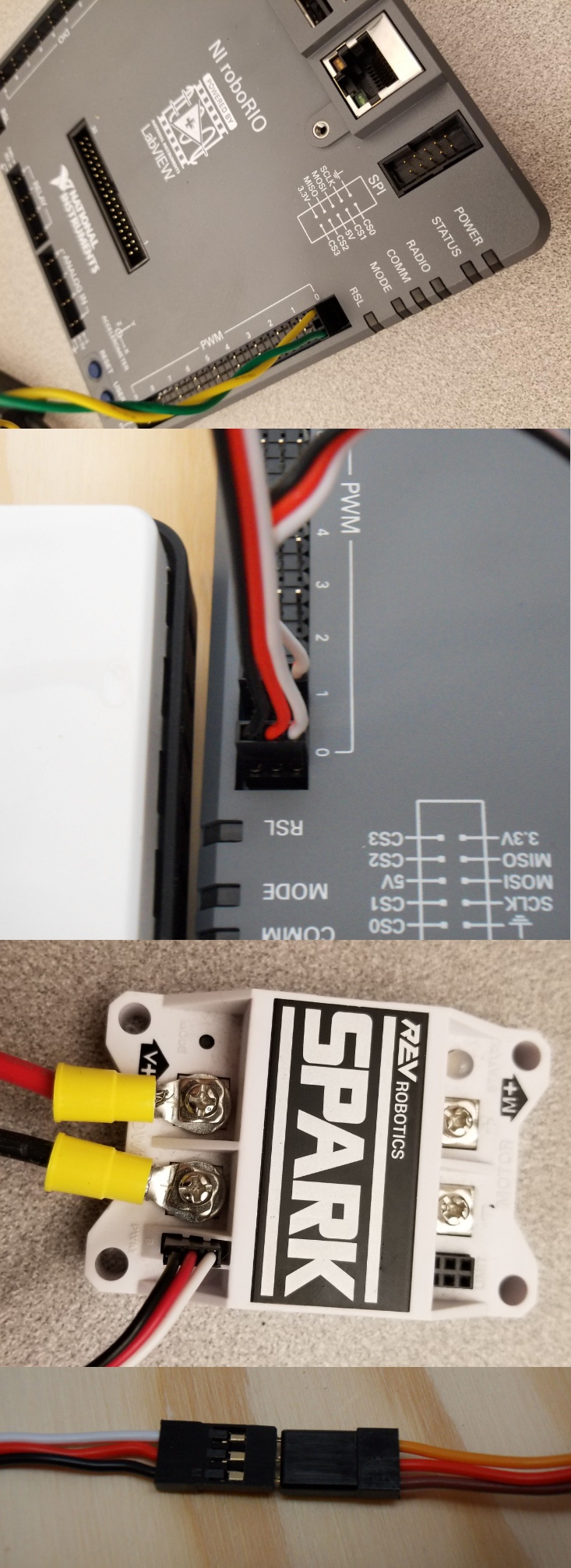

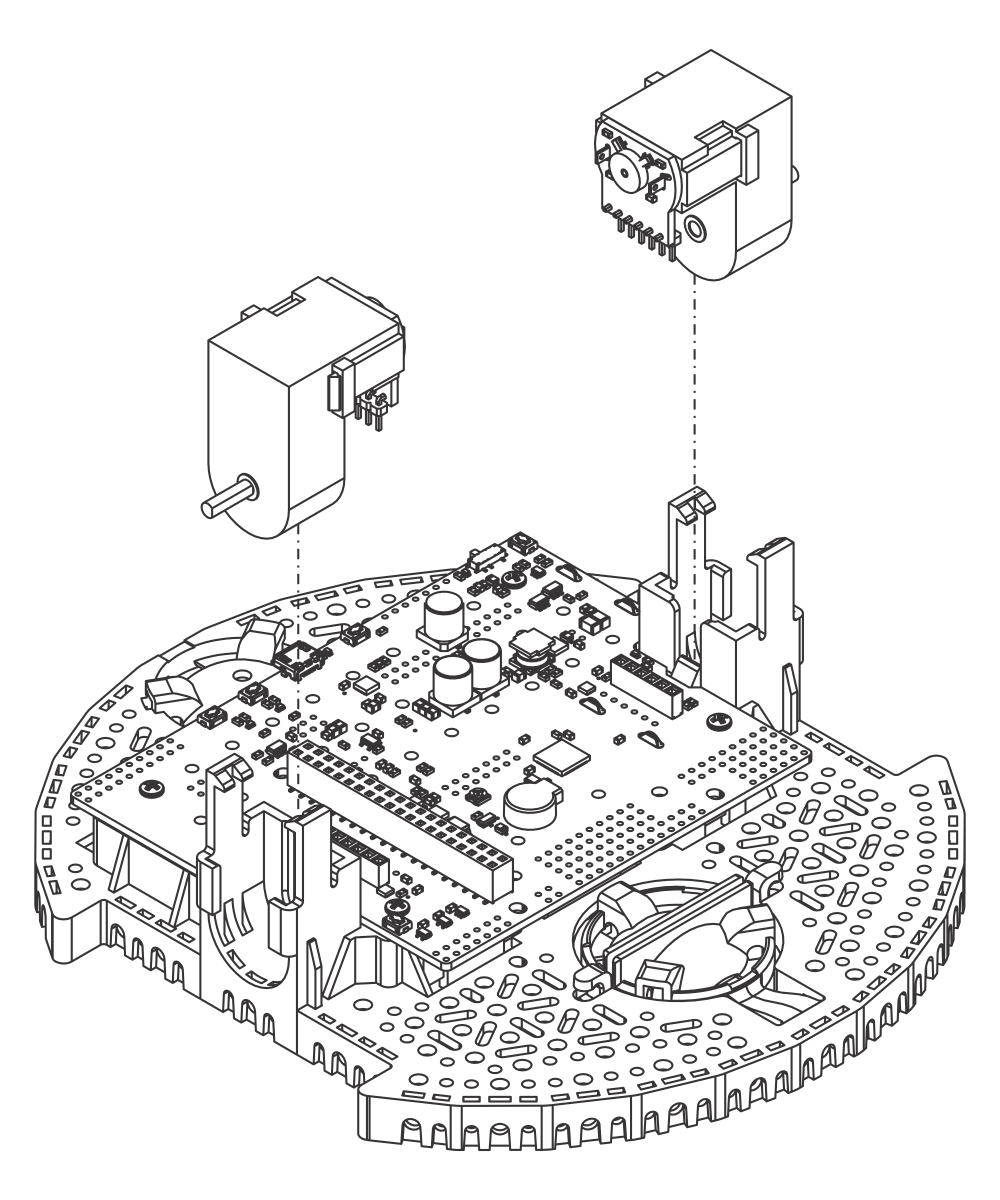

PWM Cables

This section details how to wire the SPARK MAX controllers using PWM signaling. This is a recommended starting point, as it is less complex and easier to troubleshoot than CAN operation. The SPARK MAXs (and many other FRC motor controllers) can also be wired using CAN, which enables easier configuration, advanced functionality, and better diagnostic data, and reduces the amount of wire needed.

Requires: 4x SPARK MAX PWM adapters (if using SPARK MAX), 4x PWM cables (if using controllers without integrated wires or adapters; otherwise optional), 2x PWM Y-cable (optional)

Option 1 (Direct connect):

If using SPARK MAX, attach the PWM adapter to the SPARK MAX (small adapter with a 3 pin connector with black/white wires).

If needed, attach PWM extension cables to the controller or adapter. On the controller side, match the colors or markings (some controllers may have green/yellow wiring; green should connect to black).

Attach the other end of the cable to the roboRIO with the black wire towards the outside of the roboRIO. It is recommended to connect the left side to PWM 0 and 1 and the right side to PWM 2 and 3 for the most straightforward programming experience, but any channel will work as long as you note which side goes to which channel and adjust the code accordingly.

Option 2 (Y-cable):

If using SPARK MAX, attach the PWM adapter to the SPARK MAX (small adapter with a 3 pin connector with black/white wires).

If needed, attach PWM extension cables between the controller or adapter and the PWM Y-cable. On the controller side, match the colors or markings (some controllers may have green/yellow wiring; green should connect to black).

Connect 1 PWM Y-cable to the 2 PWM cables for the controllers controlling each side of the robot. The brown wire on the Y-cable should match the black wire on the PWM cable.

Connect the PWM Y-cables to the PWM ports on the roboRIO. The brown wire should be towards the outside of the roboRIO. It is recommended to connect the left side to PWM 0 and the right side to PWM 1 for the most straightforward programming experience, but any channel will work as long as you note which side goes to which channel and adjust the code accordingly.
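Whichever option you choose, the code must use the same channel numbers as the wiring. The sketch below records the two recommended layouts and rejects duplicated or out-of-range channels. The `PwmChannelPlan` class is hypothetical, and it assumes only the roboRIO's ten onboard PWM headers (0-9) are in use.

```java
import java.util.HashSet;
import java.util.Set;

/** Hypothetical record of which roboRIO PWM channels drive each side of the robot. */
final class PwmChannelPlan {
    // Assumption: only the ten onboard roboRIO PWM headers (0-9) are used.
    static final int MIN_CHANNEL = 0;
    static final int MAX_CHANNEL = 9;

    final int[] leftChannels;
    final int[] rightChannels;

    PwmChannelPlan(int[] leftChannels, int[] rightChannels) {
        Set<Integer> used = new HashSet<>();
        for (int[] side : new int[][] {leftChannels, rightChannels}) {
            for (int channel : side) {
                if (channel < MIN_CHANNEL || channel > MAX_CHANNEL) {
                    throw new IllegalArgumentException("PWM channel out of range: " + channel);
                }
                if (!used.add(channel)) {
                    throw new IllegalArgumentException("PWM channel used twice: " + channel);
                }
            }
        }
        // Defensive copies so the plan cannot be changed after validation.
        this.leftChannels = leftChannels.clone();
        this.rightChannels = rightChannels.clone();
    }

    /** Option 1 (direct connect): left side on PWM 0 and 1, right side on PWM 2 and 3. */
    static PwmChannelPlan directConnect() {
        return new PwmChannelPlan(new int[] {0, 1}, new int[] {2, 3});
    }

    /** Option 2 (Y-cable): left side on PWM 0, right side on PWM 1. */
    static PwmChannelPlan yCable() {
        return new PwmChannelPlan(new int[] {0}, new int[] {1});
    }
}
```

In an actual WPILib project these numbers would be passed to the motor controller objects, for example as the channel argument of WPILib's `PWMSparkMax` constructor; check the WPILib API documentation for the classes used in your year's template.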

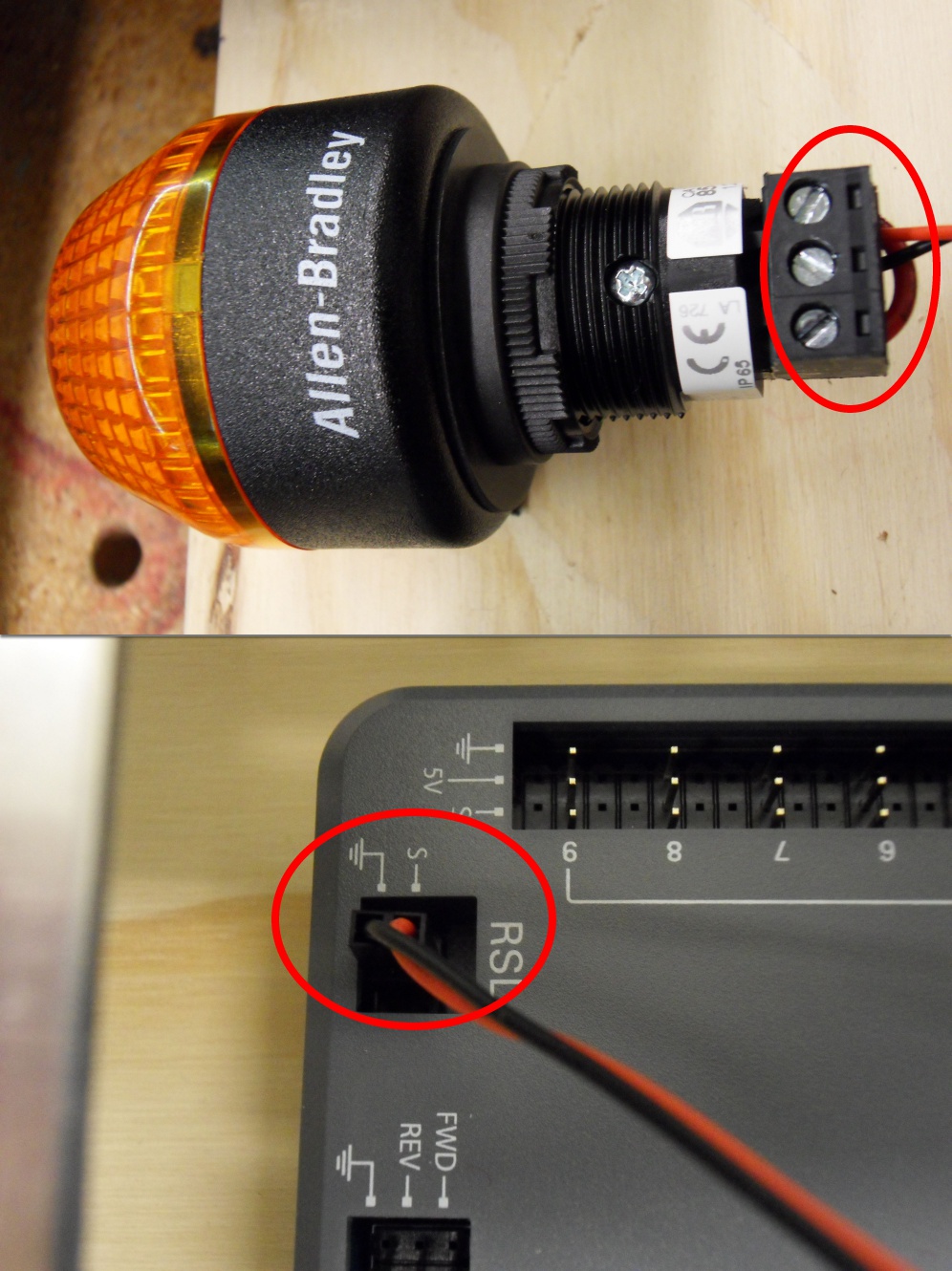

Robot Signal Light

Requires: Wire stripper, 2 pin cable, Robot Signal Light, 18 AWG (1 \(mm^2\)) red wire, very small flat screwdriver

Cut one end off the 2-pin cable and strip both wires.

Insert the black wire into the center, “N” terminal and tighten the terminal.

Strip the 18 AWG (1 \(mm^2\)) red wire and insert into the “La” terminal and tighten the terminal.

Cut and strip the other end of the 18 AWG (1 \(mm^2\)) wire to insert into the “Lb” terminal

Insert the red wire from the two pin cable into the “Lb” terminal with the 18 AWG (1 \(mm^2\)) red wire and tighten the terminal.

Connect the two-pin connector to the RSL port on the roboRIO. The black wire should be closest to the outside of the roboRIO.

Tip

You may wish to temporarily secure the RSL to the control board using cable ties or Dual Lock (it is recommended to move the RSL to a more visible location as the robot is being constructed)

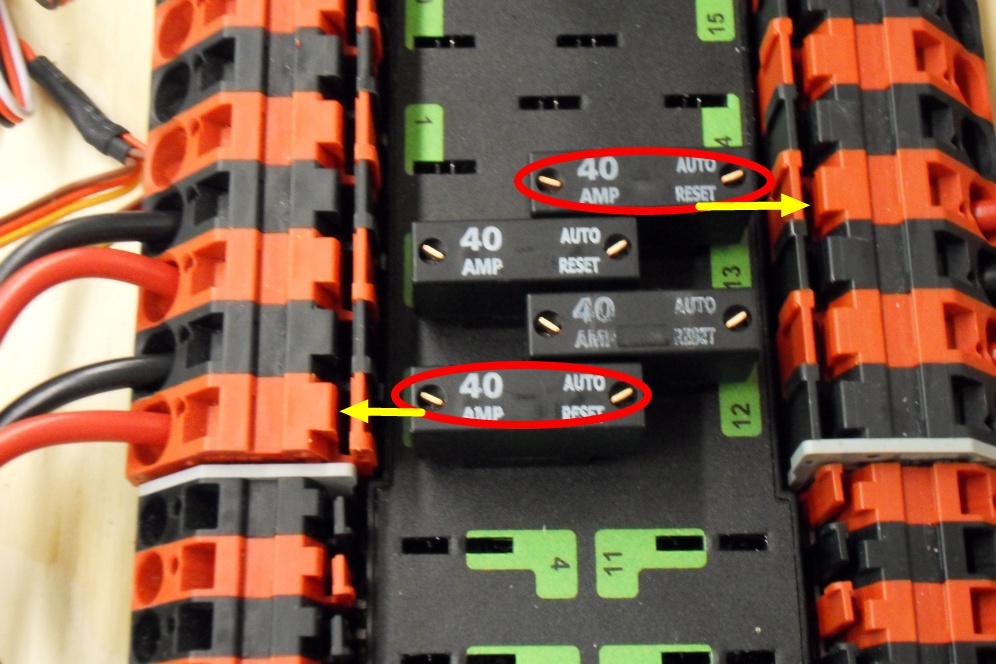

Circuit Breakers

Requires: 4x 40A circuit breakers

Insert 40-amp circuit breakers into the positions on the PDP corresponding with the Wago connectors the motor controllers are connected to. Note that, for all breakers, the breaker corresponds with the nearest positive (red) terminal (see graphic above). All negative terminals on the board are directly connected internally.

If working on a Robot Quick Build, stop here and insert the board into the robot chassis before continuing.

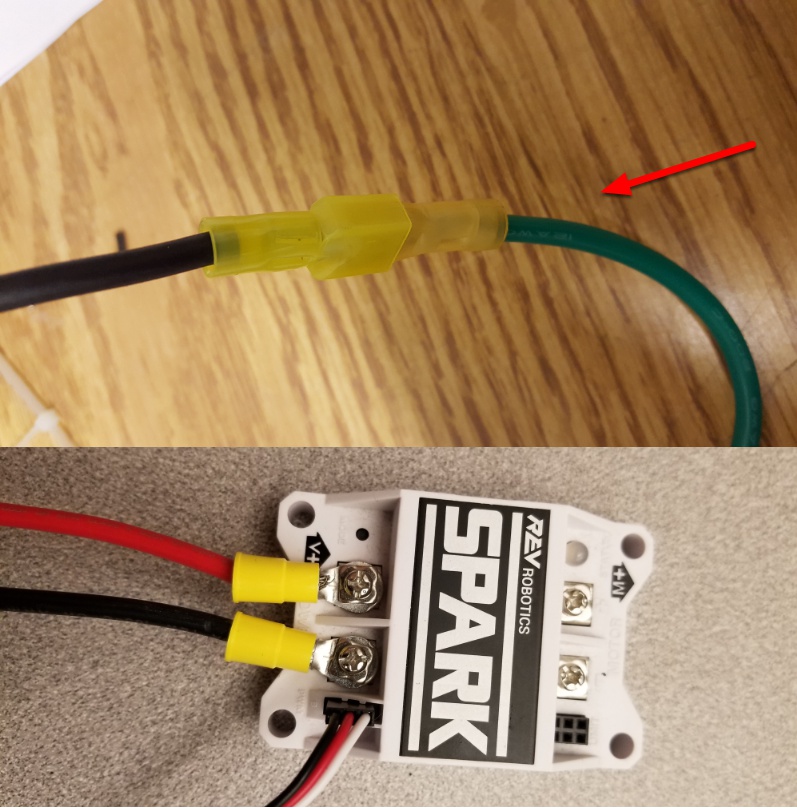

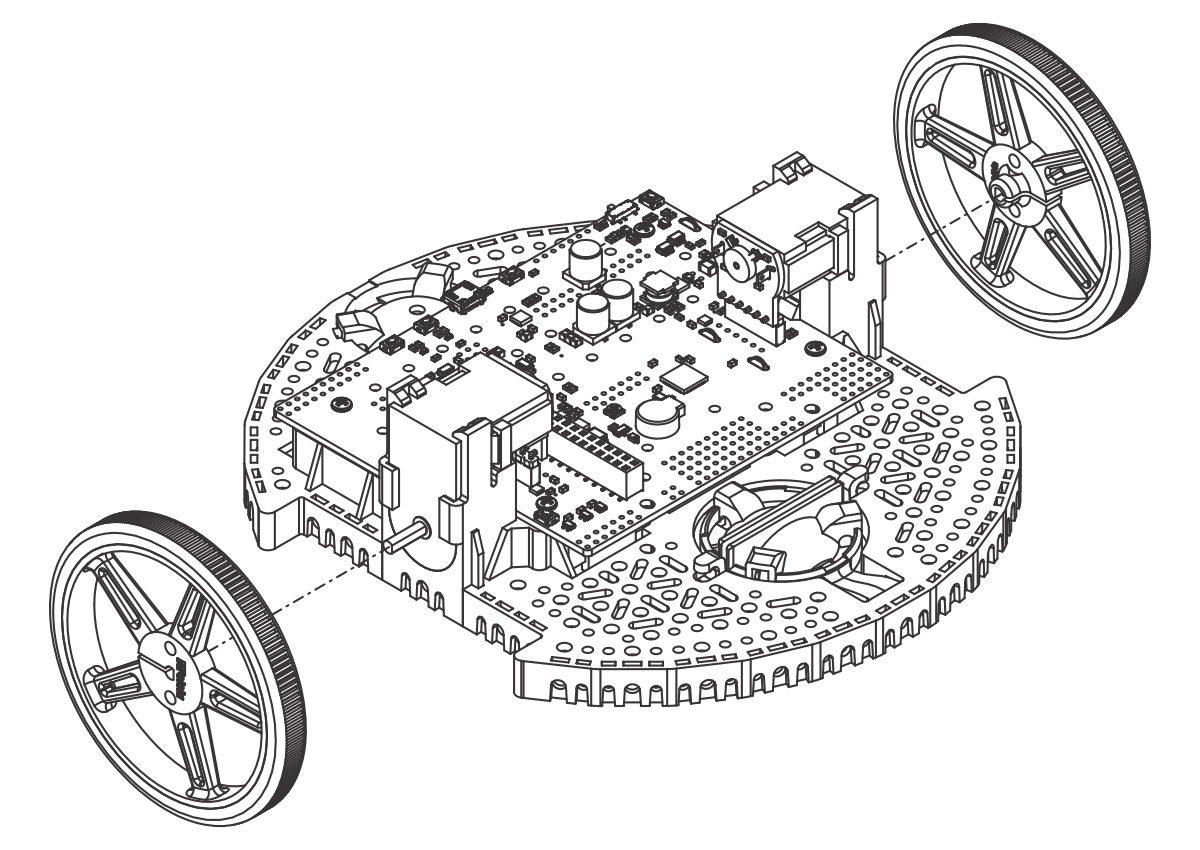

Motor Power

Requires: Wire stripper, wire crimper, Phillips head screwdriver, wire connecting hardware

For each CIM motor:

Strip the ends of the red and black wires from the CIM

For integrated wire controllers including SPARK MAX (top image):

Strip the red and black wires (or white and green wires) from the controller (the SPARK MAX white wire is unused for brushed motors such as the CIM; it should be secured and the end should be insulated, such as with electrical tape or another insulation method).

Connect the motor wires to the matching controller output wires (for controllers with white/green, connect red to white and green to black). The images above show an example using quick disconnect terminals which are provided in the Rookie KOP.

For the SPARK or other non-integrated-wire controllers (bottom image):

Crimp a ring/fork terminal on each of the motor wires.

Attach the wires to the output side of the motor controller (red to +, black to -)

STOP

Danger

Before plugging in the battery, make sure all connections have been made with the proper polarity. Ideally have someone that did not wire the robot check to make sure all connections are correct.

Start with the battery and verify that the red wire is connected to the positive terminal

Check that the red wire passes through the main breaker and to the + terminal of the PDP and that the black wire travels directly to the - terminal.

For each motor controller, verify that the red wire goes from the red PDP terminal to the red input wire on the controller (on the Victor SPX, the red wire, not the white M+!).

For each device on the end of the PDP, verify that the red wire connects to the red terminal on the PDP and the red terminal on the component.

Make sure that the orange Passive PoE cable is plugged directly into the radio NOT THE roboRIO! It must be connected to the roboRIO using an additional Ethernet cable.

Tip

It is also recommended to put the robot on blocks so the wheels are off the ground before proceeding. This will prevent any unexpected movement from becoming dangerous.

Manage Wires

Requires: Zip ties

Tip

Now may be a good time to add a few zip ties to manage some of the wires before proceeding. This will help keep the robot wiring neat.

Connect Battery

Connect the battery to the robot side of the Anderson connector. Power on the robot by moving the lever on the top of the 120A main breaker into the ridge on the top of the housing.

If stuff blinks, you probably did it right.

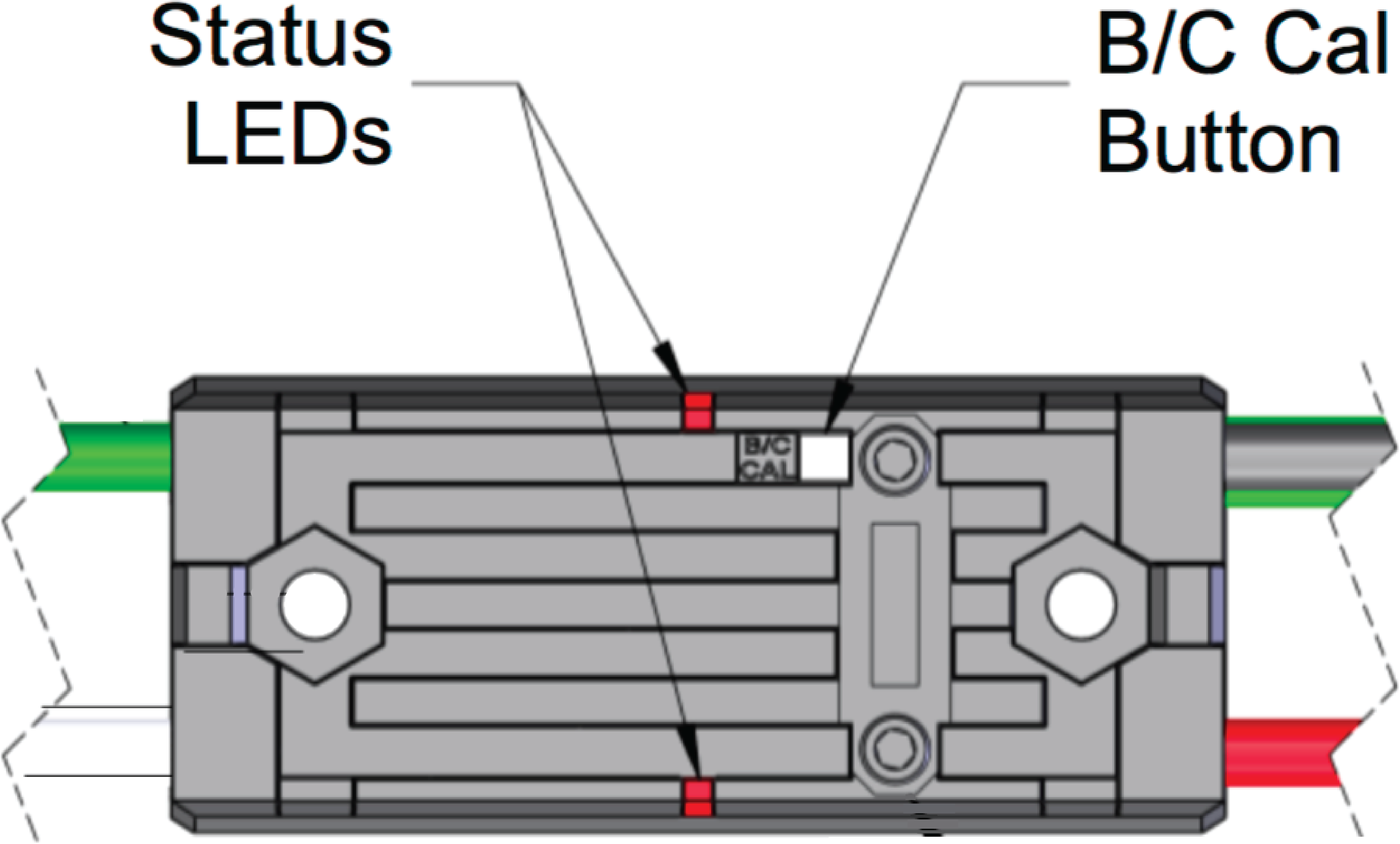

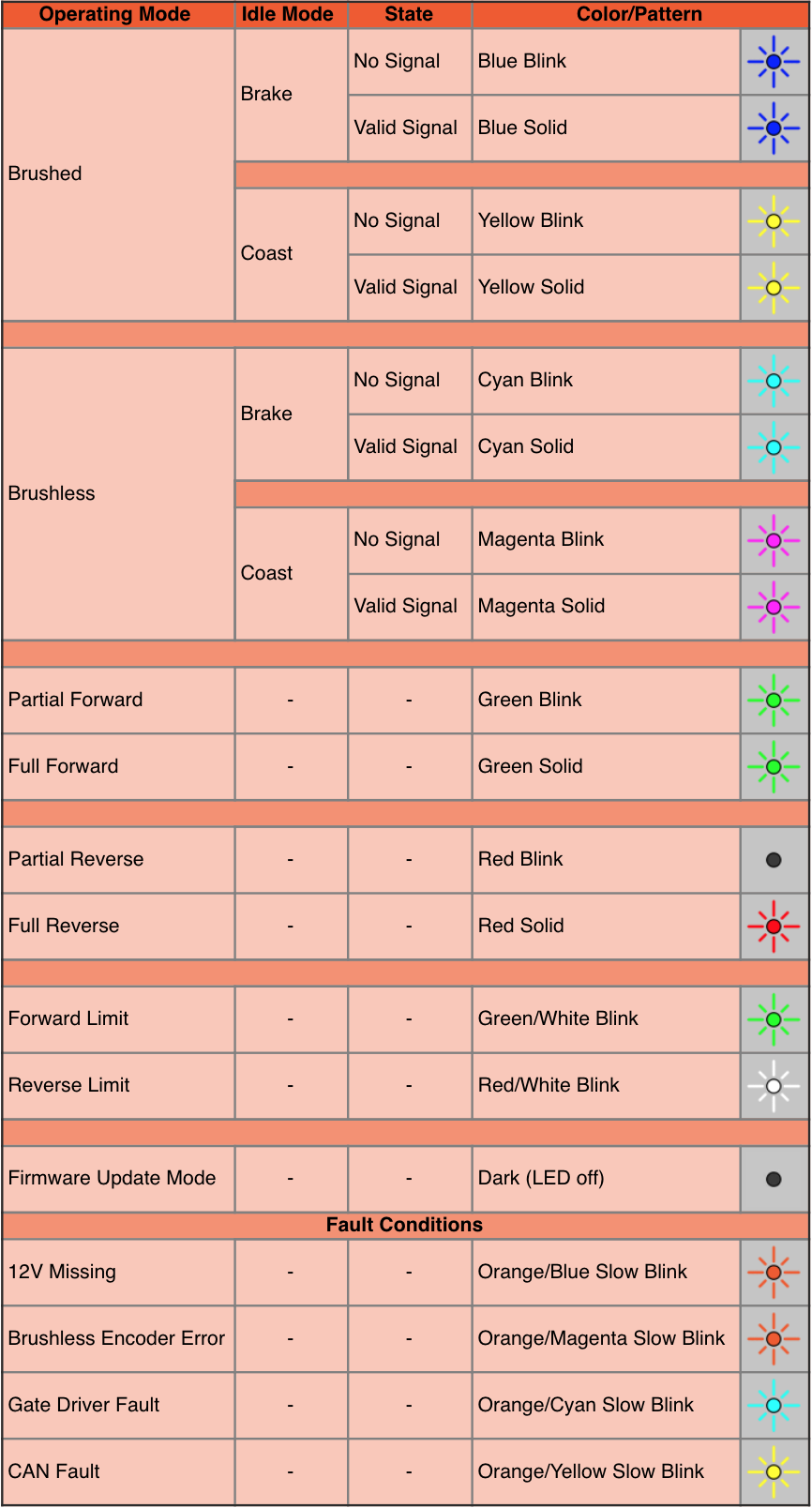

Before moving on, if using SPARK MAX controllers, there is one more configuration step to complete. The SPARK MAX motor controllers are configured to control a brushless motor by default. You can verify this by checking that the light on the controller is blinking either cyan or magenta (indicating brushless brake or brushless coast, respectively). To change to brushed mode, press and hold the mode button for 3-4 seconds until the status LED changes color. The LED should change to either blue or yellow, indicating that the controller is in brushed mode (brake or coast, respectively). To change the brake or coast mode, which controls how quickly the motor slows down when a neutral signal is applied, press the mode button briefly.
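The LED colors described above can be summarized as a lookup table. The `SparkMaxStatusColor` enum below is a hypothetical sketch, not part of any REV library; it encodes only the colors listed in this paragraph and assumes that a brief press toggles brake/coast without changing the motor type. The press-and-hold transition between brushless and brushed is left out because the resulting brake/coast setting is not stated here.

```java
/** Hypothetical decoder for the SPARK MAX status LED colors described above. */
enum SparkMaxStatusColor {
    CYAN(false, true),     // brushless, brake
    MAGENTA(false, false), // brushless, coast
    BLUE(true, true),      // brushed, brake
    YELLOW(true, false);   // brushed, coast

    final boolean brushed;
    final boolean brake;

    SparkMaxStatusColor(boolean brushed, boolean brake) {
        this.brushed = brushed;
        this.brake = brake;
    }

    /** Assumed effect of a brief mode-button press: toggle brake/coast, keep motor type. */
    SparkMaxStatusColor afterShortPress() {
        switch (this) {
            case CYAN:
                return MAGENTA;
            case MAGENTA:
                return CYAN;
            case BLUE:
                return YELLOW;
            default: // YELLOW
                return BLUE;
        }
    }
}
```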

Tip

For more information on the SPARK MAX motor controllers, including how to test your motors/controllers without writing any code by using the REV Hardware Client, see the SPARK MAX Quickstart guide.

From here, you should connect to the roboRIO and try uploading your code!

Step 2: Installing Software

An overview of the available control system software can be found here.

Offline Installation Preparation

This article contains instructions/links to components you will want to gather if you need to do offline installation of the FRC® Control System software.

Tip

This document compiles all the download links from the following documents to make it easier to install on offline computers or on multiple computers. If you are installing on a single computer that is connected to the internet, you can skip this page.

Note

The order in which these tools are installed does not matter for Java and C++ teams. LabVIEW should be installed before the FRC Game Tools or 3rd Party Libraries.
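The ordering rule in the note above can be expressed as a simple check. This is an illustrative sketch with a hypothetical `InstallOrder` class and made-up component labels; it only encodes the single constraint stated here (LabVIEW before the FRC Game Tools and 3rd Party Libraries) and accepts any order when LabVIEW is not being installed.

```java
import java.util.List;

/** Hypothetical check for the install-order rule in the note above. */
final class InstallOrder {
    private InstallOrder() {}

    // Components that, per the note, must be installed after LabVIEW.
    private static final List<String> AFTER_LABVIEW =
            List.of("FRC Game Tools", "3rd Party Libraries");

    /** Returns true if the planned order respects the LabVIEW-first rule. */
    static boolean isValid(List<String> plannedOrder) {
        int labview = plannedOrder.indexOf("LabVIEW");
        if (labview < 0) {
            return true; // LabVIEW not being installed: order does not matter
        }
        for (String component : AFTER_LABVIEW) {
            int index = plannedOrder.indexOf(component);
            if (index >= 0 && index < labview) {
                return false; // a dependent component would be installed too early
            }
        }
        return true;
    }
}
```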

Documentation

This documentation can be downloaded for offline viewing. The link to download the PDF can be found here.

Installers

All Teams

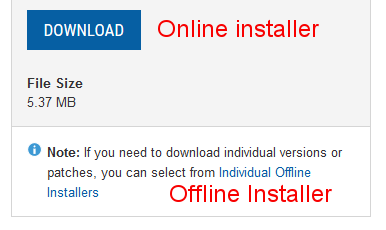

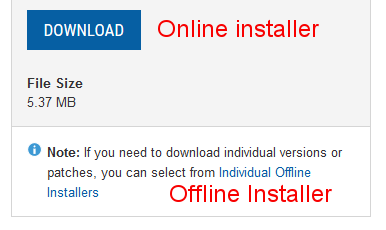

2022 FRC Game Tools (Note: Click on link for “Individual Offline Installers”)

2022 FRC Radio Configuration Utility or 2022 FRC Radio Configuration Utility Israel Version

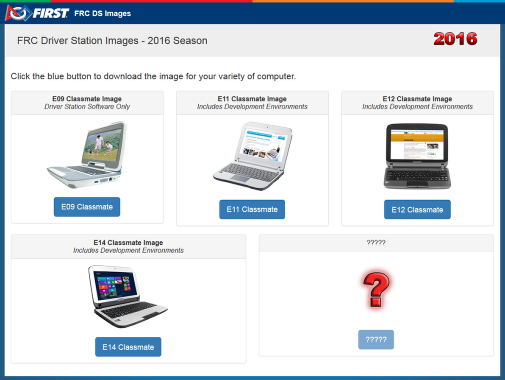

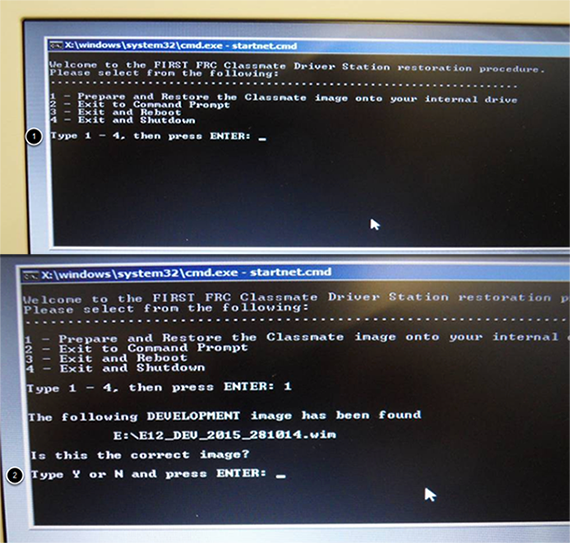

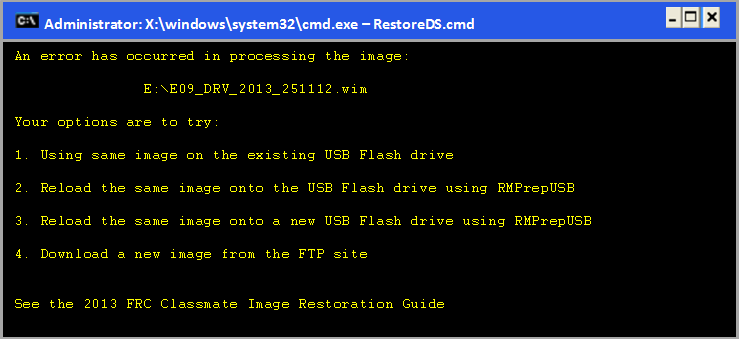

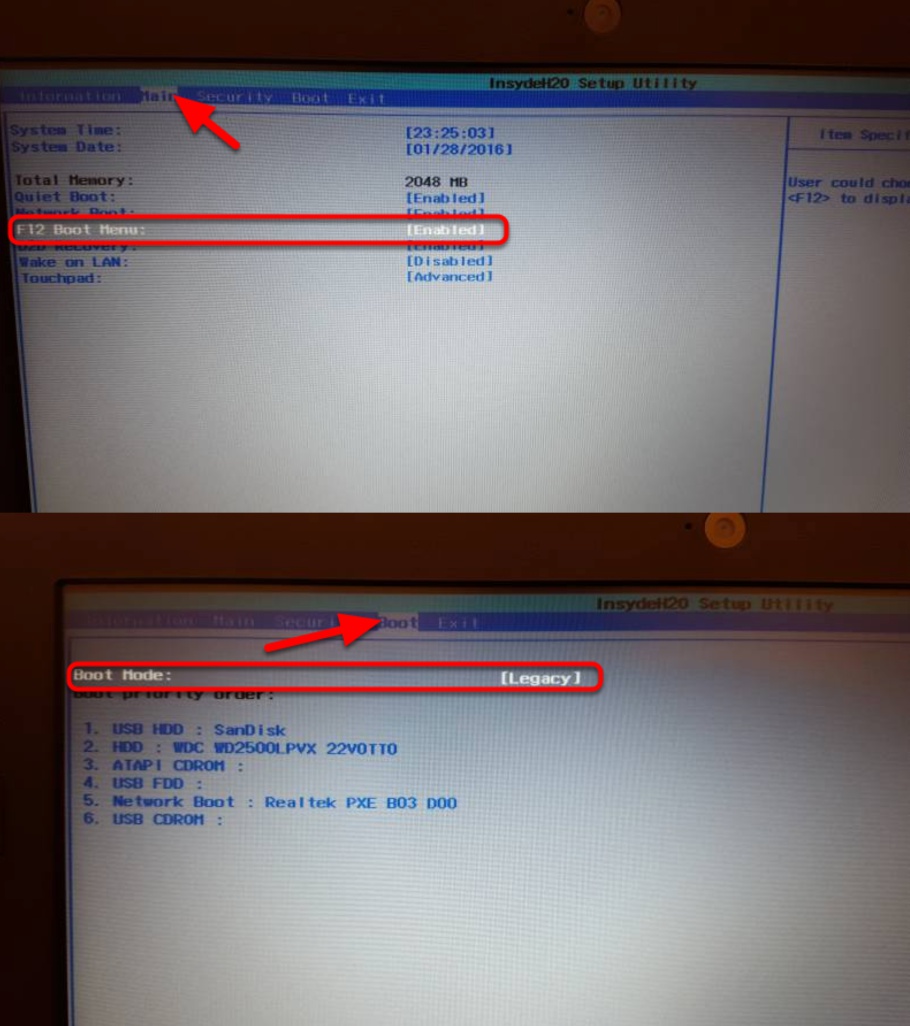

(Optional - Veterans Only!) Classmate/Acer PC Image

LabVIEW Teams

LabVIEW USB (from FIRST® Choice) or Download (Note: Click on link for “Individual Offline Installers”)

Java/C++ Teams

Note

After downloading the Java/C++ WPILib installer, run it once while connected to the internet and select Install for this User then Create VS Code zip to share with other computers/OSes for offline install and save the downloaded VS Code zip file for future offline installations.

3rd Party Libraries/Software

A directory of available 3rd party software that plugs into WPILib can be found on 3rd Party Libraries.

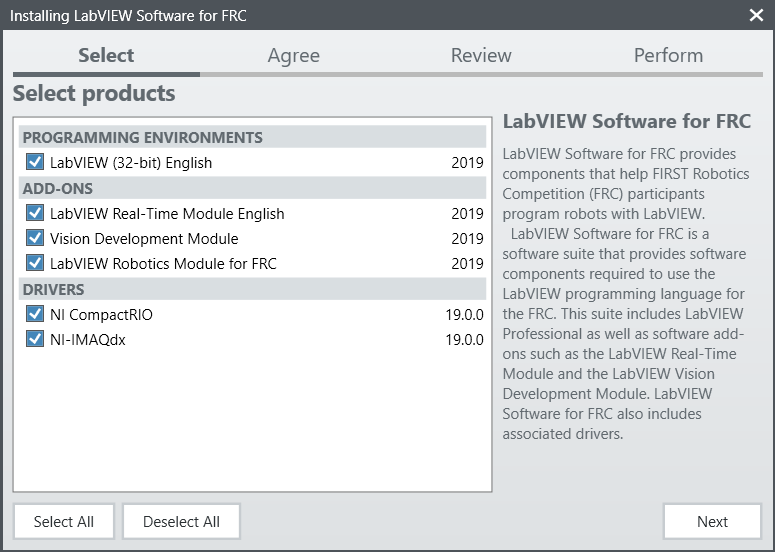

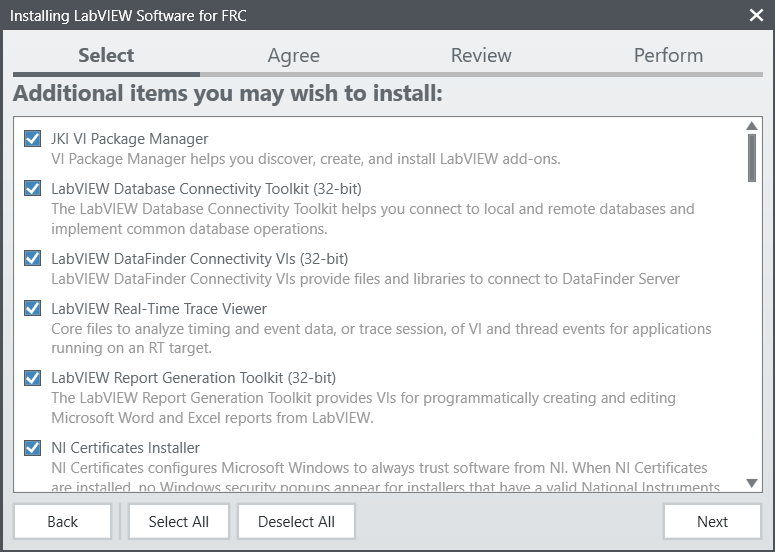

Installing LabVIEW for FRC (LabVIEW only)

Note

This installation is for teams programming in LabVIEW or using NI Vision Assistant only. C++ and Java teams not using these features do not need to install LabVIEW and should proceed to Installing the FRC Game Tools.

Download and installation times will vary widely with computer and internet connection specifications. However, note that this process involves a large file download and installation and will likely take at least an hour to complete.

Requirements

Windows 10 or higher (Windows 10, 11). Windows 11 is not officially supported by NI, but has been tested to work.

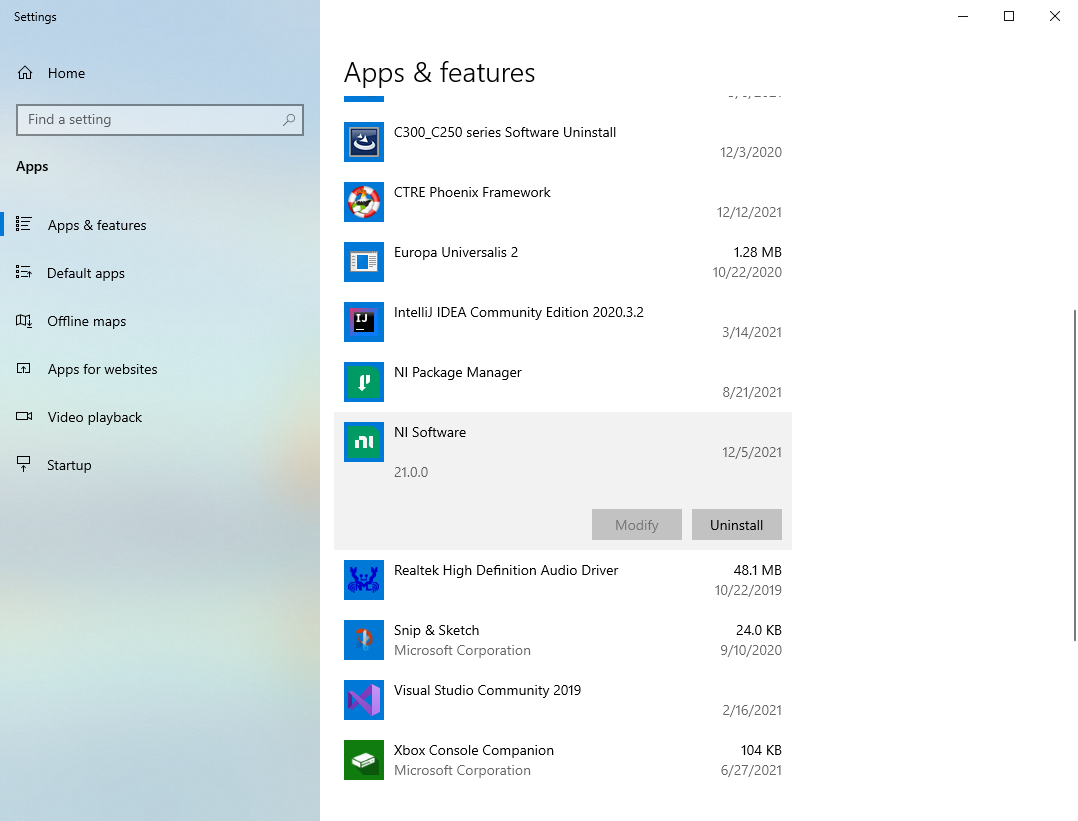

Uninstall Old Versions (Recommended)

Note

If you wish to keep programming cRIOs you will need to maintain an install of LabVIEW for FRC® 2014. The LabVIEW for FRC 2014 license has been extended. While these versions should be able to co-exist on a single computer, this is not a configuration that has been extensively tested.

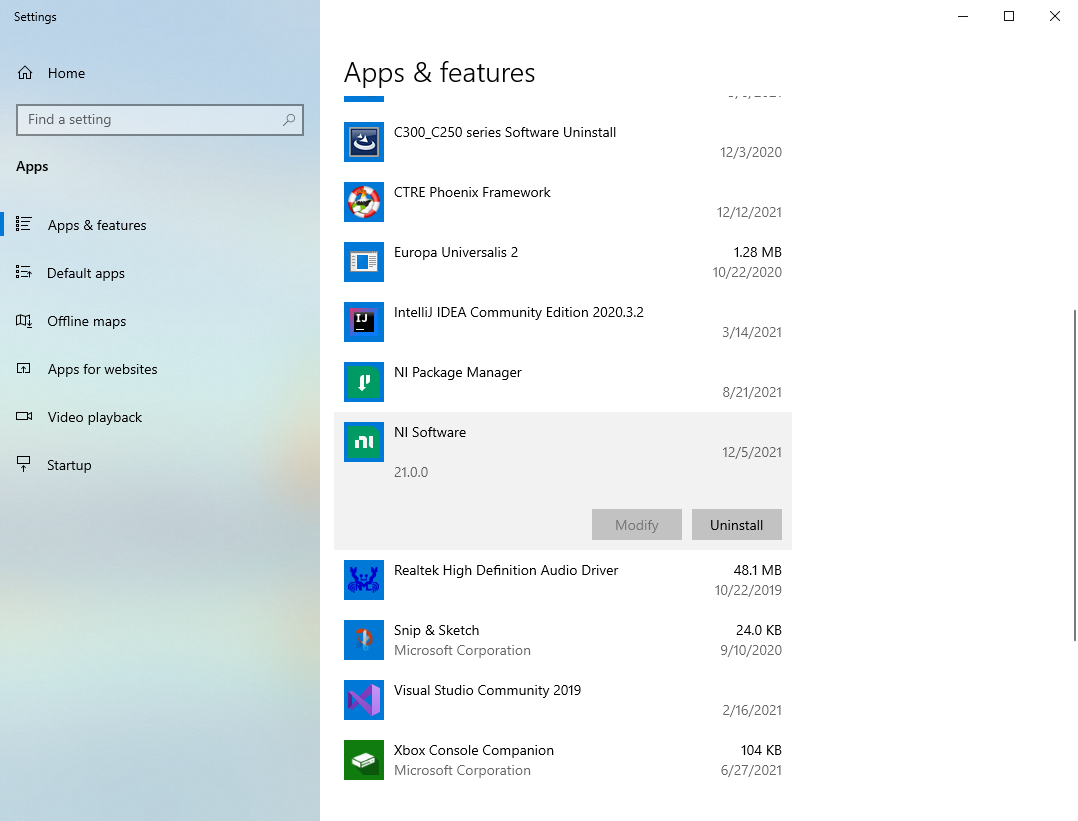

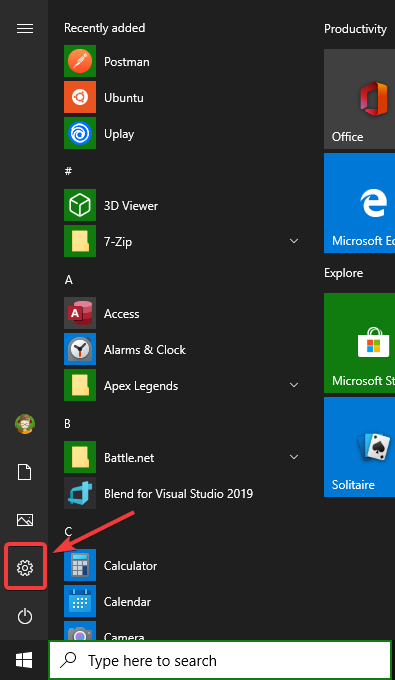

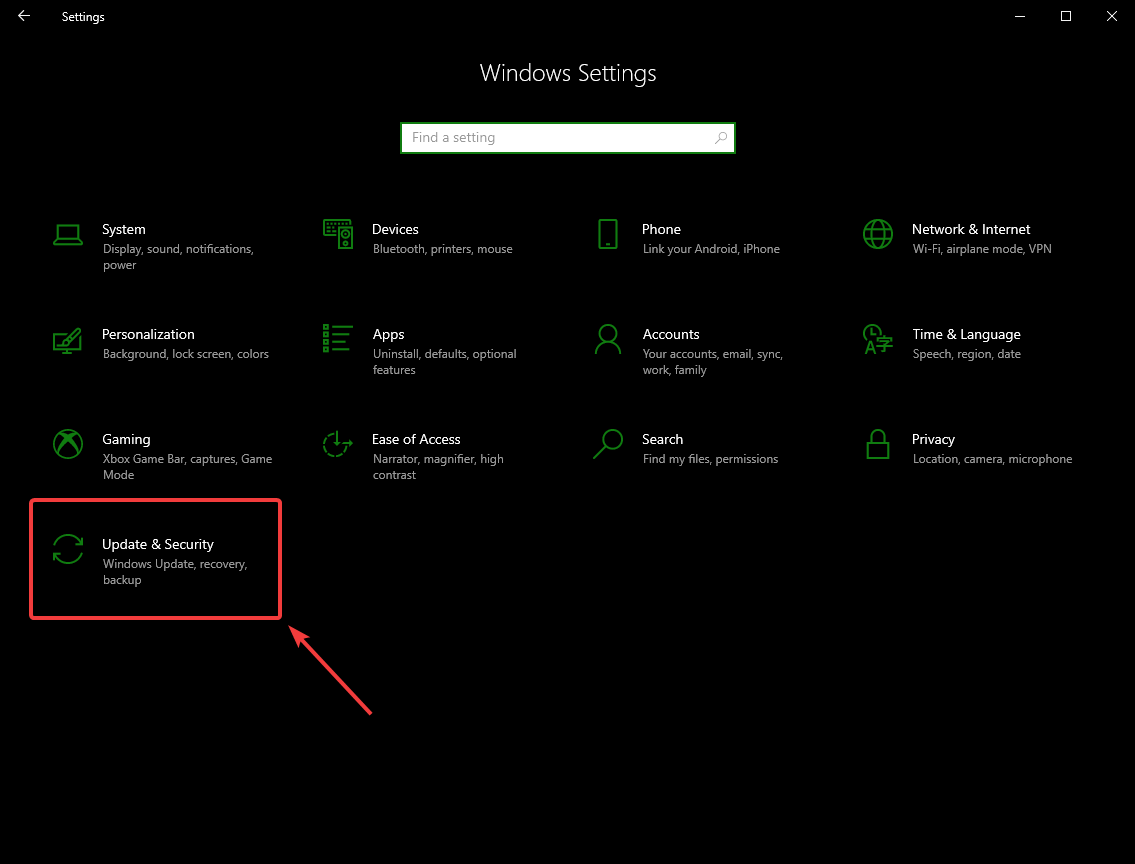

Before installing the new version of LabVIEW, it is recommended to remove any old versions. The new version will likely co-exist with the old version, but all testing has been done with FRC 2020 only. Make sure to back up any team code located in the “User\LabVIEW Data” directory before uninstalling. Then click Start >> Add or Remove Programs. Locate the entry labeled “National Instruments Software” or “NI Software”, and select Uninstall.

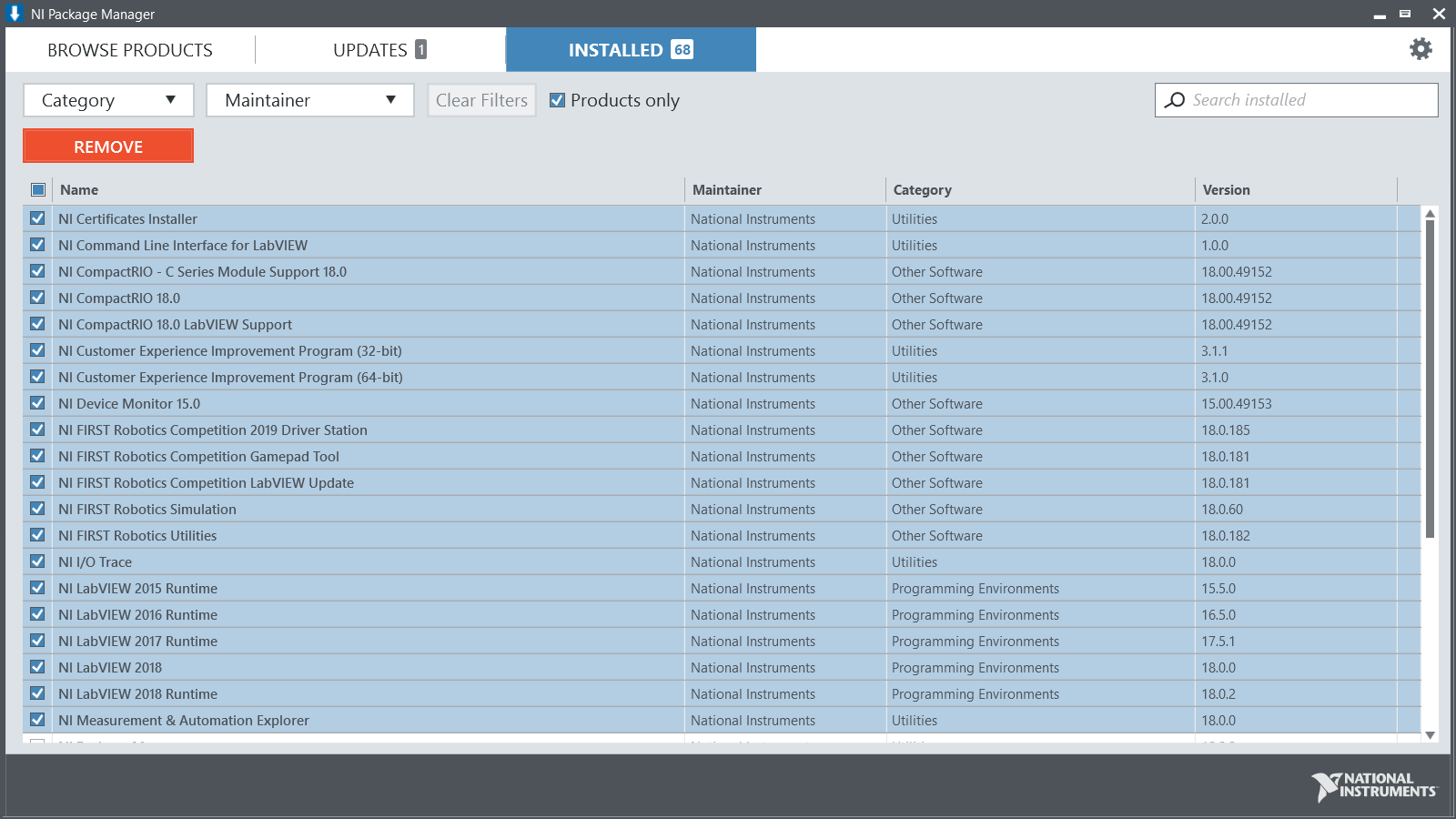

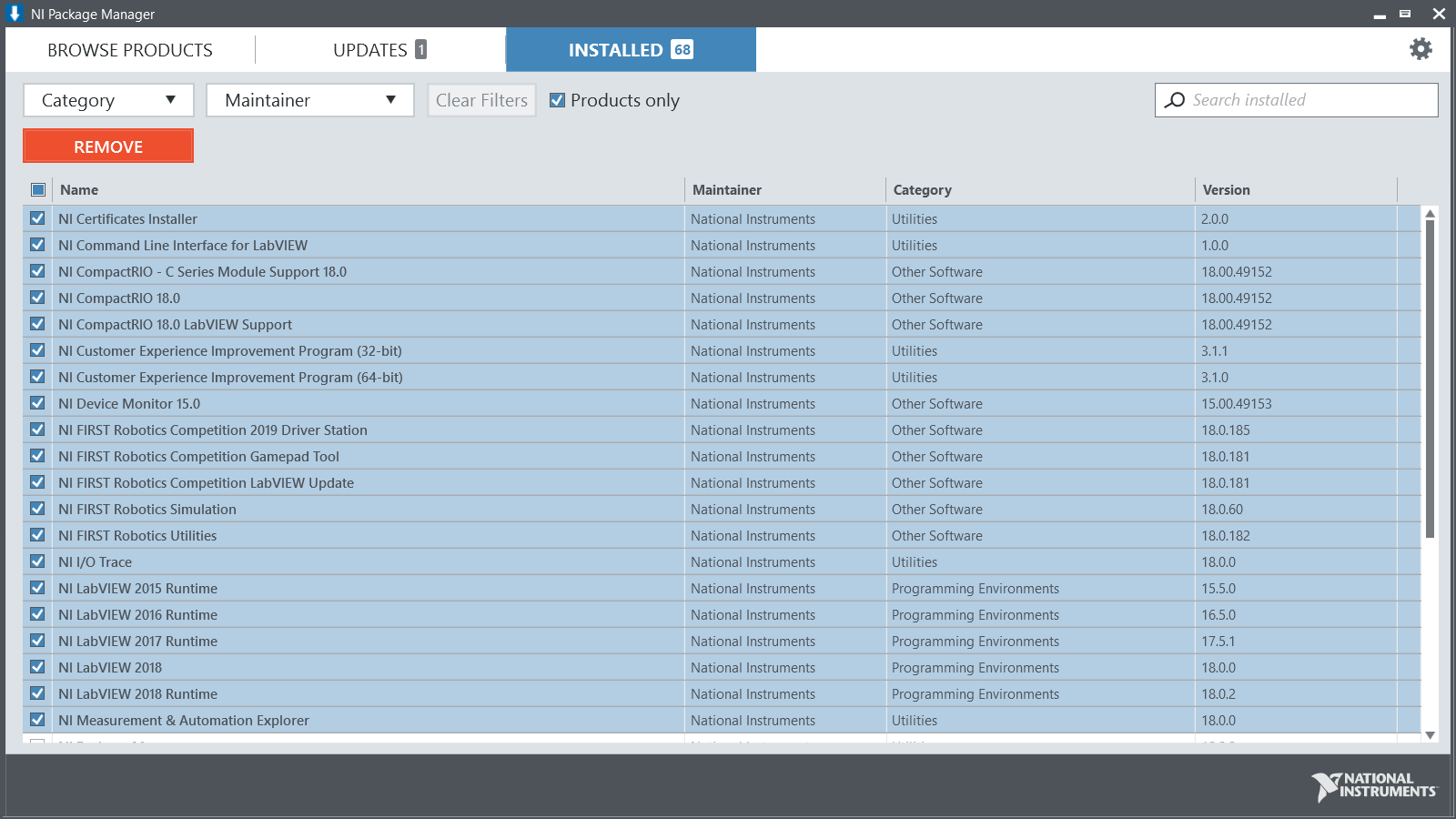

Select Components to Uninstall

In the dialog box that appears, select all entries. The easiest way to do this is to de-select the “Products Only” check-box and select the check-box to the left of “Name”. Click Remove. Wait for the uninstaller to complete and reboot if prompted.

Warning

These instructions assume that no other NI software is installed. If you have other NI software installed, it is necessary to uncheck the software that should not be uninstalled.

Getting LabVIEW installer

Either locate and insert the LabVIEW USB Drive or download the LabVIEW for FRC 2020 installer from NI. Be sure to select the correct version from the drop-down.

If you wish to install on other machines offline, do not click the Download button; instead, click Individual Offline Installers and then click Download to download the full installer.

Note

This is a large download (~8GB). It is recommended to use a fast internet connection and to use the NI Downloader to allow the download to resume if interrupted.

Installing LabVIEW

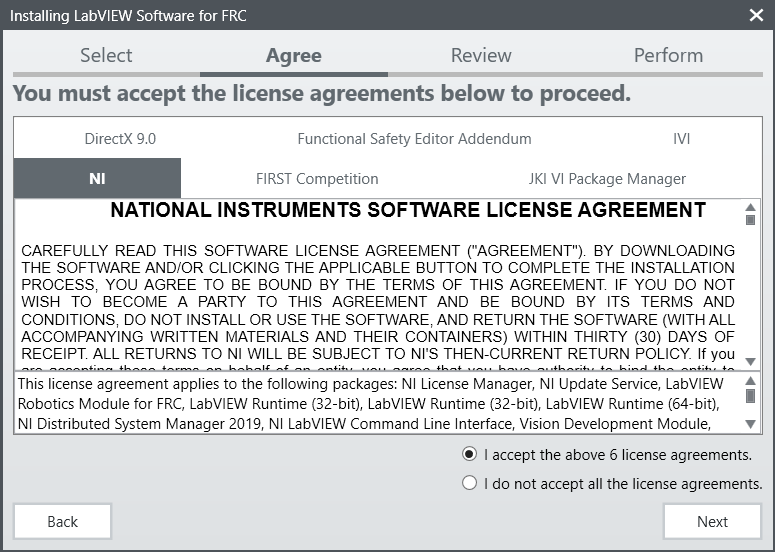

NI LabVIEW requires a license. Each season’s license is active until January 31st of the following year (e.g. the license for the 2020 season expires on January 31, 2021).

Teams are permitted to install the software on as many team computers as needed, subject to the restrictions and license terms that accompany the applicable software, and provided that only team members or mentors use the software, and solely for FRC. Rights to use LabVIEW are governed solely by the terms of the license agreements that are shown during the installation of the applicable software.
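The license window described above can be computed with `java.time`. This is a minimal sketch with a hypothetical `LabviewLicense` class; it assumes “until January 31st” includes January 31 itself.

```java
import java.time.LocalDate;

/** Hypothetical helper for the season license window: valid through January 31 of the next year. */
final class LabviewLicense {
    private LabviewLicense() {}

    /** Last day the given season's license is active. */
    static LocalDate expiration(int season) {
        return LocalDate.of(season + 1, 1, 31);
    }

    /** Assumes January 31 itself is still covered. */
    static boolean isActive(int season, LocalDate today) {
        return !today.isAfter(expiration(season));
    }
}
```

For example, `LabviewLicense.expiration(2020)` returns 2021-01-31, matching the example in the text.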

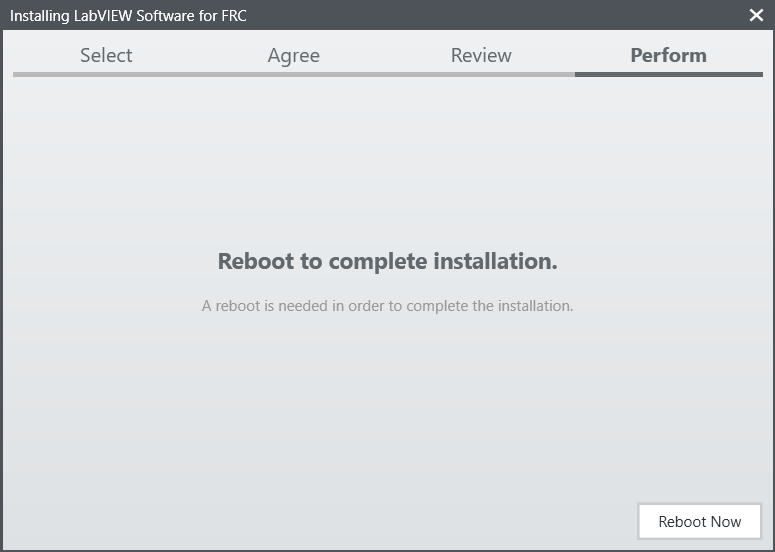

Starting Install

Run the downloaded exe file to start the install process. Click Yes if a Windows Security prompt appears.

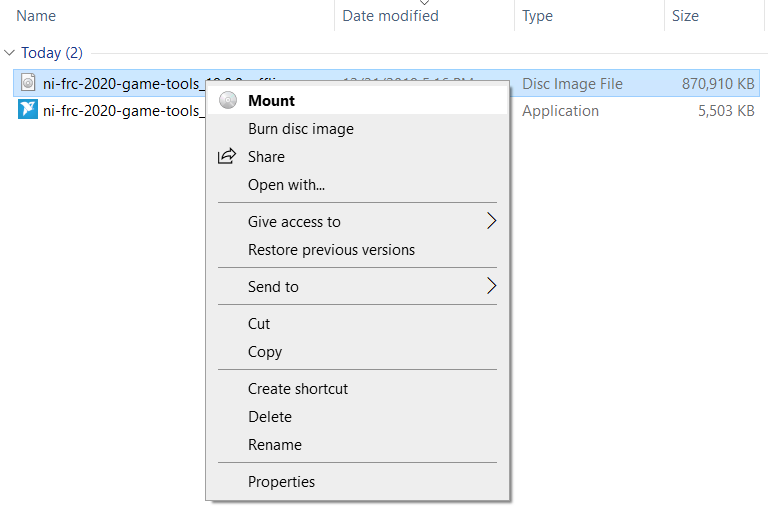

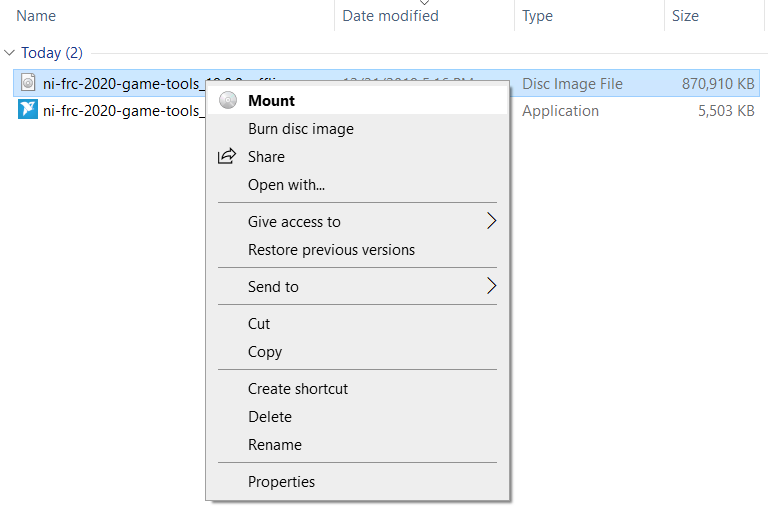

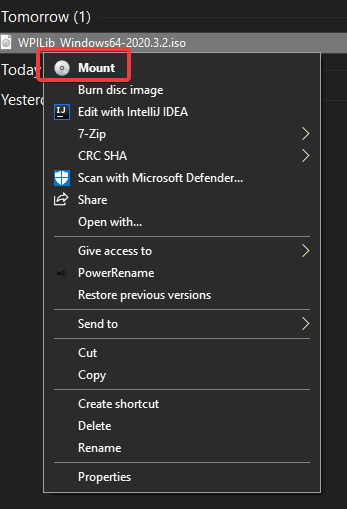

Right click on the downloaded iso file and select mount. Run install.exe from the mounted iso. Click “Yes” if a Windows Security prompt appears.

Note

Other installed programs may associate with iso files and the mount option may not appear. If that software does not give the option to mount or extract the iso file, then install 7-Zip and use that to extract the iso.

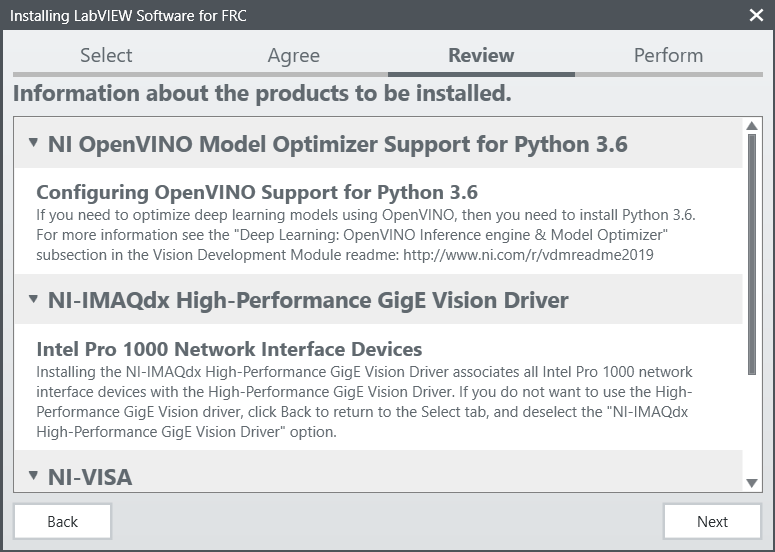

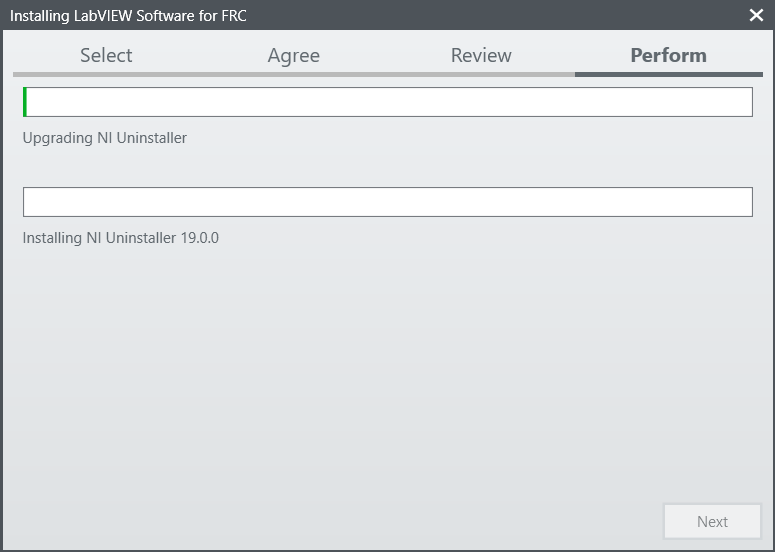

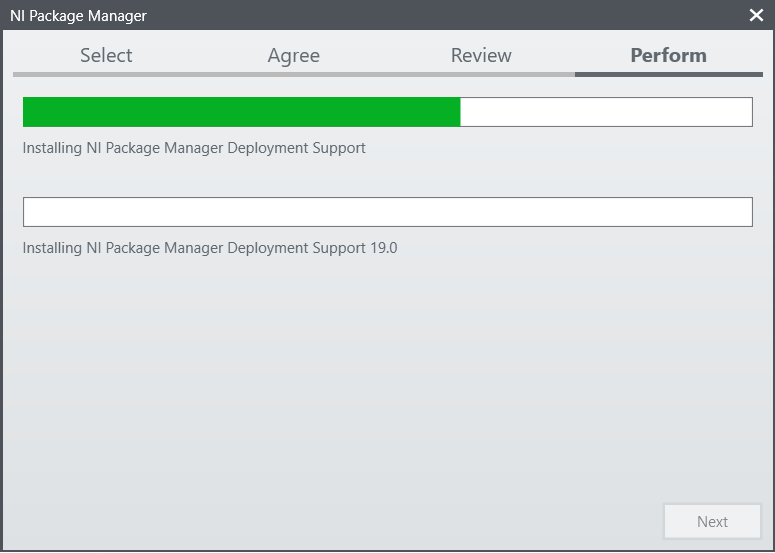

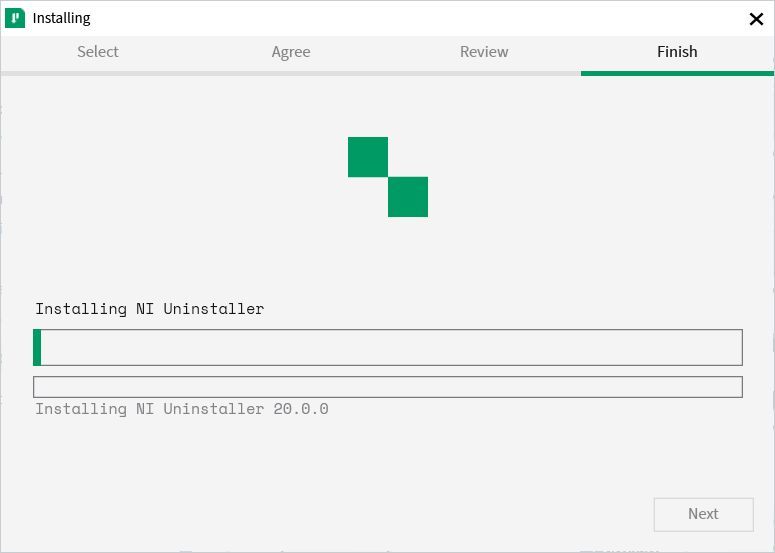

NI Package Manager Installation

Installation progress of the NI Package Manager will be tracked in this window

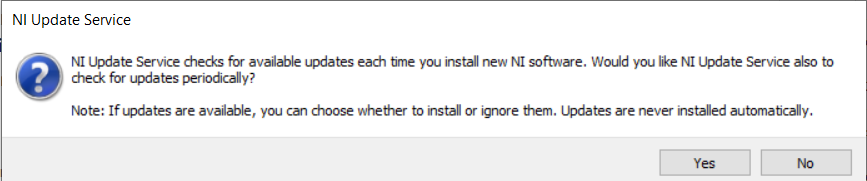

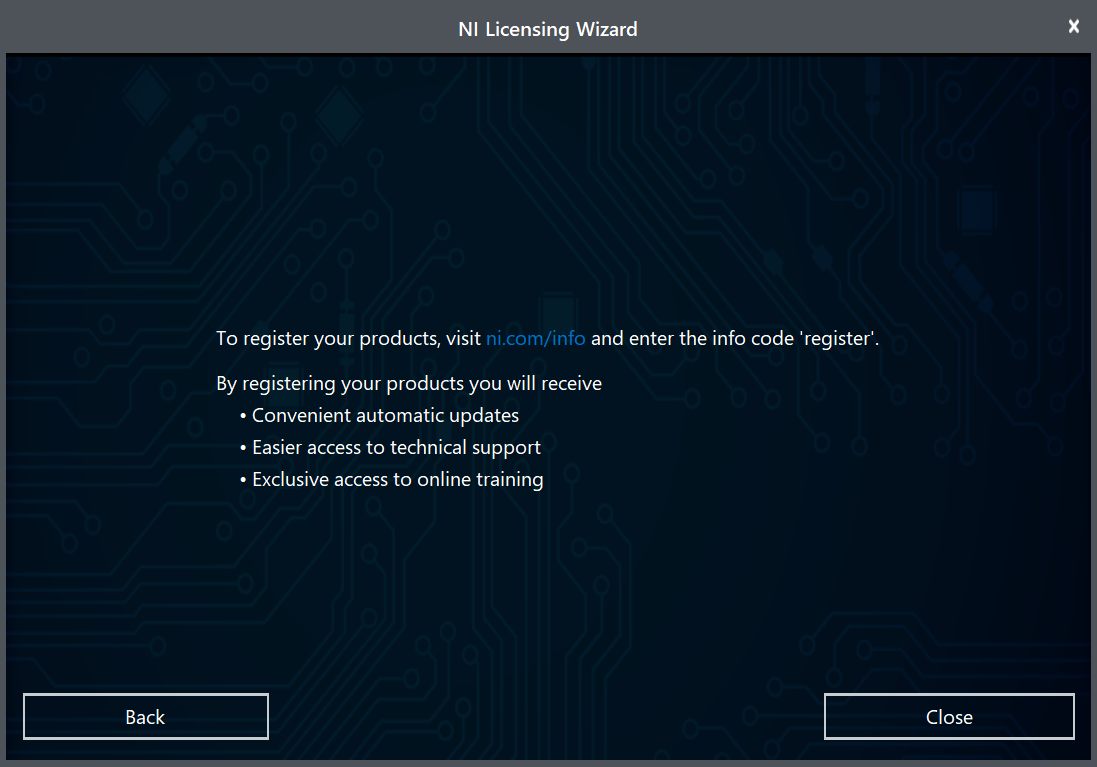

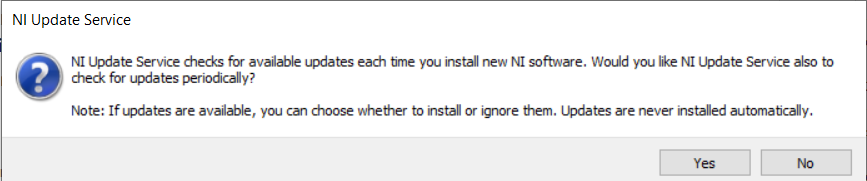

NI Update Service

You will be prompted whether to enable the NI update service. You can choose to not enable the update service.

Warning

It is not recommended to install these updates unless directed by FRC through our usual communication channels (FRC Blog, Team Updates or E-mail Blasts).

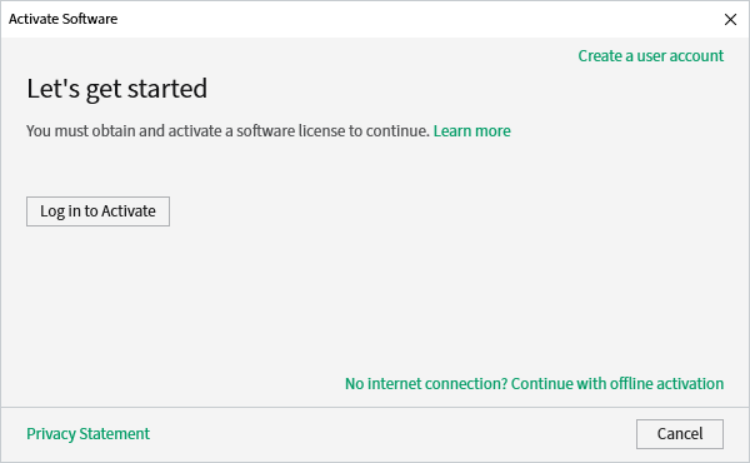

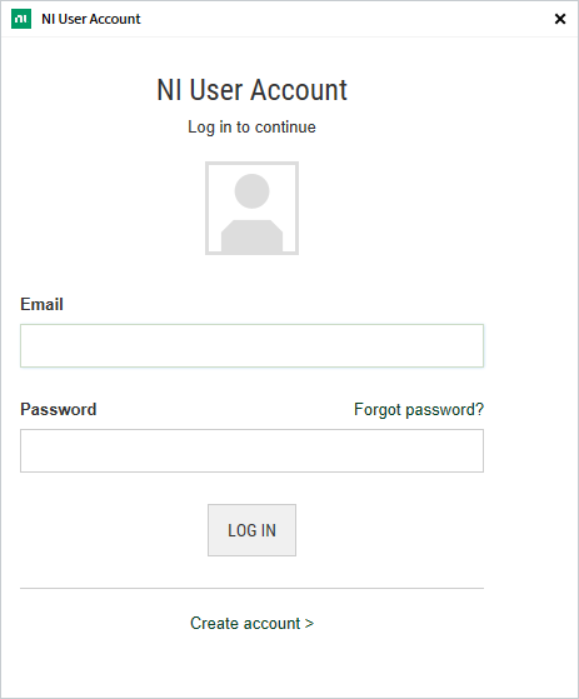

NI Activation Wizard

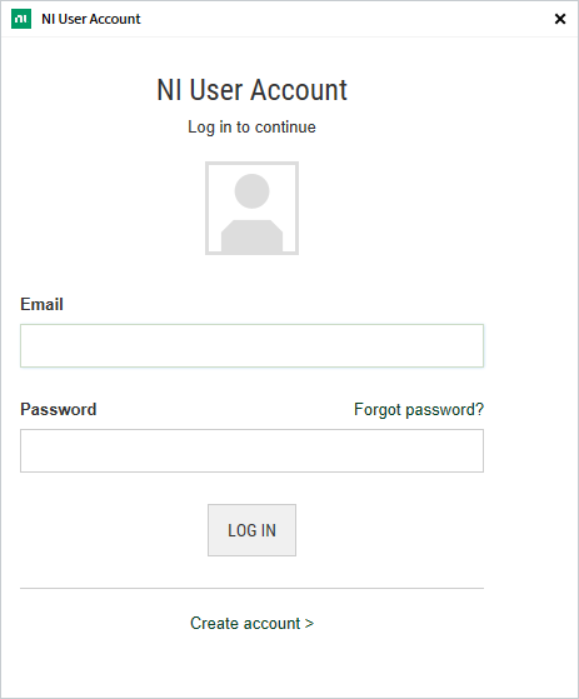

Click the Log in to Activate button.

Log into your ni.com account. If you don’t have an account, select Create account to create a free account.

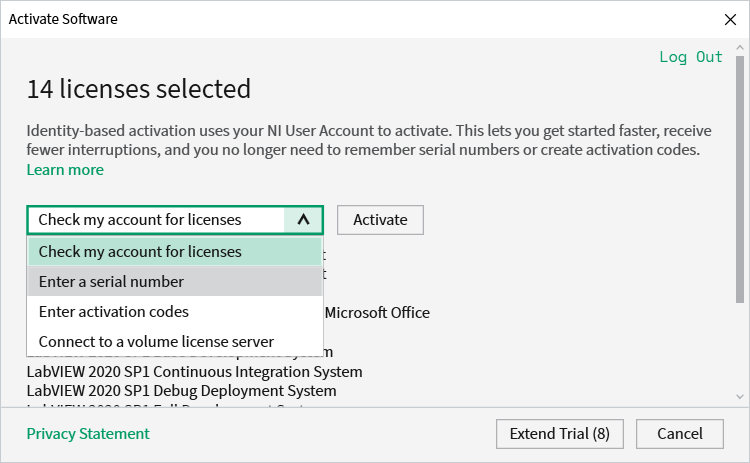

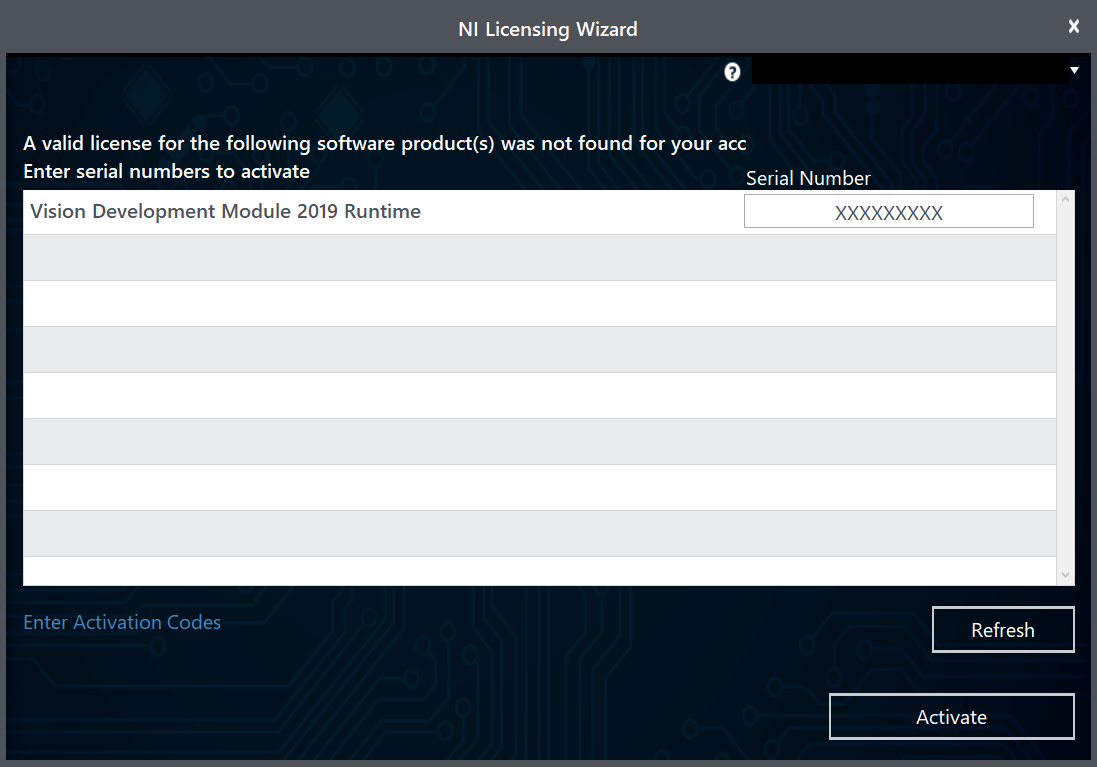

From the drop-down, select Enter a serial number.

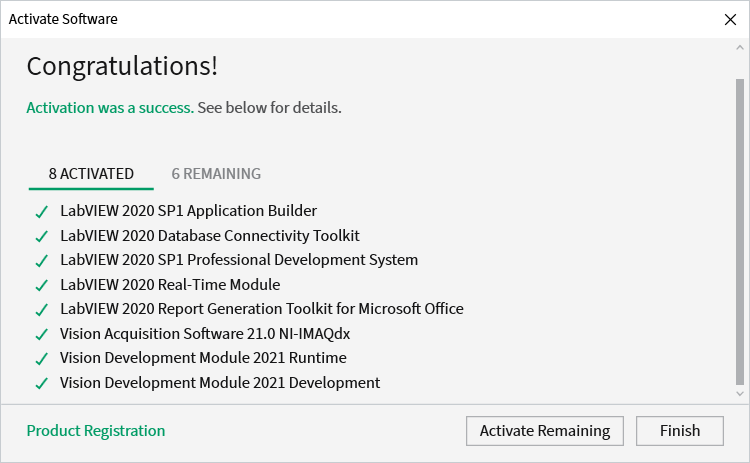

Enter the serial number in all the boxes. Click Activate.

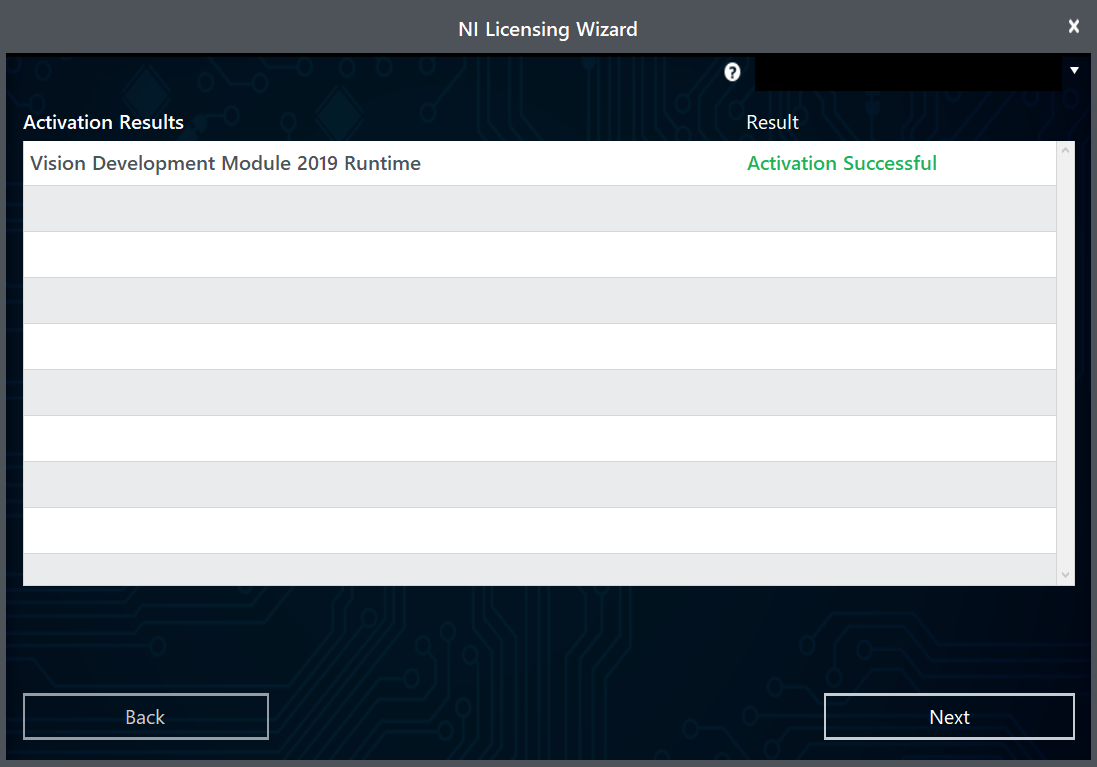

If your products activate successfully, an “Activation Successful” message will appear. If the serial number was incorrect, it will give you a text box and you can re-enter the number and select Try Again. The items shown above are not expected to activate. If everything activated successfully, click Finish.

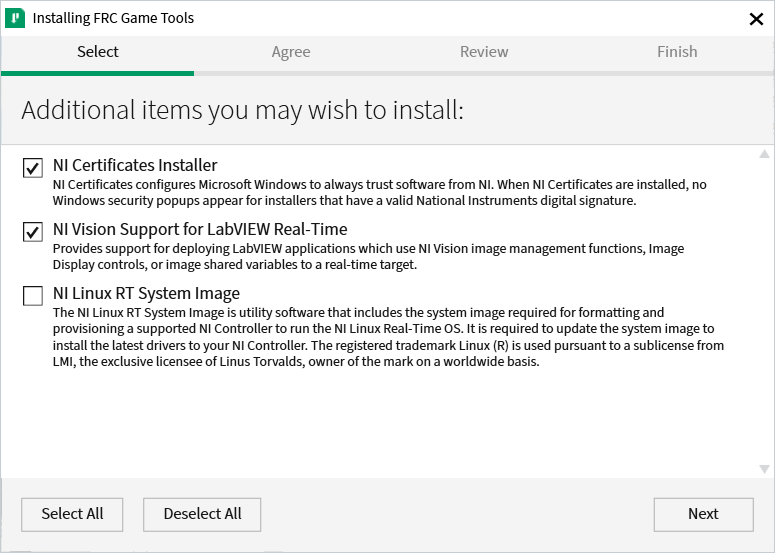

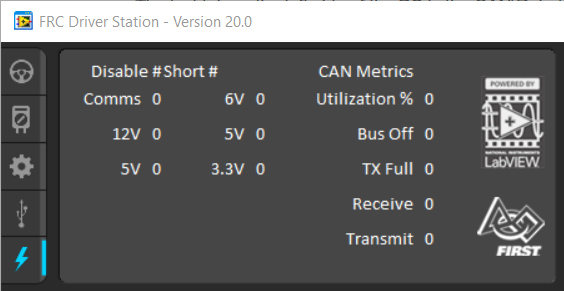

Installing the FRC Game Tools

The FRC® Game Tools contains the following software components:

LabVIEW Update

FRC Driver Station

FRC Utilities

The LabVIEW runtime components required for the Driver Station and Utilities are included in this package.

Note

No components from the LabVIEW Software for FRC package are required for running either the Driver Station or Utilities.

Requirements

Windows 10 or higher (Windows 10, 11). Windows 11 is not officially supported by NI, but has been tested to work.

Download the FRC Game Tools from NI.

If you wish to install on other machines offline, click Individual Offline Installers before clicking Download to download the full installer.

Uninstall Old Versions (Recommended)

Important

LabVIEW teams have already completed this step; do not repeat it. LabVIEW teams should skip to the Installation section.

Before installing the new version of the FRC Game Tools, it is recommended to remove any old versions. The new version will likely co-exist with the old version (note that the DS will overwrite old versions), but all testing has been done with FRC 2022 only. To uninstall, click Start >> Add or Remove Programs. Locate the entry labeled “National Instruments Software” or “NI Software”, and select Uninstall.

Note

It is only necessary to uninstall previous versions when installing a new year’s tools. For example, uninstall the 2021 tools before installing the 2022 tools. It is not necessary to uninstall before upgrading to a new update of the 2022 game tools.

Select Components to Uninstall

In the dialog box that appears, select all entries. The easiest way to do this is to de-select the Products Only check-box and select the check-box to the left of “Name”. Click Remove. Wait for the uninstaller to complete and reboot if prompted.

Installation

Important

The Game Tools installer may prompt that .NET Framework 4.6.2 needs to be updated or installed. Follow the on-screen prompts to complete that installation, rebooting if requested, then resume the installation of the FRC Game Tools, restarting the installer if necessary.

Extraction

If you downloaded the online installer, run the downloaded executable file to start the install process. Click Yes if a Windows Security prompt appears.

If you downloaded the offline installer (an .iso file), right-click the file and select Mount, then run install.exe from the mounted image. Click Yes if a Windows Security prompt appears.

Note

Other installed programs may associate with iso files and the mount option may not appear. If that software does not give the option to mount or extract the iso file, then install 7-Zip and use that to extract the iso.

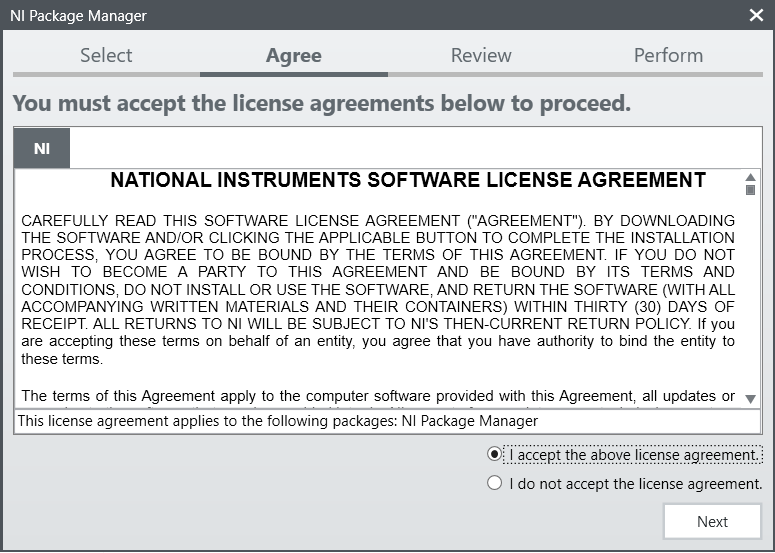

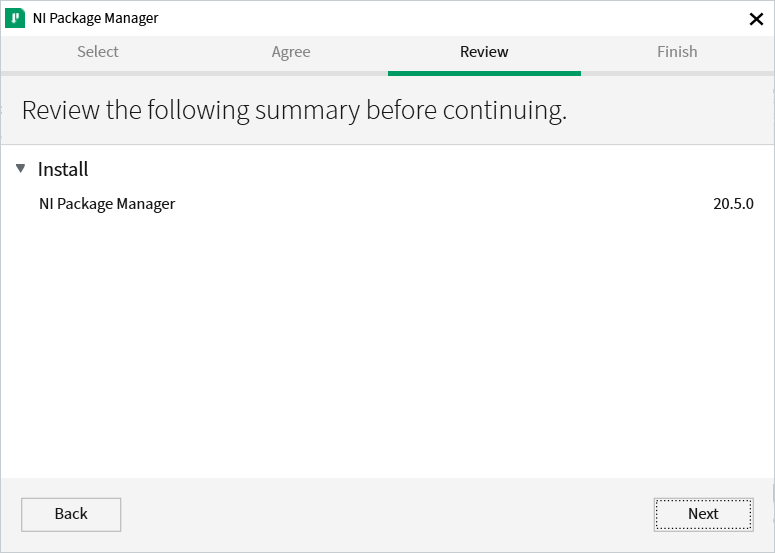

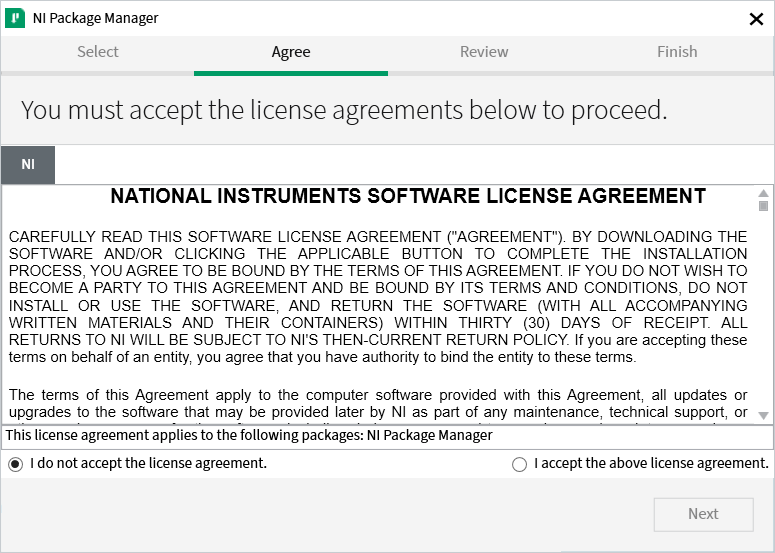

NI Package Manager License

If you see this screen, click Next. This screen confirms that you agree to the NI Package Manager License agreement.

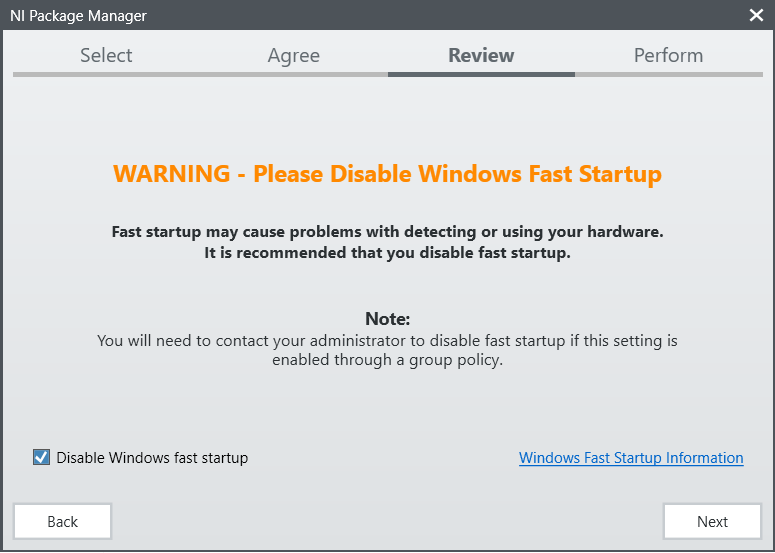

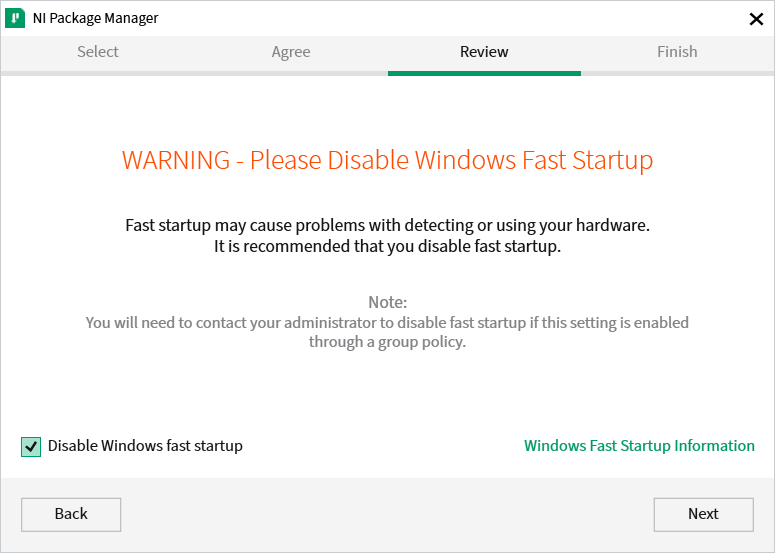

Disable Windows Fast Startup

It is recommended to leave this screen as-is, as Windows Fast Startup can cause issues with the NI drivers required to image the roboRIO. Go ahead and click Next.

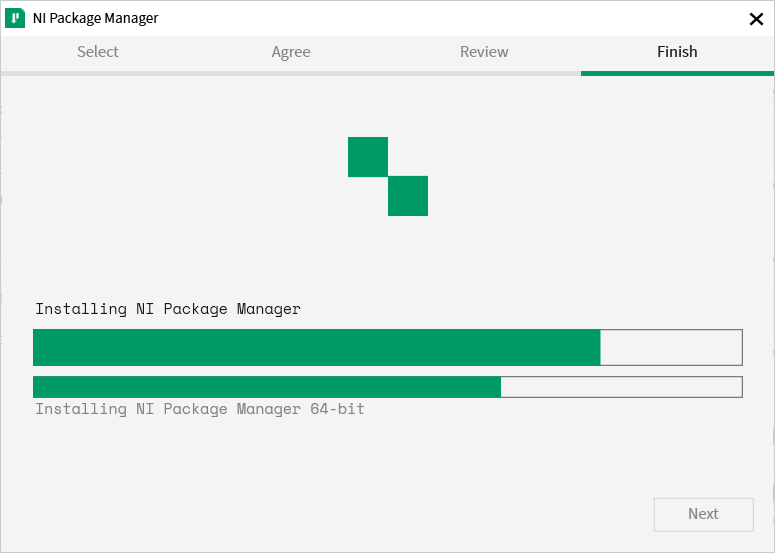

NI Package Manager Installation

Installation progress of the NI Package Manager will be tracked in this window.

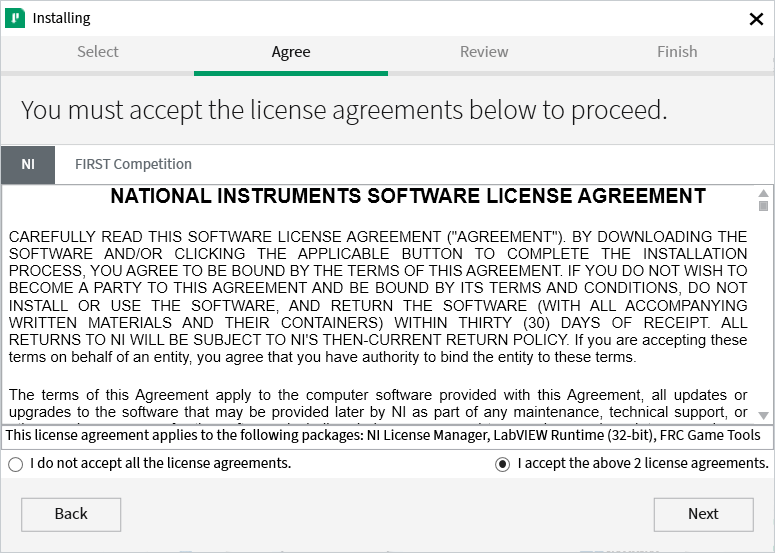

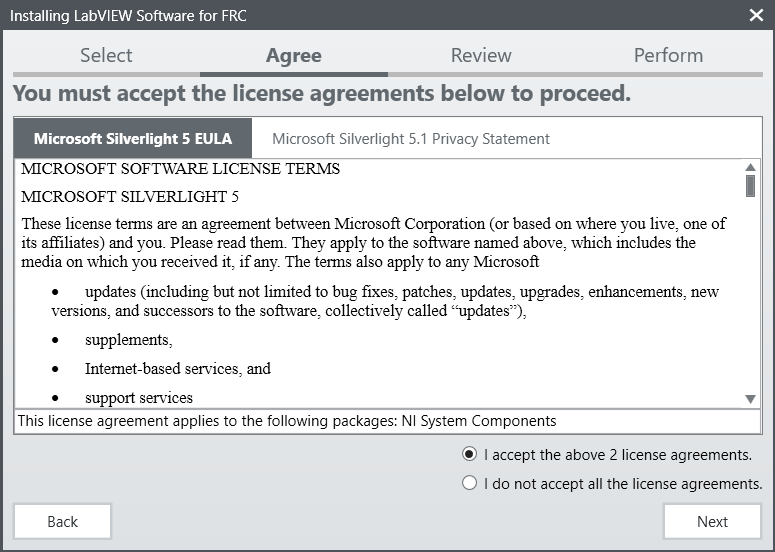

License Agreements

Select I accept… then click Next

Go ahead and press I accept… then click Next, confirming that you agree to the NI License agreement.

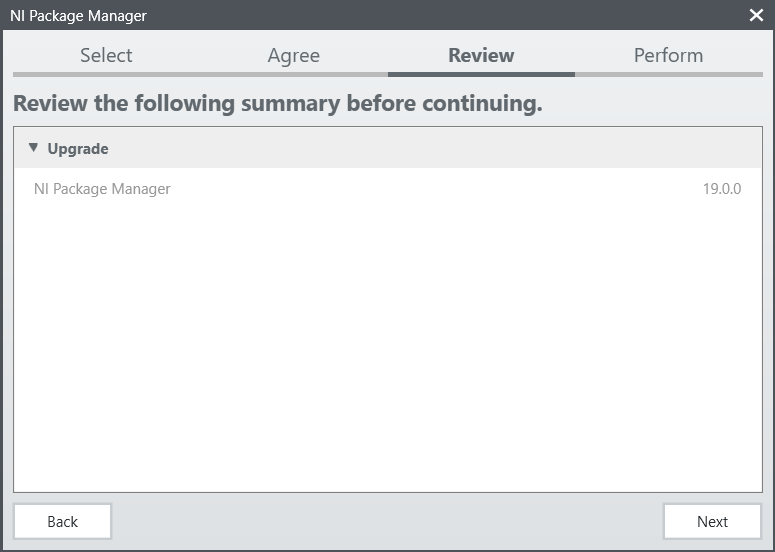

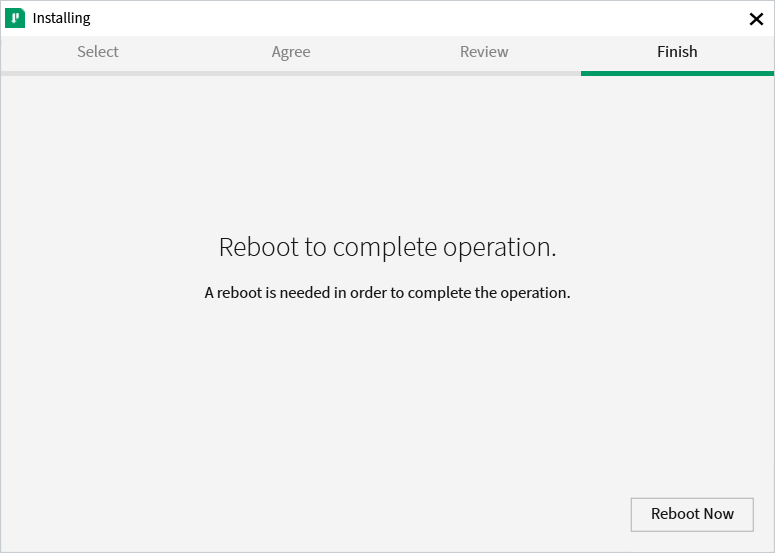

Detail Progress

This screen shows the installation progress. Press Next when it finishes.

NI Activation Wizard

Log into your ni.com account. If you don’t have an account, select Create account to create a free account.

Enter the serial number. Click Activate.

Note

If this is the first time activating this year’s software on this account, you will see the message shown above about a valid license not being found. You can ignore this.

If your products activate successfully, an Activation Successful message will appear. If the serial number was incorrect, a text box will appear where you can re-enter the number and select Try Again. If everything activated successfully, click Next.

Click Close.

NI Update Service

You will be prompted whether to enable the NI update service. You can choose to not enable the update service.

Warning

It is not recommended to install these updates unless directed by FRC through our usual communication channels (FRC Blog, Team Updates or E-mail Blasts).

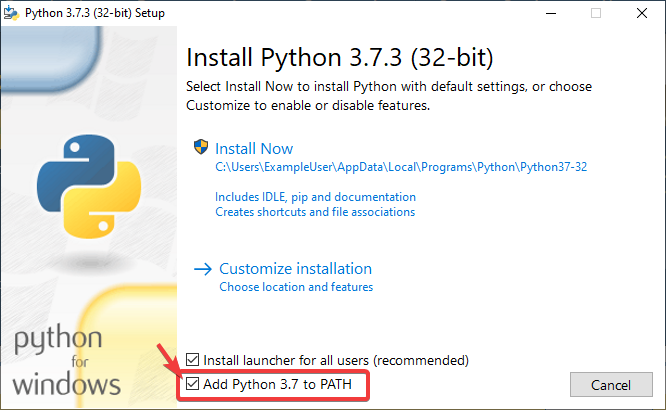

WPILib Installation Guide

This guide is intended for Java and C++ teams. LabVIEW teams can skip to Installing LabVIEW for FRC (LabVIEW only). The screenshots below show Windows 10, but the steps are largely the same on all operating systems; notes point out where they differ.

Prerequisites

You can download the latest release of the installer from GitHub. Ensure that you download the correct binary for your OS and architecture.

Warning

Windows 7 users must have an updated system with this update installed. macOS and Linux require python3 to be installed.

Warning

The following OSes will be unsupported starting 2023: Ubuntu 18.04, Windows 7, Windows 8.1, and any 32-bit Windows. macOS 10.14 is no longer supported as of 2022.

WPILib is designed to install to different folders for different years, so that it is not necessary to uninstall a previous version before installing this year’s WPILib.

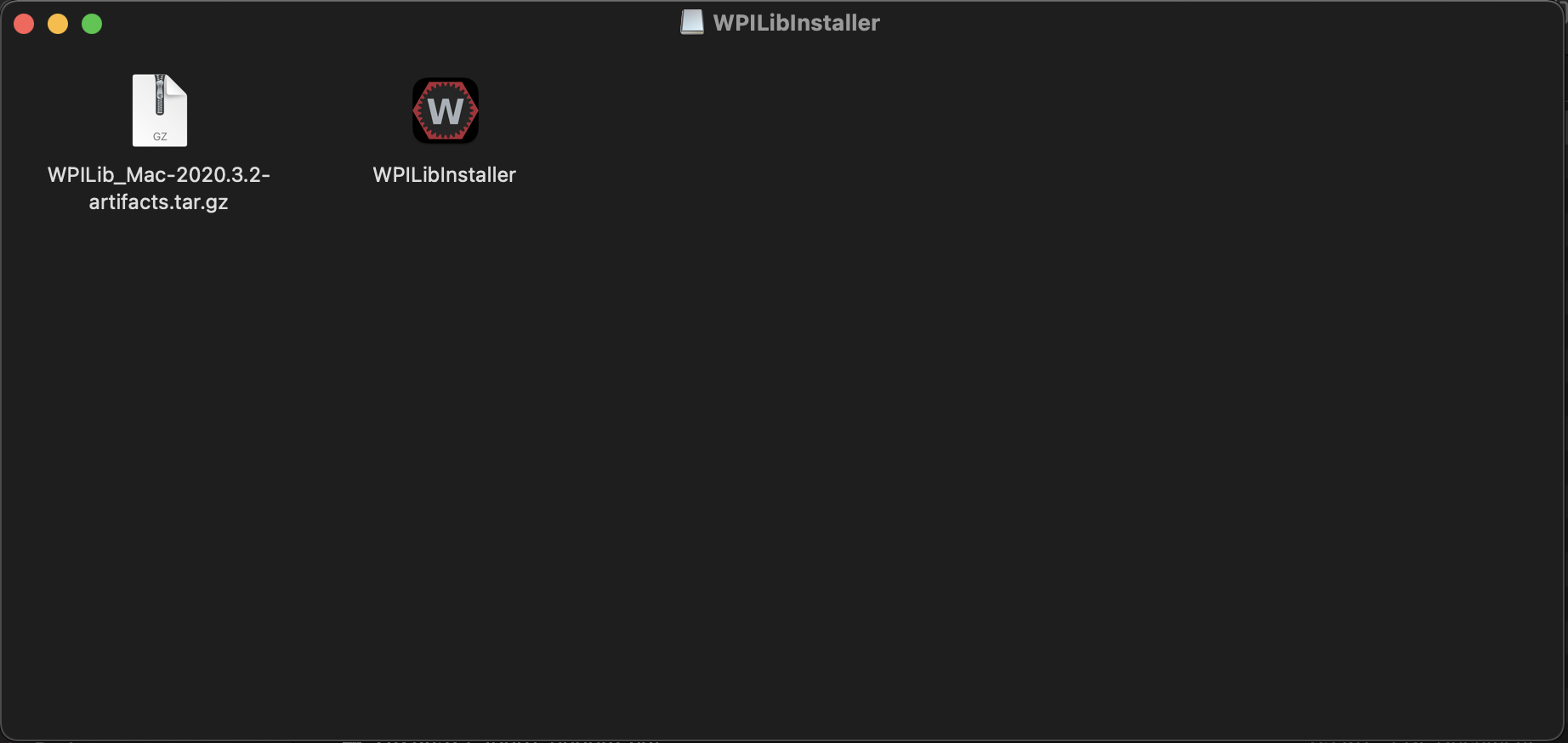

Extracting the Installer

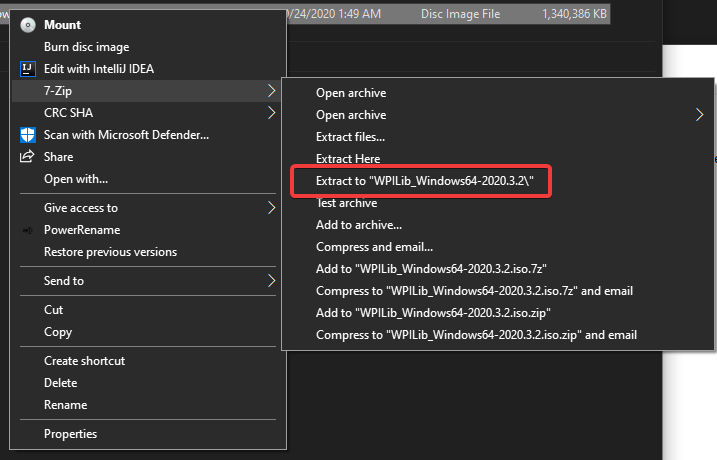

The WPILib installer is distributed as a disk image (.iso) for Windows, a .tar.gz archive for Linux, and a DMG for macOS.

Note

Other installed programs may associate with iso files and the mount option may not appear. If that software does not give the option to mount or extract the iso file, use 7-Zip as described below.

You can use 7-Zip to extract the disk image by right-clicking it, selecting 7-Zip, and then selecting Extract to…, then launch WPILibInstaller.exe.

macOS users can double click on the downloaded DMG and then select WPILibInstaller to launch the application.

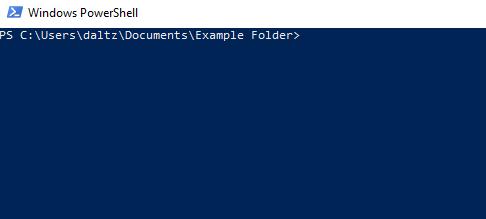

Linux users should extract the downloaded .tar.gz and then launch WPILibInstaller. Ubuntu treats executables in the file explorer as shared libraries, so double-clicking won’t run them. Instead, run the following commands in a terminal, replacing <version> with the version you’re installing.

$ tar -xf WPILib_Linux-<version>.tar.gz

$ cd WPILib_Linux-<version>/

$ ./WPILibInstaller

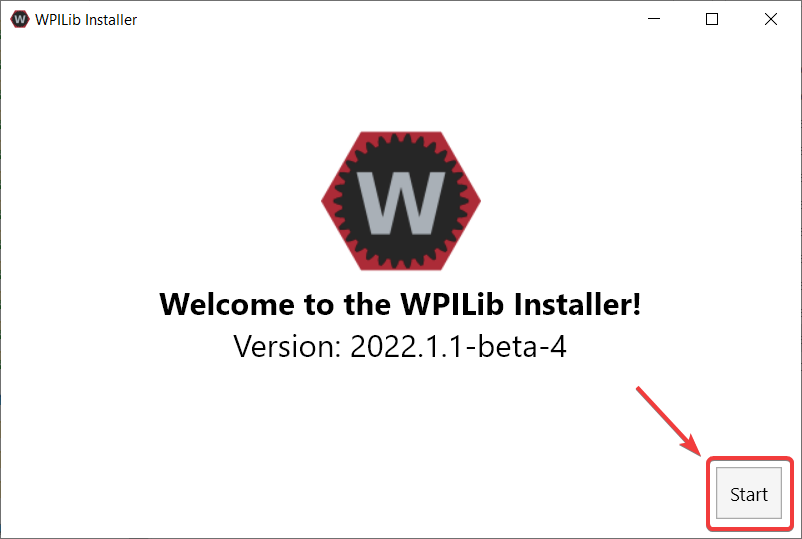

Running the Installer

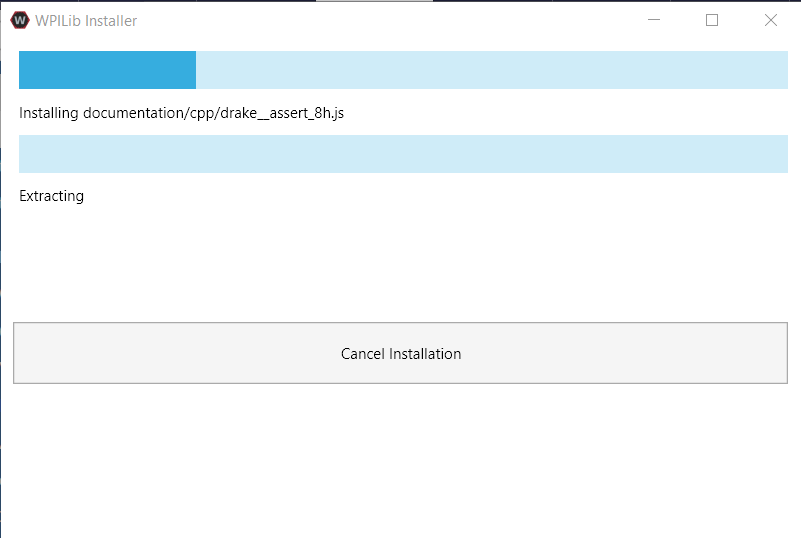

Upon opening the installer, you’ll be presented with the below screen. Go ahead and press Start.

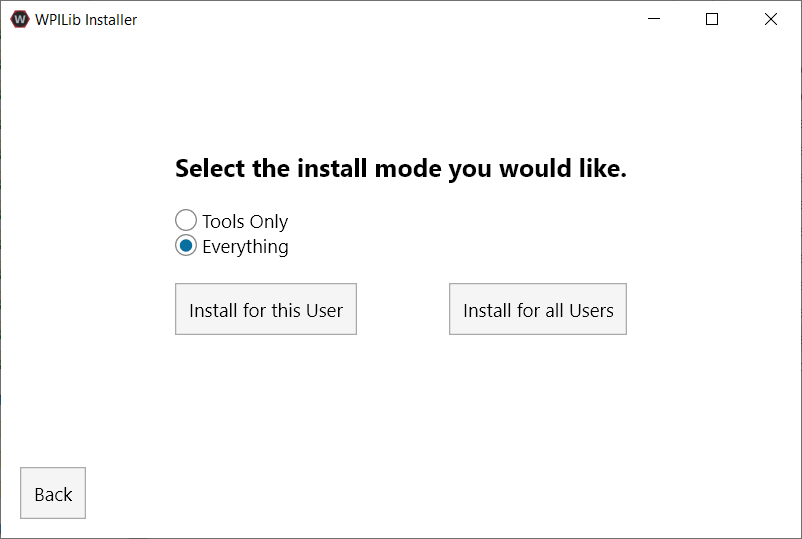

The next screen lists the options included with the WPILib installation.

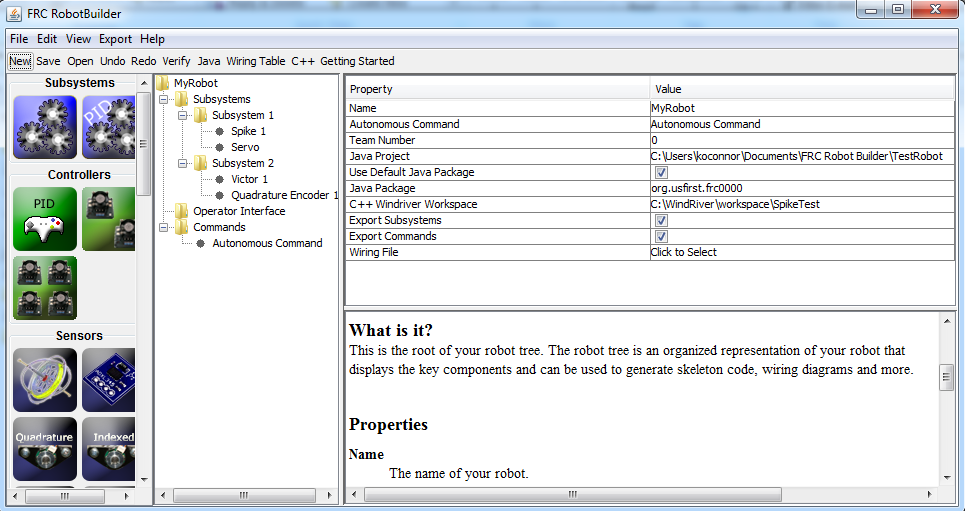

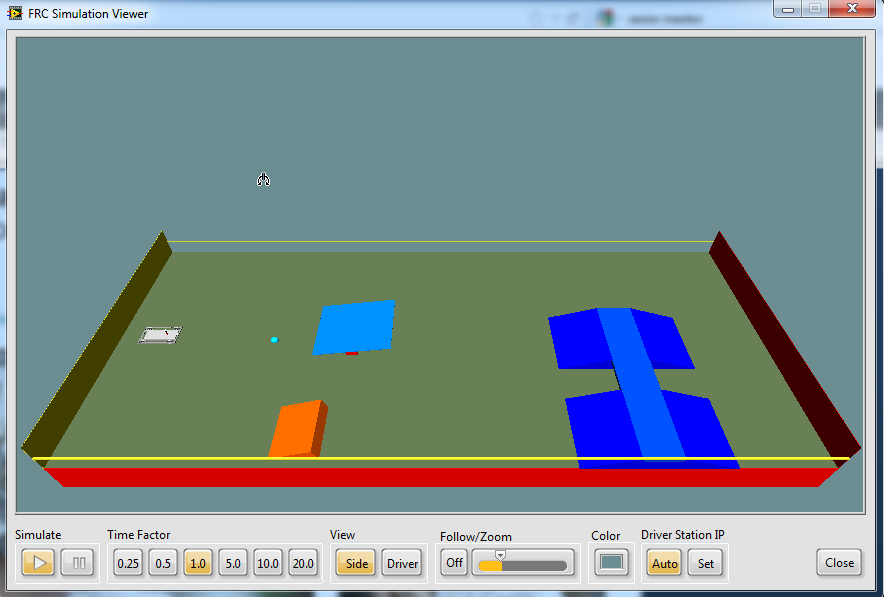

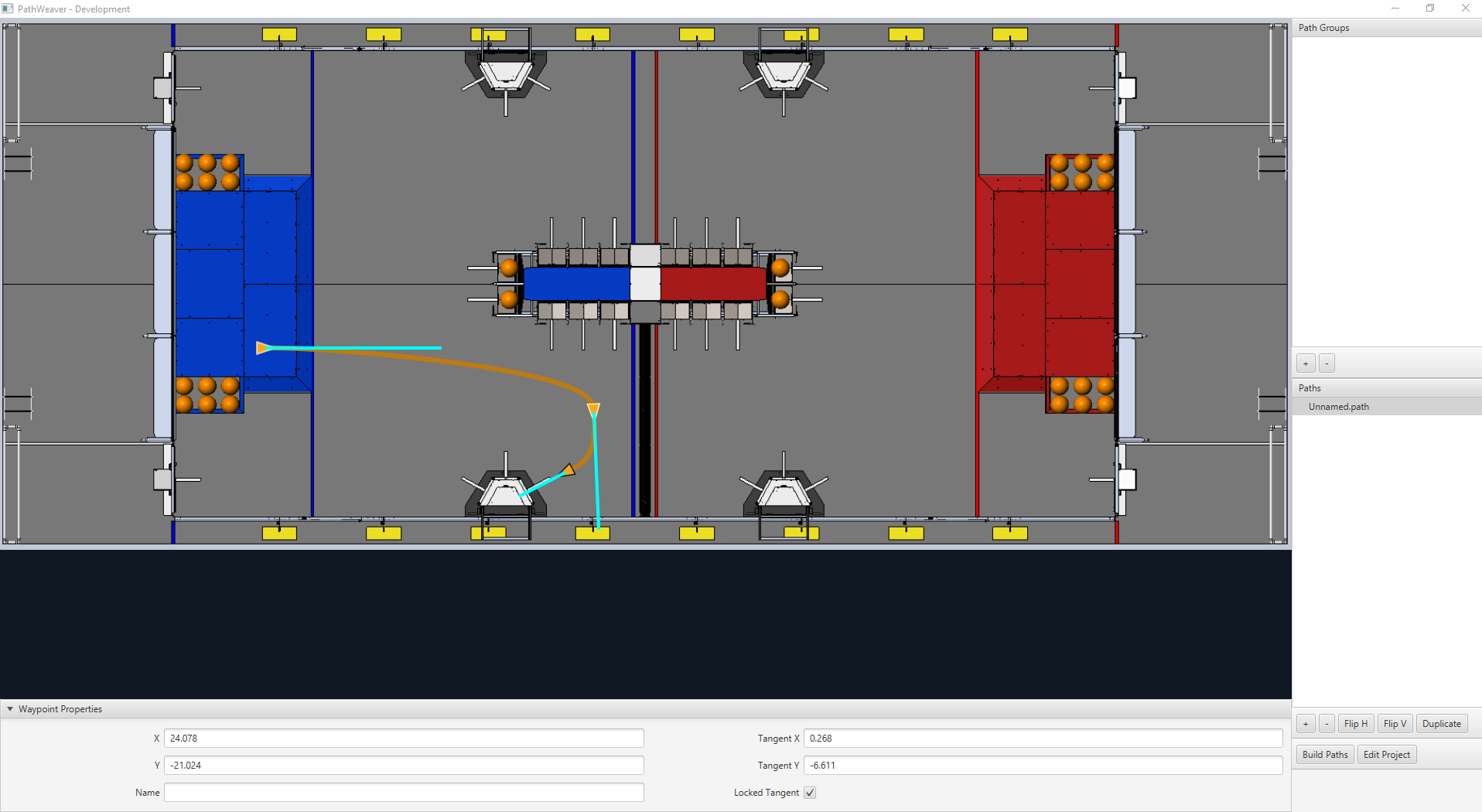

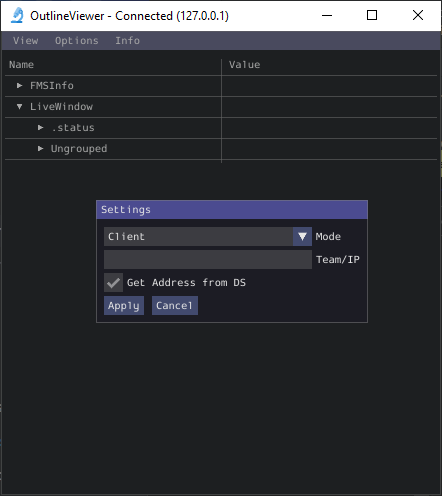

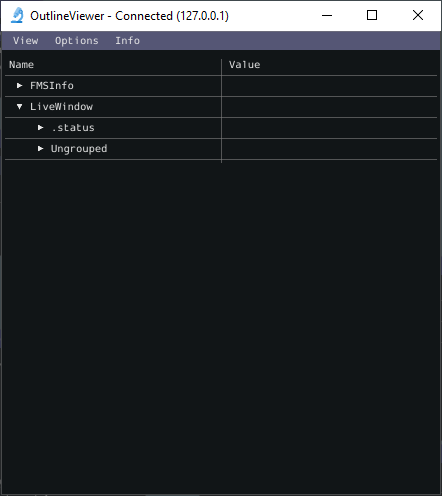

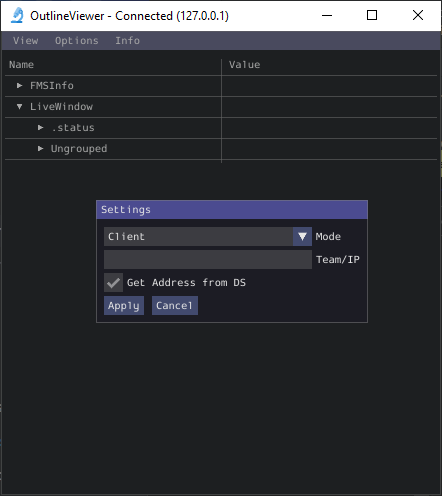

Tools Only installs just the WPILib tools (Pathweaver, Shuffleboard, RobotBuilder, SysID, Glass, and OutlineViewer) and the JDK.

Everything installs the full development environment (VS Code, extensions, all dependencies), the WPILib tools, and the JDK.

You will notice two buttons, Install for this User and Install for all Users. Install for this User only installs it on the current user account, and does not require administrator privileges. However, Install for all Users installs the tools for all system accounts and will require administrator access. Install for all Users is not an option for macOS and Linux.

Note

If you select Install for all Users, Windows will prompt for administrator access through UAC during installation.

Select the option that is appropriate for you, and you’ll be presented with the following installation screen.

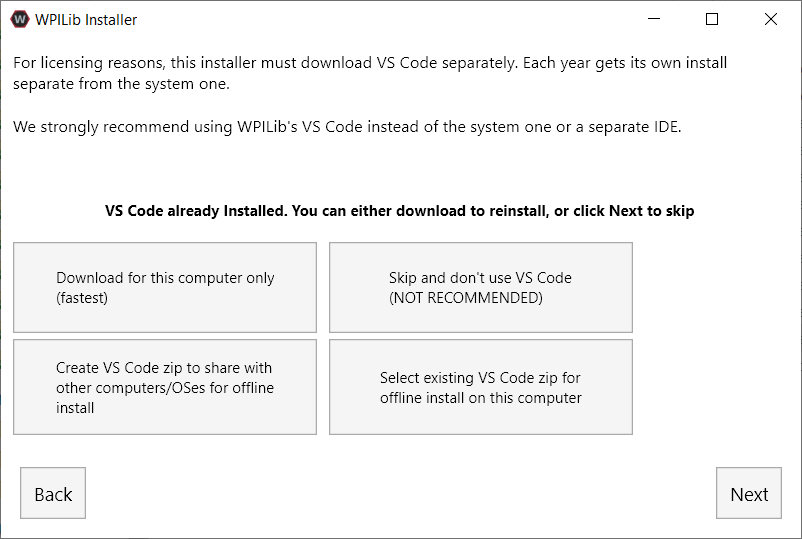

This next screen involves downloading VS Code. Unfortunately, due to licensing reasons, VS Code cannot be bundled with the installer.

Download for this computer only

This downloads VS Code only for the current platform, which is also the smallest download.

Skip and don’t use VS Code

Skips installing VS Code. Useful for advanced installations or configurations. Generally not recommended.

Select existing VS Code zip for offline install on this computer

Selecting this option will bring up a prompt allowing you to select a pre-existing zip file of VS Code that has been downloaded by the installer previously. This option does not let you select an already installed copy of VS Code on your machine.

Create VS Code zip to share with other computers/OSes for offline install

This option downloads and saves a copy of VS Code for all platforms, which is useful for sharing the copy of the installer.

Select Download for this computer only. This begins the download, which can take a while depending on internet connectivity (it’s ~100MB). Once the download is done, select Next. You should be presented with a screen that looks similar to the one below.

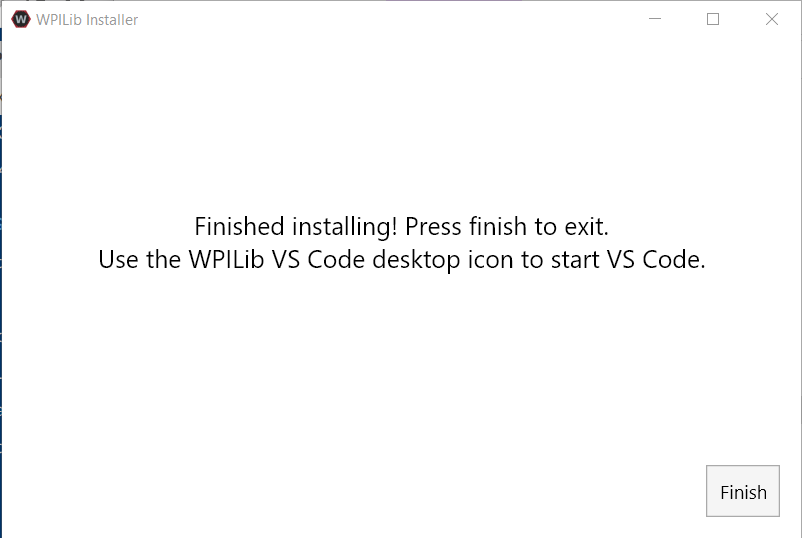

After installation is complete, you will be presented with the finished screen.

Important

WPILib installs a separate version of VS Code. It does not use an already existing installation. Each year has its own copy of the tools appended with the year, for example WPILib VS Code 2022. Please launch the WPILib VS Code and not a system installed copy!

Congratulations, the WPILib development environment and tooling is now installed on your computer! Press Finish to exit the installer.

Post-Installation

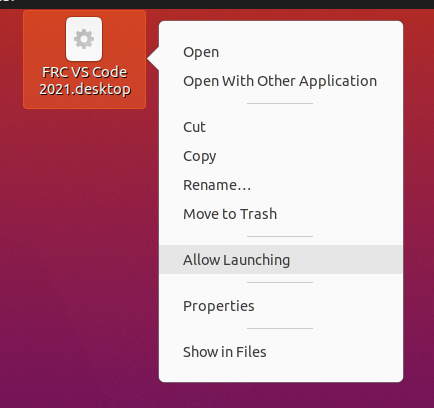

Some operating systems require some final action to complete installation.

On macOS, the installer opens the WPILib VS Code folder after installation. Drag the VS Code application to the dock, then eject the WPILibInstaller image from the desktop.

Some versions of Linux (e.g. Ubuntu 20.04) require you to give the desktop shortcut the ability to launch. Right click on the desktop icon and select Allow Launching.

Note

Installing desktop tools and rebooting will create a folder on the desktop called YYYY WPILib Tools, where YYYY is the current year. Desktop tool shortcuts are not available on Linux and macOS.

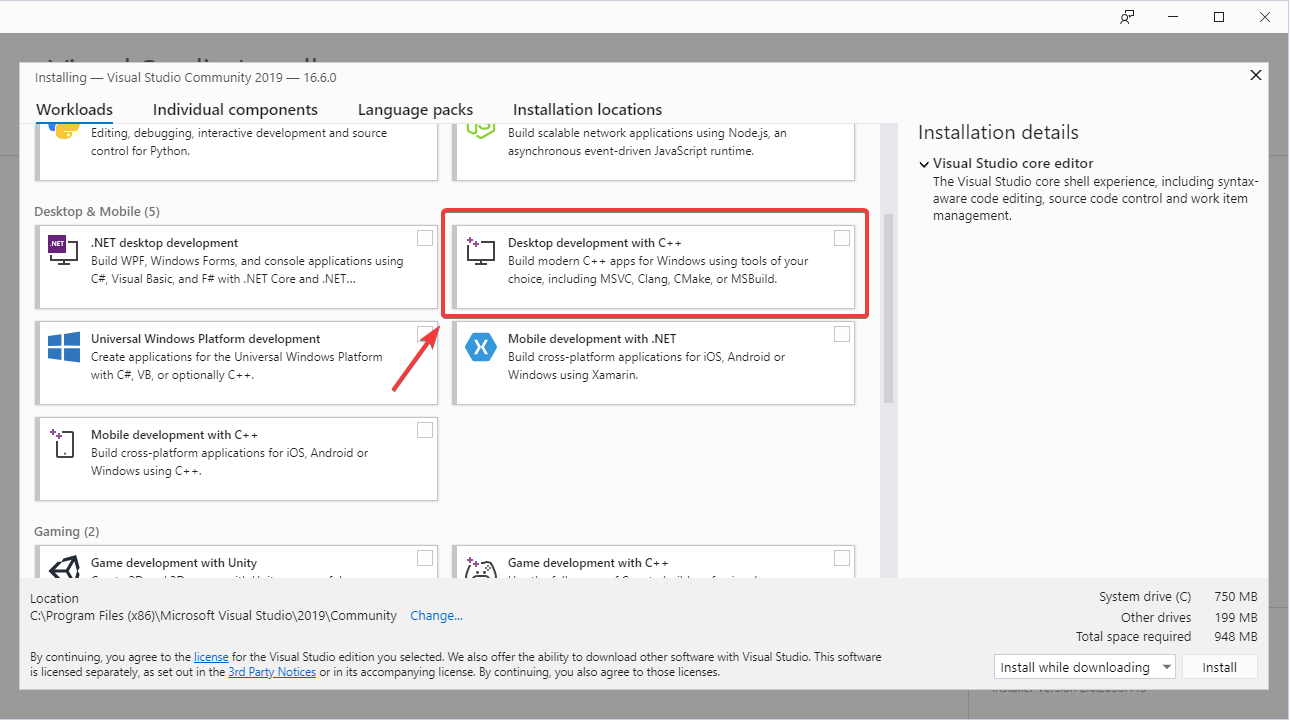

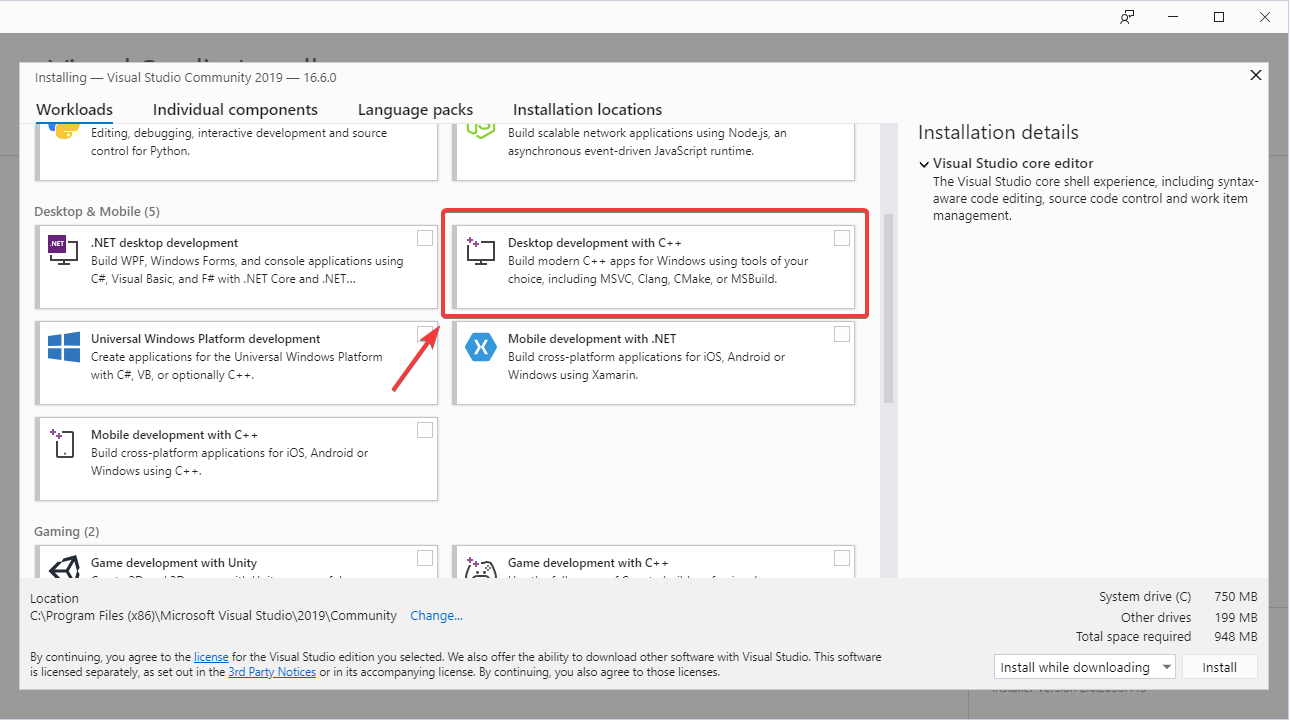

Additional C++ Installation for Simulation

C++ robot simulation requires a native compiler to be installed. For Windows, this would be Visual Studio 2022 or 2019 (not VS Code), macOS requires Xcode, and Linux (Ubuntu) requires the build-essential package.

Ensure the Desktop Development with C++ option is checked in the Visual Studio installer for simulation support.

What is Installed?

The Offline Installer installs the following components:

Visual Studio Code - The supported IDE for 2019 and later robot code development. The offline installer sets up a separate copy of VS Code for WPILib development, even if you already have VS Code on your machine. This is done because some of the settings that make the WPILib setup work may break existing workflows if you use VS Code for other projects.

C++ Compiler - The toolchains for building C++ code for the roboRIO

Gradle - The specific version of Gradle used for building/deploying C++ or Java robot code

Java JDK/JRE - A specific version of the Java JDK/JRE that is used to build Java robot code and to run any of the Java based Tools (Dashboards, etc.). This exists side by side with any existing JDK installs and does not overwrite the JAVA_HOME variable

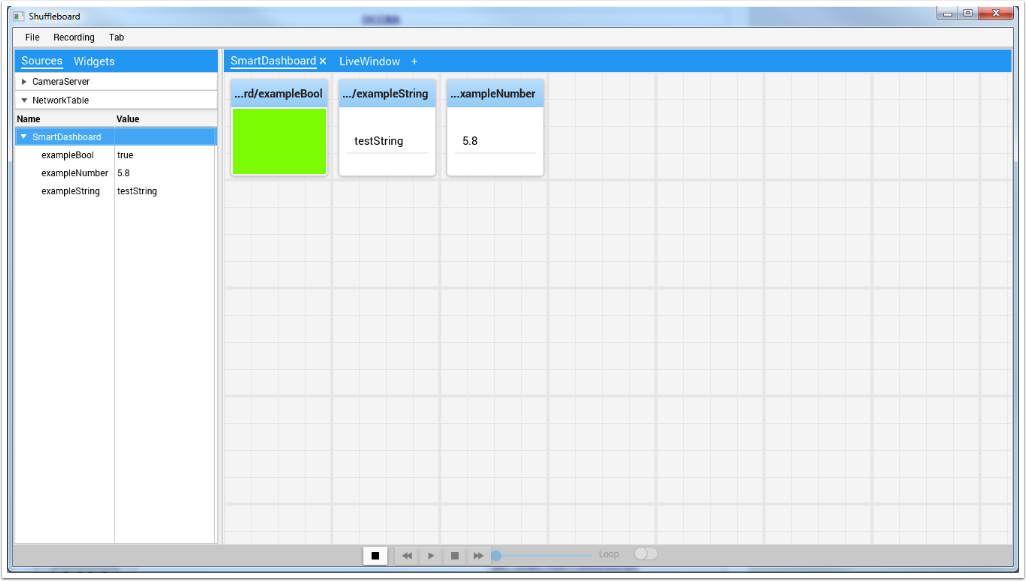

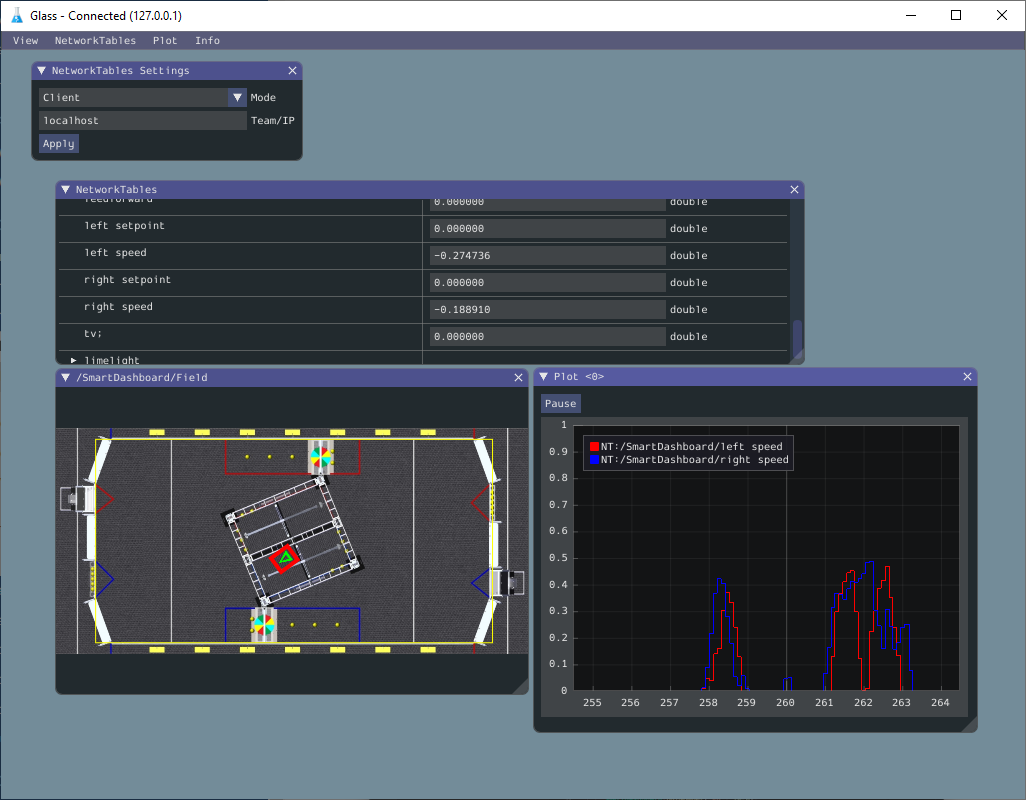

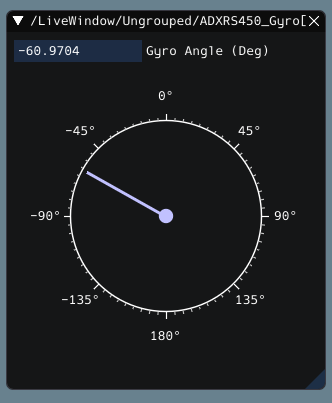

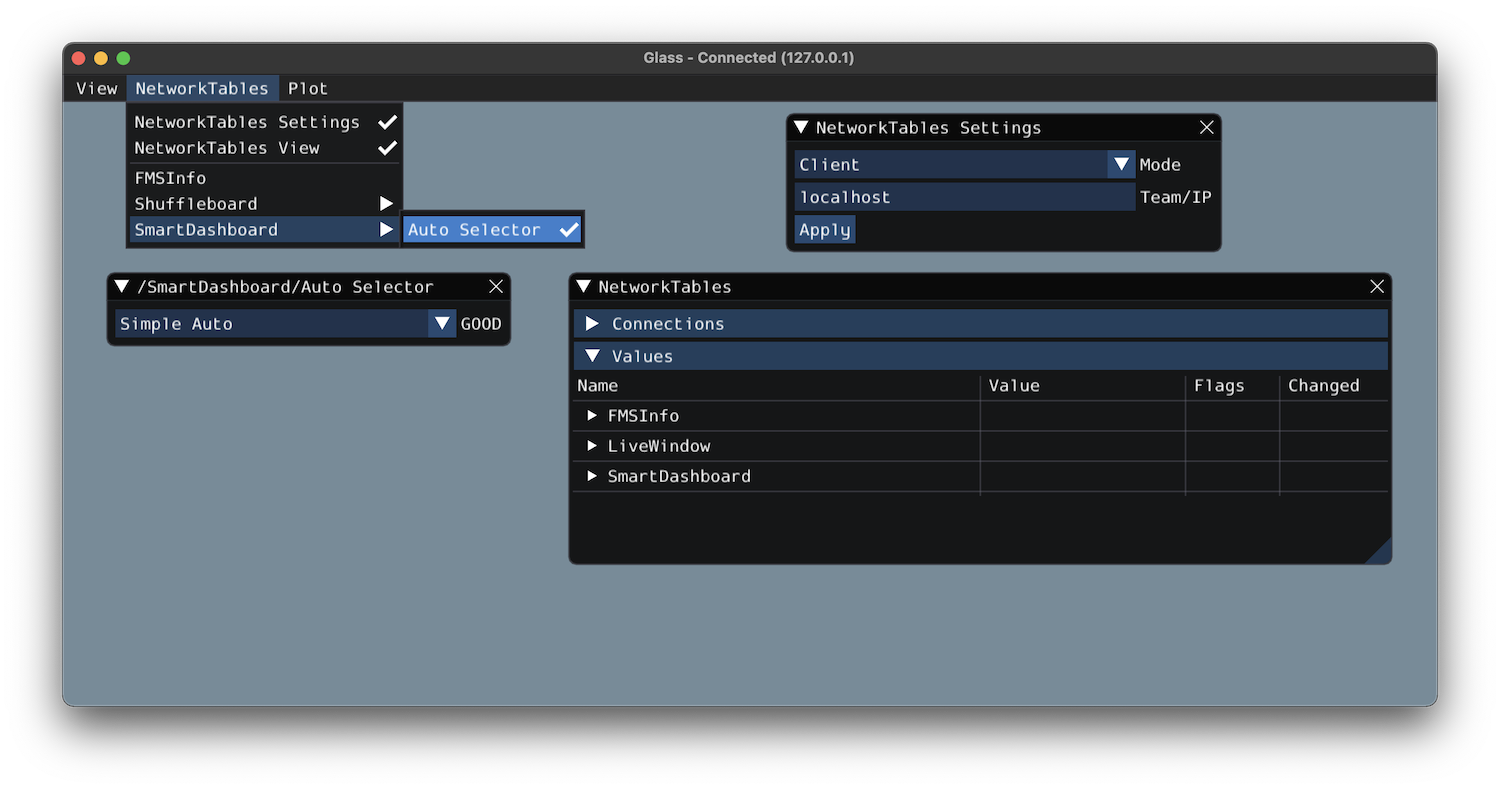

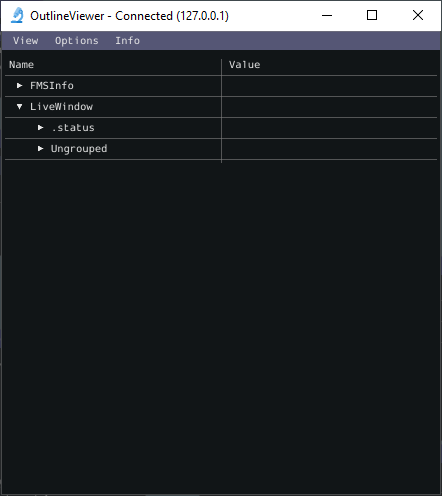

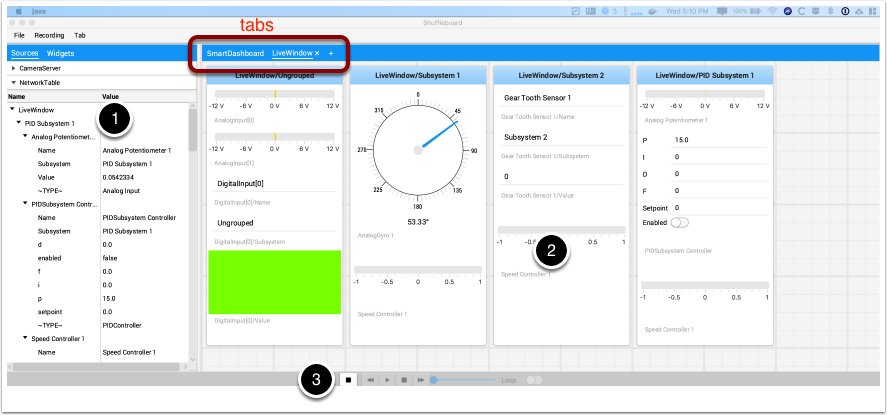

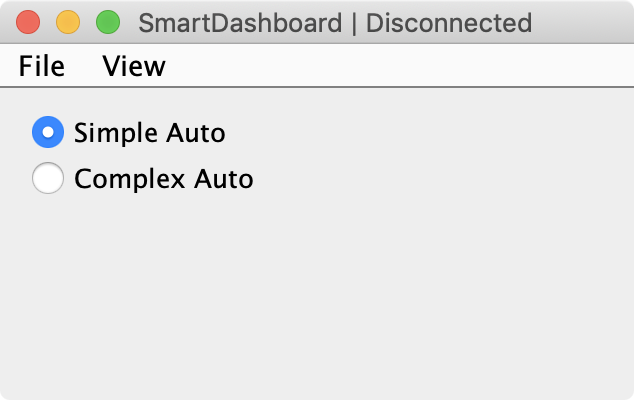

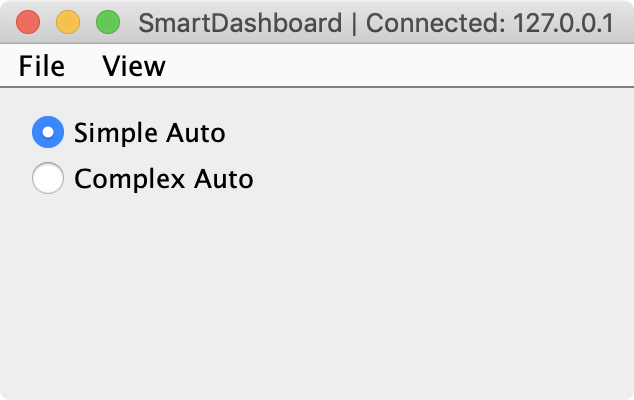

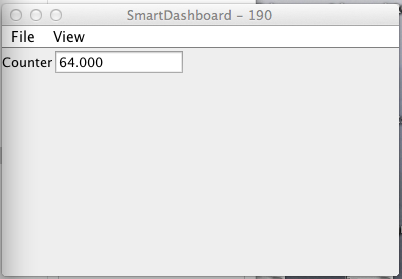

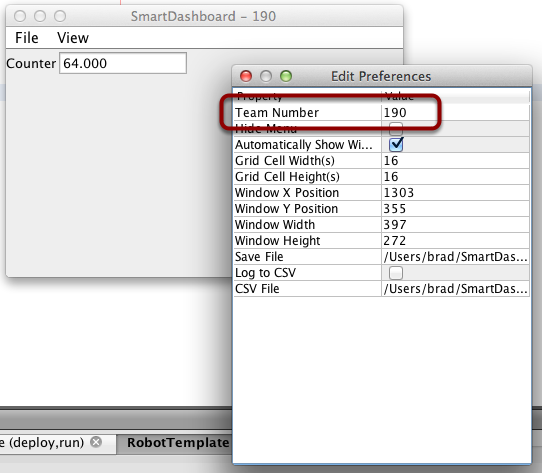

WPILib Tools - SmartDashboard, Shuffleboard, RobotBuilder, Outline Viewer, Pathweaver, Glass, SysID

WPILib Dependencies - OpenCV, etc.

VS Code Extensions - WPILib extensions for robot code development in VS Code

Uninstalling

WPILib is designed to install to different folders for different years, so that it is not necessary to uninstall a previous version before installing this year’s WPILib. However, the following instructions can be used to uninstall WPILib if desired.

On Windows:

Delete the appropriate wpilib folder (2019: c:\Users\Public\frc2019; 2020 and later: c:\Users\Public\wpilib\YYYY, where YYYY is the year to uninstall).

Delete the desktop icons at C:\Users\Public\Public Desktop.

Delete the path environment variables.

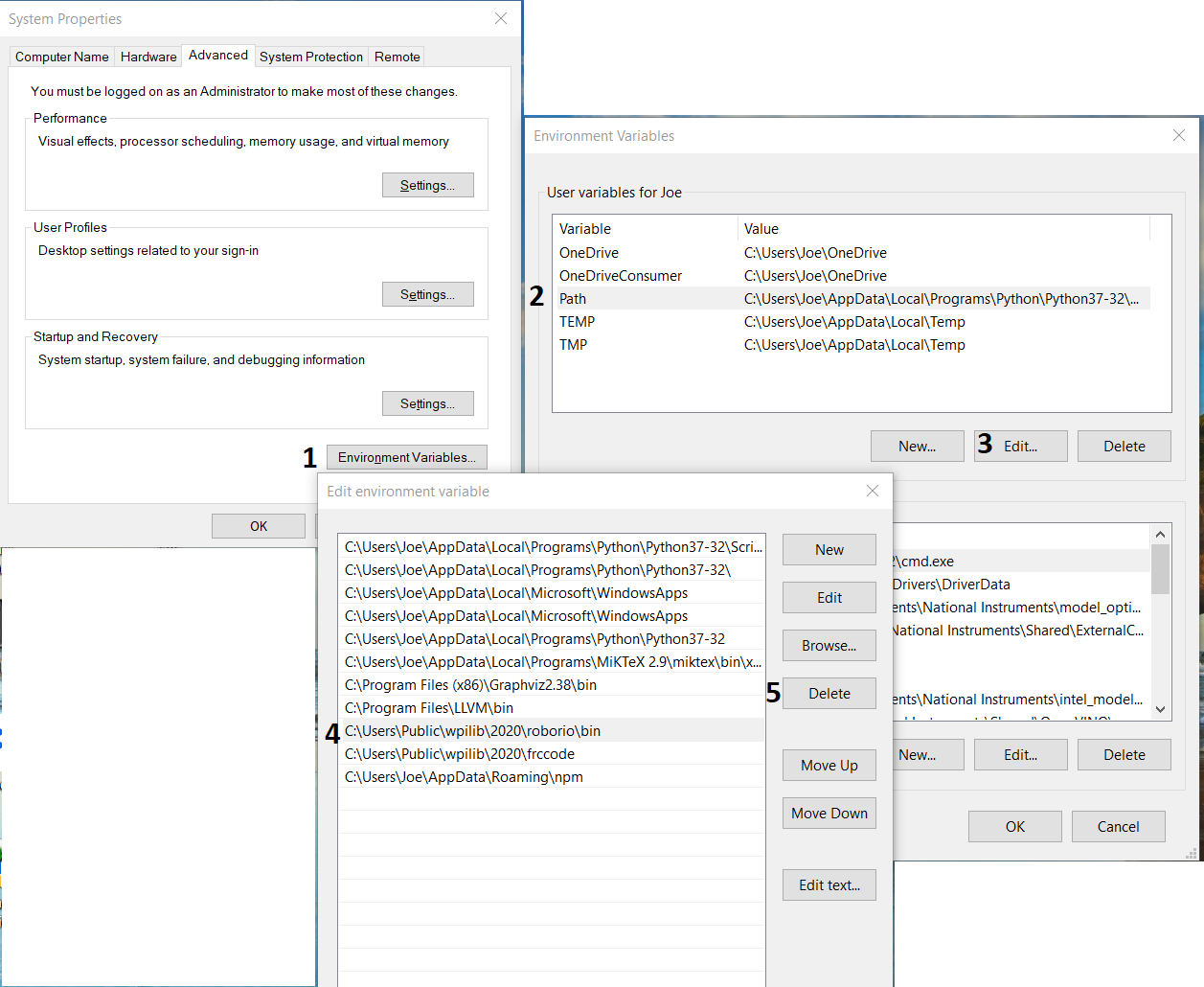

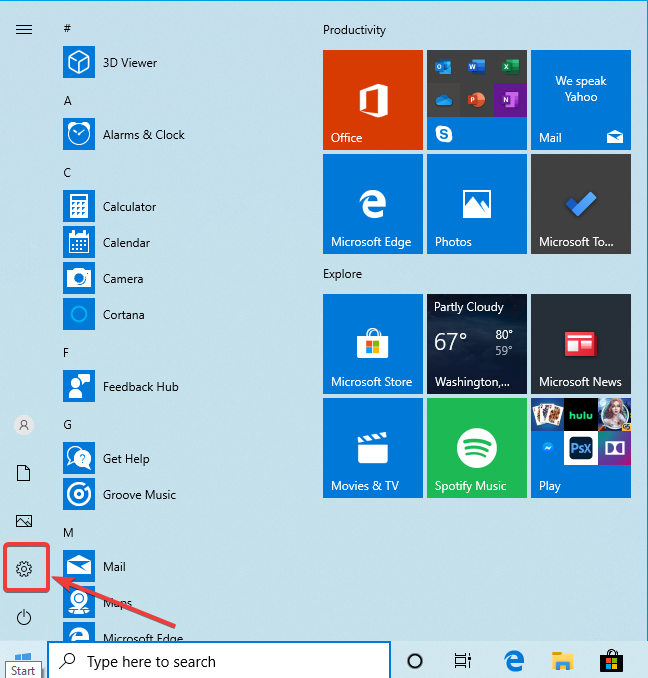

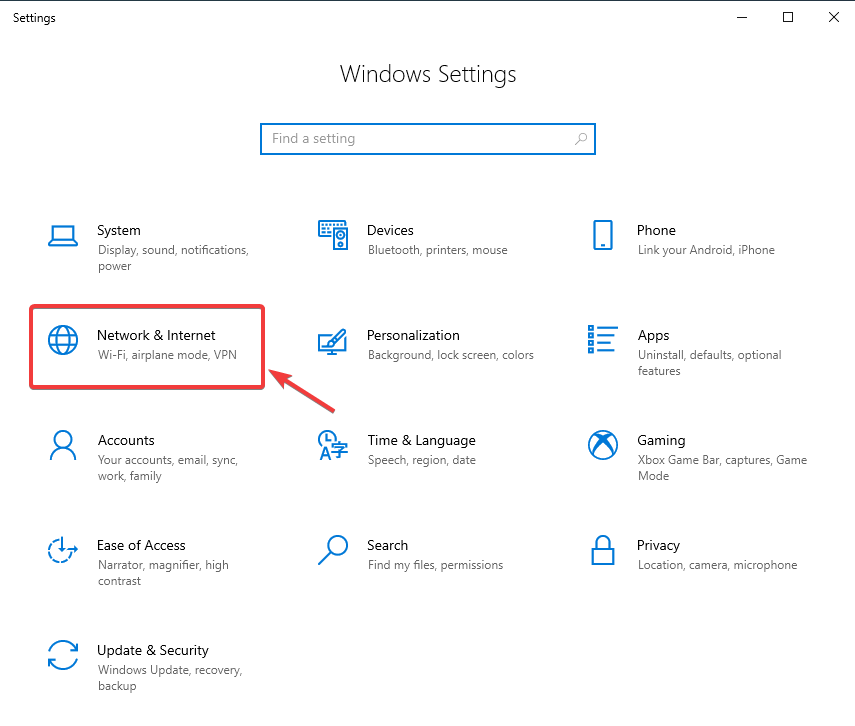

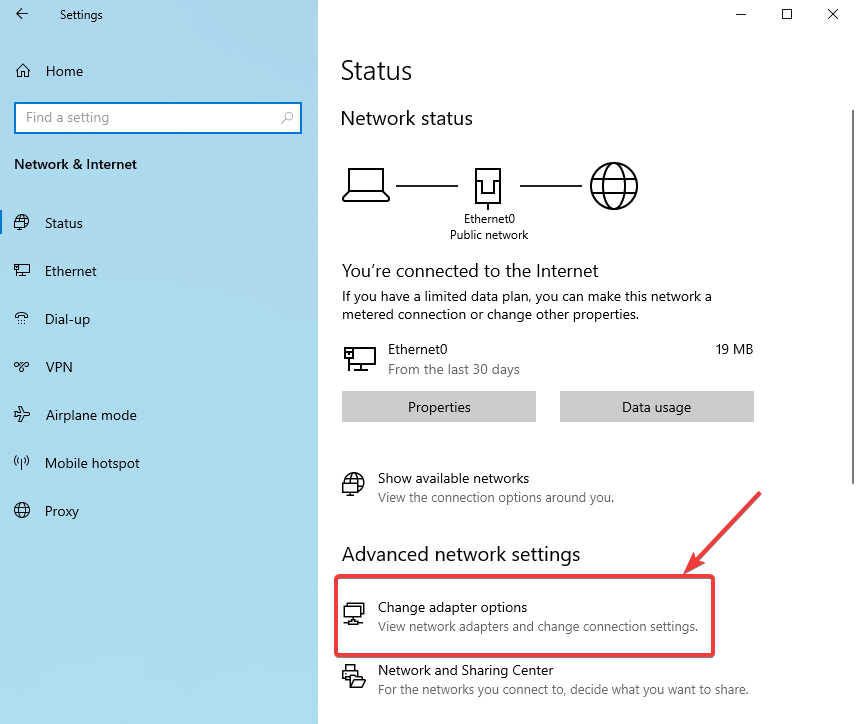

In the start menu, type environment and select “edit the system environment variables”

Click on the environment variables button (1).

In the user variables, select path (2) and then click on edit (3).

Select the path with roborio\bin (4) and click on delete (5).

Select the path with frccode and click on delete (5).

Repeat steps 3-6 in the System variables pane.
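The PATH cleanup above comes down to dropping every entry that contains roborio\bin or frccode. A minimal sketch of that filtering, assuming a semicolon-separated Windows PATH value; `strip_wpilib_paths` is a hypothetical helper and the example entries are illustrative. It only computes the new string and does not edit the user or system variables, so the change itself should still be made through the dialog described above.

```python
# Substrings that identify WPILib entries, per the steps above. Matching is
# case-insensitive because Windows paths are case-insensitive.
WPILIB_MARKERS = ("roborio\\bin", "frccode")


def strip_wpilib_paths(path_value: str) -> str:
    """Return a copy of a Windows PATH string without WPILib entries."""
    kept = []
    for entry in path_value.split(";"):
        lowered = entry.lower()
        # Drop empty fragments and anything matching a WPILib marker.
        if not entry or any(marker in lowered for marker in WPILIB_MARKERS):
            continue
        kept.append(entry)
    return ";".join(kept)


example = ";".join([
    r"C:\Windows\system32",
    r"C:\Users\Public\wpilib\2022\roborio\bin",  # illustrative WPILib entry
    r"C:\Users\Public\wpilib\2022\frccode",      # illustrative WPILib entry
    r"C:\Program Files\Git\cmd",
])
print(strip_wpilib_paths(example))
# C:\Windows\system32;C:\Program Files\Git\cmd
```

Filtering by substring rather than by exact path keeps the sketch independent of which year's folder the entries point to.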

On macOS, delete the appropriate wpilib folder (2019: ~/frc2019; 2020 and later: ~/wpilib/YYYY, where YYYY is the year to uninstall).

On Linux, delete the appropriate wpilib folder (2019: ~/frc2019; 2020 and later: ~/wpilib/YYYY, where YYYY is the year to uninstall), for example: rm -rf ~/wpilib/YYYY
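The folder to delete depends on both the year and the operating system. A minimal sketch that maps the two onto the layouts listed above; `wpilib_folder` is a hypothetical helper, and it only builds the path string without deleting anything.

```python
from pathlib import PurePosixPath, PureWindowsPath


def wpilib_folder(year: int, windows: bool) -> str:
    """Return the WPILib folder to delete for a given season.

    2019 used a top-level frc2019 folder; 2020 and later use wpilib/<year>.
    Windows installs live under the Public user folder, macOS and Linux
    installs under the home directory (~).
    """
    if year < 2019:
        raise ValueError("only the 2019+ layouts are described here")
    base = PureWindowsPath("C:/Users/Public") if windows else PurePosixPath("~")
    folder = base / "frc2019" if year == 2019 else base / "wpilib" / str(year)
    return str(folder)


print(wpilib_folder(2022, windows=True))   # C:\Users\Public\wpilib\2022
print(wpilib_folder(2019, windows=False))  # ~/frc2019
```

After checking the printed path, it could be removed on macOS or Linux with `shutil.rmtree(Path(p).expanduser())`, which is just as irreversible as `rm -rf`.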

Next Steps

Congratulations! You have completed step 2 and should now have a working software development environment! Step 3 of this tutorial covers updating the hardware so that you can program it, while Step 4 showcases programming a robot in the VS Code Integrated Development Environment (IDE). For further information you can read through the VS Code section to familiarize yourself with the IDE.

Specific articles that are advised to be read are:

Additionally, you may need to do extra configuration that is applicable to your team’s robot. Please utilize the search feature to find necessary documentation.

Note

It’s important that teams using 3rd-party CAN motor controllers read the Installing 3rd Party Libraries article, as extra steps are required to code for these devices.

Step 3: Preparing Your Robot

Warning

The CAN Web Dashboard plugin is no longer supported by the roboRIO Web Dashboard. To configure CTRE CAN devices such as the PCM and PDP, use CTRE Phoenix Tuner.

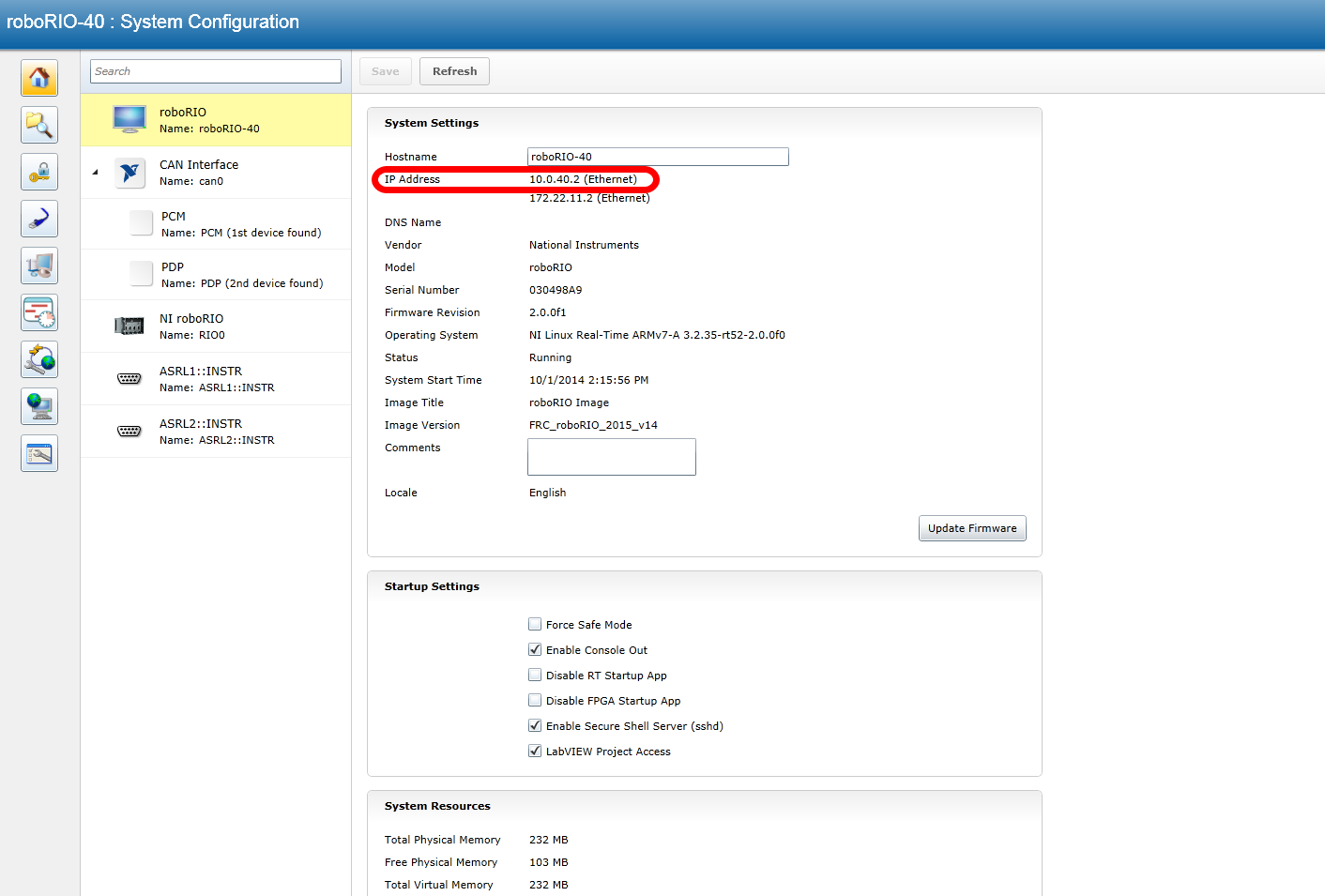

Imaging your roboRIO

Warning

Before imaging your roboRIO, you must have completed installation of the FRC Game Tools. You also must have the roboRIO power properly wired to the Power Distribution Panel. Make sure the power wires to the roboRIO are secure and that the connector is secured firmly to the roboRIO (4 total screws to check).

Important

The first time a roboRIO 2 is imaged (or if the microSD card is replaced with a new card), it must be imaged using the roboRIO 2.0 microSD card imaging process.

Configuring the roboRIO

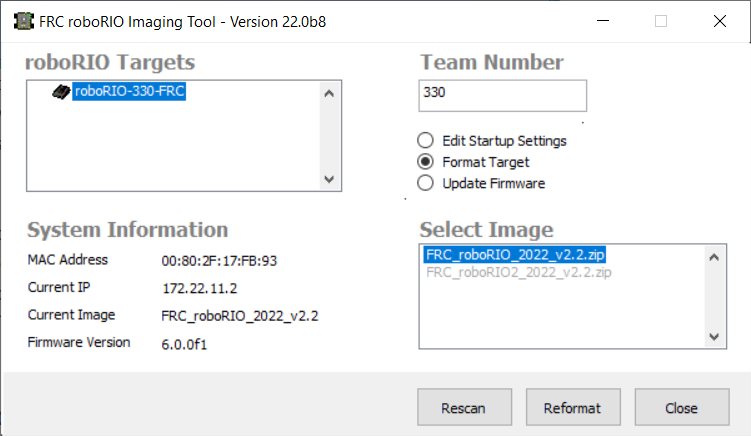

The roboRIO Imaging Tool will be used to image your roboRIO with the latest software.

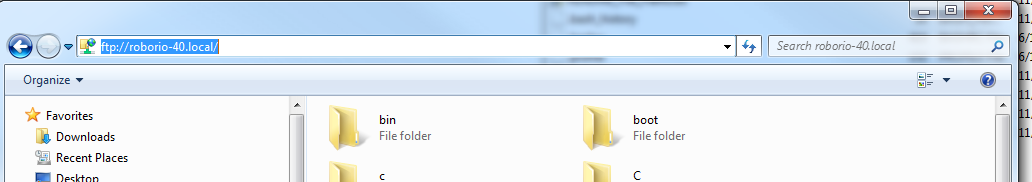

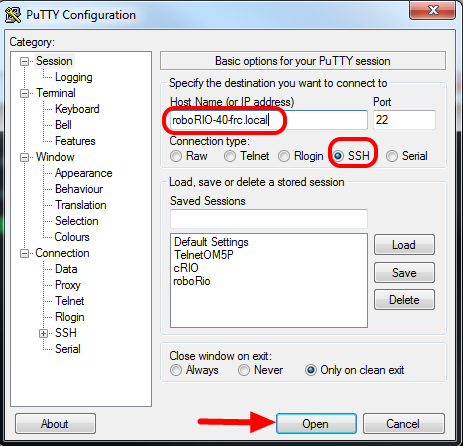

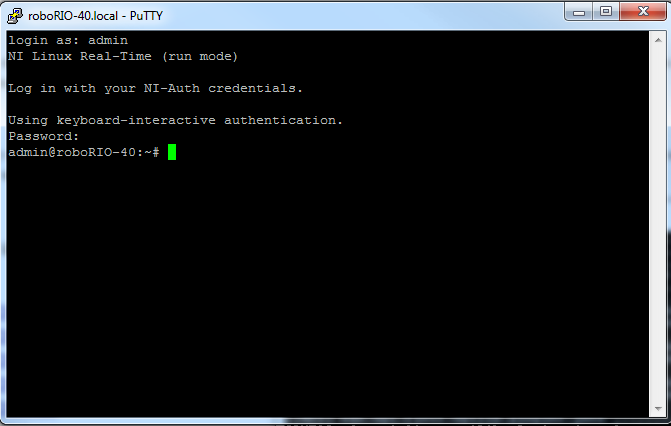

USB Connection

Connect a USB cable from the roboRIO USB Device port to the PC. This requires a USB Type A male (standard PC end) to Type B male cable (square with 2 cut corners), most commonly found as a printer USB cable.

Note

The roboRIO should only be imaged via the USB connection. It is not recommended to attempt imaging using the Ethernet connection.

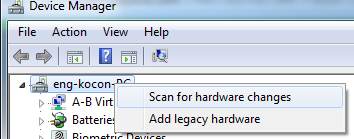

Driver Installation

The device driver should install automatically. If you see a “New Device” pop-up in the bottom right of the screen, wait for the driver install to complete before continuing.

Launching the Imaging Tool

The roboRIO imaging tool and latest image are installed with the NI FRC® Game Tools. Launch the imaging tool by double clicking on the shortcut on the Desktop. If you have difficulties imaging your roboRIO, you may need to try right-clicking on the icon and selecting Run as Administrator instead.

Note

The roboRIO imaging tool is also located at C:\Program Files (x86)\National Instruments\LabVIEW YYYY\project\roboRIO Tool, where YYYY is the current year minus 1. For example, in 2020 the directory is LabVIEW 2019.
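The year offset in the note above is easy to get wrong. A minimal sketch that builds the default path for a season from that rule of thumb; `roborio_imaging_tool_dir` is a hypothetical helper, and it assumes the default install location. If the folder is missing, check the National Instruments folder for the LabVIEW version actually installed.

```python
from pathlib import PureWindowsPath


def roborio_imaging_tool_dir(season_year: int) -> str:
    """Build the default roboRIO Imaging Tool folder for a season.

    Applies the note's rule of thumb that the LabVIEW folder is named for
    the season year minus one (2020 season -> LabVIEW 2019).
    """
    labview_year = season_year - 1
    folder = PureWindowsPath(
        "C:/Program Files (x86)/National Instruments",
        f"LabVIEW {labview_year}",
        "project",
        "roboRIO Tool",
    )
    return str(folder)


print(roborio_imaging_tool_dir(2020))
# C:\Program Files (x86)\National Instruments\LabVIEW 2019\project\roboRIO Tool
```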

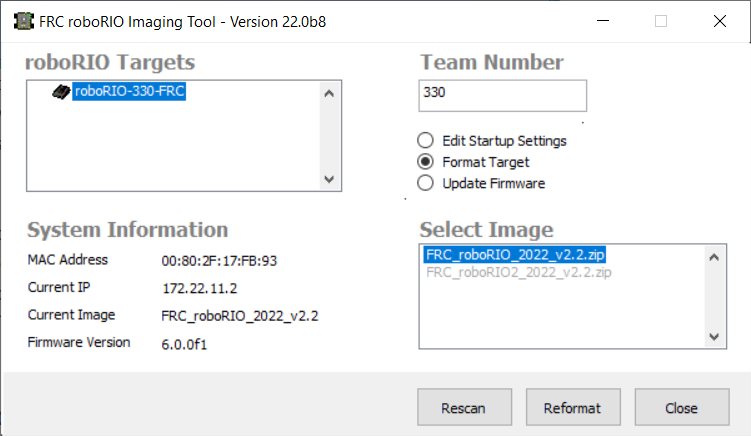

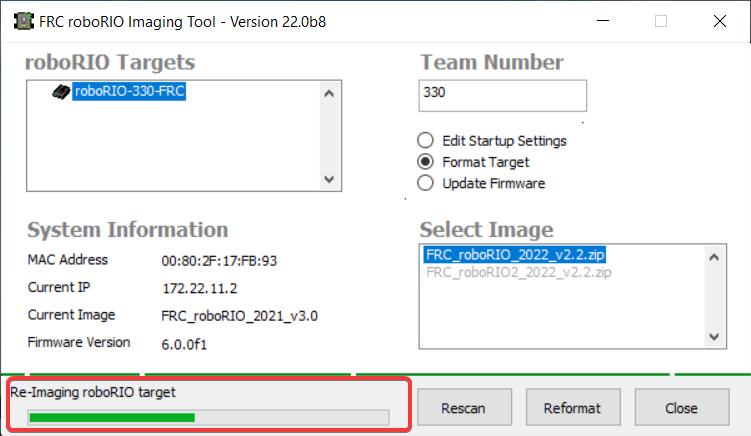

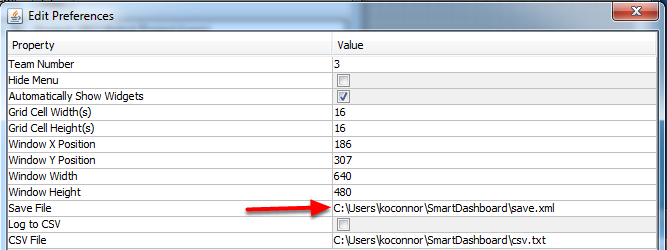

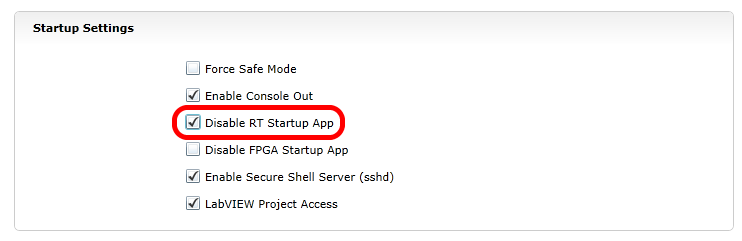

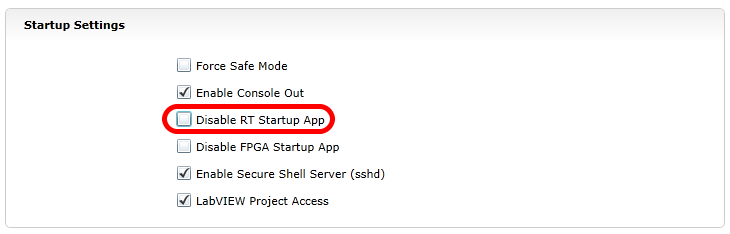

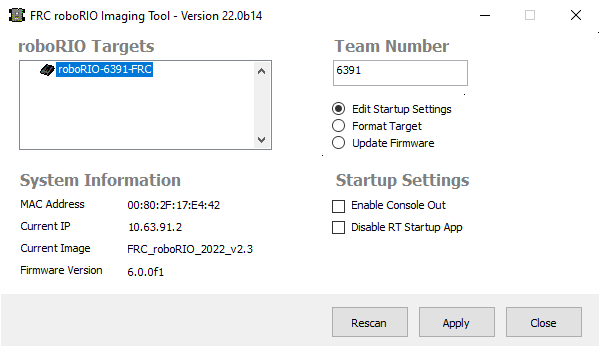

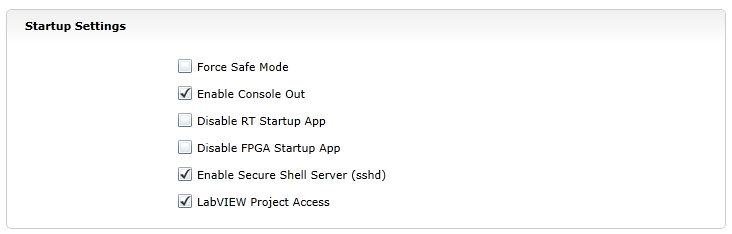

roboRIO Imaging Tool

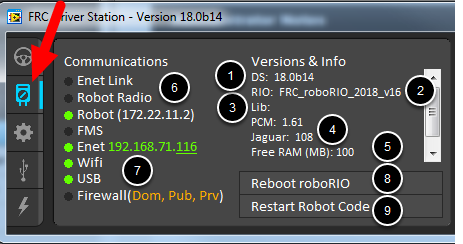

After launching, the roboRIO Imaging Tool will scan for available roboRIOs and indicate any found in the top left box. The bottom left box will show information and settings for the roboRIO currently selected. The right hand pane contains controls for modifying the roboRIO settings:

Edit Startup Settings - This option is used when you want to configure the startup settings of the roboRIO (the settings in the right pane), without imaging the roboRIO.

Format Target - This option is used when you want to load a new image on the roboRIO (or reflash the existing image). This is the most common option.

Update Firmware - This option is used to update the roboRIO firmware. For this season, the imaging tool will require roboRIO firmware to be version 5.0 or greater.

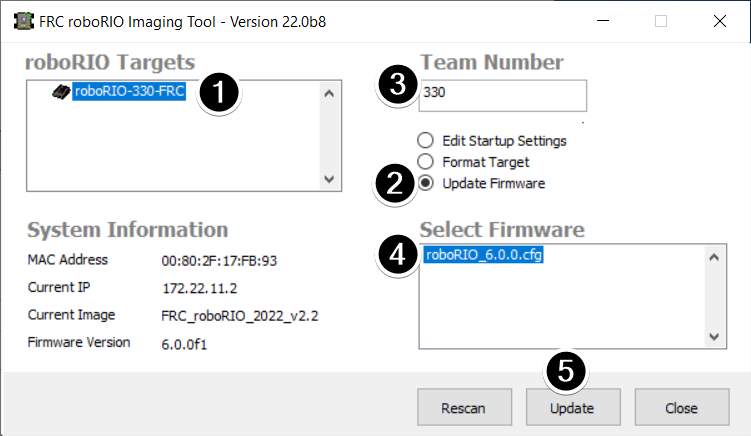

Updating Firmware

roboRIO firmware must be at least v5.0 to work with the 2019 or later image. If your roboRIO is at least version 5.0 (as shown in the bottom left of the imaging tool) you do not need to update.
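If you want to script the v5.0 check (for example, when preparing several roboRIOs), the comparison has to be numeric: as plain strings, "10.0" sorts before "5.0". A minimal sketch; `needs_firmware_update` is a hypothetical helper, and it assumes version strings begin with dot-separated numbers, ignoring any trailing build tag on a component (as in the illustrative "6.0.0f1").

```python
import re
from typing import Tuple


def _numeric_parts(version: str) -> Tuple[int, ...]:
    """Turn "6.0.0f1" into (6, 0, 0): leading digits of each dotted part."""
    parts = []
    for part in version.strip().split("."):
        match = re.match(r"\d+", part)
        parts.append(int(match.group()) if match else 0)
    return tuple(parts)


def needs_firmware_update(version: str, minimum: str = "5.0") -> bool:
    """True if the reported firmware version is older than the minimum."""
    current, required = _numeric_parts(version), _numeric_parts(minimum)
    # Pad with zeros so "5" and "5.0.0" compare as equal.
    width = max(len(current), len(required))
    current += (0,) * (width - len(current))
    required += (0,) * (width - len(required))
    return current < required


for v in ("4.0", "5.0", "6.0.0f1", "10.0"):
    print(v, needs_firmware_update(v))
# 4.0 True
# 5.0 False
# 6.0.0f1 False
# 10.0 False
```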

To update roboRIO firmware:

Make sure your roboRIO is selected in the top left pane.

Select Update Firmware in the top right pane

Enter a team number in the Team Number box

Select the latest firmware file in the bottom right

Click the Update button

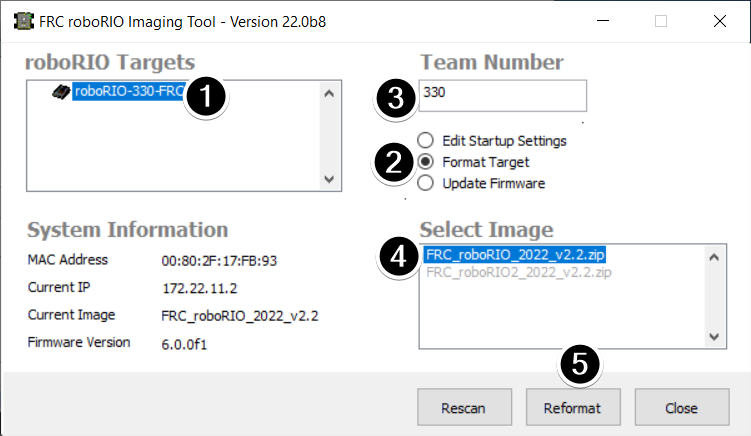

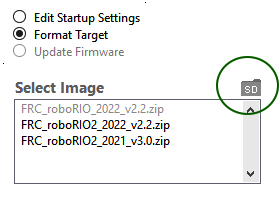

Imaging the roboRIO

Make sure the roboRIO is selected in the top left pane

Select Format Target in the right pane

Enter your team number in the box

Select the latest image version in the box.

Click Reformat to begin the imaging process.

Imaging Progress

The imaging process will take approximately 3-10 minutes. A progress bar in the bottom left of the window will indicate progress.

Imaging Complete

When the imaging completes you should see the dialog above. Click Ok, then click the Close button at the bottom right to close the imaging tool. Reboot the roboRIO using the Reset button to have the new team number take effect.

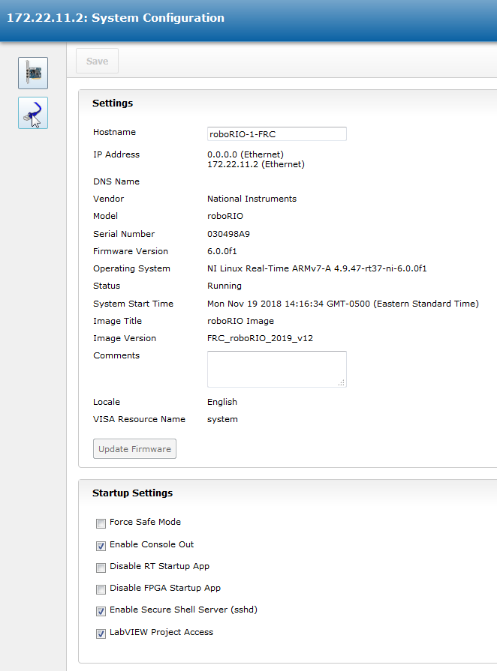

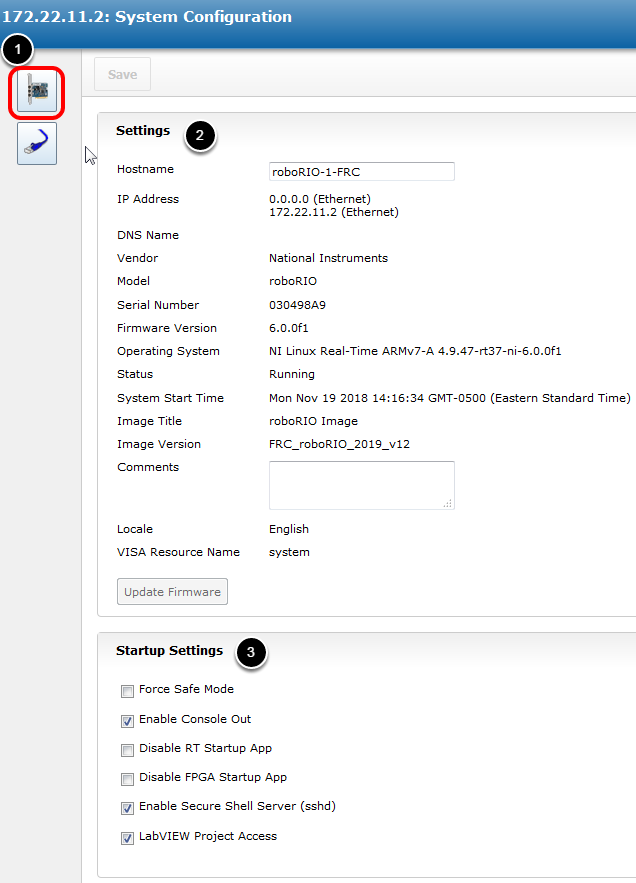

Troubleshooting

If you are unable to image your roboRIO, troubleshooting steps include:

Try running the roboRIO Imaging Tool as Administrator by right-clicking on the Desktop icon to launch it.

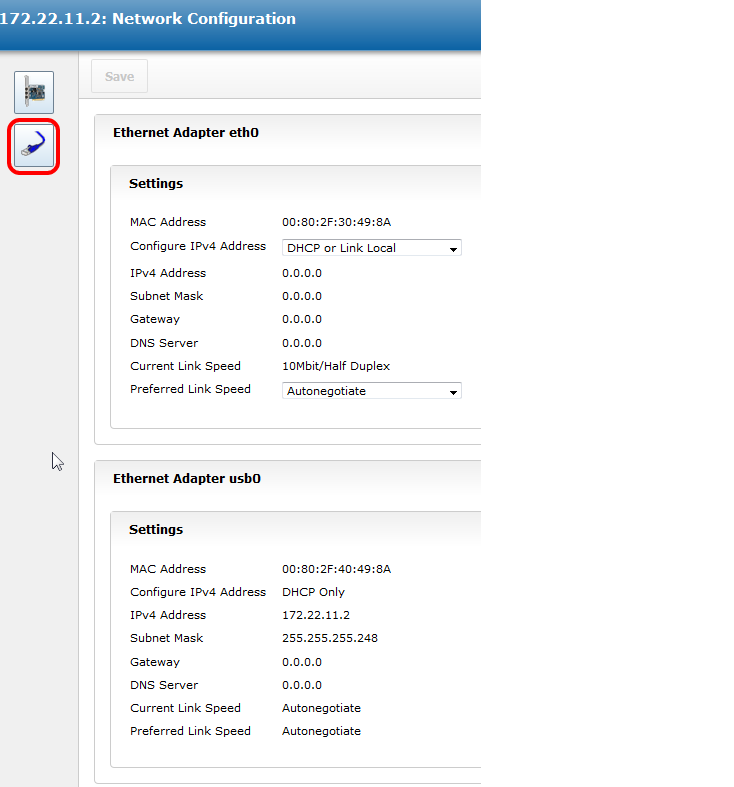

Try accessing the roboRIO webpage with a web-browser at

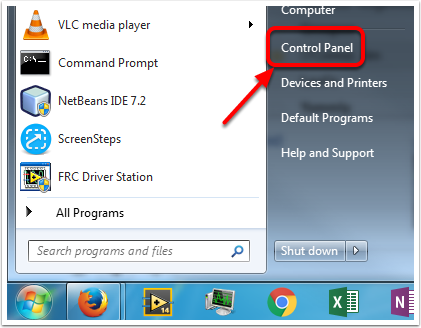

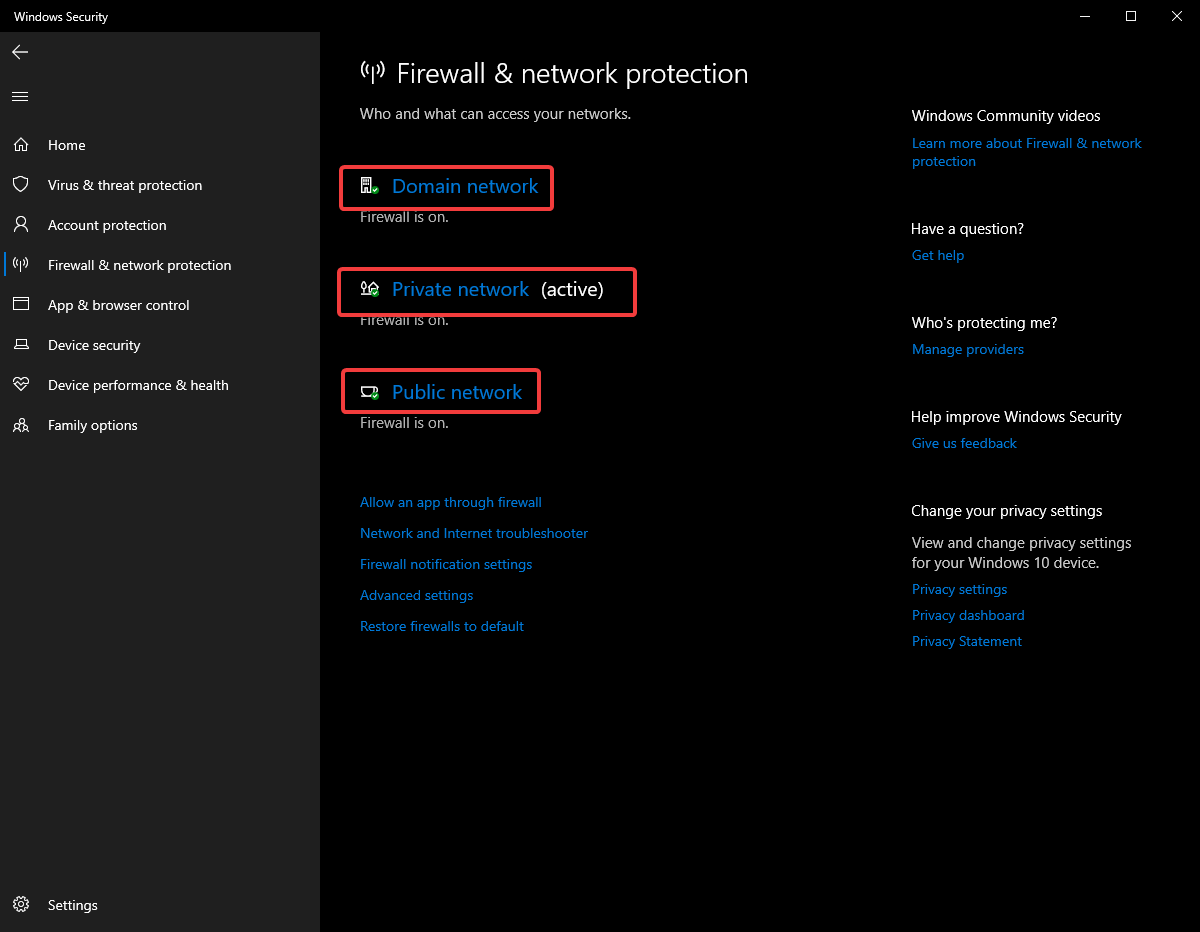

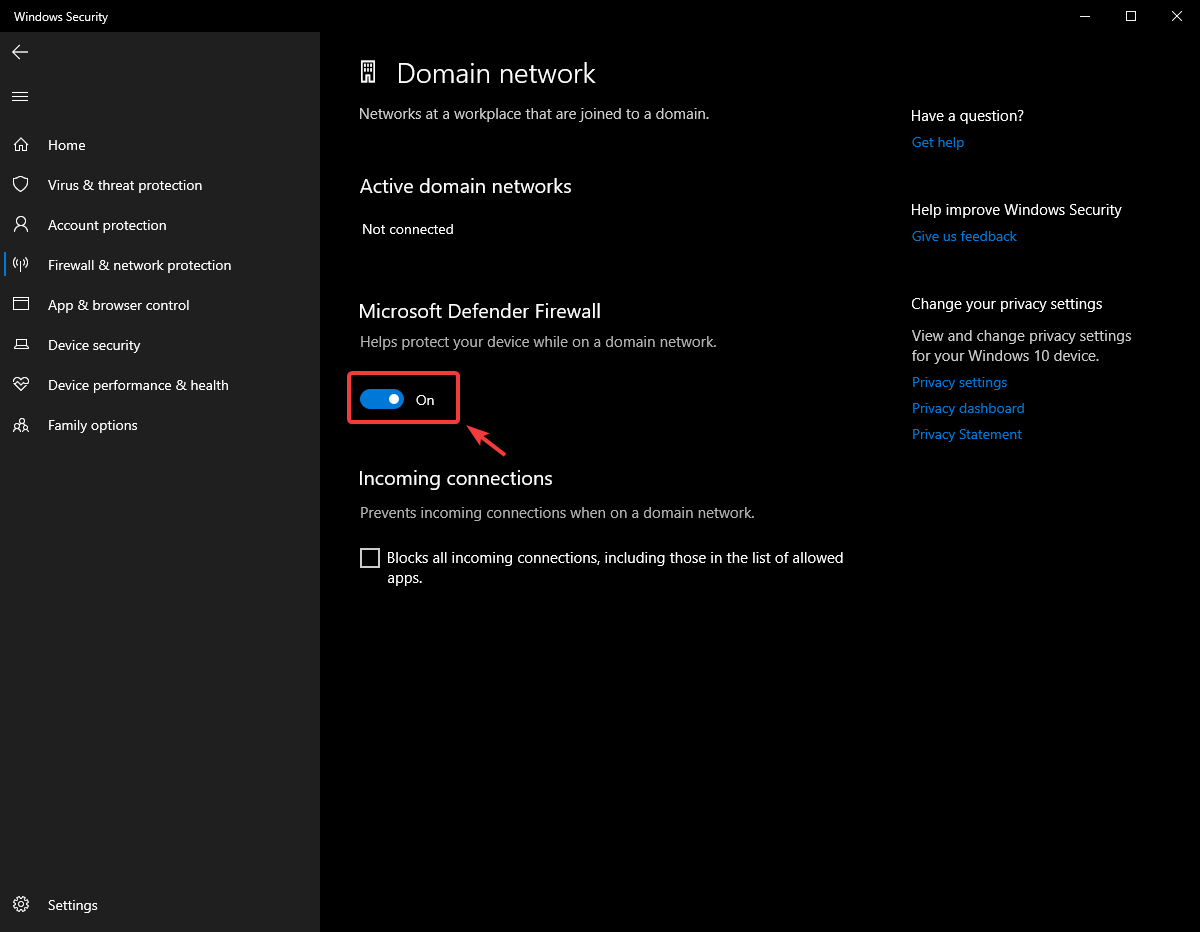

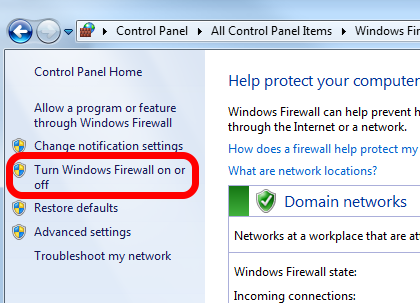

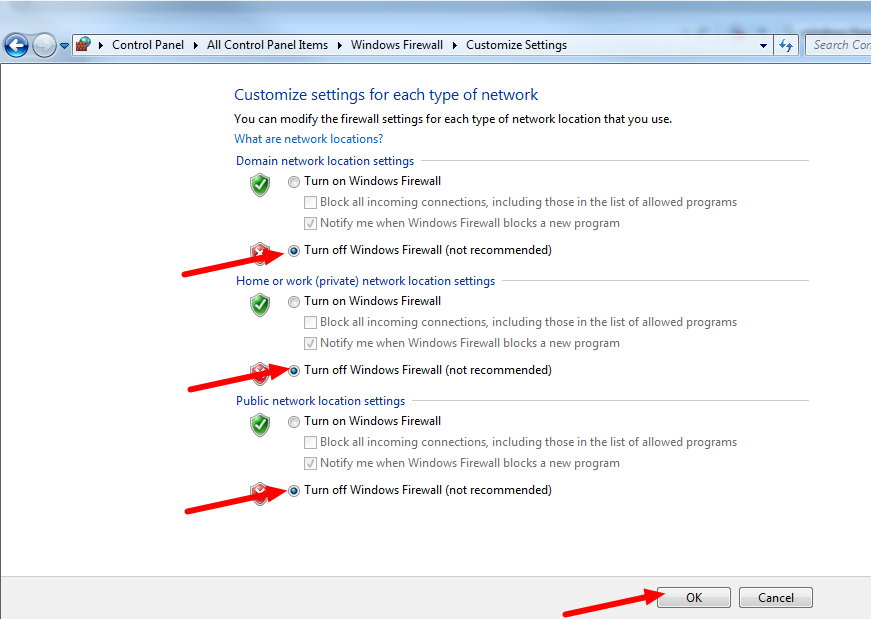

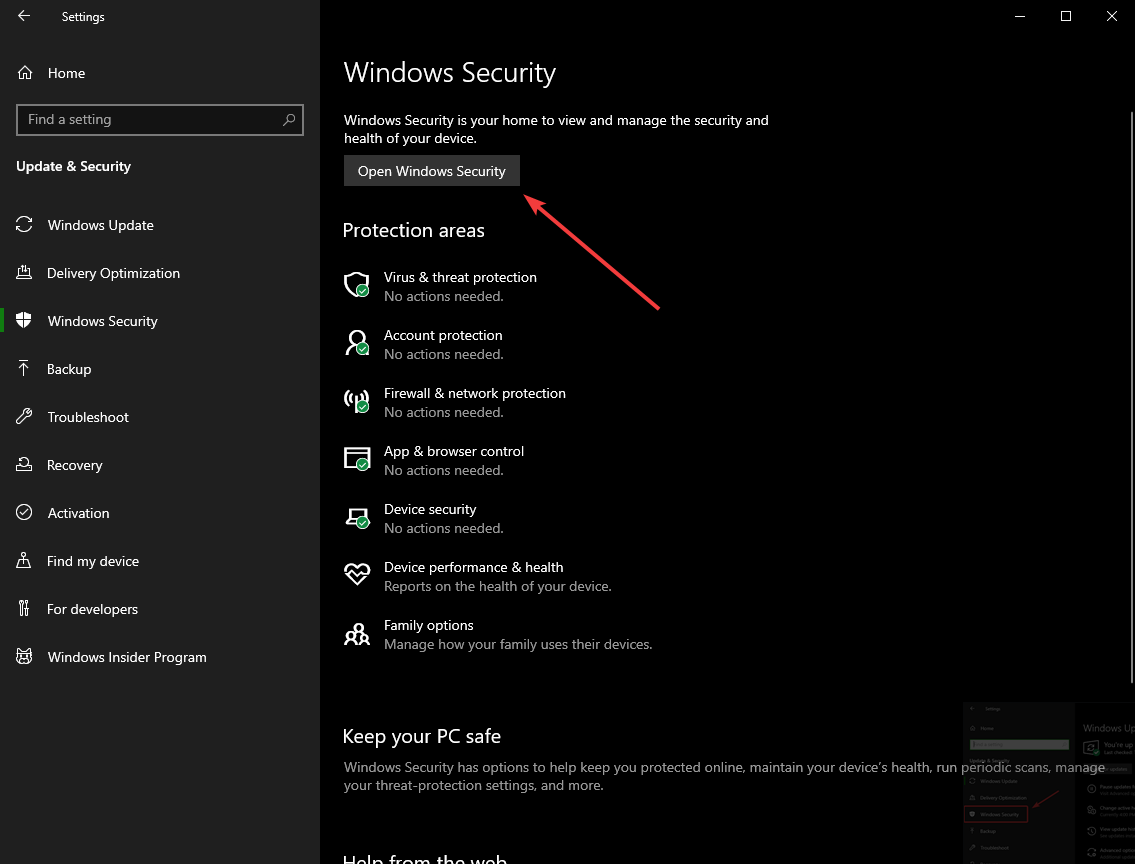

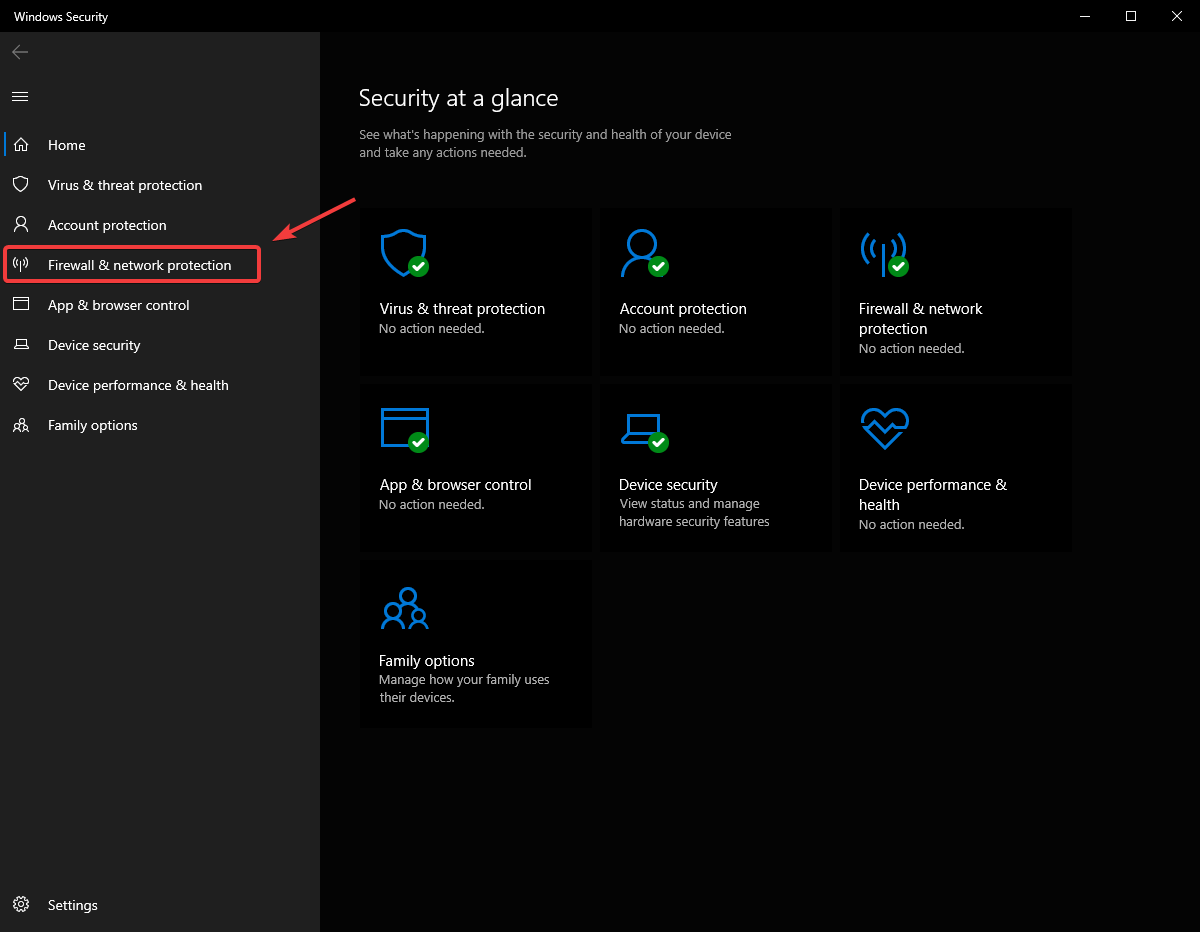

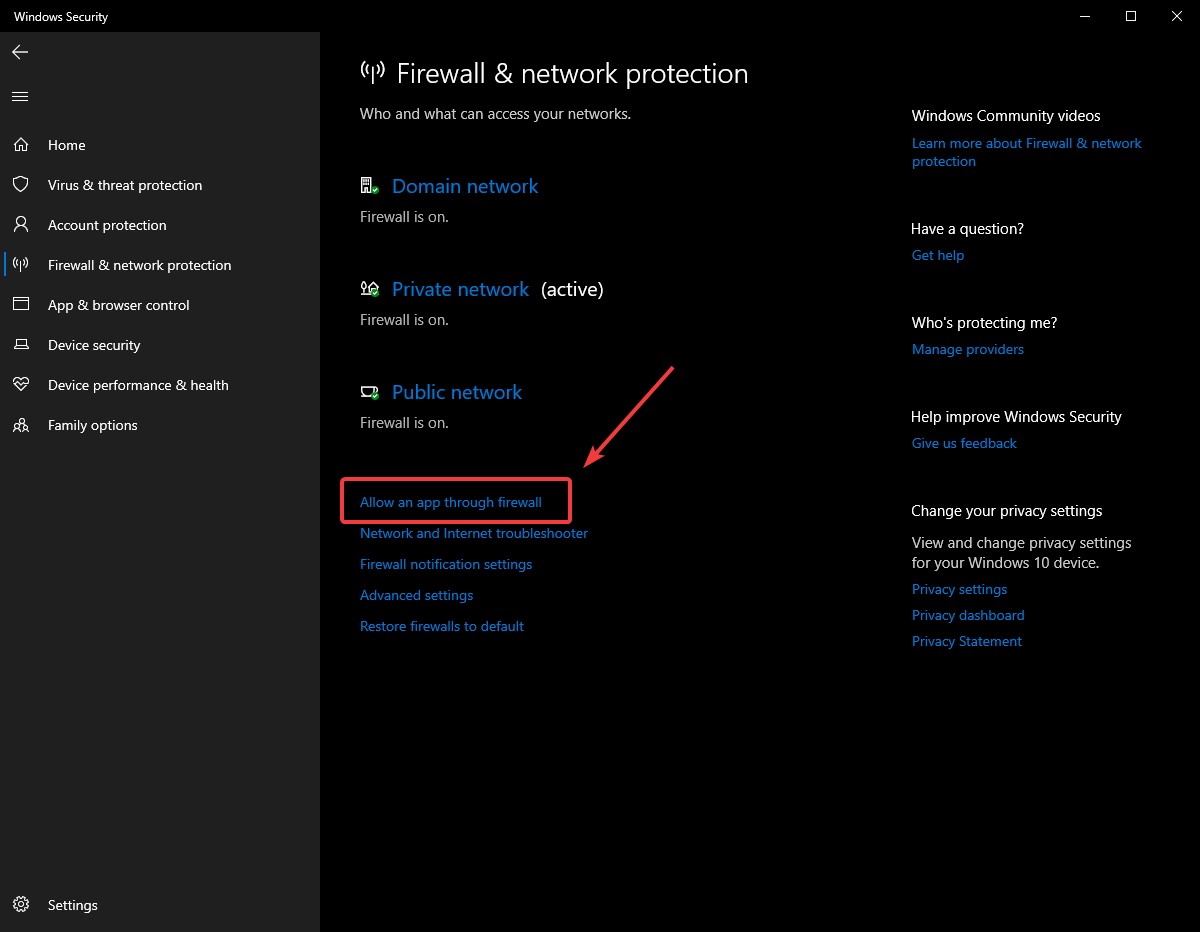

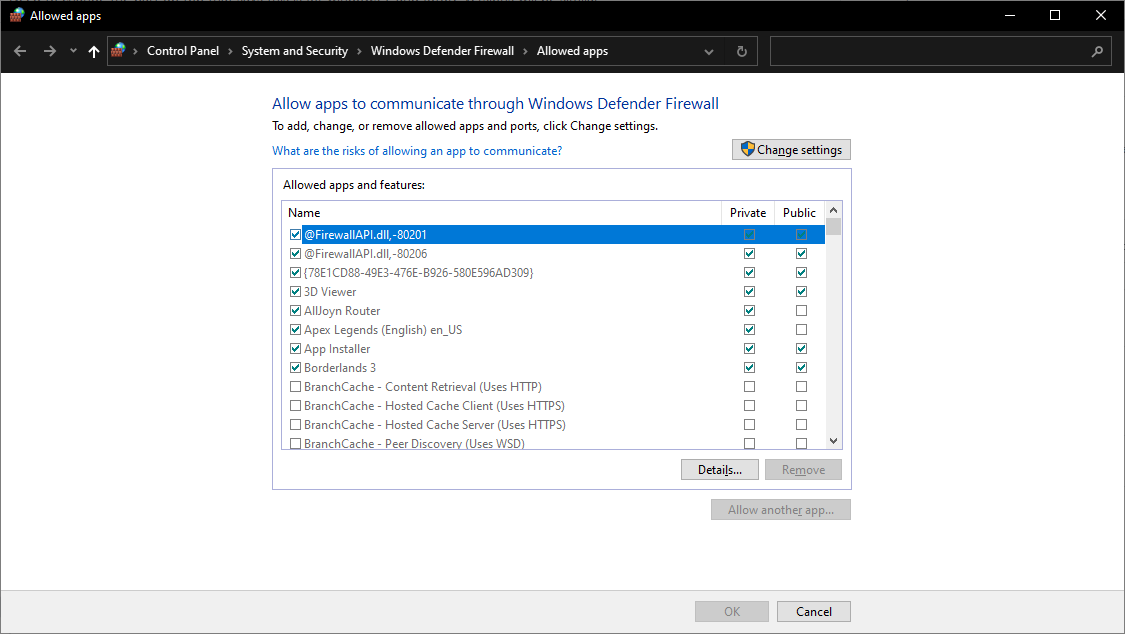

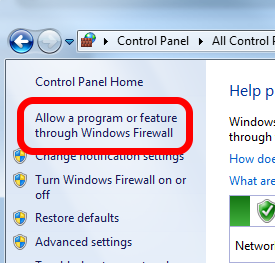

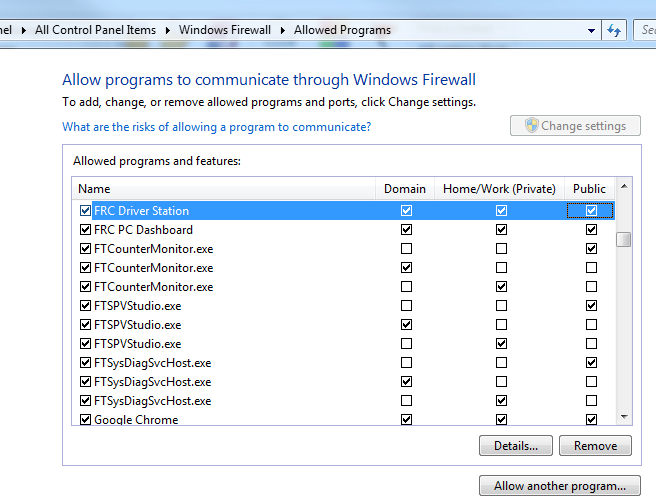

http://172.22.11.2/and/or verify that the NI network adapter appears in your list of Network Adapters in the Control Panel. If not, try re-installing the NI FRC Game Tools or try a different PC.Make sure your firewall is turned off.

Some teams have experienced an issue where imaging fails if the device name of the computer you’re using has a dash (

-) in it. Try renaming the computer (or using a different PC).Try booting the roboRIO into Safe Mode by pressing and holding the reset button for at least 5 seconds.

Try a different USB Cable

Try a different PC

Try imaging a roboRIO 2.0 using the roboRIO 2.0 microSD card imaging process
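Two of the checks above can be run from Python on the imaging PC. A minimal sketch with hypothetical helper names: one reports whether the computer's host name contains a dash, the other tries a TCP connection to port 80 on 172.22.11.2, the roboRIO USB address used above. It assumes `socket.gethostname()` matches the Windows device name, which is usually, but not always, the case.

```python
import socket
from typing import Optional

ROBORIO_USB_ADDRESS = "172.22.11.2"  # roboRIO address over the USB connection


def hostname_has_dash(name: Optional[str] = None) -> bool:
    """True if the host name contains a dash ("-").

    Defaults to socket.gethostname(); pass a name explicitly to test it.
    """
    if name is None:
        name = socket.gethostname()
    return "-" in name


def roborio_web_reachable(host: str = ROBORIO_USB_ADDRESS, port: int = 80,
                          timeout: float = 2.0) -> bool:
    """True if a TCP connection to the roboRIO web server can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False
```

Calling `hostname_has_dash()` and `roborio_web_reachable()` with the roboRIO plugged in over USB gives a quick yes/no for each item; a `False` from the second one points back to the NI network adapter and firewall checks.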

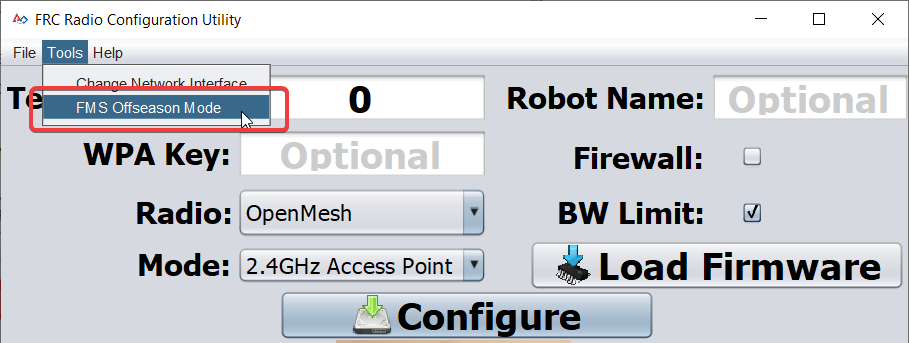

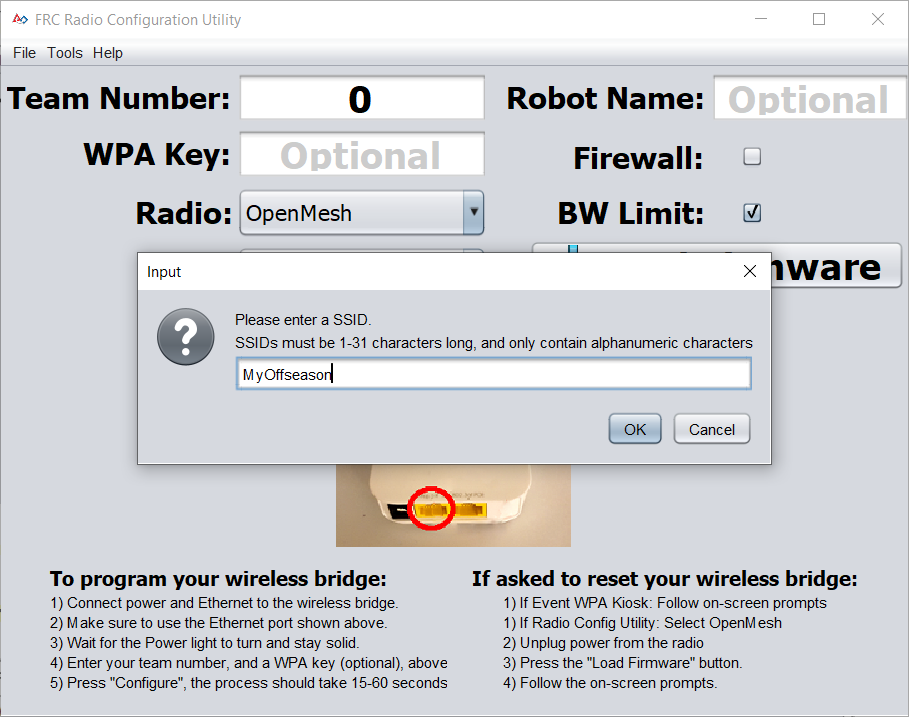

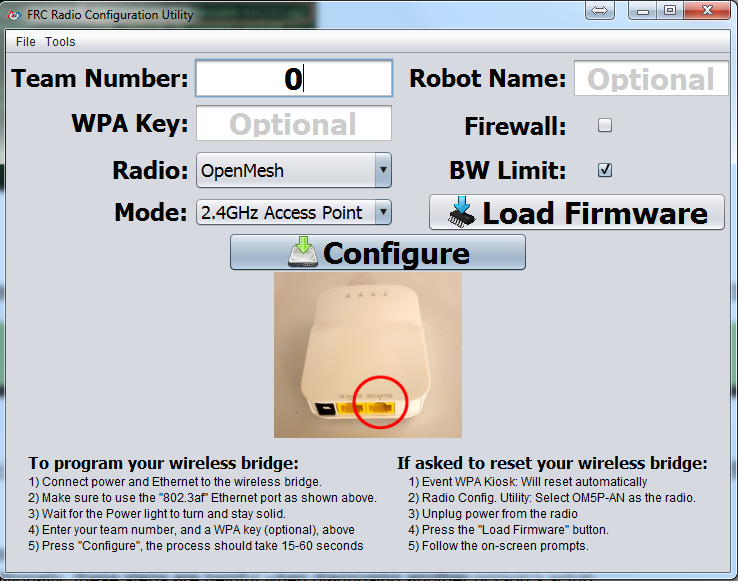

Programming your Radio

This guide will show you how to use the FRC® Radio Configuration Utility software to configure your robot’s wireless bridge for use outside of FRC events.

Prerequisites

The FRC Radio Configuration Utility requires administrator privileges to configure the network settings on your machine. The program should request the necessary privileges automatically (may require a password if run from a non-administrator account), but if you are having trouble, try running it from an administrator account.

Download the latest FRC Radio Configuration Utility Installer from the following links:

FRC Radio Configuration 22.0.1

FRC Radio Configuration 22.0.1 Israel Version

Note

The _IL version is for Israel teams and contains a version of the OM5PAC firmware with restricted channels for use in Israel.

Before you begin using the software:

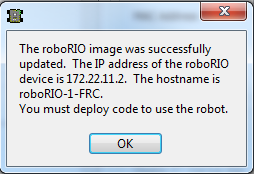

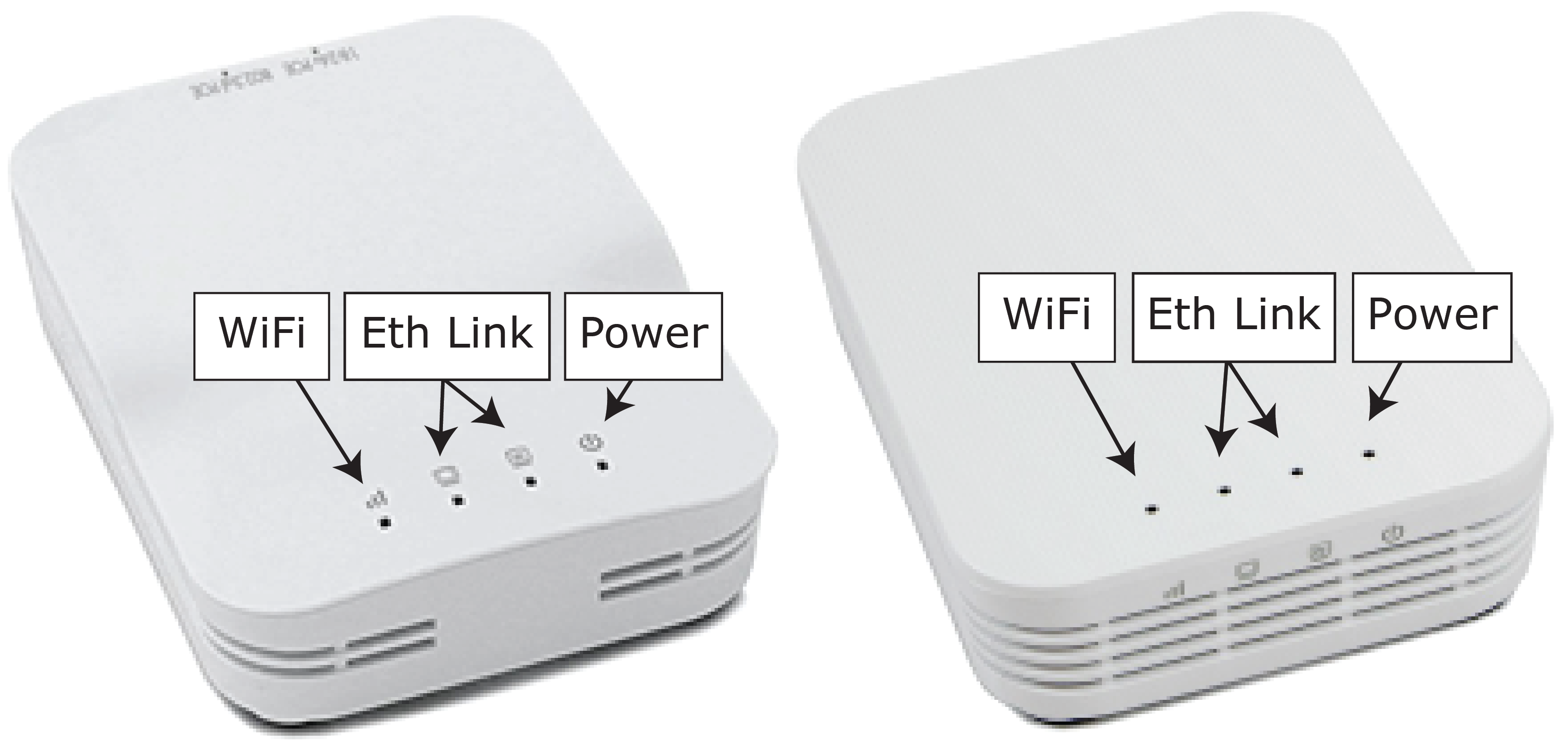

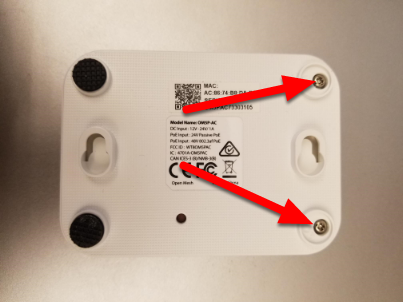

Plug directly from your computer into the wireless bridge ethernet port closest to the power jack. Make sure no other devices are connected to your computer via ethernet. If powering the radio via PoE, plug an Ethernet cable from the PC into the socket side of the PoE adapter (where the roboRIO would plug in). If you experience issues configuring through the PoE adapter, you may try connecting the PC to the alternate port on the radio.

Warning

The OM5P-AN and AC use the same power plug as the D-Link DAP1522; however, they are 12V radios. Wire the radio to the 12V 2A terminals on the VRM (center-pin positive).

Application Notes

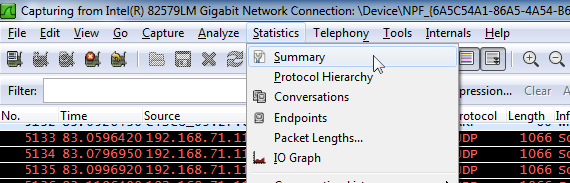

By default, the Radio Configuration Utility will program the radio to enforce the 4Mbps bandwidth limit on traffic exiting the radio over the wireless interface. In the home configuration (AP mode) this is a total, not a per client limit. This means that streaming video to multiple clients is not recommended.
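Because the limit is a total, every client receiving a stream adds to it. A minimal sketch of that arithmetic; `within_bw_limit` is a hypothetical helper, the 1.5 Mbps camera bitrate is an assumed figure (real bitrates depend on resolution, frame rate and compression), and other traffic leaving the radio, which also counts against the same 4 Mbps, is ignored.

```python
from typing import Sequence

BW_LIMIT_MBPS = 4.0  # total limit on traffic leaving the radio over wireless


def within_bw_limit(stream_mbps: Sequence[float],
                    limit_mbps: float = BW_LIMIT_MBPS) -> bool:
    """True if the combined outbound streams fit under the shared limit.

    Each entry is one stream sent to one client, so the same camera feed
    viewed on three dashboards appears three times.
    """
    return sum(stream_mbps) <= limit_mbps


camera = 1.5  # hypothetical per-client camera bitrate in Mbps
print(within_bw_limit([camera]))          # True  (1.5 Mbps total)
print(within_bw_limit([camera, camera]))  # True  (3.0 Mbps total)
print(within_bw_limit([camera] * 3))      # False (4.5 Mbps total)
```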

The Utility has been tested on Windows 7, 8 and 10. It may work on other operating systems, but has not been tested.

Programmed Configuration

The Radio Configuration Utility programs a number of configuration settings into the radio when run. These settings apply to the radio in all modes (including at events). These include:

Set a static IP of 10.TE.AM.1

Set an alternate IP on the wired side of 192.168.1.1 for future programming

Bridge the wired ports so they may be used interchangeably

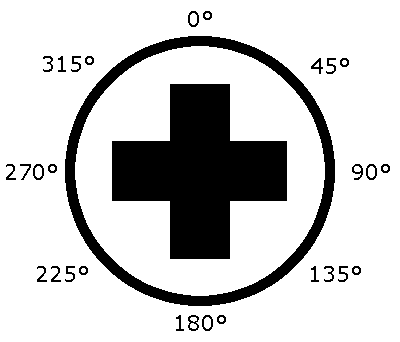

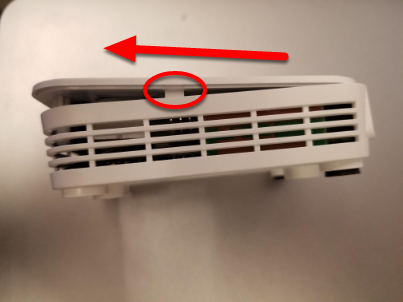

The LED configuration noted in the graphic above.

4Mb/s bandwidth limit on the outbound side of the wireless interface (may be disabled for home use)

QoS rules for internal packet prioritization (affects internal buffer and which packets to discard if bandwidth limit is reached). These rules are:

Robot Control and Status (UDP 1110, 1115, 1150)

Robot TCP & NetworkTables (TCP 1735, 1740)

Bulk (all other traffic; disabled if the BW limit is disabled)

DHCP server enabled. Serves out:

10.TE.AM.11 - 10.TE.AM.111 on the wired side

10.TE.AM.138 - 10.TE.AM.237 on the wireless side

Subnet mask of 255.255.255.0

Broadcast address 10.TE.AM.255

DNS server enabled. The DNS server IP and domain suffix (.lan) are served as part of DHCP.
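The addresses and QoS buckets above follow directly from the team number and port. A minimal sketch with hypothetical helper names, assuming the usual convention that TE.AM splits the team number into its leading digits and last two digits (team 1234 gives 10.12.34.x, team 254 gives 10.2.54.x). `qos_class` only mirrors the listed ports; it is not how the radio firmware implements its rules.

```python
import ipaddress
from typing import Dict, Tuple


def team_octets(team: int) -> Tuple[int, int]:
    """Split a team number into the TE and AM octets of 10.TE.AM.x."""
    if not 1 <= team <= 25599:  # TE must still fit in a single octet
        raise ValueError("team number out of range for 10.TE.AM.x")
    return team // 100, team % 100


def radio_plan(team: int) -> Dict[str, object]:
    """Addresses the radio is programmed with, per the list above."""
    te, am = team_octets(team)
    net = ipaddress.IPv4Network(f"10.{te}.{am}.0/24")
    base = net.network_address
    return {
        "radio_ip": base + 1,                       # static IP 10.TE.AM.1
        "netmask": net.netmask,                     # 255.255.255.0
        "broadcast": net.broadcast_address,         # 10.TE.AM.255
        "wired_dhcp": (base + 11, base + 111),      # wired-side pool
        "wireless_dhcp": (base + 138, base + 237),  # wireless-side pool
    }


def qos_class(protocol: str, port: int) -> str:
    """Name the QoS bucket a packet falls into, per the rules above."""
    proto = protocol.upper()
    if proto == "UDP" and port in (1110, 1115, 1150):
        return "Robot Control and Status"
    if proto == "TCP" and port in (1735, 1740):
        return "Robot TCP & NetworkTables"
    return "Bulk"


plan = radio_plan(1234)
print(plan["radio_ip"], plan["broadcast"])  # 10.12.34.1 10.12.34.255
print(*plan["wired_dhcp"], *plan["wireless_dhcp"])
# 10.12.34.11 10.12.34.111 10.12.34.138 10.12.34.237
print(qos_class("UDP", 1110), "|", qos_class("TCP", 8080))
# Robot Control and Status | Bulk
```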

At home only:

SSID may have a “Robot Name” appended to the team number to distinguish multiple networks.

Firewall option may be enabled to mimic the field firewall rules (open ports may be found in the Game Manual)

Warning

It is not possible to modify the configuration manually.

Install the Software

Double click on FRC_Radio_Configuration_VERSION.exe to launch the installer. Follow the prompts to complete the installation.

Part of the installation prompts will include installing Npcap if it is not already present. The Npcap installer contains a number of checkboxes to configure the install. You should leave the options as the defaults.

Launch the software

Use the Start menu or desktop shortcut to launch the program.

Note

If you need to locate the program, it is installed to C:\Program Files (x86)\FRC Radio Configuration Utility. For 32-bit machines the path is C:\Program Files\FRC Radio Configuration Utility

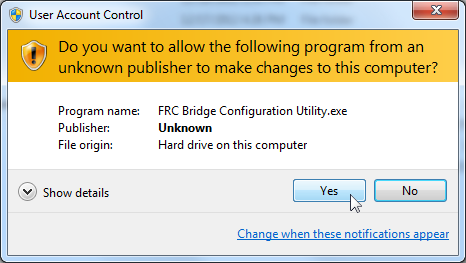

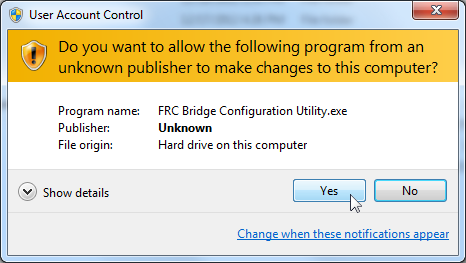

Allow the program to make changes, if prompted

A prompt may appear about allowing the configuration utility to make changes to the computer. Click Yes if the prompt appears.

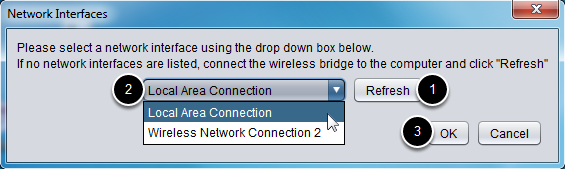

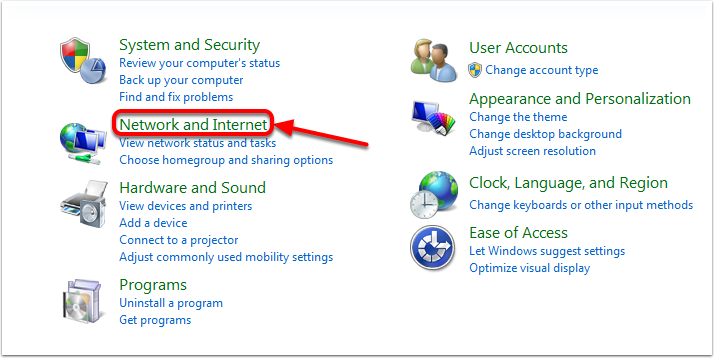

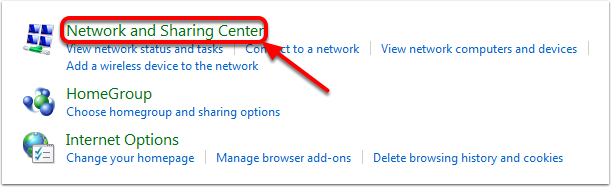

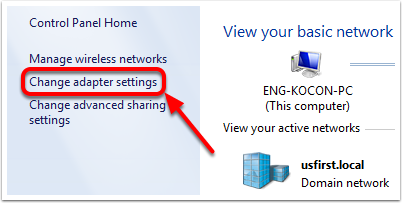

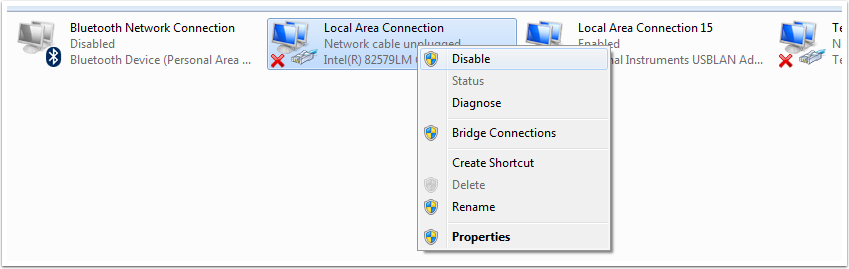

Select the network interface

Use the pop-up window to select which ethernet interface the configuration utility will use to communicate with the wireless bridge. On Windows machines, ethernet interfaces are typically named “Local Area Connection”. The configuration utility cannot program a bridge over a wireless connection.

If no ethernet interfaces are listed, click Refresh to re-scan for available interfaces.

Select the interface you want to use from the drop-down list.

Click OK.

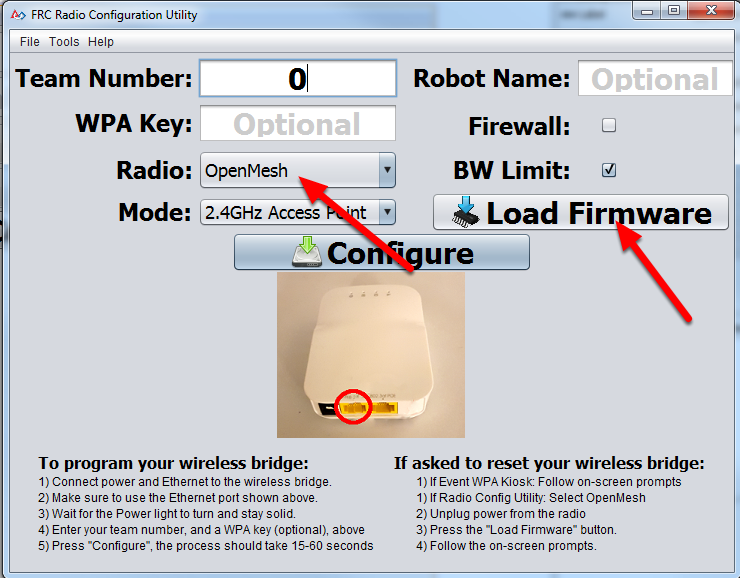

Open Mesh Firmware Note

For the FRC Radio Configuration Utility to program the OM5P-AN and OM5P-AC radio, the radio must be running an FRC specific build of the OpenWRT firmware.

If you do not need to update or re-load the firmware, skip the next step.

Warning

Radios used in 2019/2020/2021 do not need to be updated before configuring; the 2022 tool uses the same 2019 firmware.

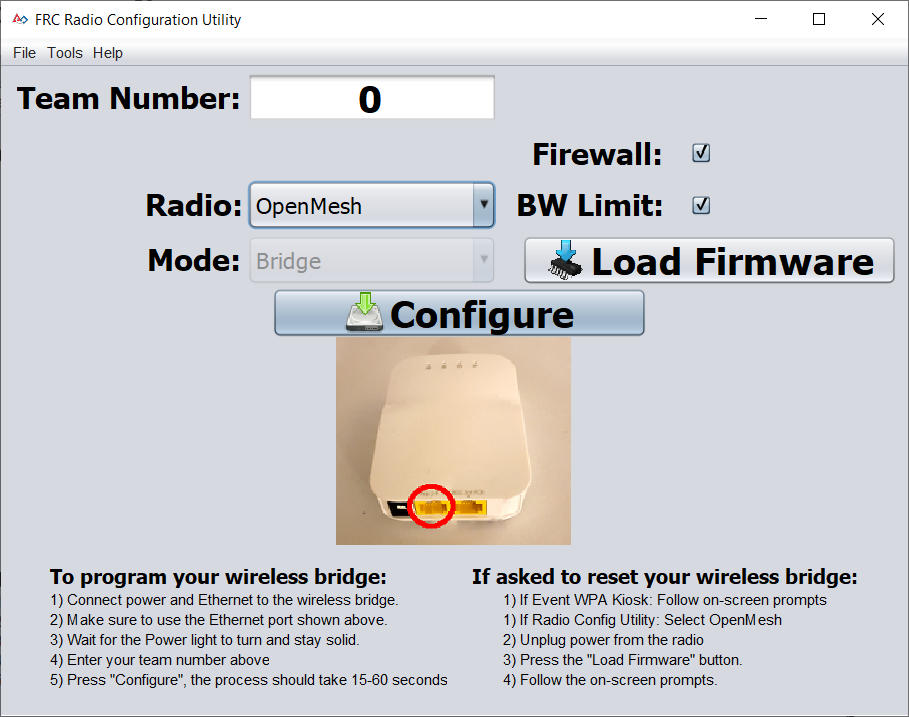

Loading FRC Firmware to Open Mesh Radio

If you need to load the FRC firmware (or reset the radio), you can do so using the FRC Radio Configuration Utility.

Follow the instructions above to install the software, launch the program and select the Ethernet interface.

Make sure the Open Mesh radio is selected in the Radio dropdown.

Make sure the radio is connected to the PC via Ethernet.

Unplug the power from the radio. (If you are using a PoE cable, this also unplugs the Ethernet connection to the PC; this is fine.)

Press the Load Firmware button

When prompted, plug in the radio power. The software should detect the radio, load the firmware and prompt you when complete.

Warning

If you see an error about NPF name, try disabling all adapters other than the one being used to program the radio. If only one adapter is found, the tool should attempt to use that one. See the steps in Disabling Network Adapters for more info.

Teams may also see this error with foreign language Operating Systems. If you experience issues loading firmware or programming on a foreign language OS, try using an English OS, such as on the KOP provided PC or setting the Locale setting to “en_us” as described on this page.

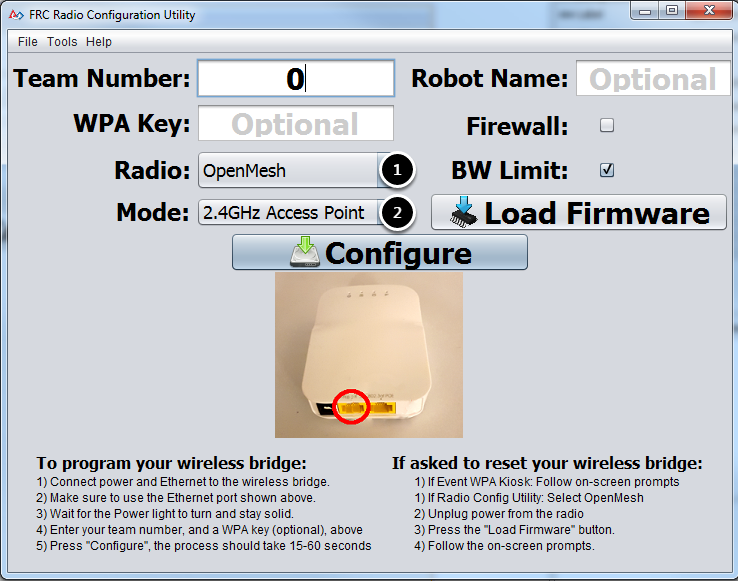

Select Radio and Operating Mode

Select which radio you are configuring using the drop-down list.

Select which operating mode you want to configure. For most cases, the default selection of 2.4GHz Access Point will be sufficient. If your computers support it, the 5GHz AP mode is recommended, as 5GHz is less congested in many environments.

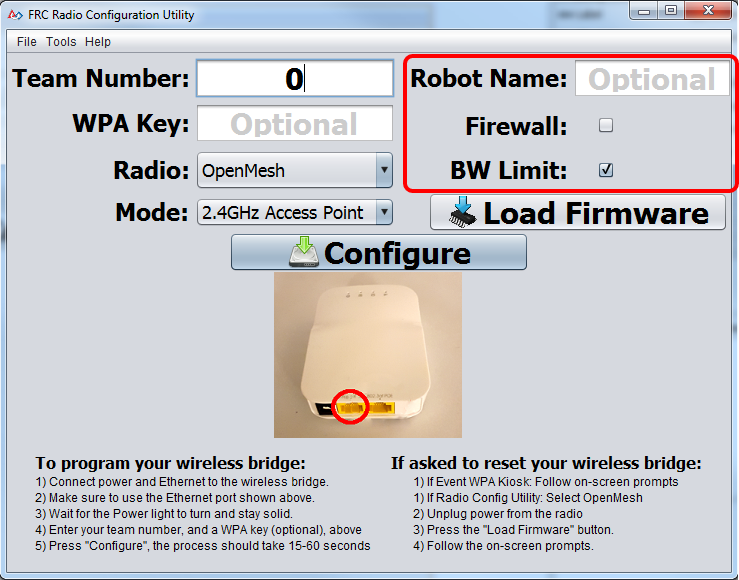

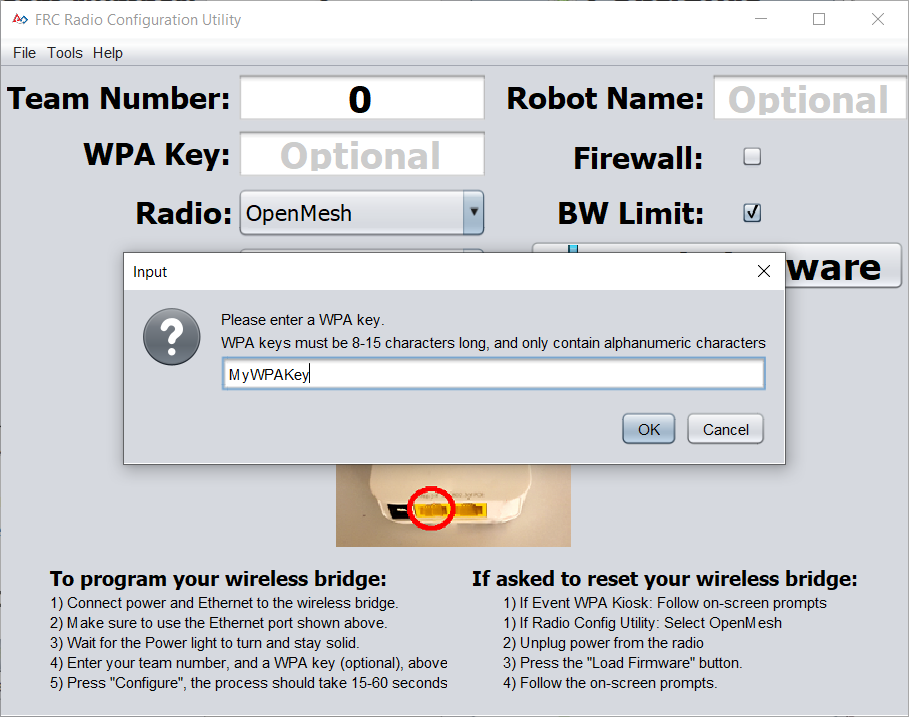

Select Options

The default values of the options have been selected to match the use case of most teams; however, you may wish to customize these options to your specific scenario:

Robot Name: This is a string that gets appended to the SSID used by the radio. This allows you to have multiple networks with the same team number and still be able to distinguish them.

Firewall: If this box is checked, the radio firewall will be configured to attempt to mimic the port blocking behavior of the firewall present on the FRC field. For a list of open ports, please see the FRC Game Manual.

BW Limit: If this box is checked, the radio enforces a 4 Mbps bandwidth limit like it does when programmed at events. Note that this is a total limit, not per client, so streaming video to multiple clients simultaneously may cause undesired behavior.

Note

Firewall and BW Limit only apply to the Open Mesh radios. These options have no effect on D-Link radios.

Warning

The “Firewall” option configures the radio to emulate the field firewall. This means that you will not be able to deploy code wirelessly with this option enabled. This is useful for simulating blocked ports that may exist at competitions.

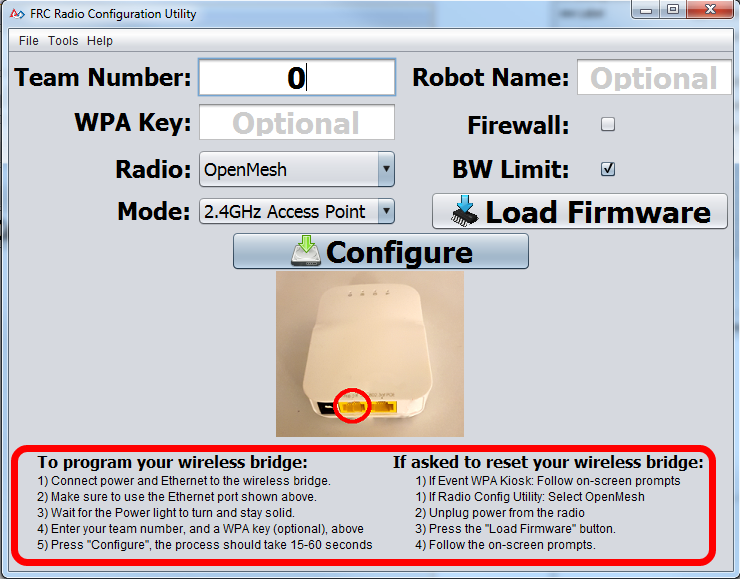

Starting the Configuration Process

Follow the on-screen instructions for preparing your wireless bridge, entering the settings the bridge will be configured with, and starting the configuration process. These on-screen instructions update to match the bridge model and operating mode chosen.

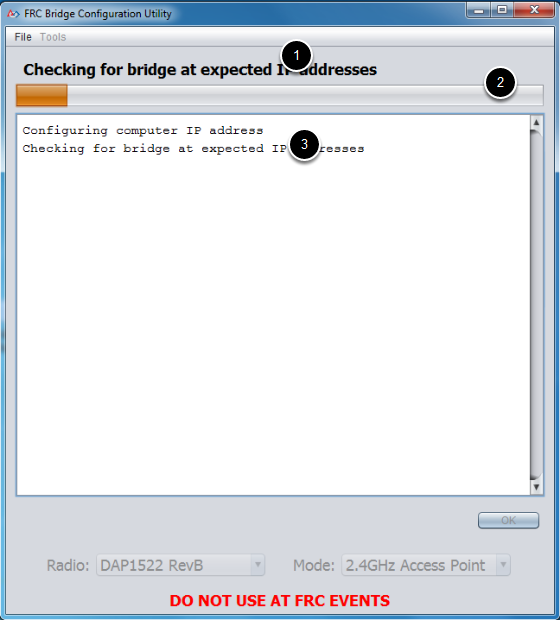

Configuration Progress

Throughout the configuration process, the window will indicate:

The step currently being executed.

The overall progress of the configuration process.

All steps executed so far.

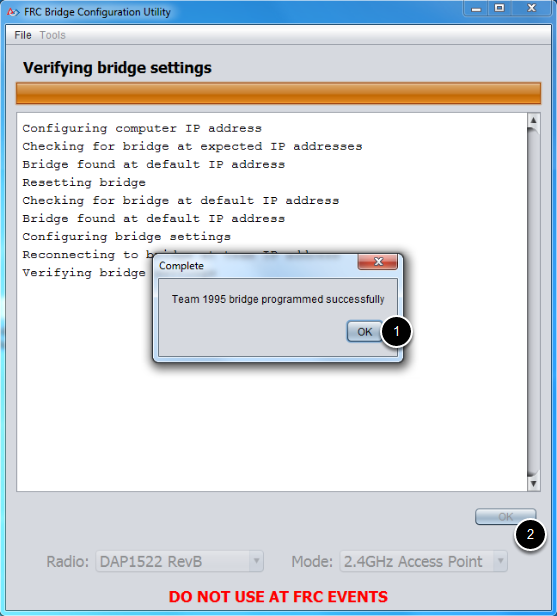

Configuration Completed

Once the configuration is complete:

Press OK on the dialog window.

Press OK on the main window to return to the settings screen.

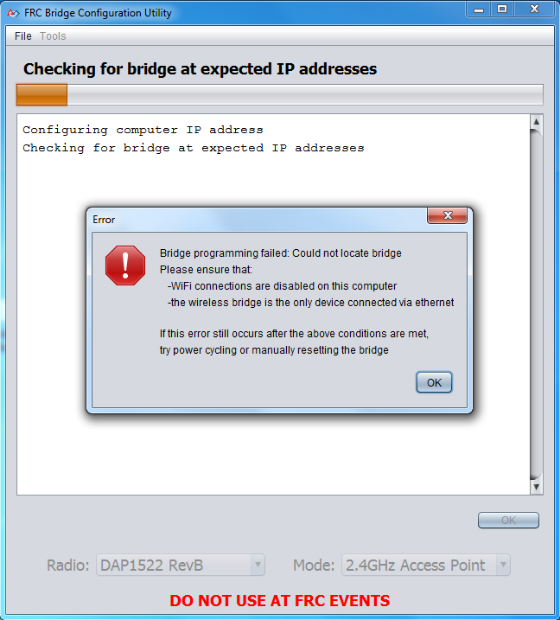

Configuration Errors

If an error occurs during the configuration process, follow the instructions in the error message to correct the problem.

Troubleshooting

Make sure you wait long enough that the power light has stayed solid for 10 seconds.

Make sure you have the correct network interface, and only one interface is listed in the drop-down.

Make sure your firewall is turned off.

Plug directly from your computer into the wireless bridge and make sure no other devices are connected to your computer via ethernet.

Ensure the ethernet is plugged into the port closest to the power jack on the wireless bridge.

If using a foreign language Operating System, try using an English OS, such as on the KOP provided PC or setting the Locale setting to “en_us” as described on this page.

If all else fails, try a different computer.

Step 4: Programming your Robot

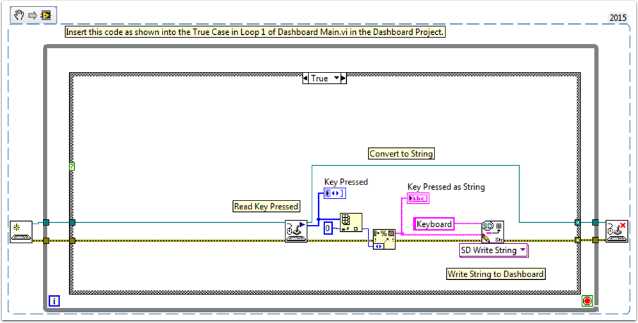

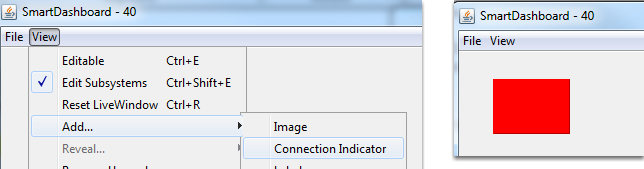

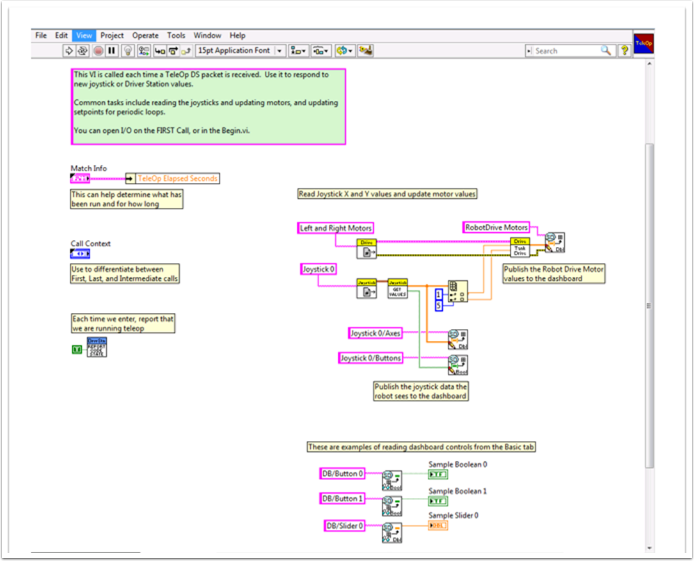

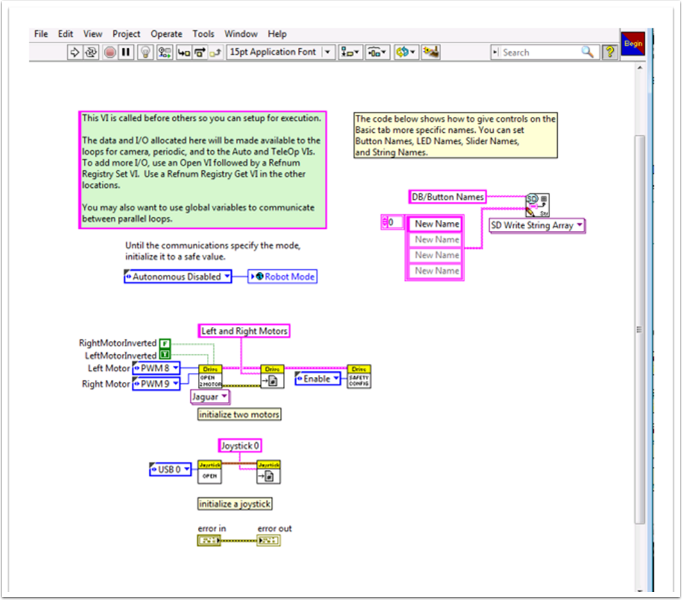

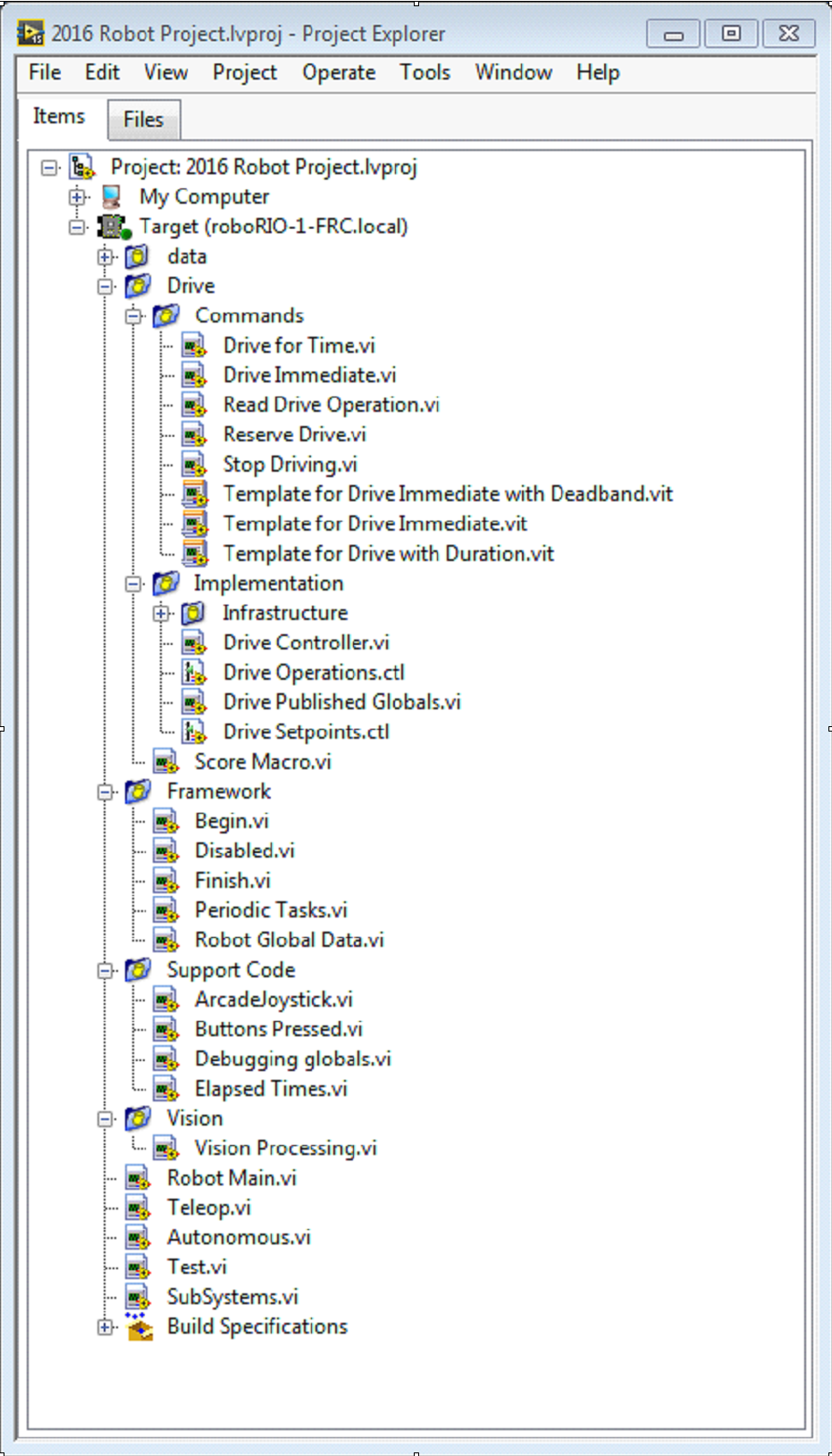

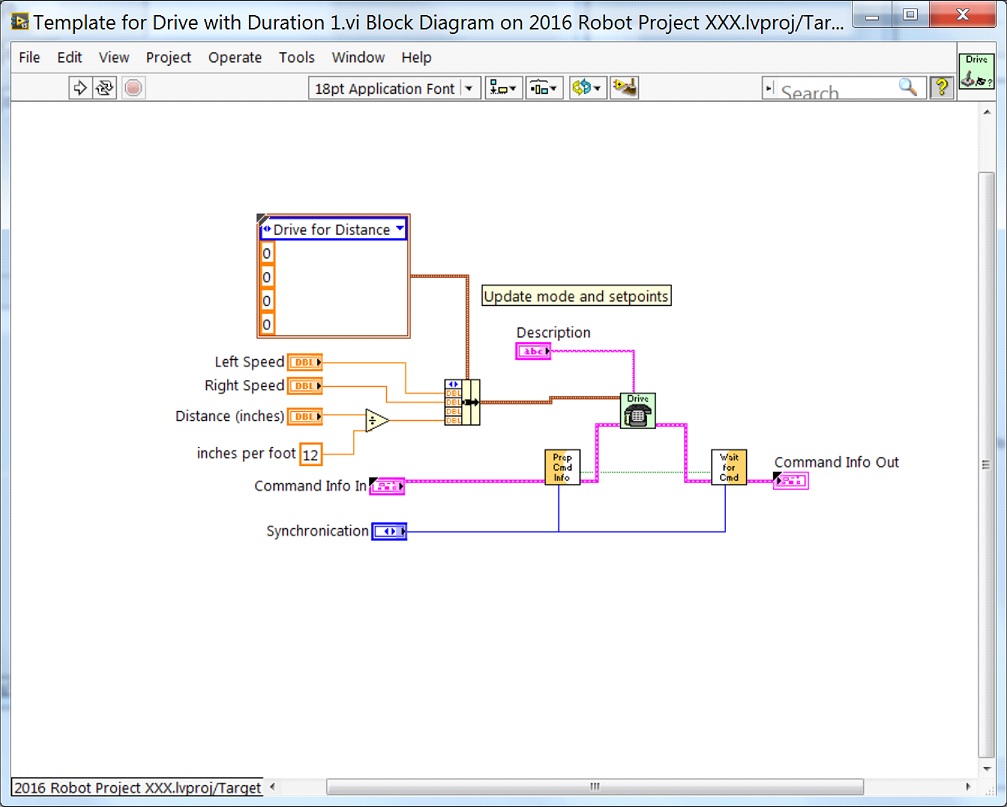

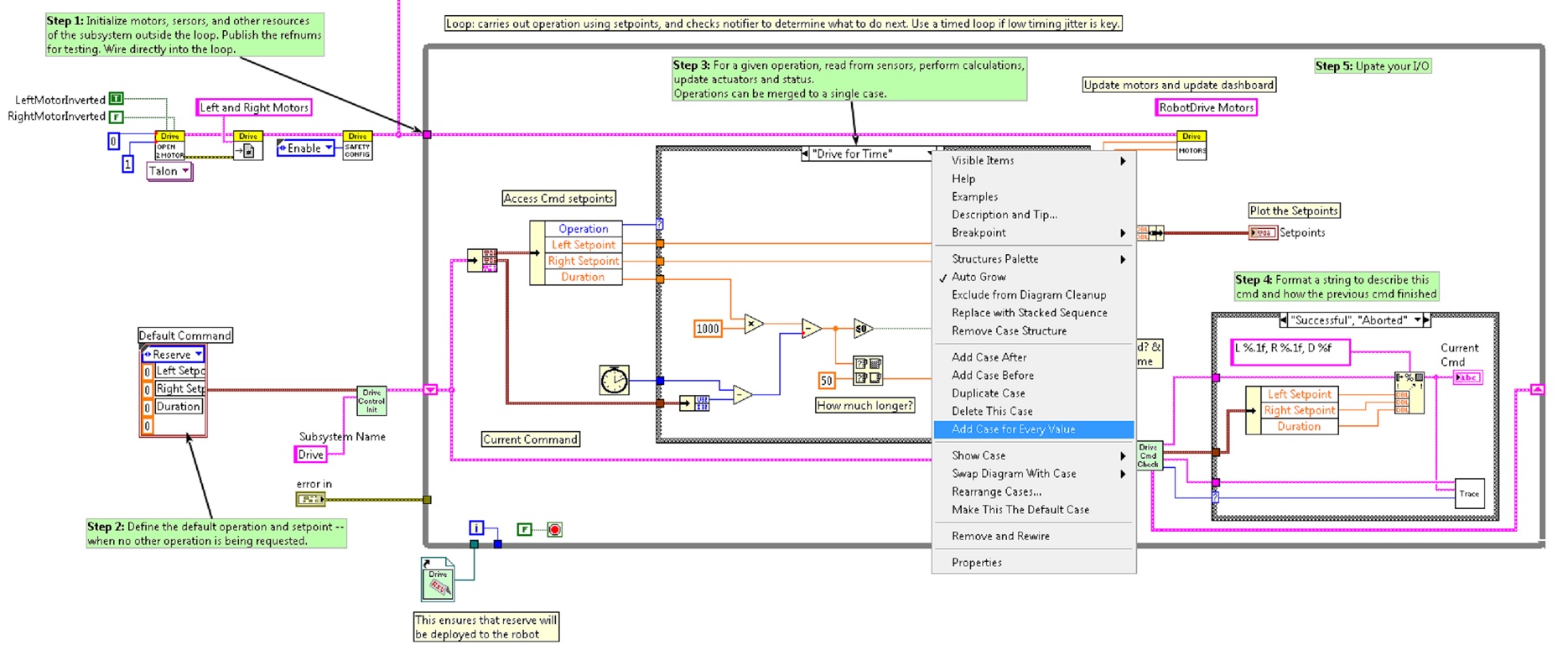

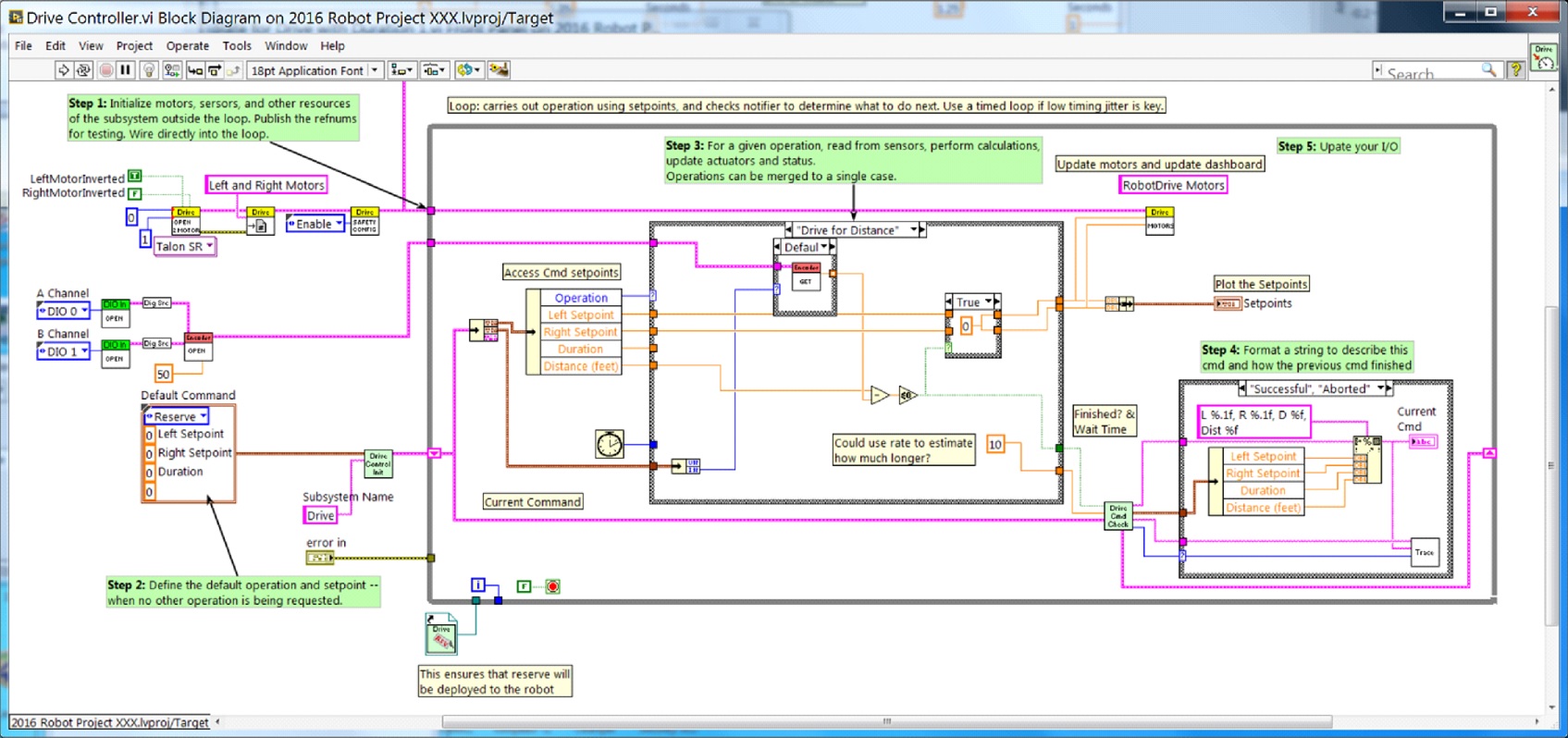

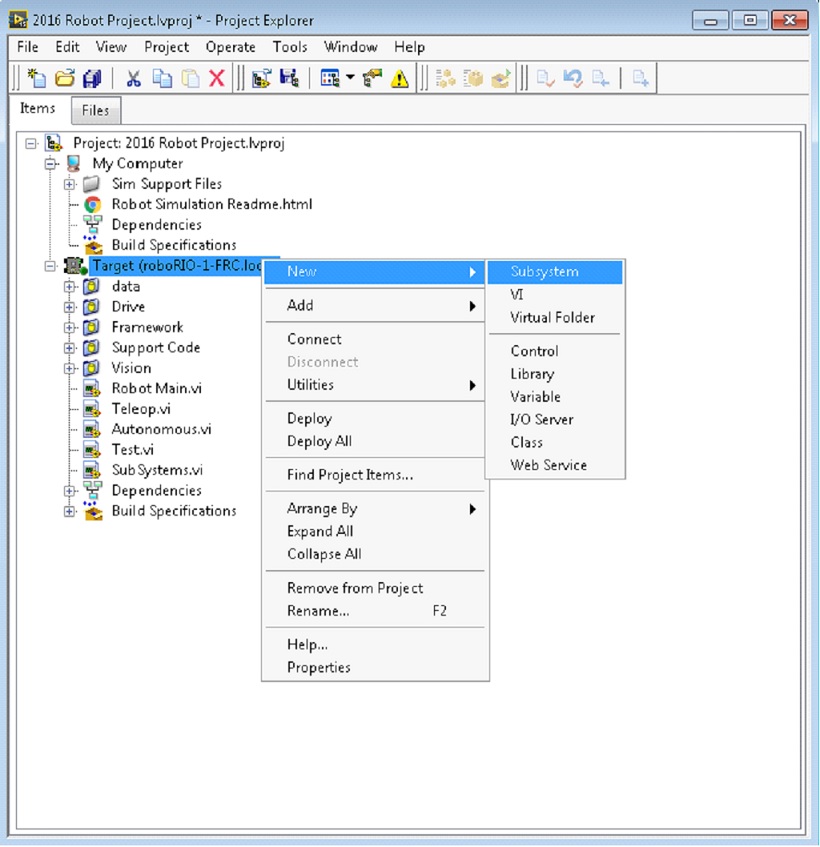

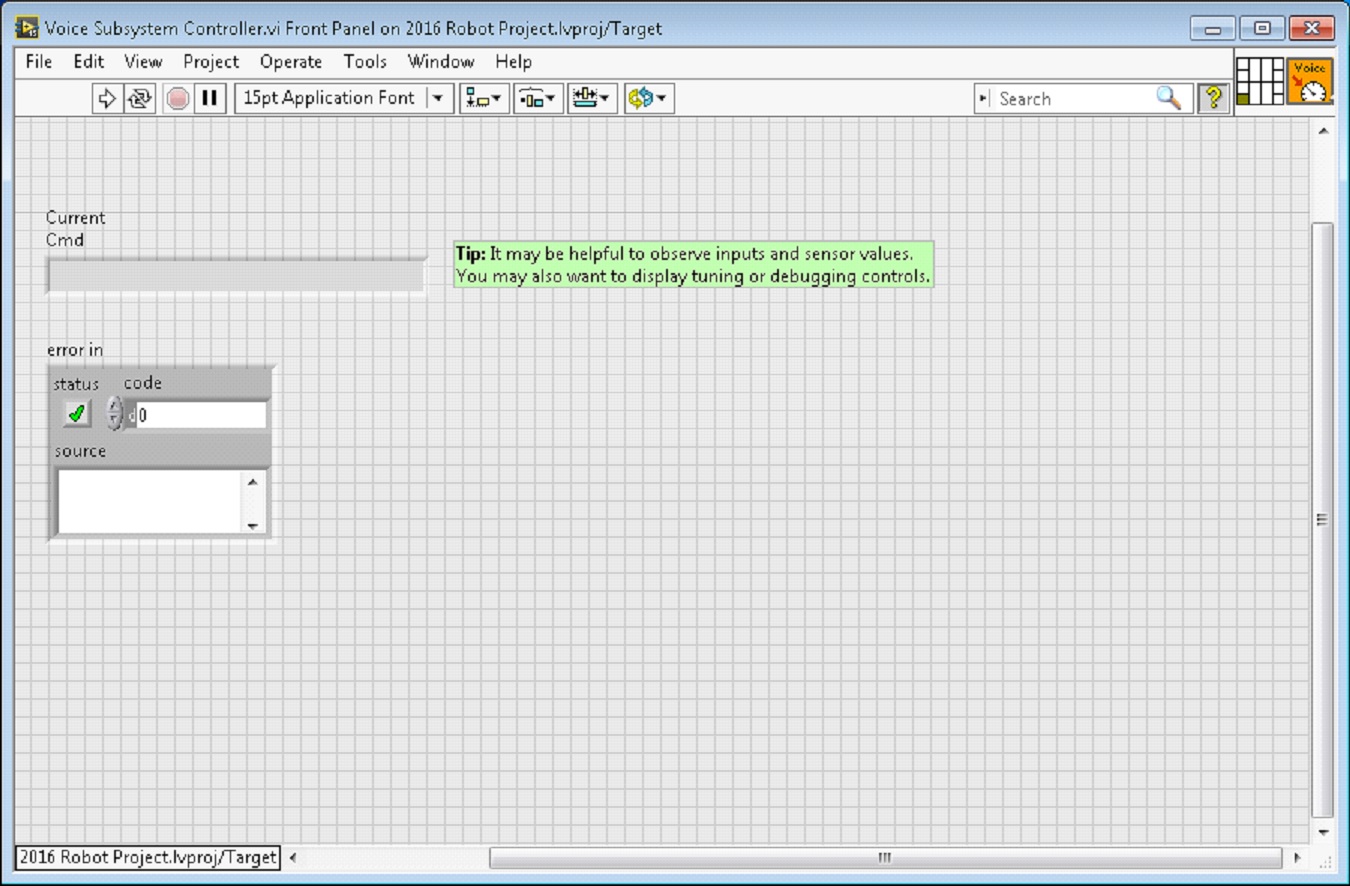

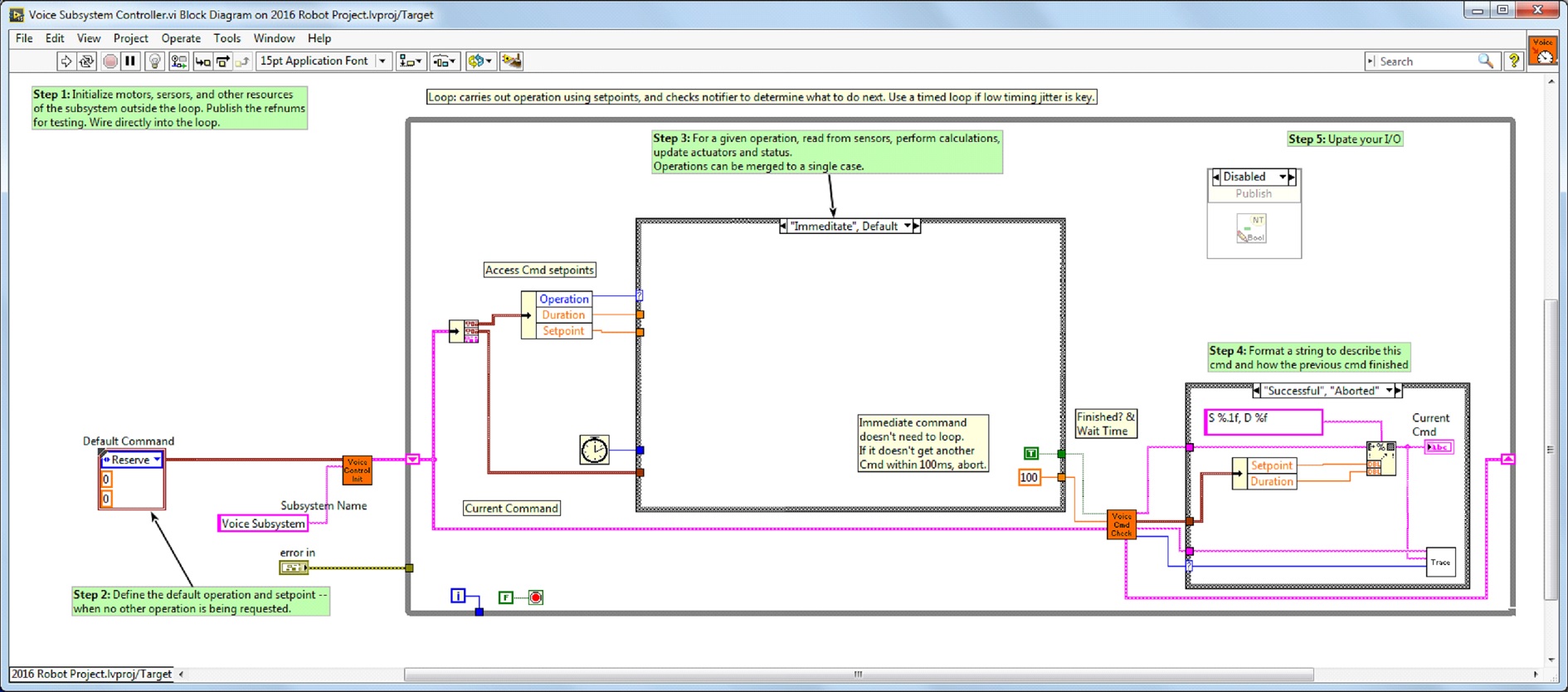

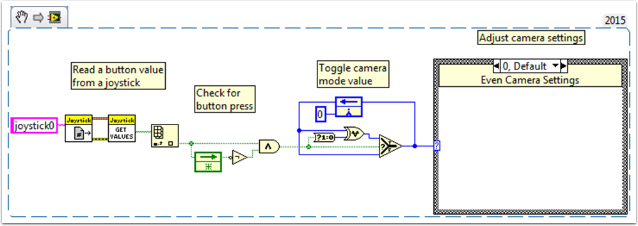

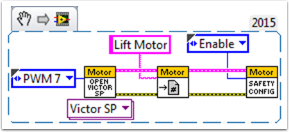

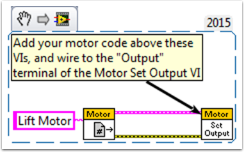

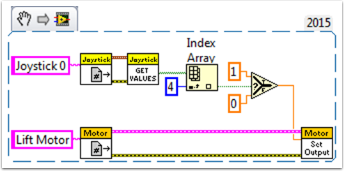

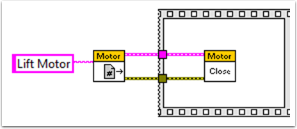

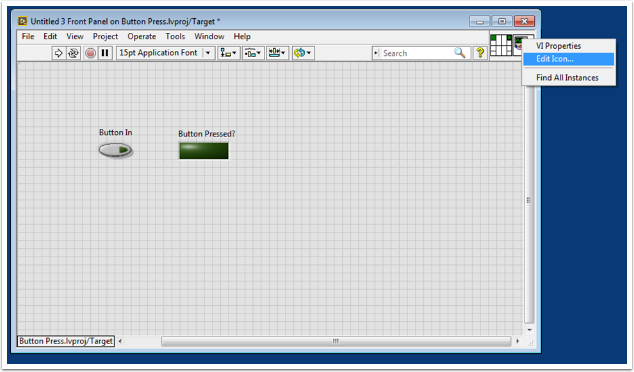

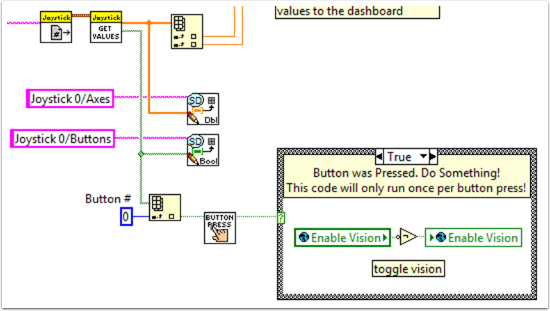

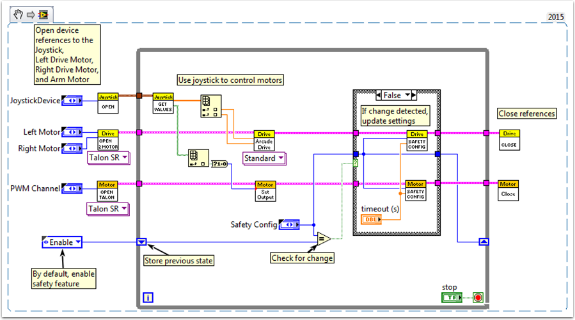

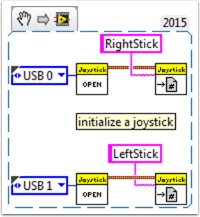

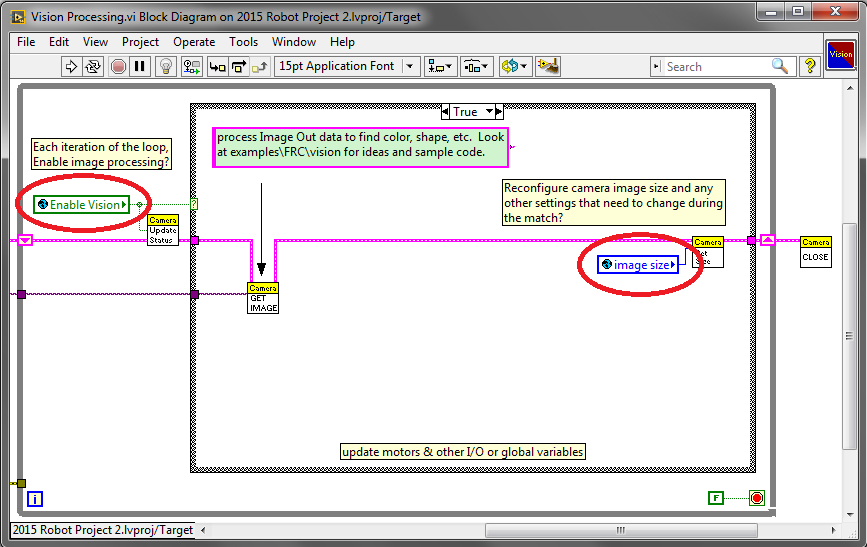

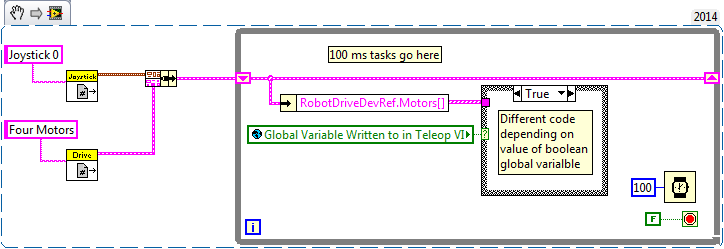

Creating your Benchtop Test Program (LabVIEW)

Note

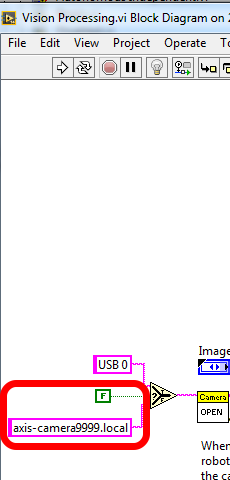

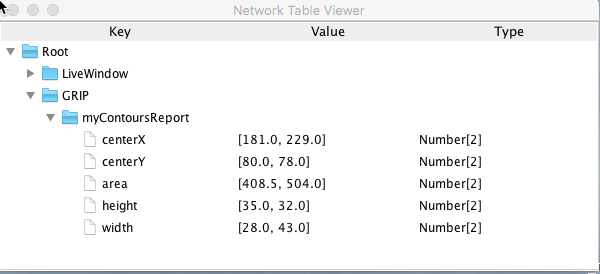

This document covers how to create, build and load an FRC® LabVIEW program onto a roboRIO. Before beginning, make sure that you have installed LabVIEW for FRC and the FRC Game Tools and that you have configured and imaged your roboRIO as described in the Zero-to-Robot tutorial.

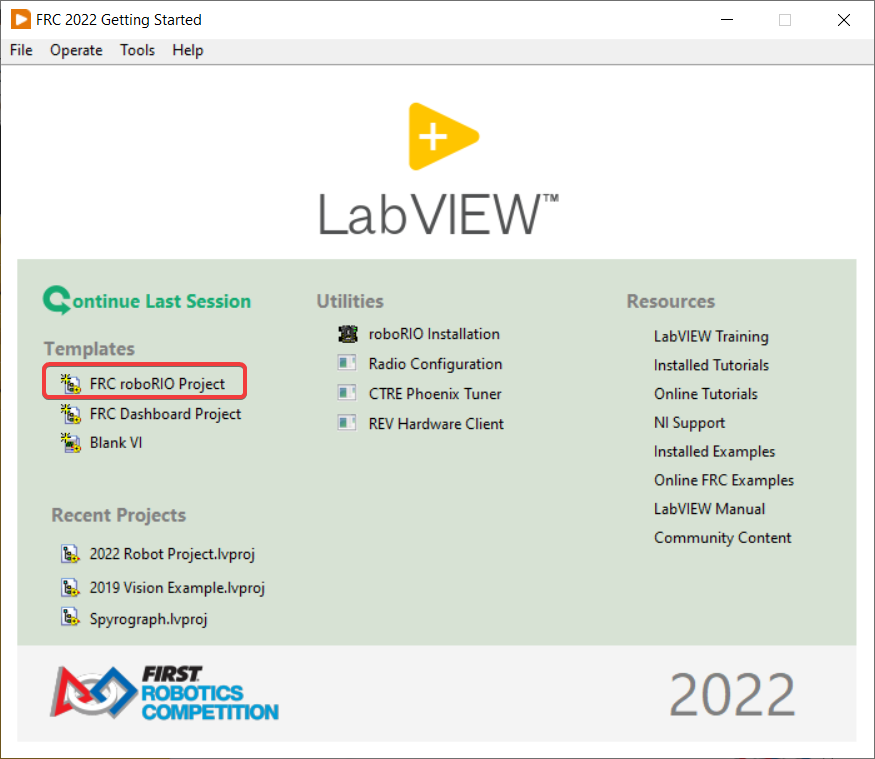

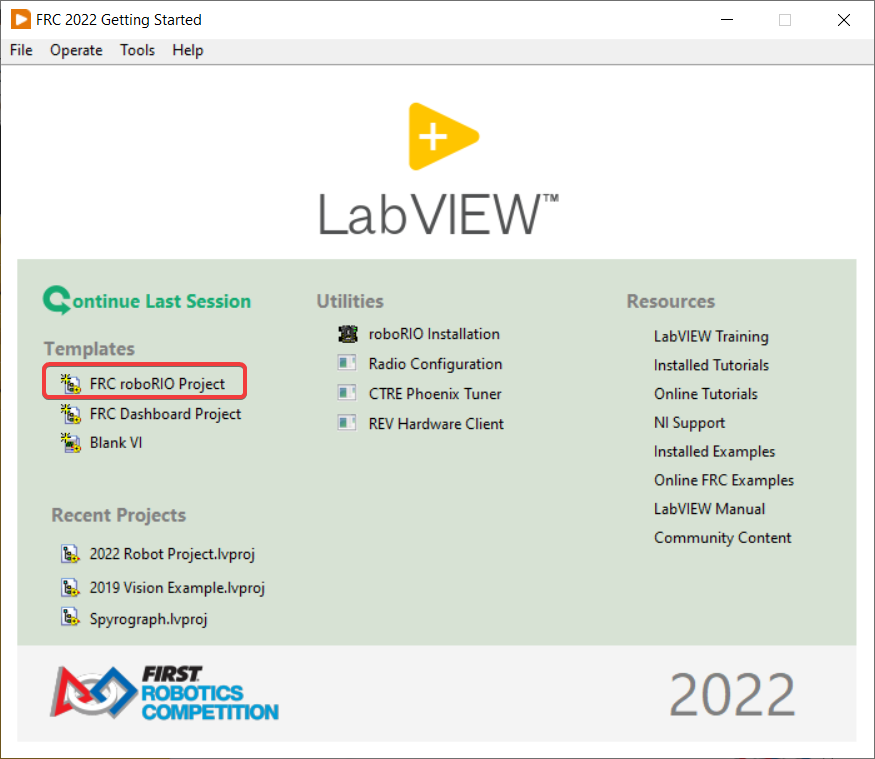

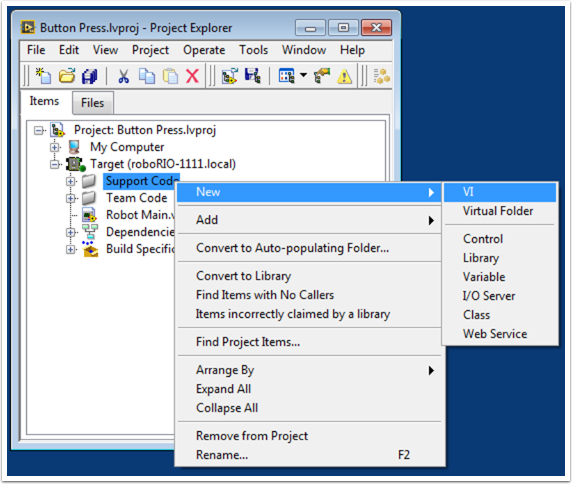

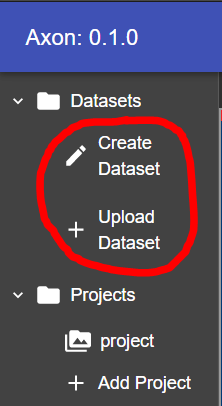

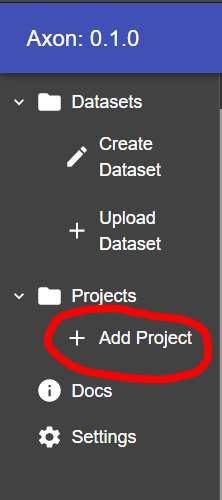

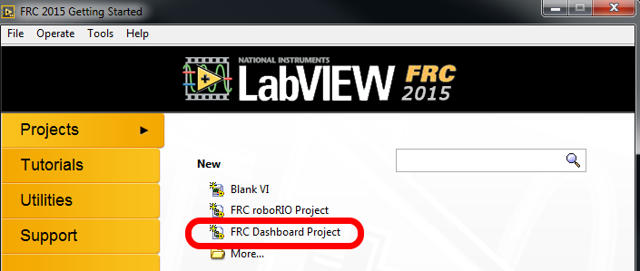

Creating a Project

Launch LabVIEW and click the FRC roboRIO Robot Project link to display the Create New FRC Robot Project dialog box.

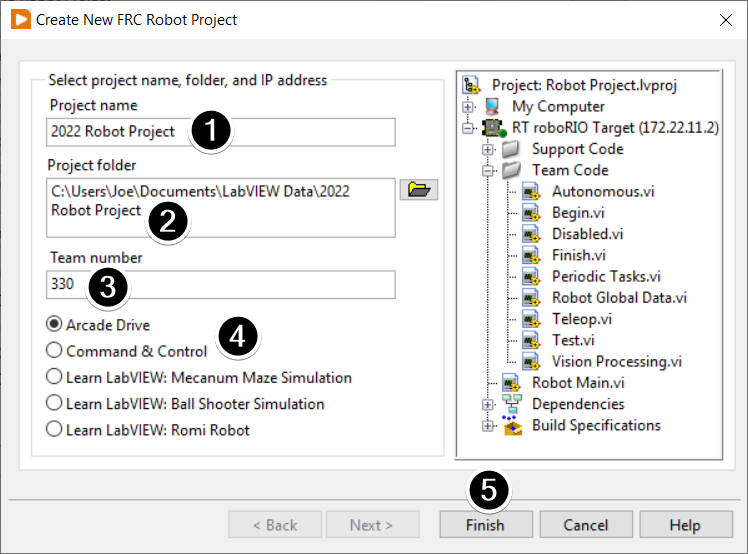

Configuring Project

Fill in the Create New FRC Project Dialog:

Pick a name for your project

Select a folder to place the project in.

Enter your team number

Select a project type. If unsure, select Arcade Drive.

Click Finish

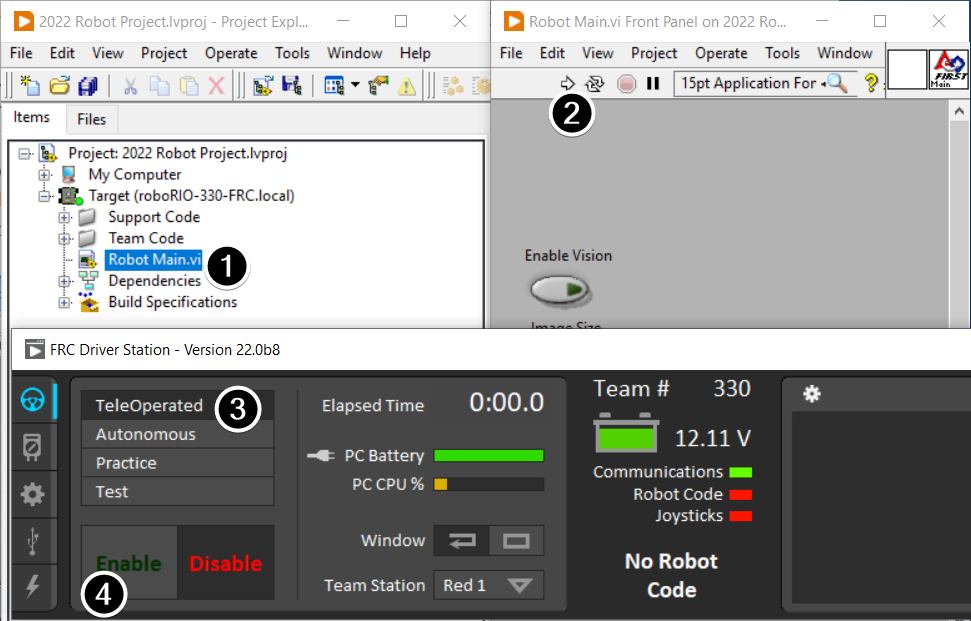

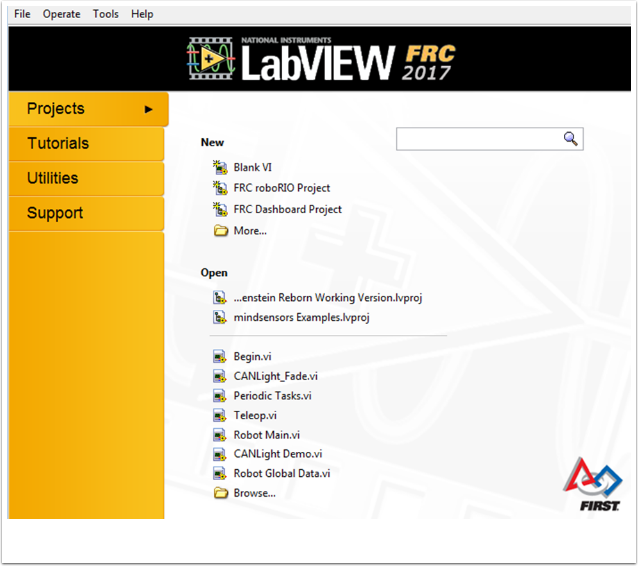

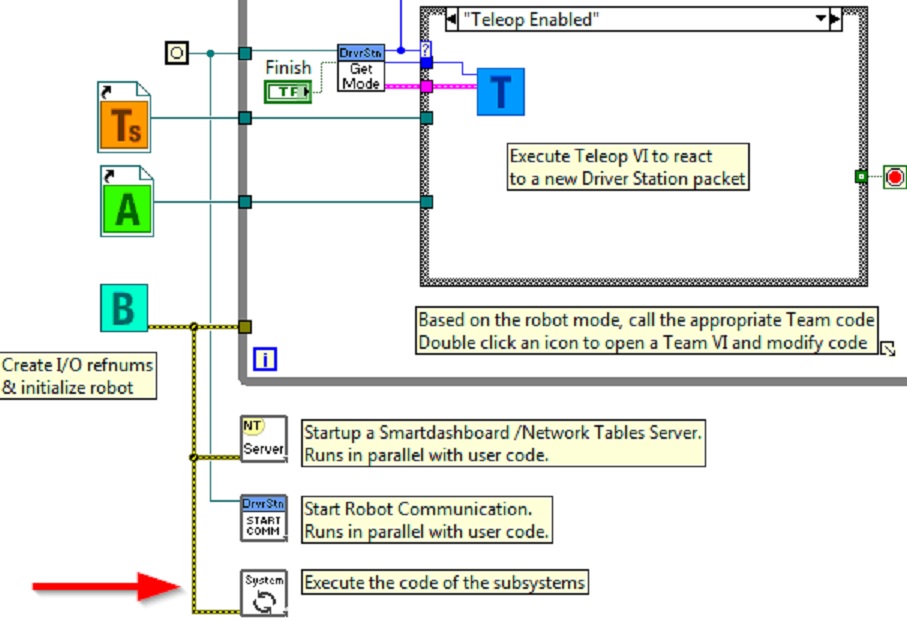

Running the Program

Note

A program deployed this way will not remain on the roboRIO after a power cycle. To deploy a program that runs every time the roboRIO starts, follow the next step, Deploying the Program.

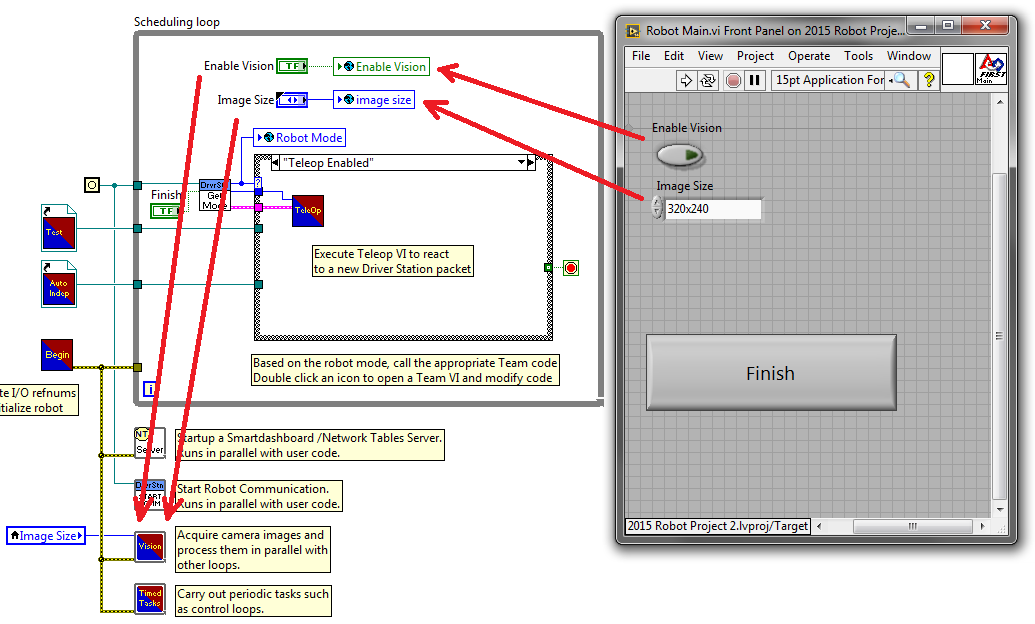

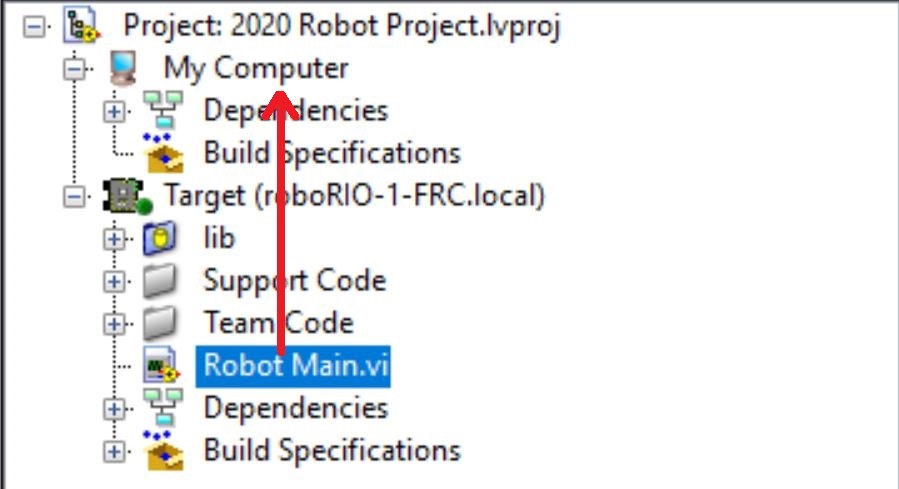

In the Project Explorer window, double-click the Robot Main.vi item to open the Robot Main VI.

Click the Run button (White Arrow on the top ribbon) of the Robot Main VI to deploy the VI to the roboRIO. LabVIEW deploys the VI, all items required by the VI, and the target settings to memory on the roboRIO. If prompted to save any VIs, click Save on all prompts.

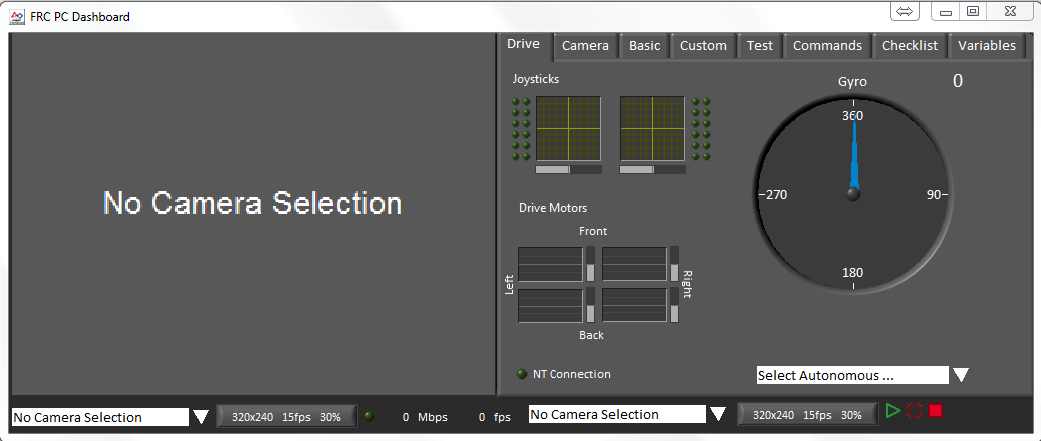

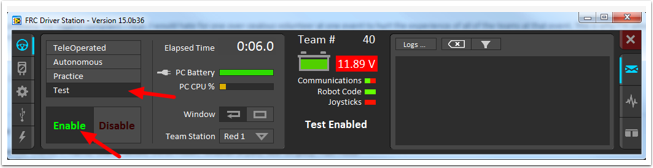

Using the Driver Station software, put the robot in Teleop Mode. For more information on configuring and using the Driver Station software, see the FRC Driver Station Software article.

Click Enable.

Move the joysticks and observe how the robot responds.

Click the Abort button of the Robot Main VI. Notice that the VI stops. When you deploy a program with the Run button, the program runs on the roboRIO, but you can manipulate the front panel objects of the program from the host computer.

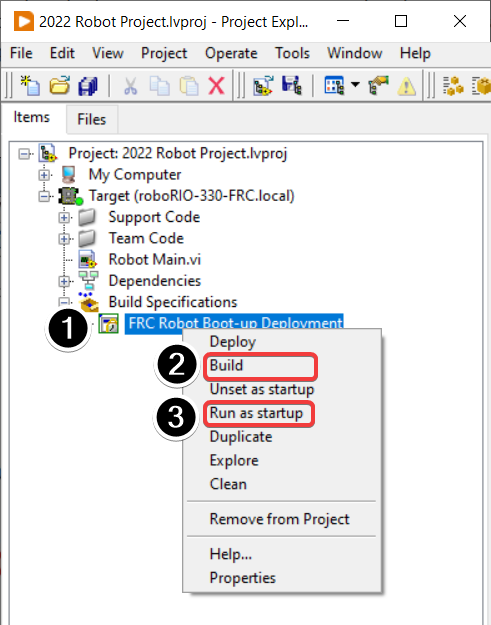

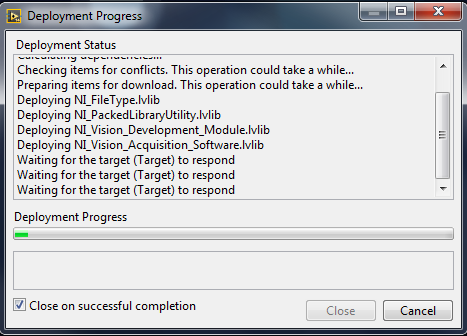

Deploying the Program

To run in the competition, you will need to deploy a program to your roboRIO. This allows the program to survive across reboots of the controller, but doesn’t allow the same debugging features (front panel, probes, highlight execution) as running from the front panel. To deploy your program:

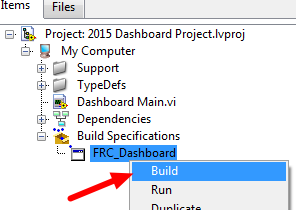

In the Project Explorer, click the + next to Build Specifications to expand it.

Right-click on FRC Robot Boot-up Deployment and select Build. Wait for the build to complete.

Right-click again on FRC Robot Boot-Up Deployment and select Run as Startup. If you receive a conflict dialog, click OK. This dialog simply indicates that there is currently a program on the roboRIO which will be terminated/replaced.

Either check the box to close the deployment window on successful completion or click the close button when the deployment completes.

The roboRIO will automatically start running the deployed code within a few seconds of the dialog closing.

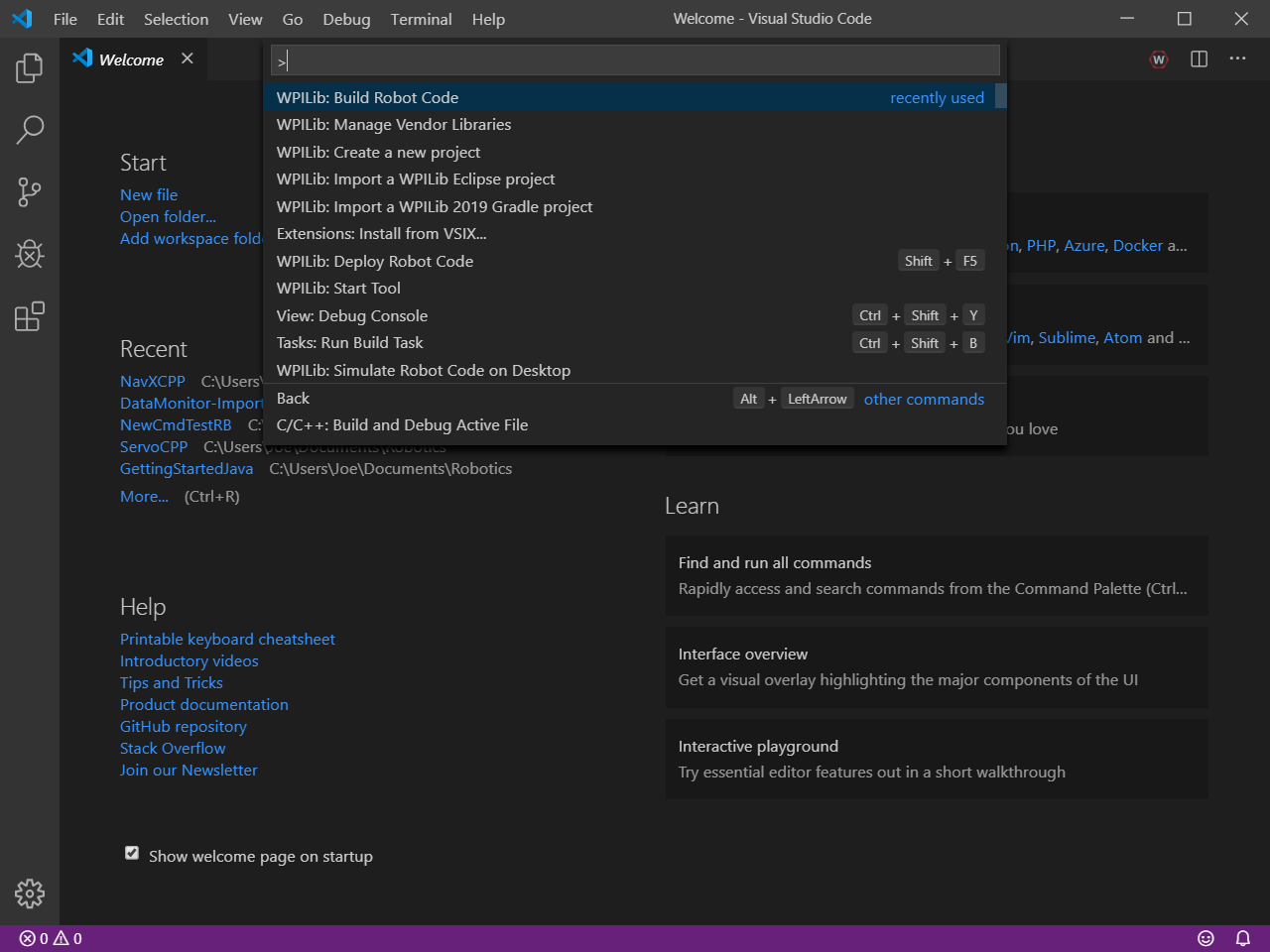

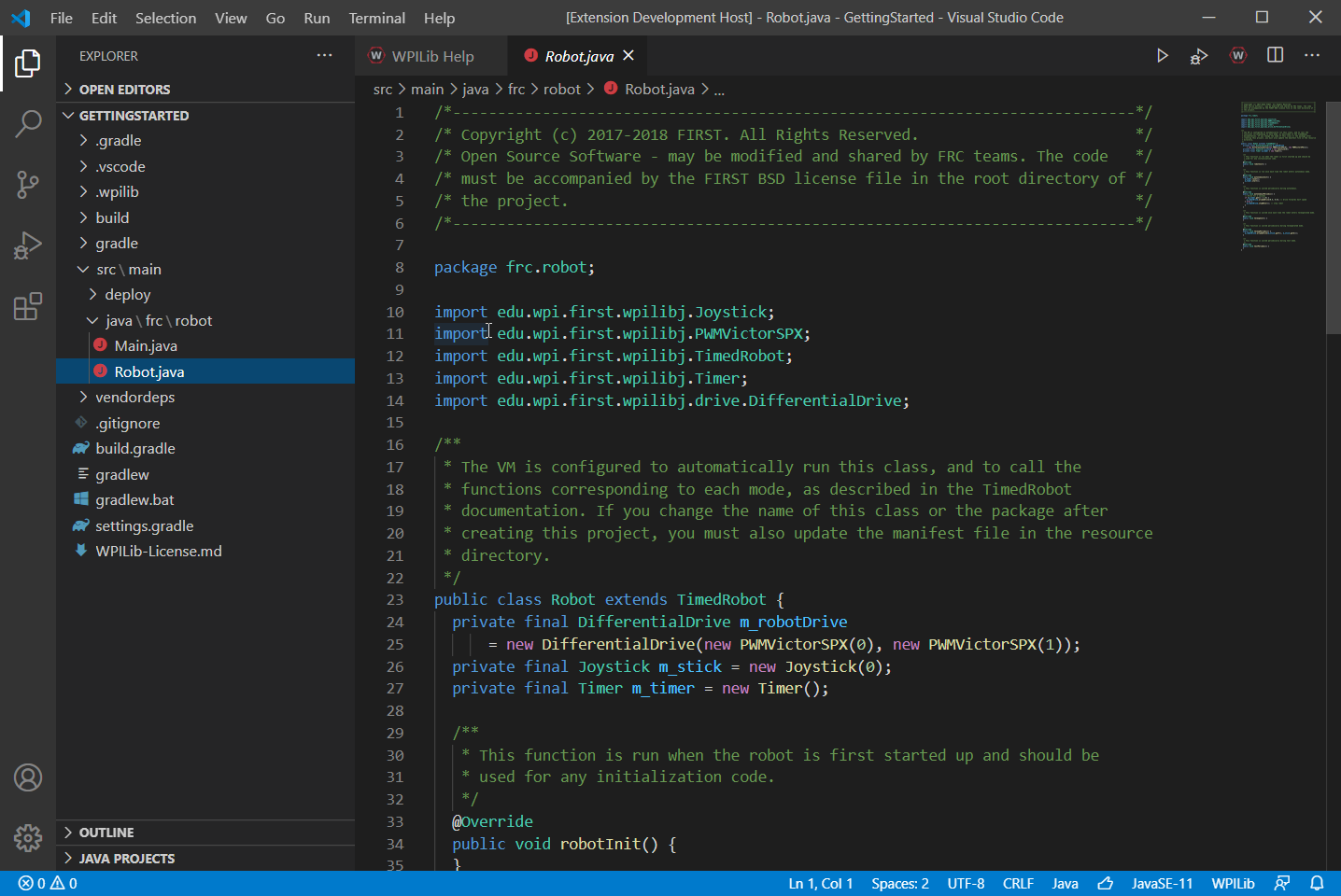

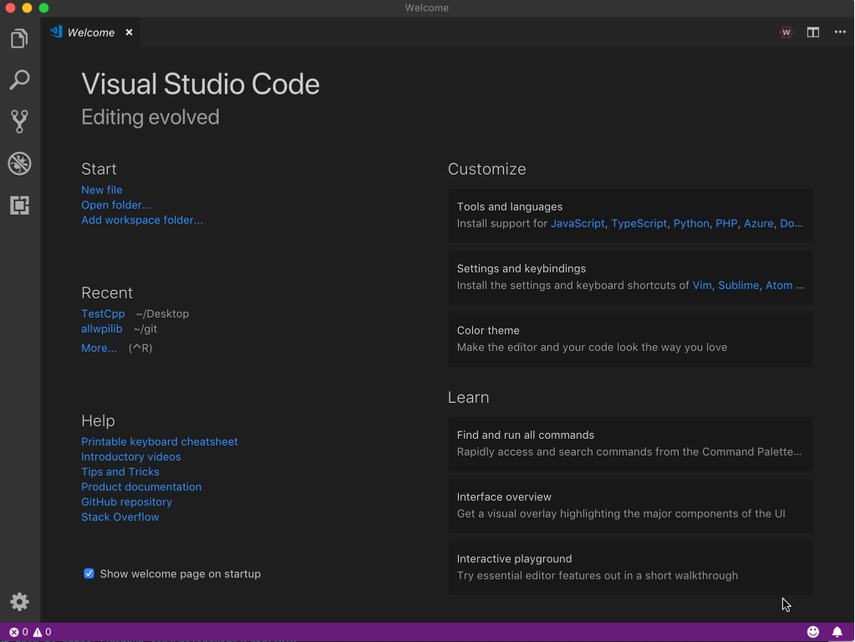

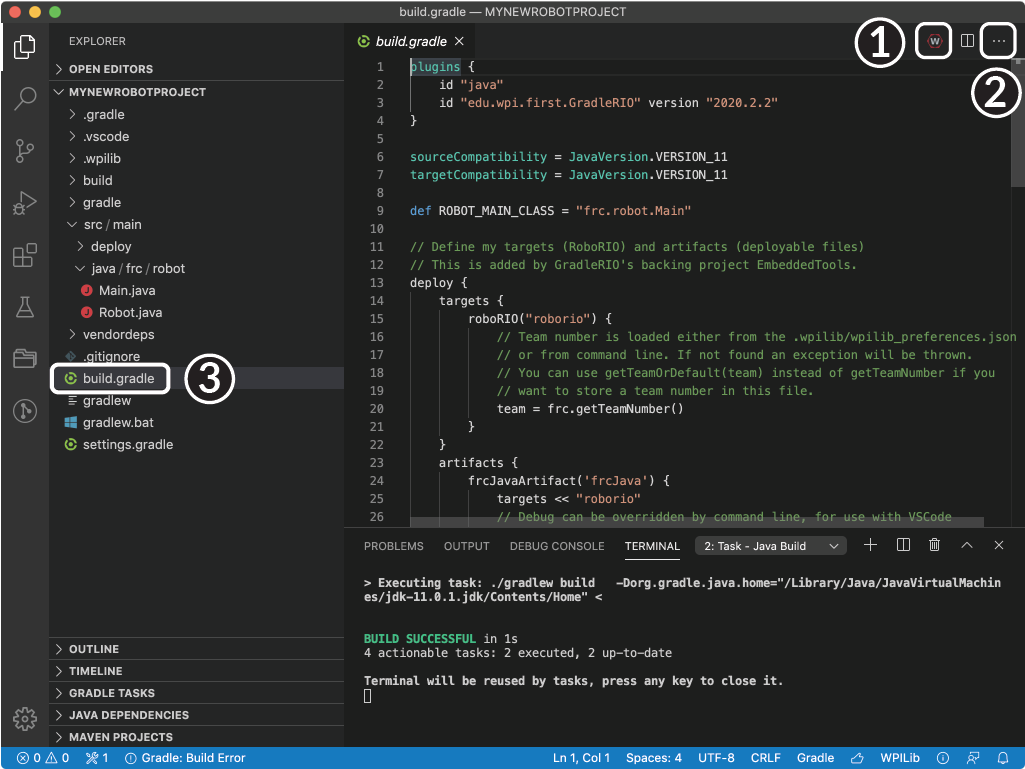

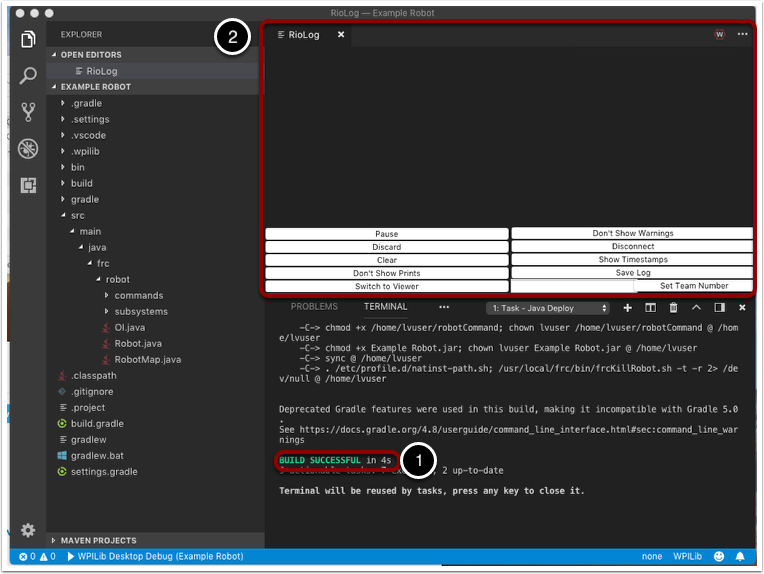

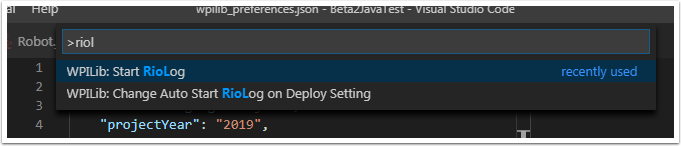

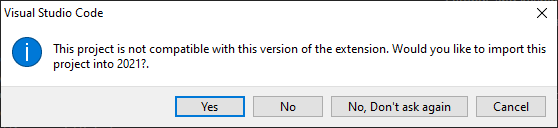

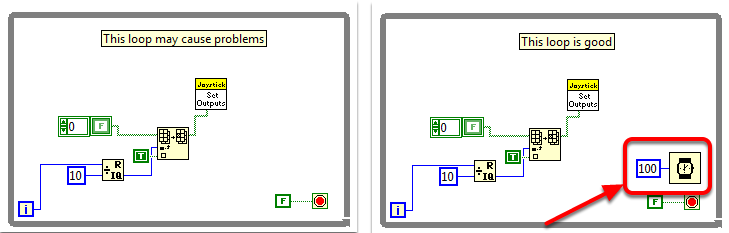

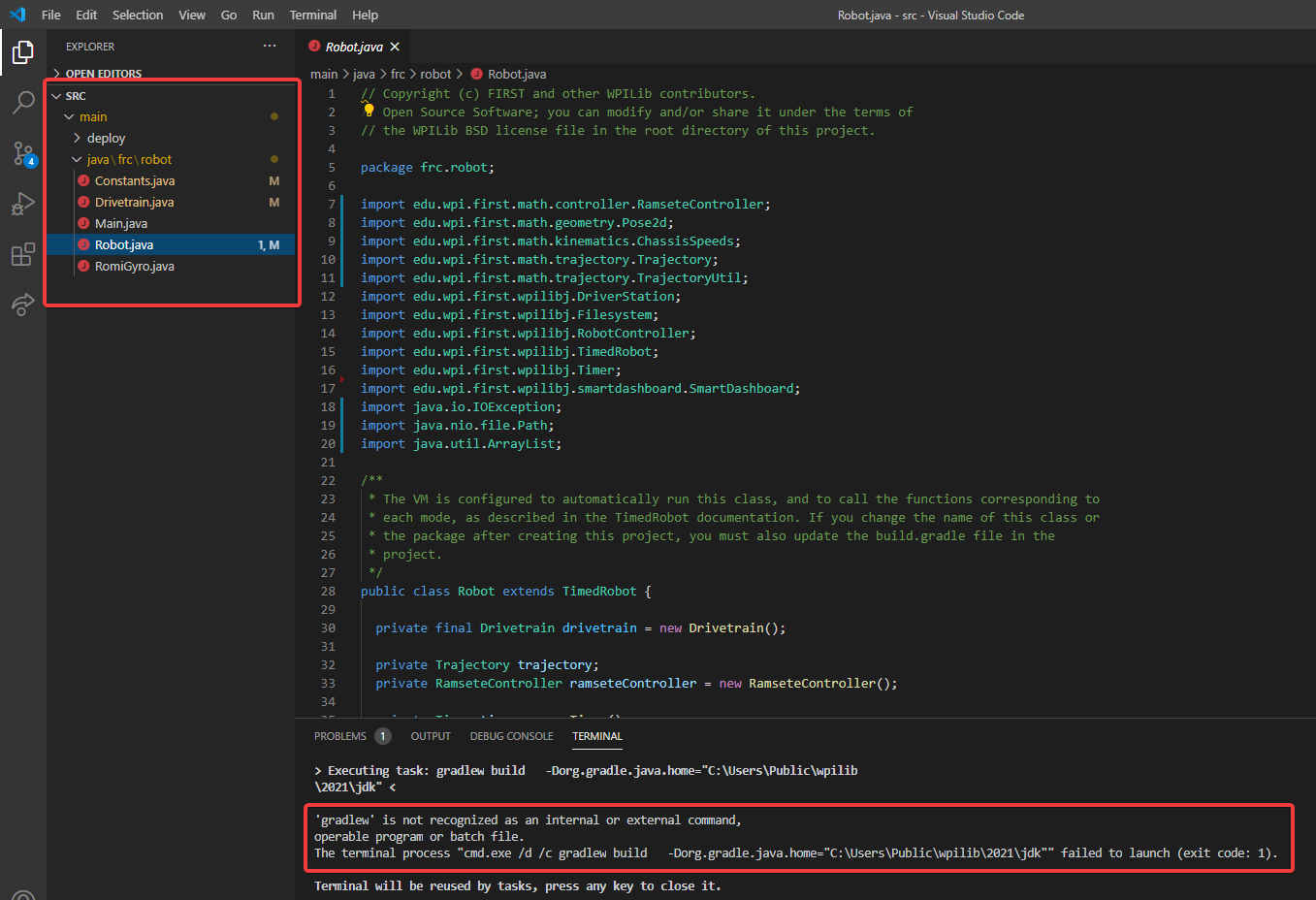

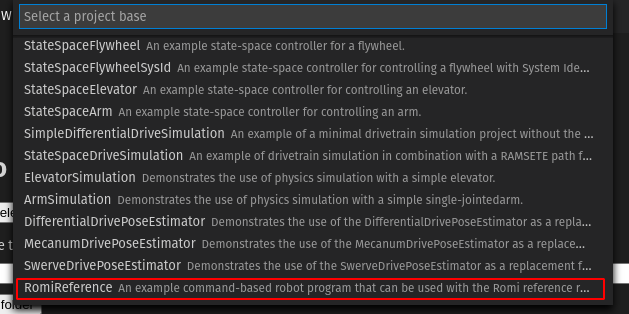

Creating your Benchtop Test Program (C++/Java)

Once everything is installed, we’re ready to create a robot program. WPILib comes with several templates for robot programs. Use of these templates is highly recommended for new users; however, advanced users are free to write their own robot code from scratch. This article walks through creating a project from one of the provided examples which has some code already written to drive a basic robot.

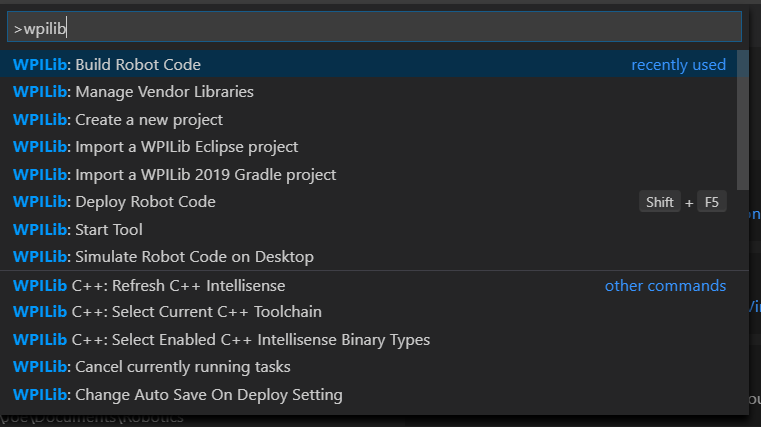

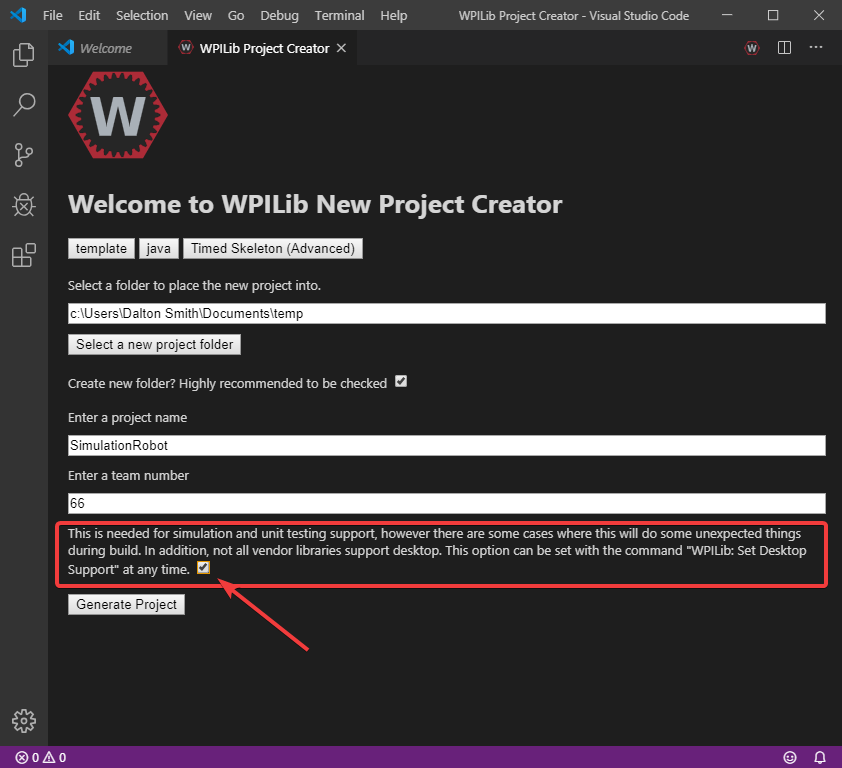

Creating a New WPILib Project

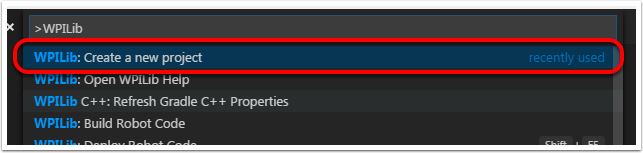

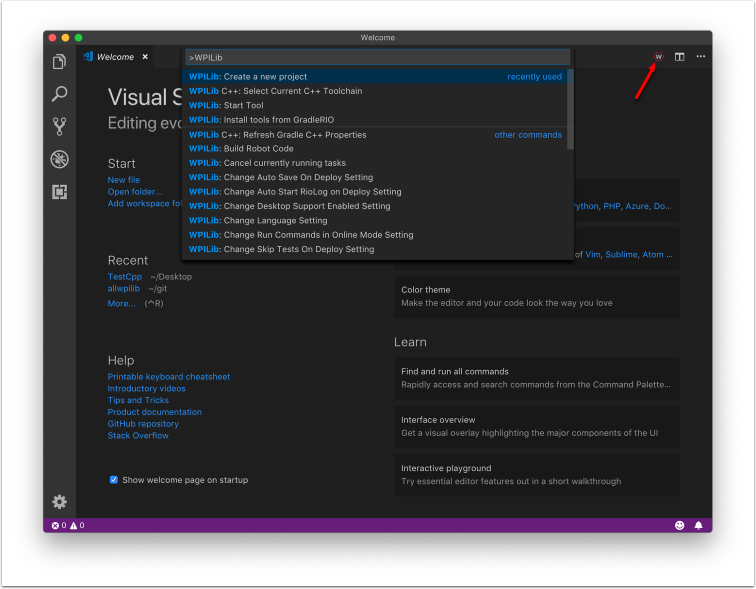

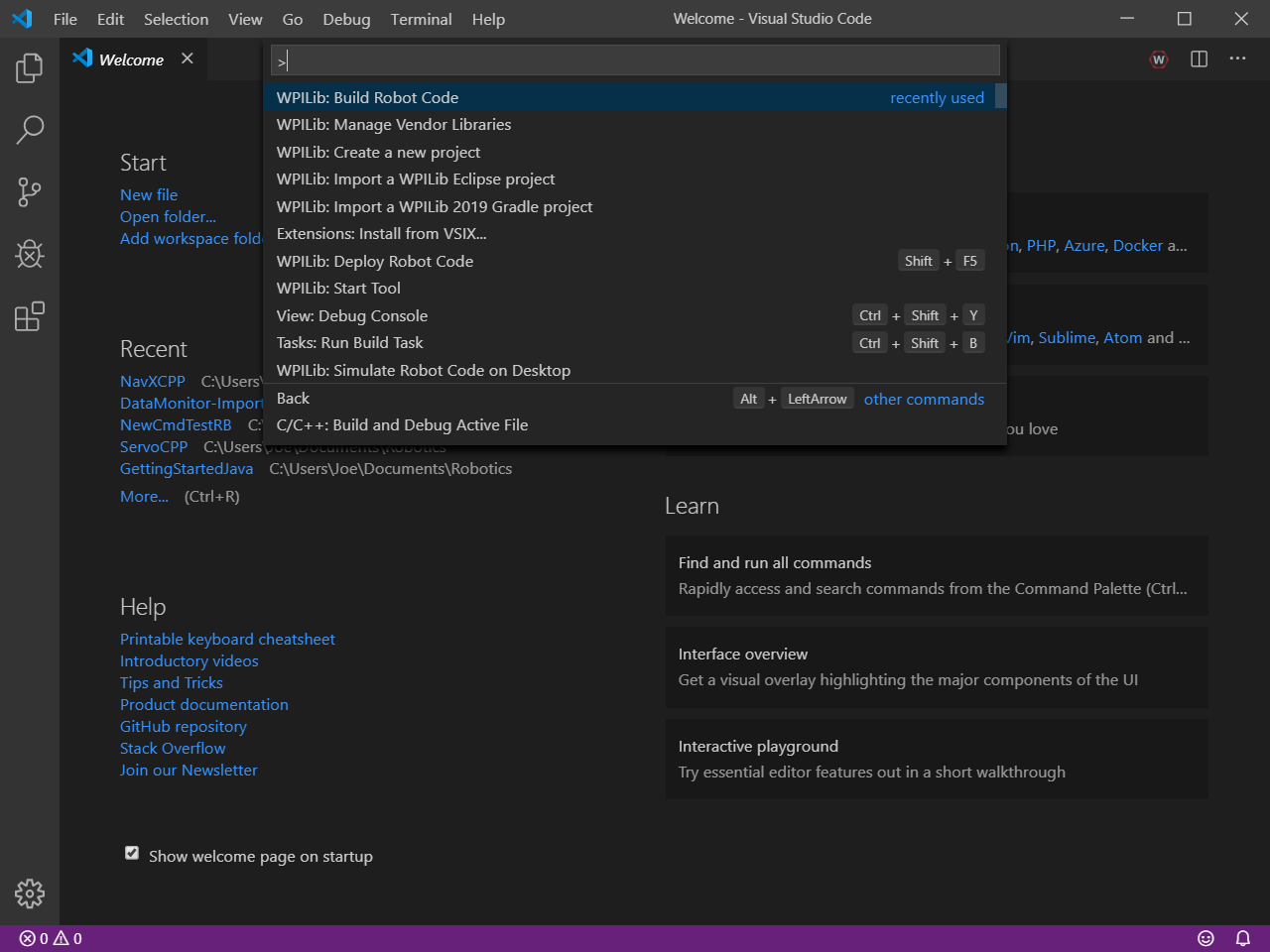

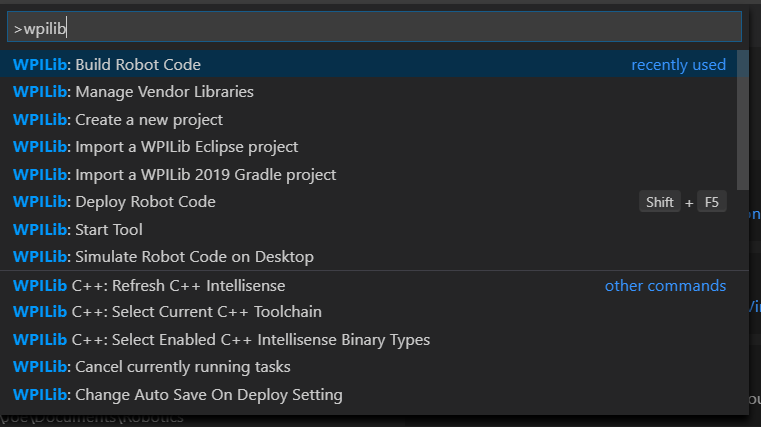

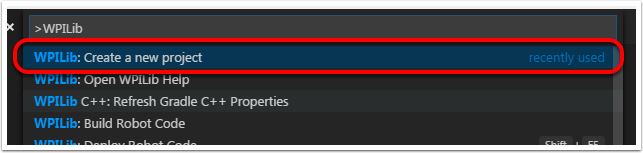

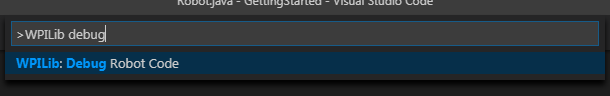

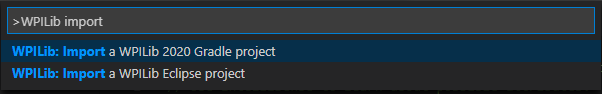

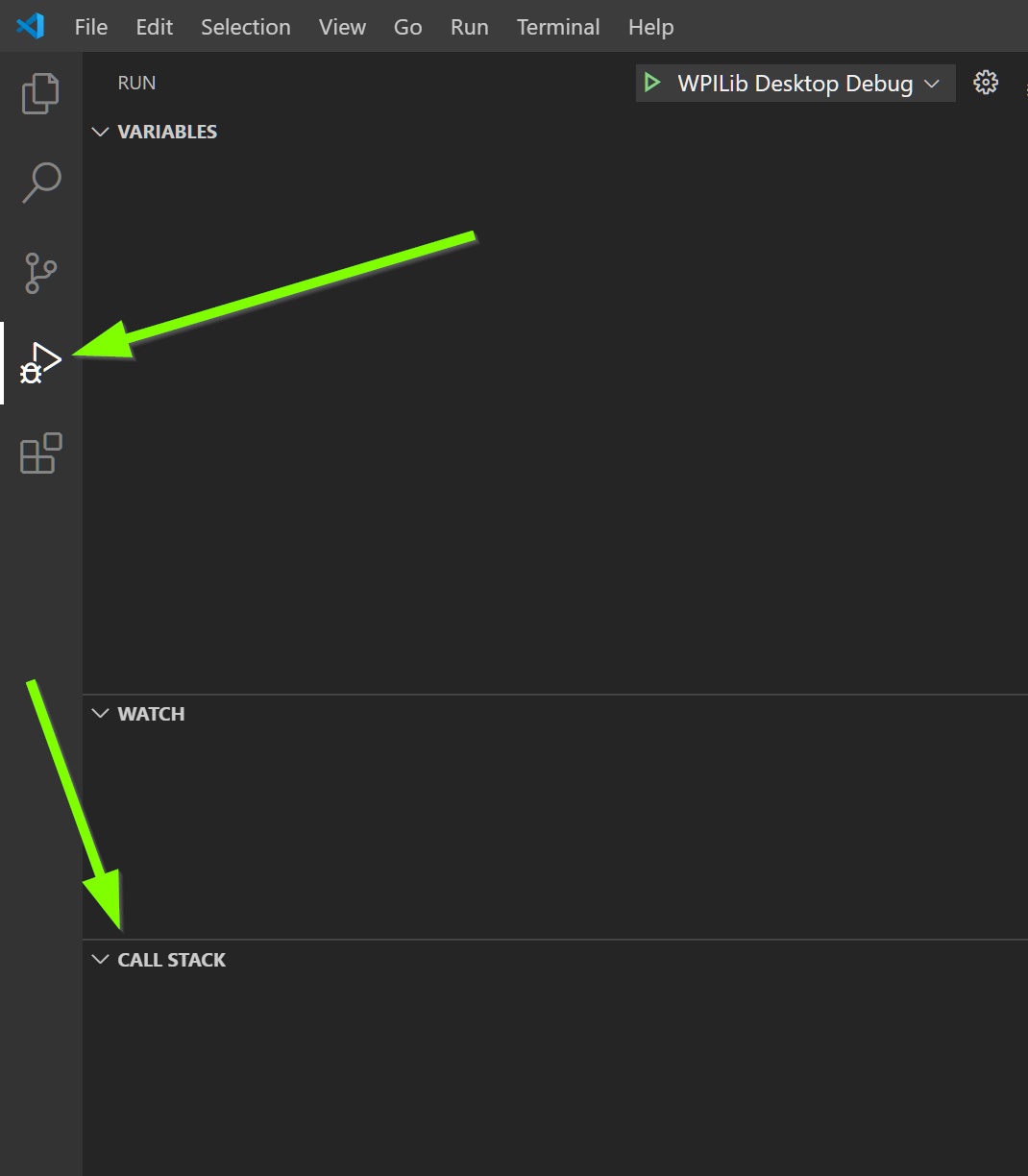

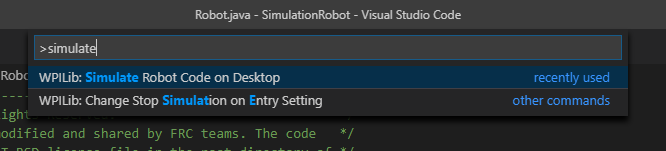

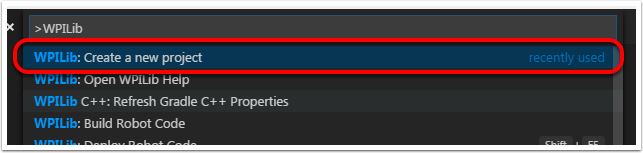

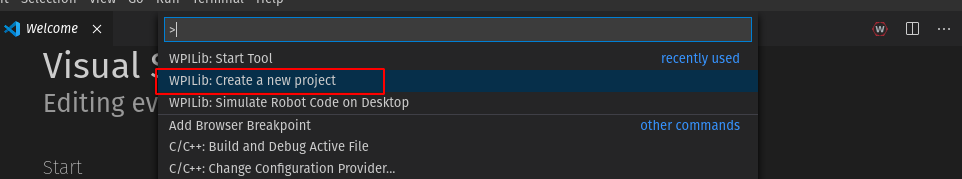

Bring up the Visual Studio Code command palette with Ctrl+Shift+P:

Then, type “WPILib” into the prompt. Since all WPILib commands start with “WPILib,” this will bring up the list of WPILib-specific VS Code commands:

Now, select the “Create a new project” command:

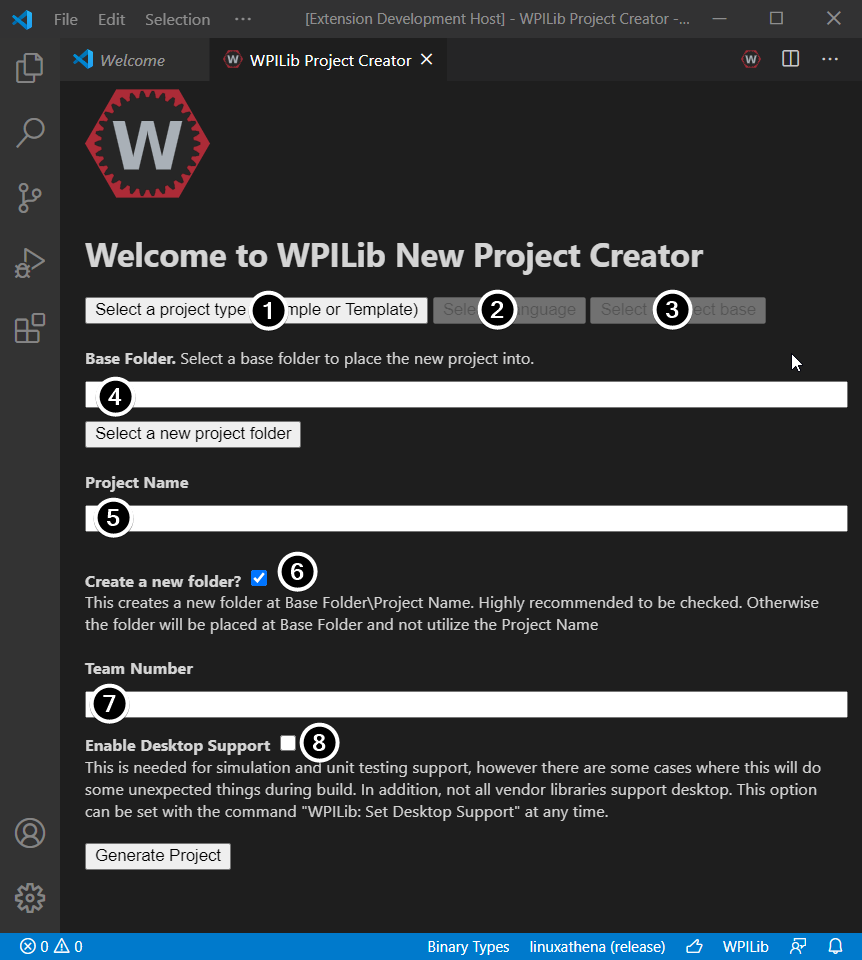

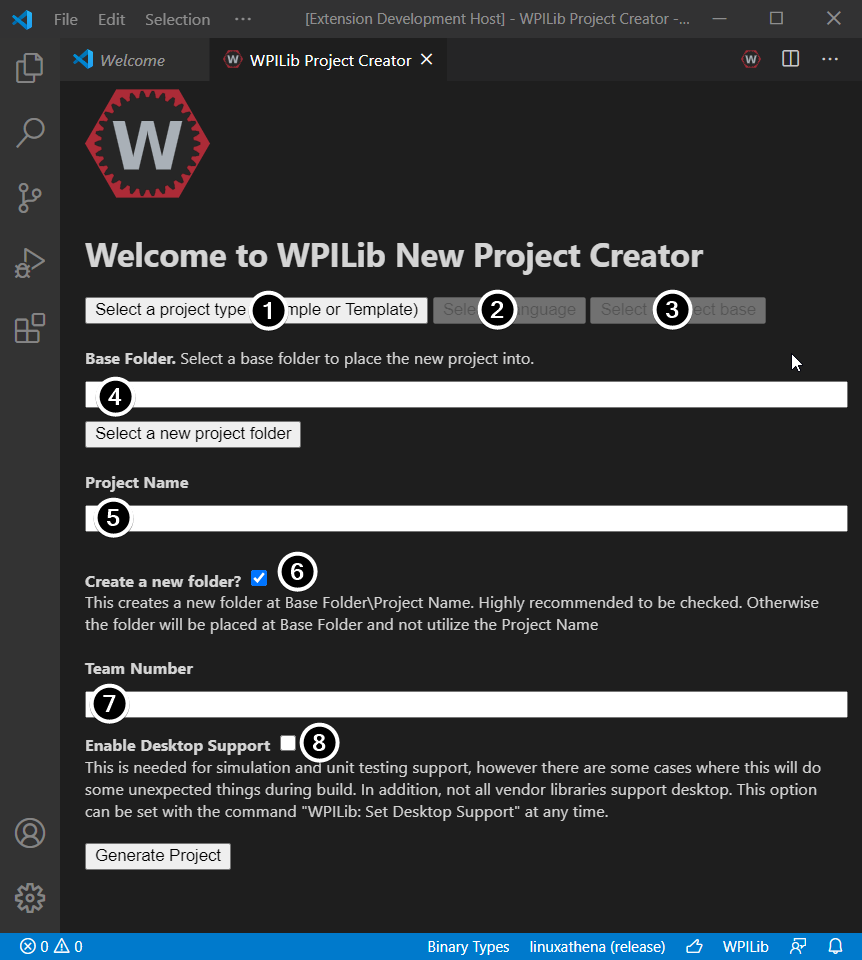

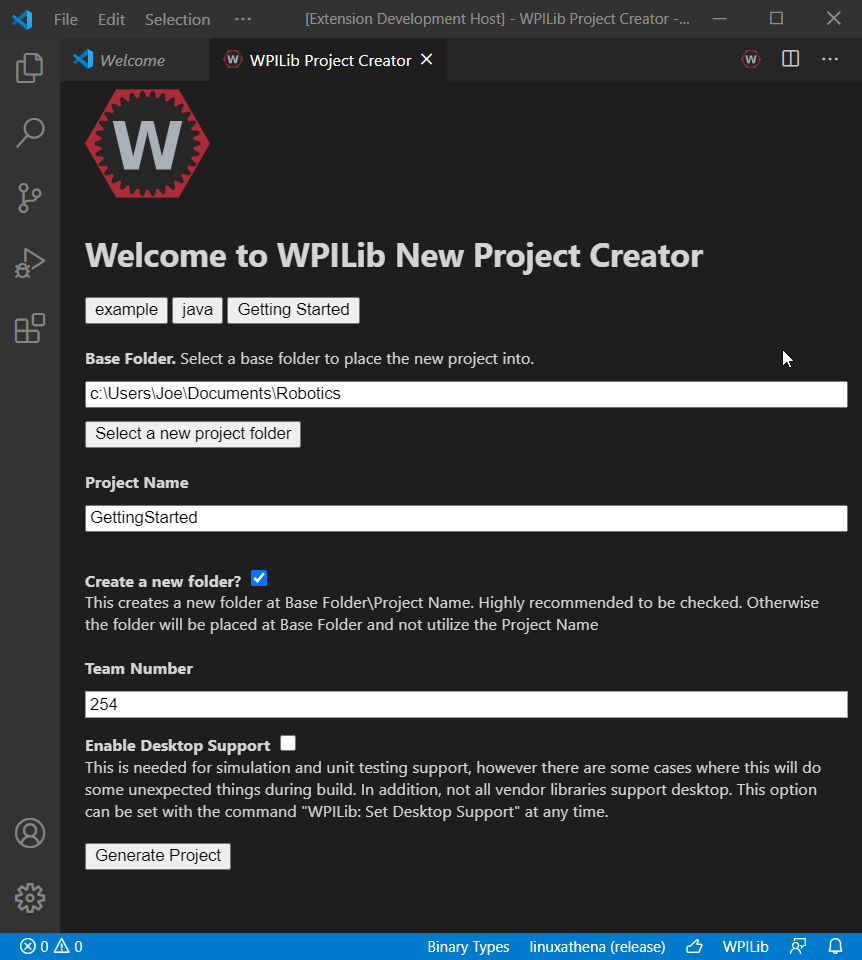

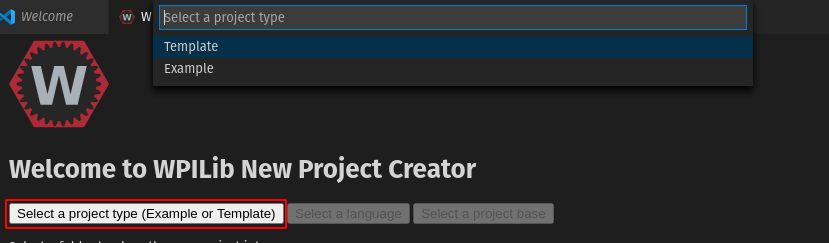

This will bring up the “New Project Creator Window:”

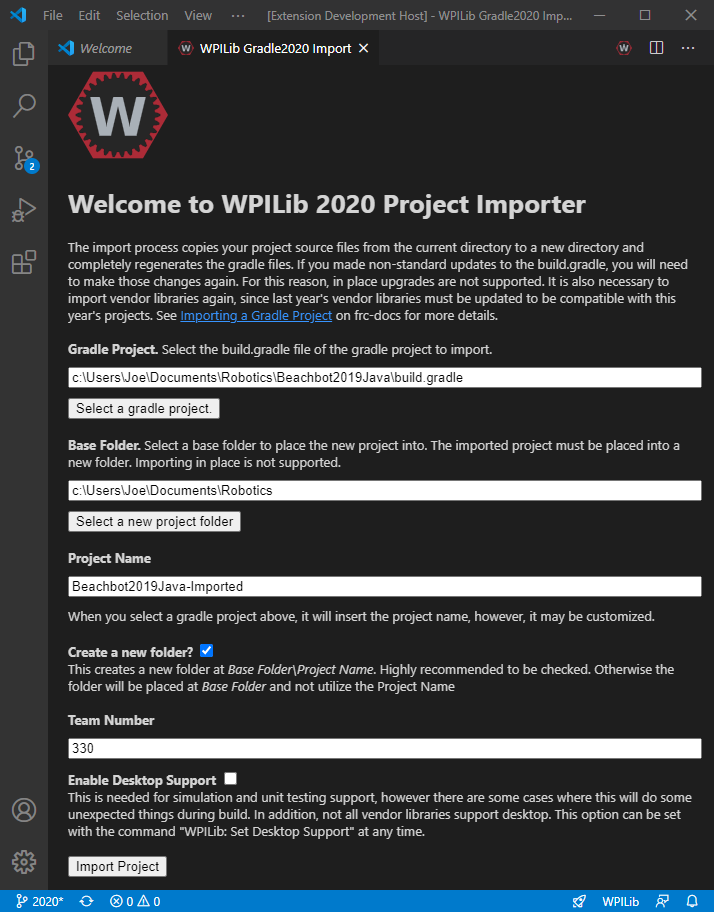

The elements of the New Project Creator Window are explained below:

Project Type: The kind of project we wish to create. For this example, select Example.

Language: This is the language (C++ or Java) that will be used for this project.

Project Base: This box is used to select the base class or example to generate the project from. For this example, select Getting Started.

Base Folder: This determines the folder in which the robot project will be located.

Project Name: The name of the robot project. This also specifies the name that the project folder will be given if the Create New Folder box is checked.

Create a New Folder: If this is checked, a new folder will be created to hold the project within the previously-specified folder. If it is not checked, the project will be located directly in the previously-specified folder. An error will be thrown if the folder is not empty and this is not checked.

Team Number: The team number for the project, which will be used for package names within the project and to locate the robot when deploying code.

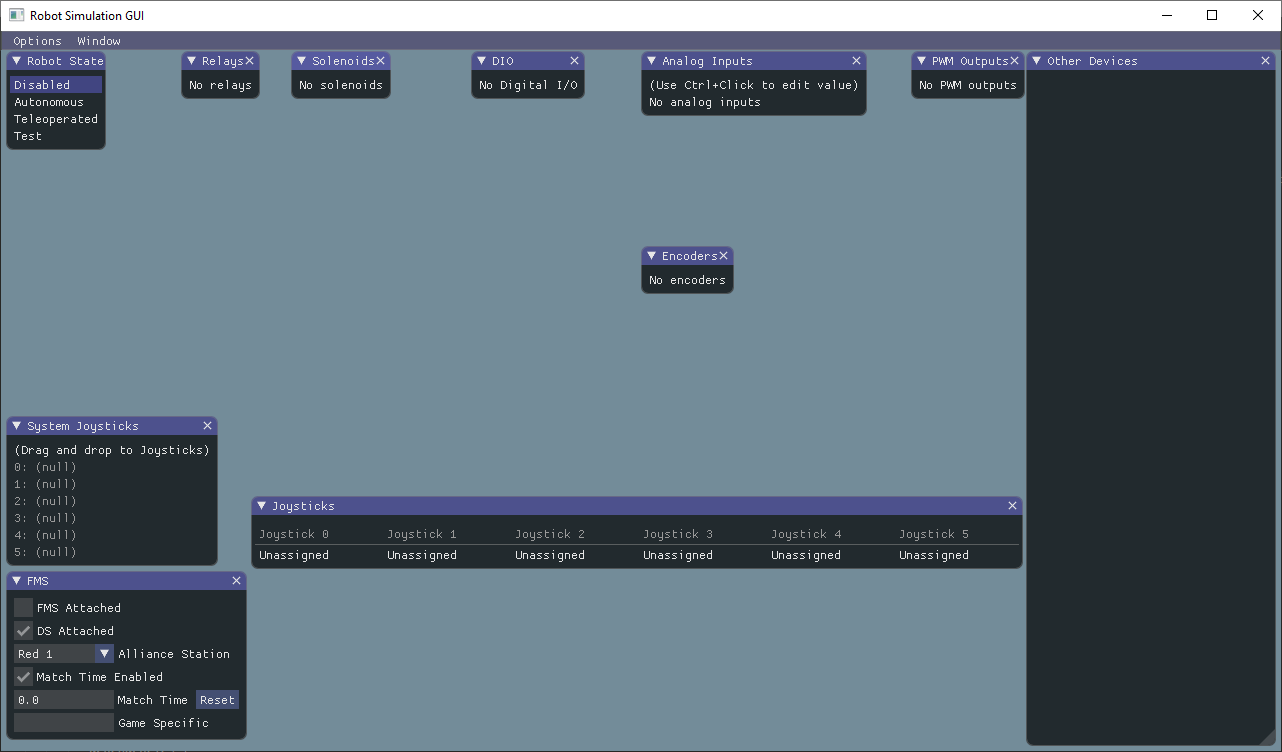

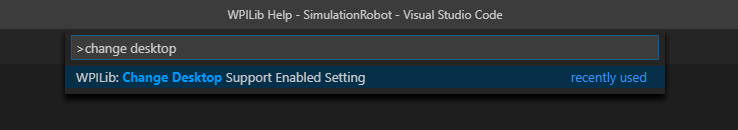

Enable Desktop Support: Enables unit testing and simulation. While WPILib supports this, third-party software libraries may not. If libraries do not support desktop, your code may not compile or may crash. It should be left unchecked unless unit testing or simulation is needed and all libraries support it. For this example, do not check this box.

Once all the above have been configured, click “Generate Project” and the robot project will be created.
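The way Base Folder, Project Name, and Create a New Folder combine to choose the project location can be sketched in Java. This is a hypothetical illustration of the rules described above (the `ProjectLocation` class and its methods are invented for the example), not the WPILib VS Code extension's actual code:

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.stream.Stream;

/** Hypothetical sketch of the project-location rules; not the WPILib extension's code. */
final class ProjectLocation {
    private ProjectLocation() {}

    static Path resolve(Path baseFolder, String projectName, boolean createNewFolder) {
        if (createNewFolder) {
            // A new subfolder named after the project is created inside the base folder.
            return baseFolder.resolve(projectName);
        }
        // Otherwise the project goes directly into the base folder, which must be empty.
        if (Files.isDirectory(baseFolder) && !isEmpty(baseFolder)) {
            throw new IllegalStateException("Base folder is not empty: " + baseFolder);
        }
        return baseFolder;
    }

    private static boolean isEmpty(Path dir) {
        try (Stream<Path> entries = Files.list(dir)) {
            return entries.findAny().isEmpty();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

With the box checked, a base folder of `robots` and a project name of `MyRobot` would place the project in `robots/MyRobot`; with it unchecked, the project would go straight into `robots`, which must then be empty.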

Note

Any errors in project generation will appear in the bottom right-hand corner of the screen.

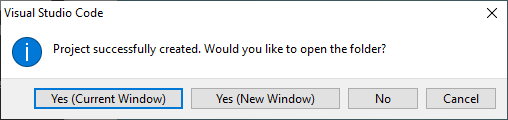

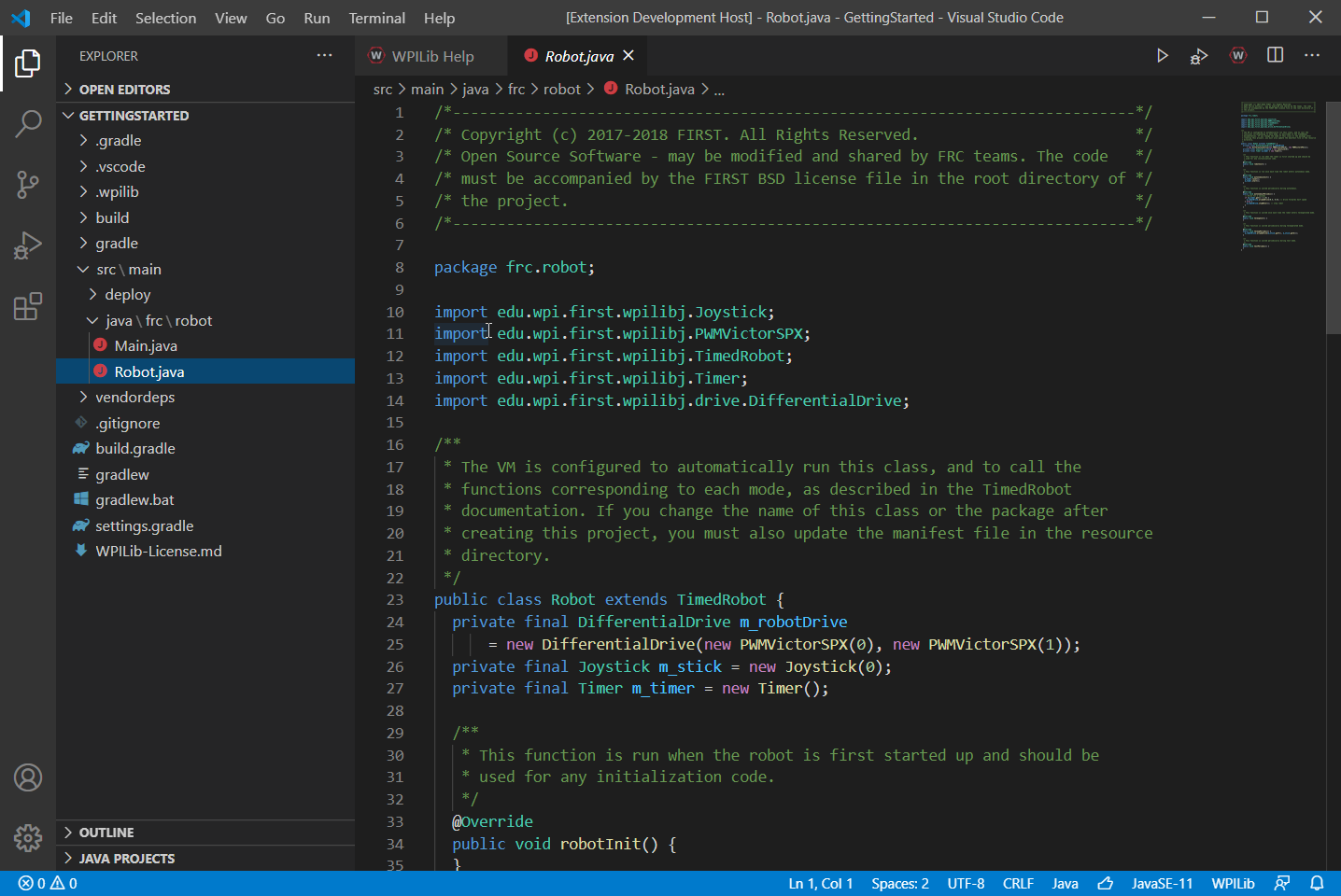

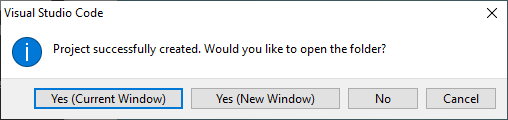

Opening The New Project

After successfully creating your project, VS Code will give the option of opening the project as shown above. We can choose to do that now, or later by pressing Ctrl+K then Ctrl+O (or just Command+O on macOS) and selecting the folder where we saved our project.

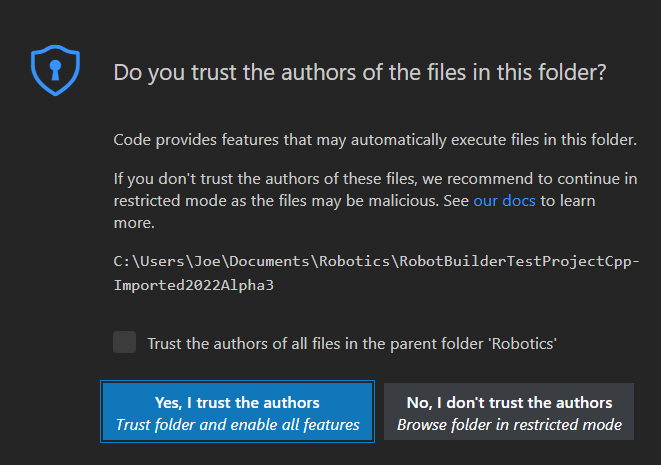

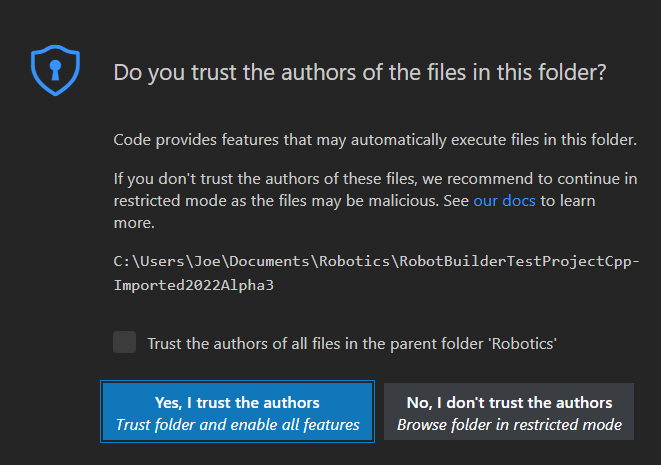

Click “Yes, I trust the authors”.

Once opened, we will see the project hierarchy on the left. Double-clicking a file will open it in the editor.

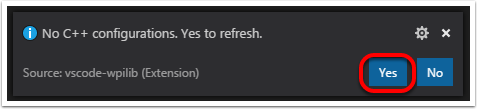

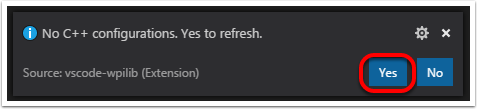

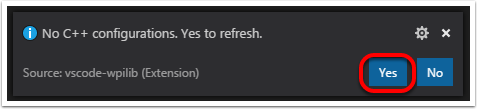

C++ Configurations (C++ Only)

For C++ projects, there is one more step to set up IntelliSense. Whenever we open a project, we should get a pop-up in the bottom right corner asking to refresh C++ configurations. Click “Yes” to set up IntelliSense.

Imports/Includes

```java
import edu.wpi.first.wpilibj.Joystick;
import edu.wpi.first.wpilibj.TimedRobot;
import edu.wpi.first.wpilibj.Timer;
import edu.wpi.first.wpilibj.drive.DifferentialDrive;
import edu.wpi.first.wpilibj.motorcontrol.PWMSparkMax;
```

```cpp
#include <frc/Joystick.h>
#include <frc/TimedRobot.h>
#include <frc/Timer.h>
#include <frc/drive/DifferentialDrive.h>
#include <frc/motorcontrol/PWMSparkMax.h>
```

Our code needs to reference the components of WPILib that are used. In C++ this is accomplished using #include statements; in Java it is done with import statements. The program references classes for Joystick (for driving), PWMSparkMax (for controlling motors), TimedRobot (the base class used for the example), Timer (used for autonomous), and DifferentialDrive (for connecting the joystick control to the motors).

Defining the variables for our sample robot

```java
public class Robot extends TimedRobot {
  private final PWMSparkMax m_leftDrive = new PWMSparkMax(0);
  private final PWMSparkMax m_rightDrive = new PWMSparkMax(1);
  private final DifferentialDrive m_robotDrive = new DifferentialDrive(m_leftDrive, m_rightDrive);
  private final Joystick m_stick = new Joystick(0);
  private final Timer m_timer = new Timer();

  // ... (the robot and mode methods follow in the class body)
```

```cpp
class Robot : public frc::TimedRobot {
 public:
  Robot() {
    // We need to invert one side of the drivetrain so that positive voltages
    // result in both sides moving forward. Depending on how your robot's
    // gearbox is constructed, you might have to invert the left side instead.
    m_right.SetInverted(true);
    m_robotDrive.SetExpiration(100_ms);
    m_timer.Start();
  }

  // ... (the mode methods follow in the class body)

 private:
  // Robot drive system
  frc::PWMSparkMax m_left{0};
  frc::PWMSparkMax m_right{1};
  frc::DifferentialDrive m_robotDrive{m_left, m_right};

  frc::Joystick m_stick{0};
  frc::Timer m_timer;
};
```

The sample robot in our examples has a joystick on USB port 0 for arcade drive and two motors on PWM ports 0 and 1. Here we create objects of type PWMSparkMax (one per drive motor), DifferentialDrive (m_robotDrive), Joystick (m_stick), and Timer (m_timer). This section of the code does two things:

Defines the variables as members of our Robot class.

Initializes the variables.

Note

The variable initializations for C++ are in the private section at the bottom of the class, which makes them private to the Robot class. The C++ constructor also inverts the right-side motor, sets the Motor Safety expiration to 0.1 seconds (the drive shuts off if it does not receive a command at least every 0.1 seconds), and starts the Timer used for autonomous.
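The Motor Safety behavior can be modeled as a watchdog that is "fed" with every drive command and trips when feeding stops. The sketch below is a simplified model with a caller-supplied clock (the `SafetyWatchdog` class is hypothetical), not WPILib's own MotorSafety implementation:

```java
/** Simplified Motor Safety model; time is supplied by the caller in seconds. */
final class SafetyWatchdog {
    private final double expirationSeconds;
    private double lastFeedTime;

    SafetyWatchdog(double expirationSeconds, double startTime) {
        this.expirationSeconds = expirationSeconds;
        this.lastFeedTime = startTime;
    }

    /** Call every time the drive receives a new command. */
    void feed(double now) {
        lastFeedTime = now;
    }

    /** True when no command has arrived within the expiration window, so motors should stop. */
    boolean isExpired(double now) {
        return now - lastFeedTime > expirationSeconds;
    }
}
```

With a 0.1 second expiration and a drive command every 20 ms loop, the watchdog never trips; if the code stalls for more than 0.1 seconds without commanding the drive, it does.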

Robot Initialization

```java
@Override
public void robotInit() {}
```

```cpp
void RobotInit() override {}
```

The RobotInit method runs once when the robot program starts up, after the constructor. The RobotInit for our sample program doesn't do anything; to run startup code, place it in the method above, which overrides the default (empty) implementation.

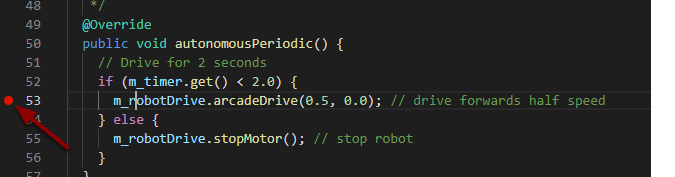

Simple Autonomous Example

```java
/** This function is run once each time the robot enters autonomous mode. */
@Override
public void autonomousInit() {
  m_timer.reset();
  m_timer.start();
}

/** This function is called periodically during autonomous. */
@Override
public void autonomousPeriodic() {
  // Drive for 2 seconds
  if (m_timer.get() < 2.0) {
    m_robotDrive.arcadeDrive(0.5, 0.0); // drive forwards half speed
  } else {
    m_robotDrive.stopMotor(); // stop robot
  }
}
```

```cpp
void AutonomousInit() override {
  m_timer.Reset();
  m_timer.Start();
}

void AutonomousPeriodic() override {
  // Drive for 2 seconds
  if (m_timer.Get() < 2_s) {
    // Drive forwards half speed
    m_robotDrive.ArcadeDrive(0.5, 0.0);
  } else {
    // Stop robot
    m_robotDrive.ArcadeDrive(0.0, 0.0);
  }
}
```

The AutonomousInit method is run once each time the robot transitions to autonomous from another mode. In this program, we reset the Timer and then start it in this method.

AutonomousPeriodic is run once every period while the robot is in autonomous mode. In the TimedRobot class the period is fixed and defaults to 20 ms. In this example, the periodic code checks whether the timer reads less than 2 seconds and, if so, drives forward at half speed using the ArcadeDrive method of the DifferentialDrive class. Once 2 seconds have elapsed, the code stops the robot drive.
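To see how the timer check and the 20 ms period interact, the logic can be run against a simulated clock with no robot hardware. This is an illustrative sketch: the `TimedAutoSim` class is hypothetical, and it counts time in whole milliseconds to avoid floating-point rounding at the 2 second boundary.

```java
/** Runs the timed-autonomous decision against a simulated clock (hypothetical helper). */
final class TimedAutoSim {
    static final int PERIOD_MS = 20; // TimedRobot's default loop period

    private TimedAutoSim() {}

    /** Forward speed commanded at a given time since AutonomousInit reset the timer. */
    static double autonomousSpeed(int elapsedMs) {
        // Drive forwards at half speed for the first 2 seconds, then stop.
        return elapsedMs < 2000 ? 0.5 : 0.0;
    }

    /** Counts how many 20 ms loops command motion during a window of the given length. */
    static int loopsDriving(int windowMs) {
        int driving = 0;
        for (int t = 0; t < windowMs; t += PERIOD_MS) {
            if (autonomousSpeed(t) > 0.0) {
                driving++;
            }
        }
        return driving;
    }
}
```

Over a 15 second window (a hypothetical autonomous length), `loopsDriving(15000)` counts 100 driving loops: 2 seconds divided by 20 ms per loop. Every later loop commands a stop.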

Joystick Control for teleoperation

```java
/** This function is called once each time the robot enters teleoperated mode. */
@Override
public void teleopInit() {}

/** This function is called periodically during teleoperated mode. */
@Override
public void teleopPeriodic() {
  // Pushing the joystick forward produces a negative Y value, so negate it
  // so that "forward on the stick" drives the robot forward.
  m_robotDrive.arcadeDrive(-m_stick.getY(), m_stick.getX());
}
```

```cpp
void TeleopInit() override {}

void TeleopPeriodic() override {
  // Drive with arcade style (use right stick)
  m_robotDrive.ArcadeDrive(-m_stick.GetY(), m_stick.GetX());
}
```

Like Autonomous, Teleop mode has TeleopInit and TeleopPeriodic functions. In this example there is nothing to do in TeleopInit; it is provided for illustration purposes only. In TeleopPeriodic, the code uses the ArcadeDrive method to map the Y-axis of the Joystick to forward/back motion of the drive motors and the X-axis to turning motion. The Y-axis value should be negated because pushing a joystick forward produces a negative Y value.
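Arcade drive combines one forward/back command and one turn command into separate left and right outputs. The sketch below shows a common sum/difference mixing approach with desaturation. It is an illustration under stated assumptions (the `ArcadeMixer` class is hypothetical, and positive turn means "turn right" here), not WPILib's exact DifferentialDrive implementation, whose details and sign conventions have changed between releases:

```java
/** Illustrative arcade mixer; not WPILib's DifferentialDrive code. */
final class ArcadeMixer {
    private ArcadeMixer() {}

    /**
     * @param forward forward/back command in [-1, 1]
     * @param turn    turn command in [-1, 1]; positive turns right (assumption for this sketch)
     * @return {left, right} motor outputs in [-1, 1]
     */
    static double[] mix(double forward, double turn) {
        double left = forward + turn;
        double right = forward - turn;
        // If either side exceeds full output, scale both down together to keep the turn ratio.
        double max = Math.max(Math.abs(left), Math.abs(right));
        if (max > 1.0) {
            left /= max;
            right /= max;
        }
        return new double[] {left, right};
    }

    /** Joysticks report negative Y when pushed forward, so negate it to get "forward". */
    static double forwardFromJoystickY(double joystickY) {
        return -joystickY;
    }
}
```

For example, full forward with full right turn (`mix(1.0, 1.0)`) would ask for 2.0 on the left and 0.0 on the right; desaturation scales that to 1.0 and 0.0 so the robot pivots around the right side instead of clipping.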

Test Mode

```java
/** This function is called once each time the robot enters test mode. */
@Override
public void testInit() {}

/** This function is called periodically during test mode. */
@Override
public void testPeriodic() {}
} // end of class Robot
```

```cpp
void TestInit() override {}

void TestPeriodic() override {}
```

Test Mode is used for testing robot functionality. Similar to TeleopInit, the TestInit and TestPeriodic methods are provided here for illustrative purposes only.
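Across all the modes, the pattern is the same: an Init method runs once when the robot enters a mode, and the matching Periodic method runs on every loop while it stays there. The hypothetical `ModeDispatchSketch` below records which methods such a loop would call. It is a simplified model that omits details such as robotPeriodic, and it is not WPILib's actual scheduler:

```java
import java.util.ArrayList;
import java.util.List;

/** Simplified model of TimedRobot-style mode dispatch; records method names only. */
final class ModeDispatchSketch {
    enum Mode { DISABLED, AUTONOMOUS, TELEOP, TEST }

    private final List<String> calls = new ArrayList<>();
    private Mode lastMode = null;

    ModeDispatchSketch() {
        calls.add("constructor");
        calls.add("robotInit"); // runs once, after the constructor
    }

    private static String prefix(Mode mode) {
        switch (mode) {
            case AUTONOMOUS: return "autonomous";
            case TELEOP: return "teleop";
            case TEST: return "test";
            default: return "disabled";
        }
    }

    /** One 20 ms iteration, given the mode currently requested by the Driver Station. */
    void loop(Mode current) {
        if (current != lastMode) {
            calls.add(prefix(current) + "Init"); // only on a mode transition
            lastMode = current;
        }
        calls.add(prefix(current) + "Periodic"); // every iteration
    }

    List<String> calls() {
        return calls;
    }
}
```

Two autonomous loops followed by one teleop loop would record `constructor, robotInit, autonomousInit, autonomousPeriodic, autonomousPeriodic, teleopInit, teleopPeriodic`.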

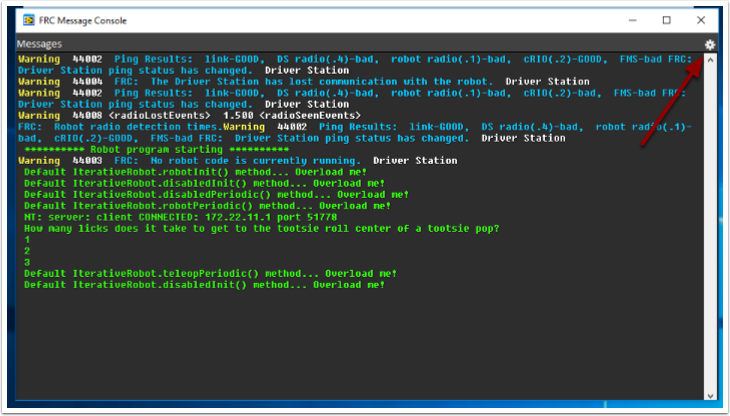

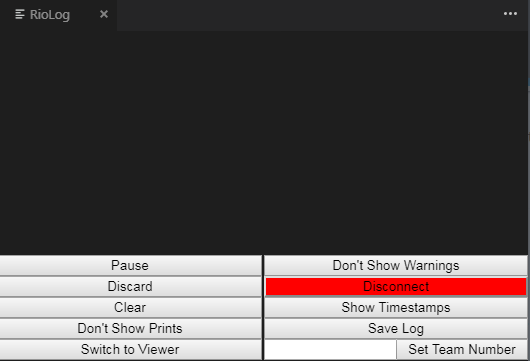

Running your Benchtop Test Program

Overview

You should create and download a Benchtop Test Program as described in the article for your programming language.

Tethered Operation

Running your benchtop testing program while tethered to the Driver Station via Ethernet or USB cable will confirm that the program was successfully deployed and that the Driver Station and roboRIO are properly configured.

The roboRIO should be powered on and connected to the PC over Ethernet or USB.

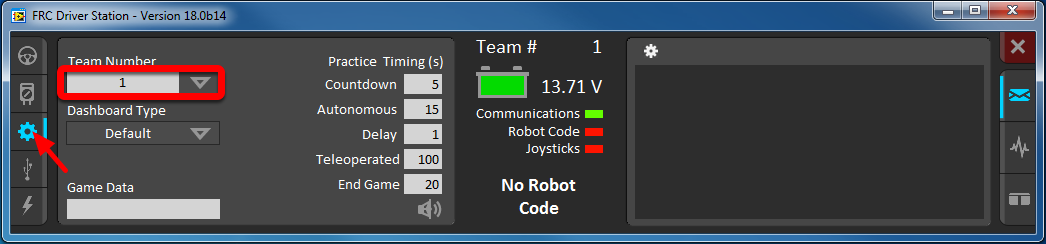

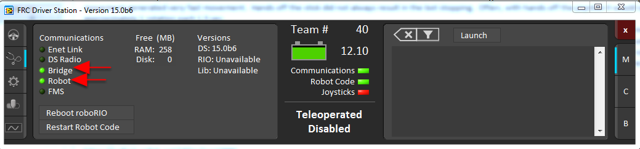

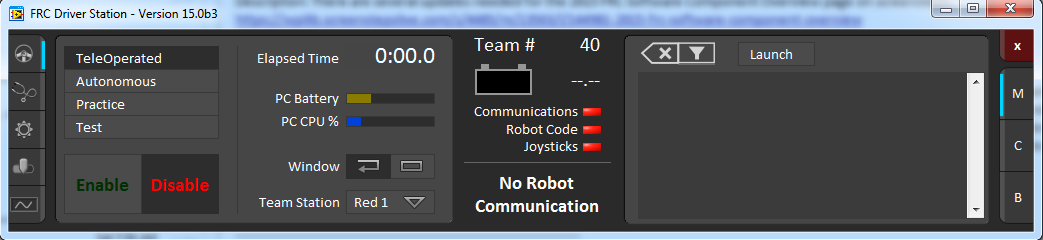

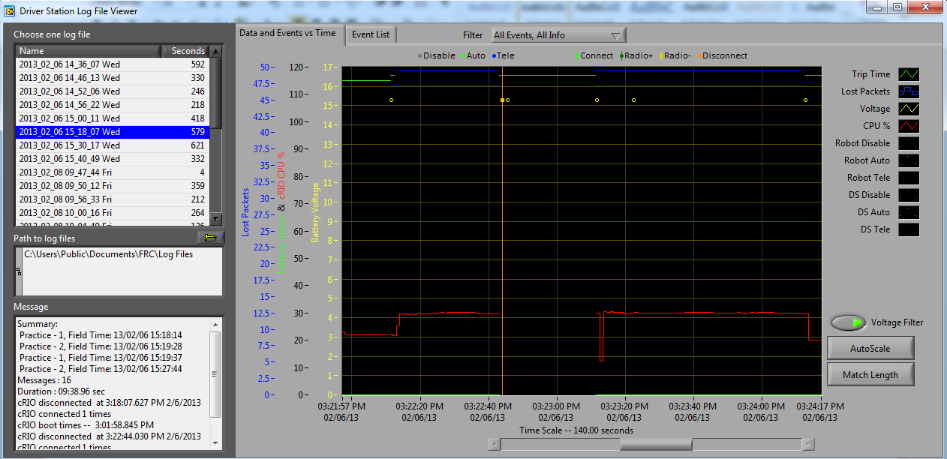

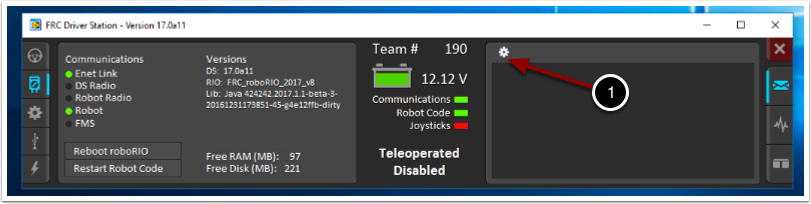

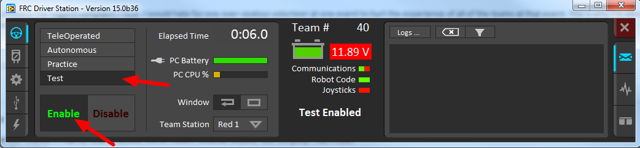

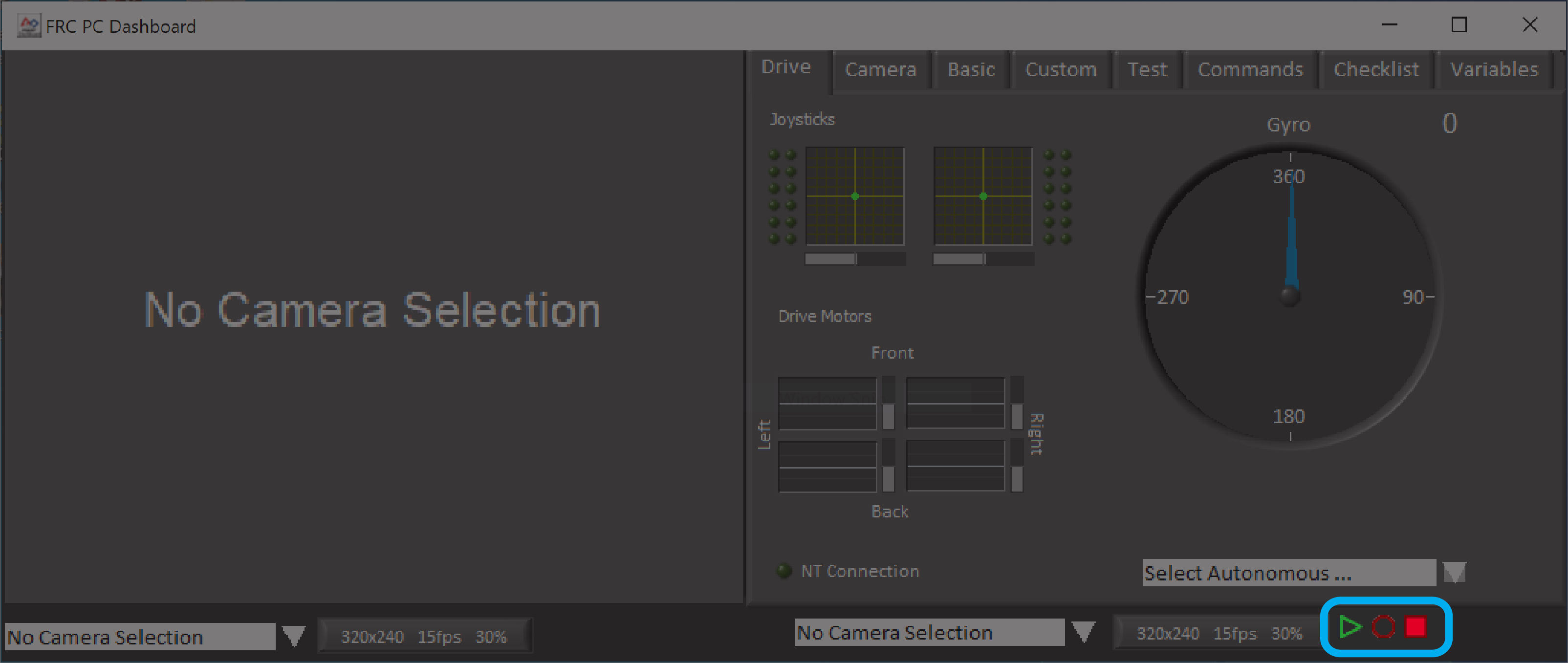

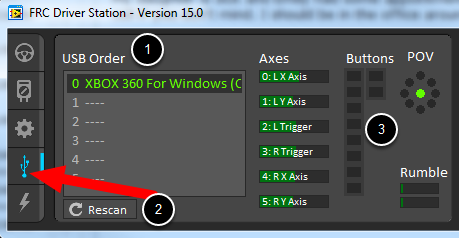

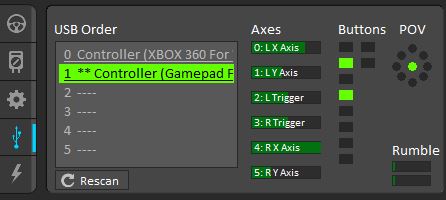

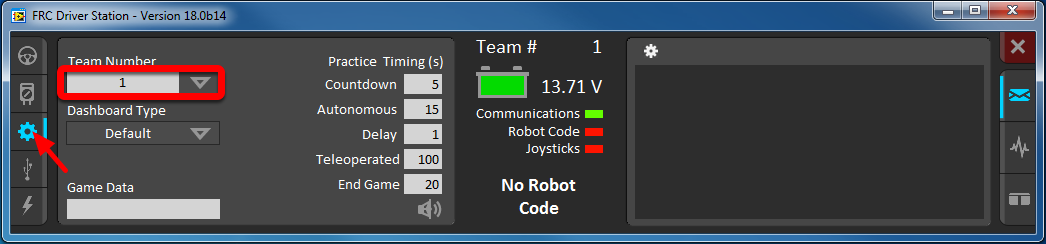

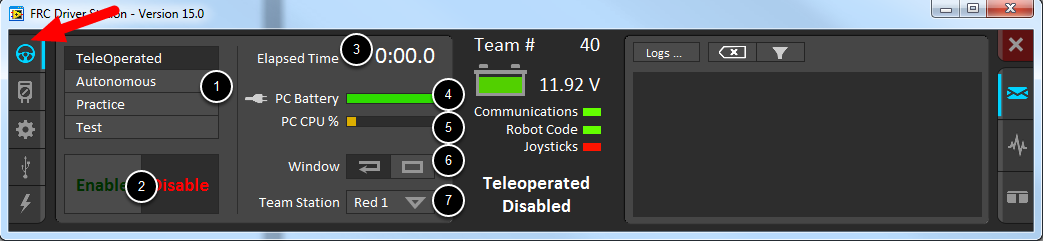

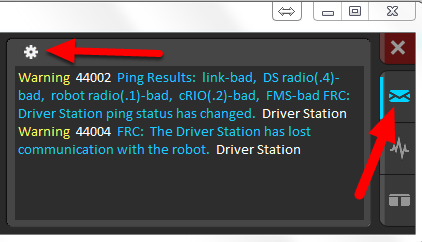

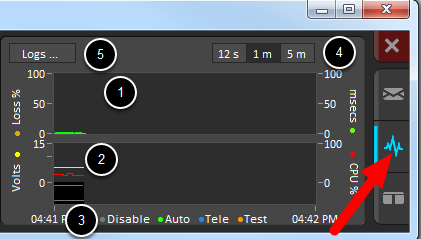

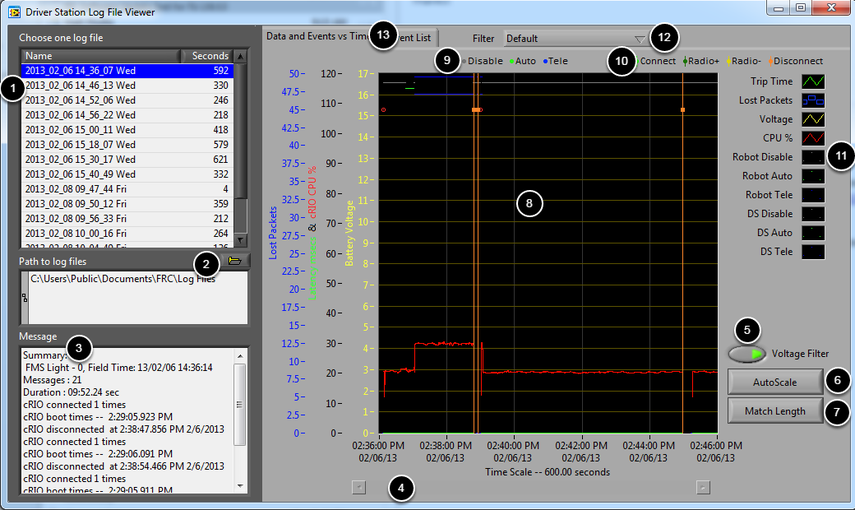

Starting the FRC Driver Station

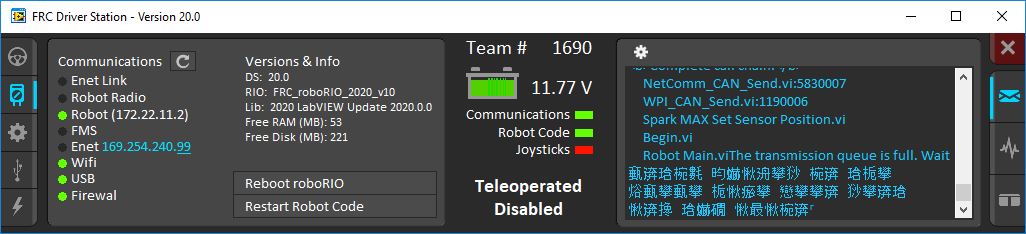

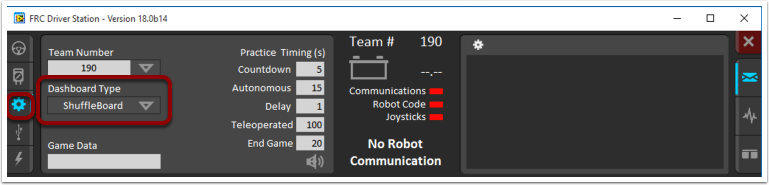

The FRC® Driver Station can be launched by double-clicking the icon on the Desktop or by selecting Start->All Programs->FRC Driver Station.

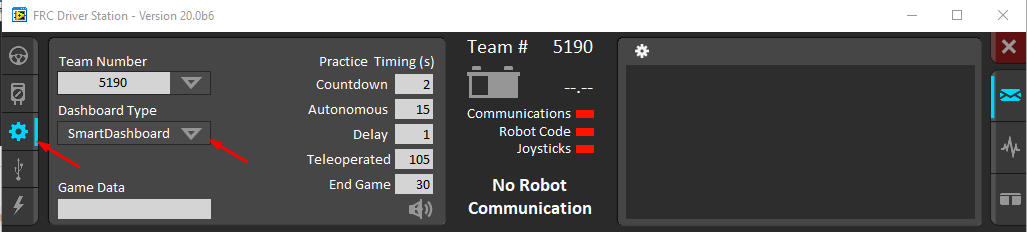

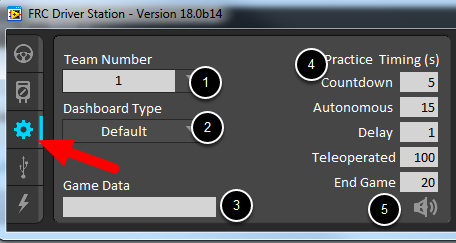

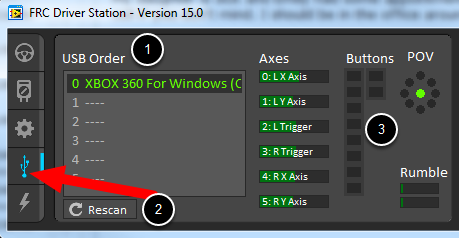

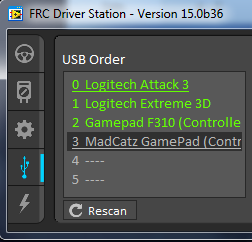

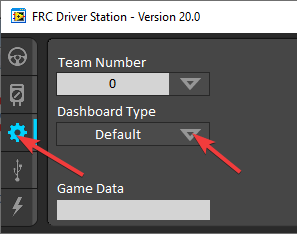

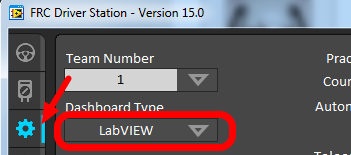

Setting Up the Driver Station

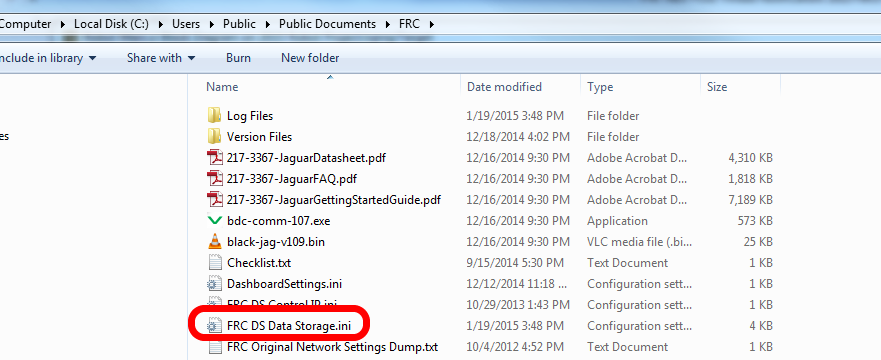

The DS must be set to your team number in order to connect to your robot. To do this, click the Setup tab, then enter your team number in the team number box. Press Return or click outside the box for the setting to take effect.
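The team number is what ties the Driver Station to one specific roboRIO. As an illustration, the sketch below derives two widely used FRC naming conventions from a team number: the roboRIO mDNS host name `roboRIO-TEAM-FRC.local` and the `10.TE.AM.2` address. The `TeamAddress` class is hypothetical, and the Driver Station's own discovery logic is not shown:

```java
/** Hypothetical helper deriving FRC addressing conventions from a team number. */
final class TeamAddress {
    private TeamAddress() {}

    /** mDNS host name of the roboRIO, e.g. roboRIO-1234-FRC.local. */
    static String mdnsHostname(int team) {
        return "roboRIO-" + team + "-FRC.local";
    }

    /** 10.TE.AM.2: the last two digits form the third octet, the rest form the second. */
    static String roboRioIp(int team) {
        if (team < 1 || team > 99999) {
            throw new IllegalArgumentException("team number out of range: " + team);
        }
        int te = team / 100; // leading digits
        int am = team % 100; // last two digits
        return "10." + te + "." + am + ".2";
    }
}
```

Team 1234 maps to `10.12.34.2`, and team 254 maps to `10.2.54.2`.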

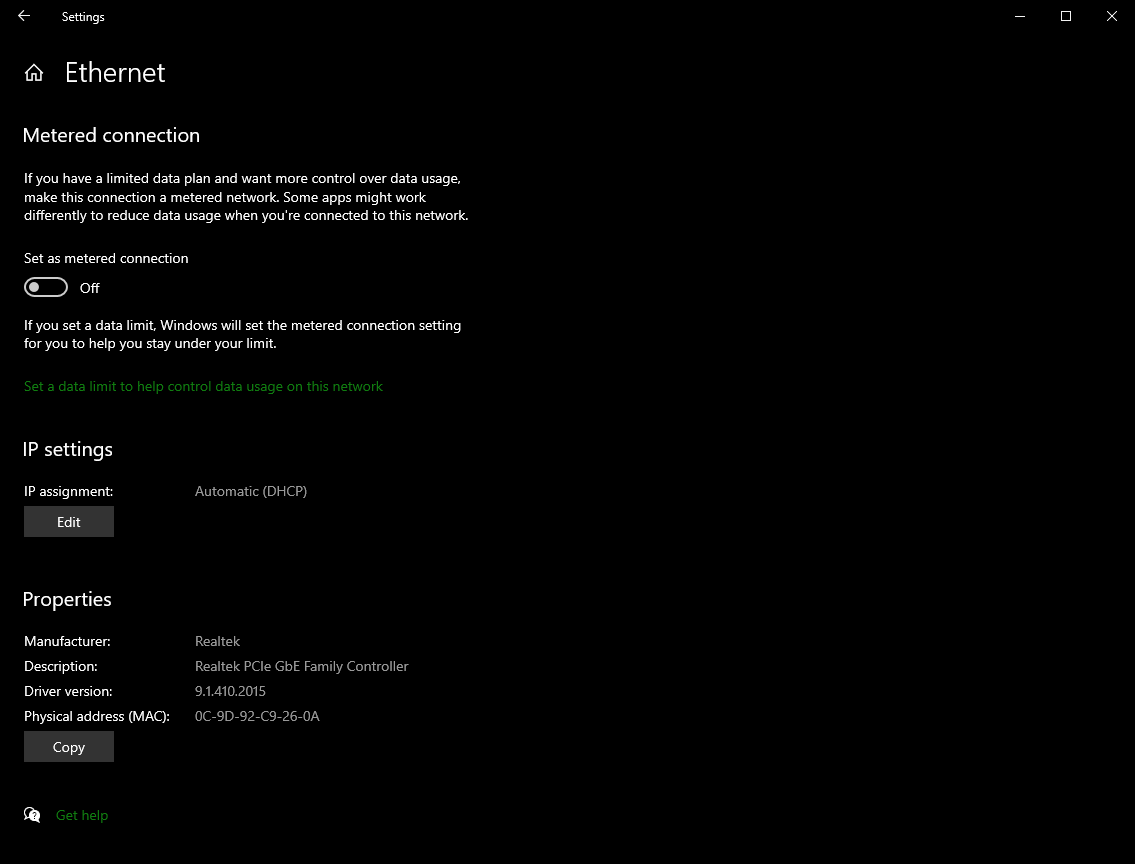

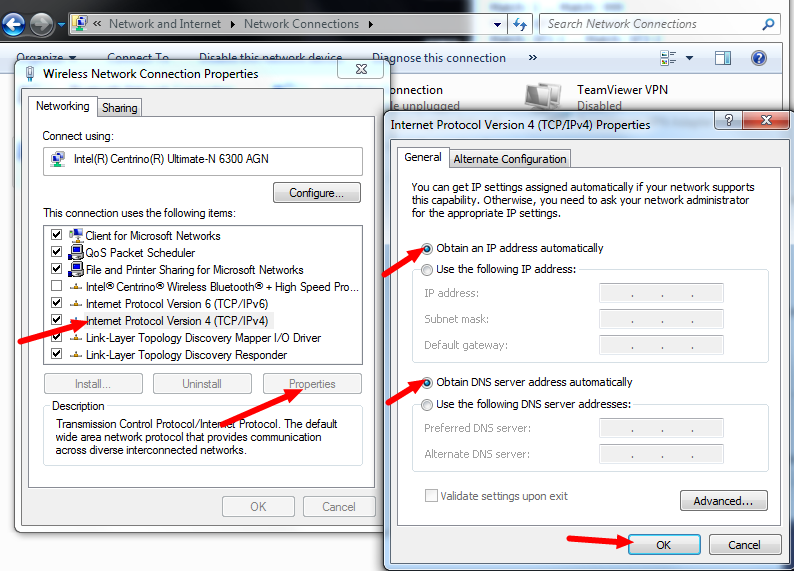

PCs will typically have the correct network settings for the DS to connect to the robot already, but if not, make sure your Network adapter is set to DHCP.

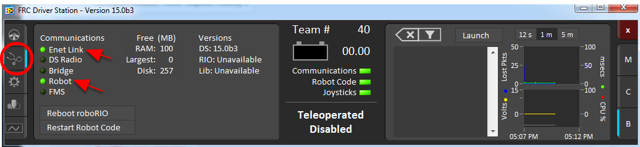

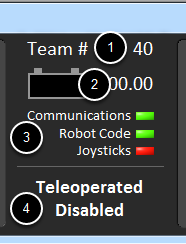

Confirm Connectivity


Using the Driver Station software, click Diagnostics and confirm that the Enet Link (or Robot Radio LED, if operating wirelessly) and Robot LEDs are green.

Operate the Robot

Click the Operation tab.

Confirm that the battery voltage is displayed.

Confirm that the Communications, Robot Code, and Joysticks indicators are green.

Put the robot in Teleop Mode.

Click Enable. Move the joysticks and observe how the robot responds.

Click Disable.

Wireless Operation

Before attempting wireless operation, tethered operation should have been confirmed as described in Tethered Operation. Running your benchtop testing program while connected to the Driver Station via WiFi will confirm that the access point is properly configured.

Configuring the Access Point

See the article Programming your radio for details on configuring the robot radio for use as an access point.

After configuring the access point, connect the driver station wirelessly to the robot. The SSID will be your team number (as entered in the Bridge Configuration Utility). If you set a key when using the Bridge Configuration Utility you will need to enter it to connect to the network. Make sure the computer network adapter is set to DHCP (“Obtain an IP address automatically”).

You can now confirm wireless operation using the same steps in Confirm Connectivity and Operate the Robot above.

Hardware Component Overview

The goal of this document is to provide a brief overview of the hardware components that make up the FRC® Control System. Each entry contains a brief description of the component's function and a link to more documentation.

Note

For complete wiring instructions/diagrams, please see the Wiring the FRC Control System document.

Overview of Control System

NI roboRIO

The NI roboRIO is the main robot controller used for FRC. The roboRIO serves as the “brain” of the robot, running team-generated code that commands all of the other hardware.

CTRE Power Distribution Panel

The CTRE Power Distribution Panel (PDP) is designed to distribute power from a 12VDC battery to various robot components through auto-resetting circuit breakers and a small number of special function fused connections. The PDP provides 8 output pairs rated for 40A continuous current and 8 pairs rated for 30A continuous current. The PDP provides dedicated 12V connectors for the roboRIO, as well as connectors for the Voltage Regulator Module and Pneumatics Control Module. It also includes a CAN interface for logging current, temperature, and battery voltage. For more detailed information, see the PDP User Manual.

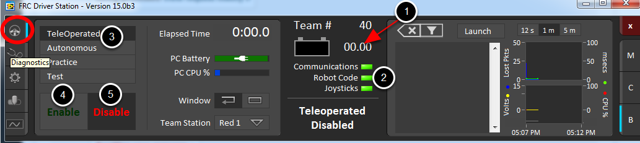

REV Power Distribution Hub

The REV Power Distribution Hub (PDH) is designed to distribute power from a 12VDC battery to various robot components. The PDH features 20 high-current (40A max) channels, 3 low-current (15A max) channels, and 1 switchable low-current channel. The Power Distribution Hub features toolless latching WAGO terminals, an LED voltage display, and the ability to connect over CAN or USB-C to the REV Hardware Client for real-time telemetry.

CTRE Voltage Regulator Module

The CTRE Voltage Regulator Module (VRM) is an independent module that is powered by 12 volts. The device is wired to a dedicated connector on the PDP. The module has multiple regulated 12V and 5V outputs. The purpose of the VRM is to provide regulated power for the robot radio, custom circuits, and IP vision cameras. For more information, see the VRM User Manual.

REV Radio Power Module

The REV Radio Power Module is designed to keep one of the most critical system components, the OpenMesh WiFi radio, powered in the toughest moments of the competition. The Radio Power Module eliminates the need for powering the radio through a traditional barrel power jack. Utilizing 18V passive PoE with two socketed RJ45 connectors, the Radio Power Module passes signal between the radio and roboRIO while providing power directly to the radio. After connecting the radio and roboRIO, add power to the Radio Power Module by wiring it to the low-current channels on the Power Distribution Hub using the color-coded push-button WAGO terminals.

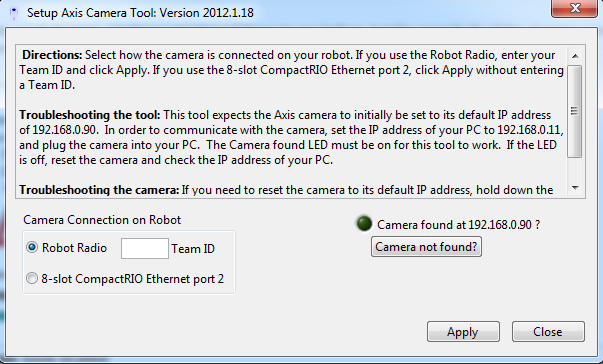

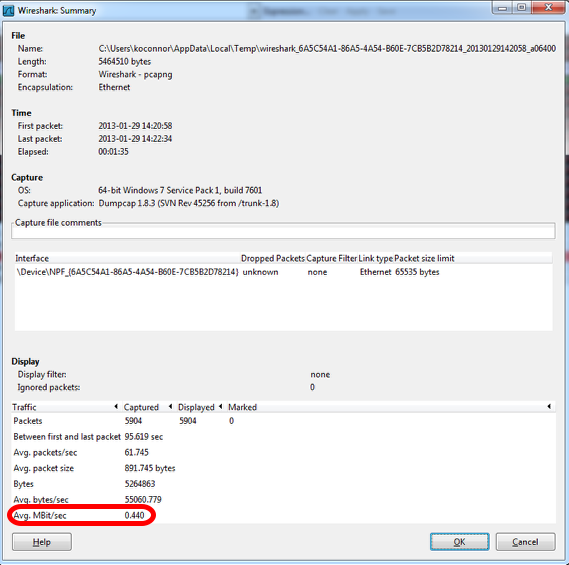

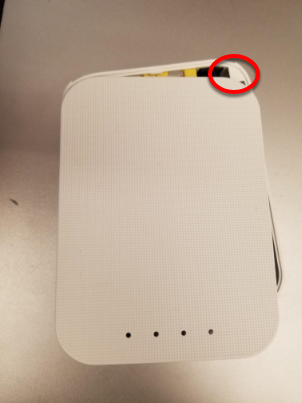

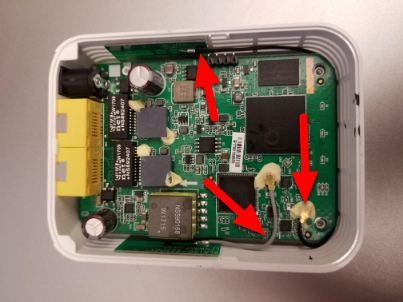

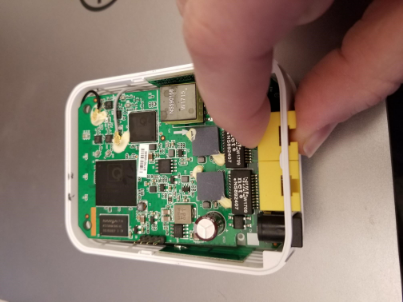

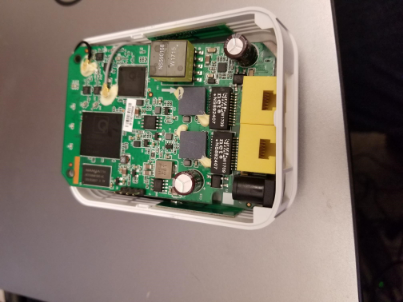

OpenMesh OM5P-AN or OM5P-AC Radio

Either the OpenMesh OM5P-AN or OpenMesh OM5P-AC wireless radio is used as the robot radio to provide wireless communication functionality to the robot. The device can be configured as an Access Point for direct connection of a laptop for use at home. It can also be configured as a bridge for use on the field. The robot radio should be powered by one of the 12V/2A outputs on the VRM and connected to the roboRIO controller over Ethernet. For more information, see Programming your Radio.

The OM5P-AN is no longer available for purchase. The OM5P-AC is slightly heavier, has more cooling grates, and has a rough surface texture compared to the OM5P-AN.

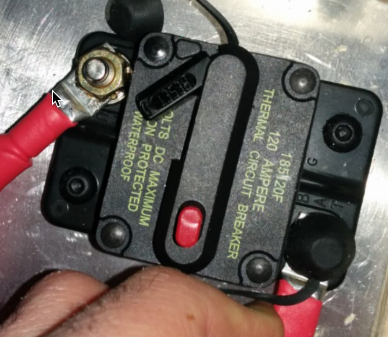

120A Circuit Breaker

The 120A Main Circuit Breaker serves two roles on the robot: the main robot power switch and a protection device for downstream robot wiring and components. The 120A circuit breaker is wired to the positive terminals of the robot battery and Power Distribution boards. For more information, please see the Cooper Bussmann 18X Series Datasheet (PN: 185120F).

Snap Action Circuit Breakers

The Snap Action circuit breakers, MX5 series and VB3 series, are used with the Power Distribution Panel to limit current to branch circuits. The ratings on these circuit breakers are for continuous current; temporary peak values can be considerably higher.

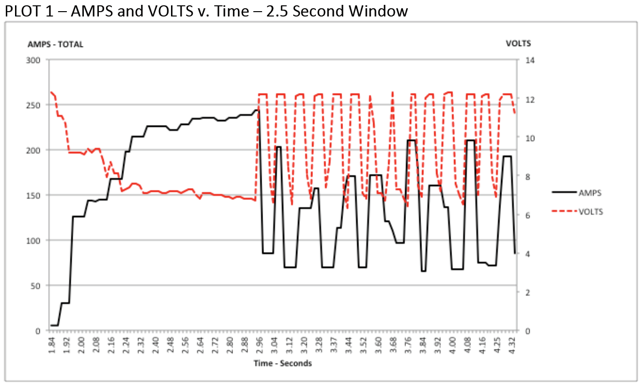

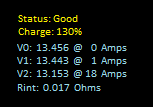

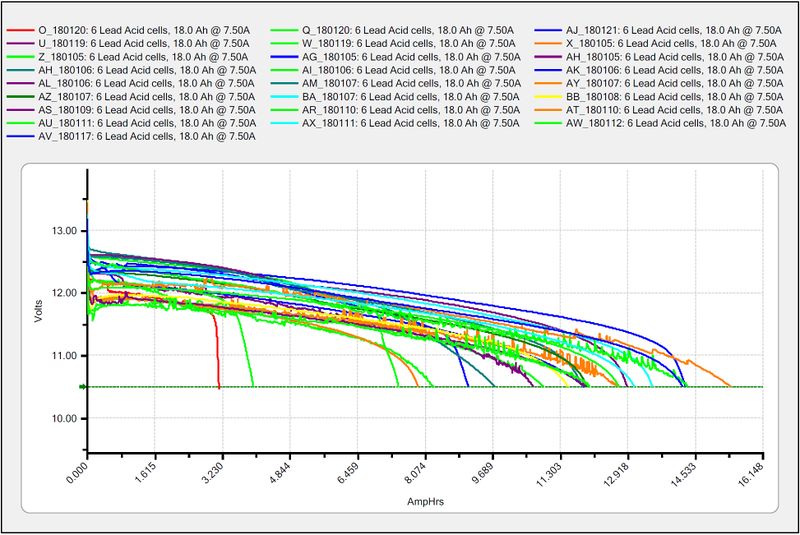

Robot Battery

The power supply for an FRC robot is a single 12V 18Ah Sealed Lead Acid (SLA) battery, capable of meeting the high current demands of an FRC robot. For more information, see the Robot Battery page.
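A quick piece of arithmetic helps put the 12V 18Ah rating in context. The sketch below (the `BatteryMath` class is hypothetical) computes nominal stored energy and an idealized runtime at a given average current. It assumes the full rated capacity is usable; real SLA capacity drops noticeably at high discharge currents, so actual runtime will be shorter:

```java
/** Idealized battery arithmetic for a 12 V, 18 Ah SLA battery (hypothetical helper). */
final class BatteryMath {
    static final double NOMINAL_VOLTS = 12.0;
    static final double CAPACITY_AMP_HOURS = 18.0;

    private BatteryMath() {}

    /** Nominal stored energy: volts times amp-hours. */
    static double energyWattHours() {
        return NOMINAL_VOLTS * CAPACITY_AMP_HOURS;
    }

    /** Idealized runtime in minutes at a constant average current (ignores capacity loss at high current). */
    static double idealRuntimeMinutes(double averageAmps) {
        if (averageAmps <= 0) {
            throw new IllegalArgumentException("averageAmps must be positive");
        }
        return CAPACITY_AMP_HOURS * 60.0 / averageAmps;
    }
}
```

Nominally this works out to 12 V × 18 Ah = 216 Wh, and at a hypothetical 60 A average draw the idealized runtime is 18 minutes.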

Note

Multiple battery part numbers may be legal; consult the FRC Manual for a complete list.

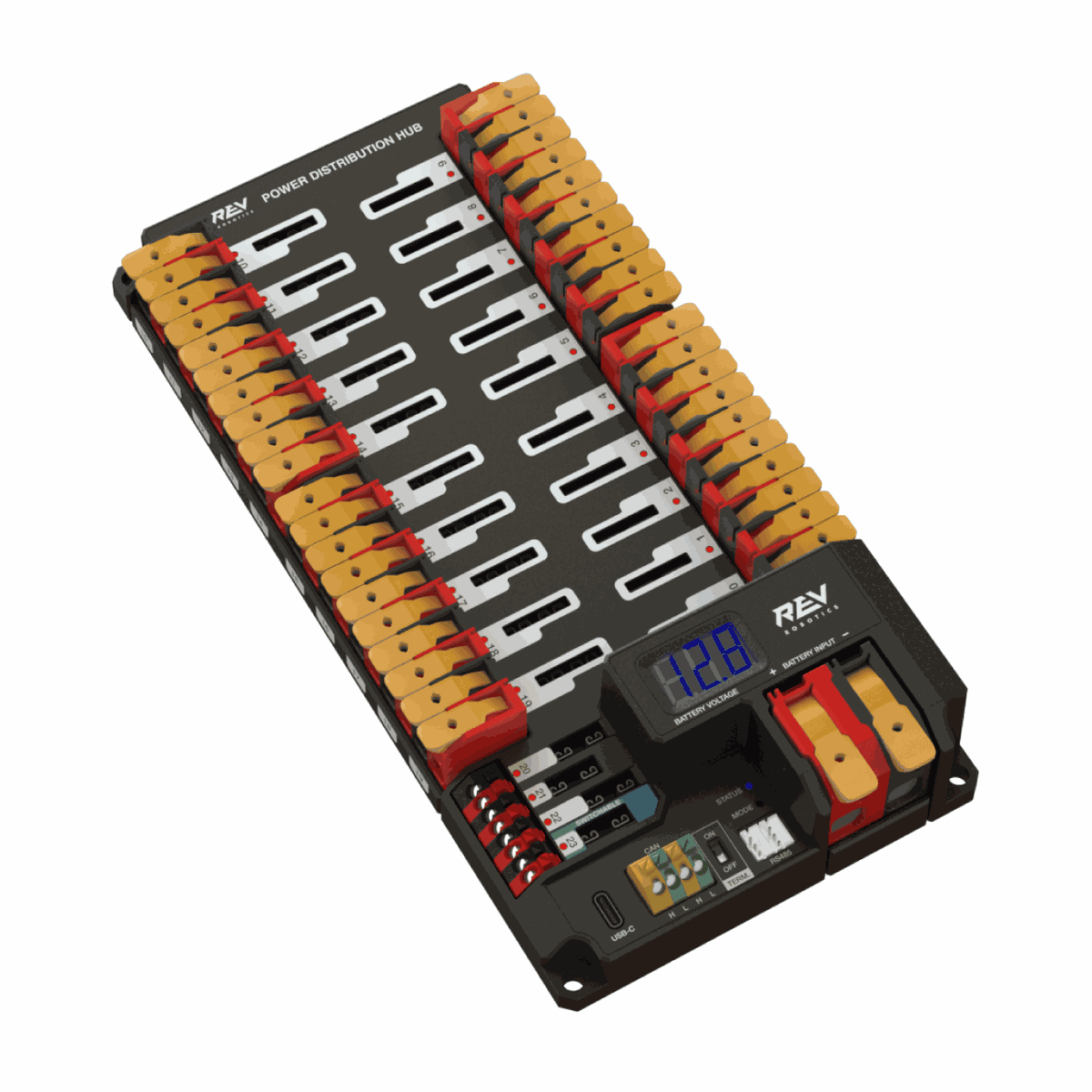

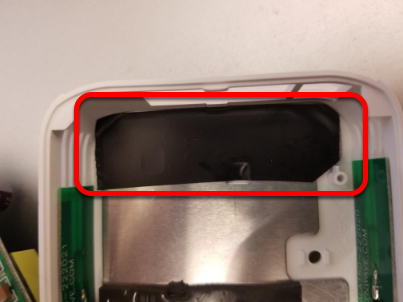

Robot Signal Light

The Robot Signal Light (RSL) is required to be the Allen-Bradley 855PB-B12ME522. It is directly controlled by the roboRIO and will flash when enabled and stay solid while disabled.
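The RSL behavior described above can be expressed as a small state function. In this illustrative model (the `RslModel` class is hypothetical), the blink half-period is an invented value chosen only for the example, not the roboRIO's actual timing:

```java
/** Illustrative RSL model: solid while disabled, flashing while enabled. */
final class RslModel {
    // Hypothetical blink timing for the sketch; not the roboRIO's real value.
    static final long HYPOTHETICAL_HALF_PERIOD_MS = 250;

    private RslModel() {}

    static boolean isLit(boolean enabled, long timeMs) {
        if (!enabled) {
            return true; // solid while disabled
        }
        // Alternate on/off every half-period while enabled.
        return (timeMs / HYPOTHETICAL_HALF_PERIOD_MS) % 2 == 0;
    }
}
```

A flashing light therefore signals at a glance that the robot is enabled and may move.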

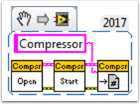

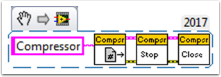

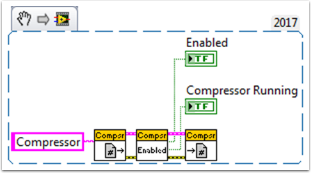

CTRE Pneumatics Control Module

The CTRE Pneumatics Control Module (PCM) contains all of the inputs and outputs required to operate 12V or 24V pneumatic solenoids and the onboard compressor. The PCM contains an input for the pressure sensor and will control the compressor automatically when the robot is enabled and a solenoid has been created in the code. For more information, see the PCM User Manual.

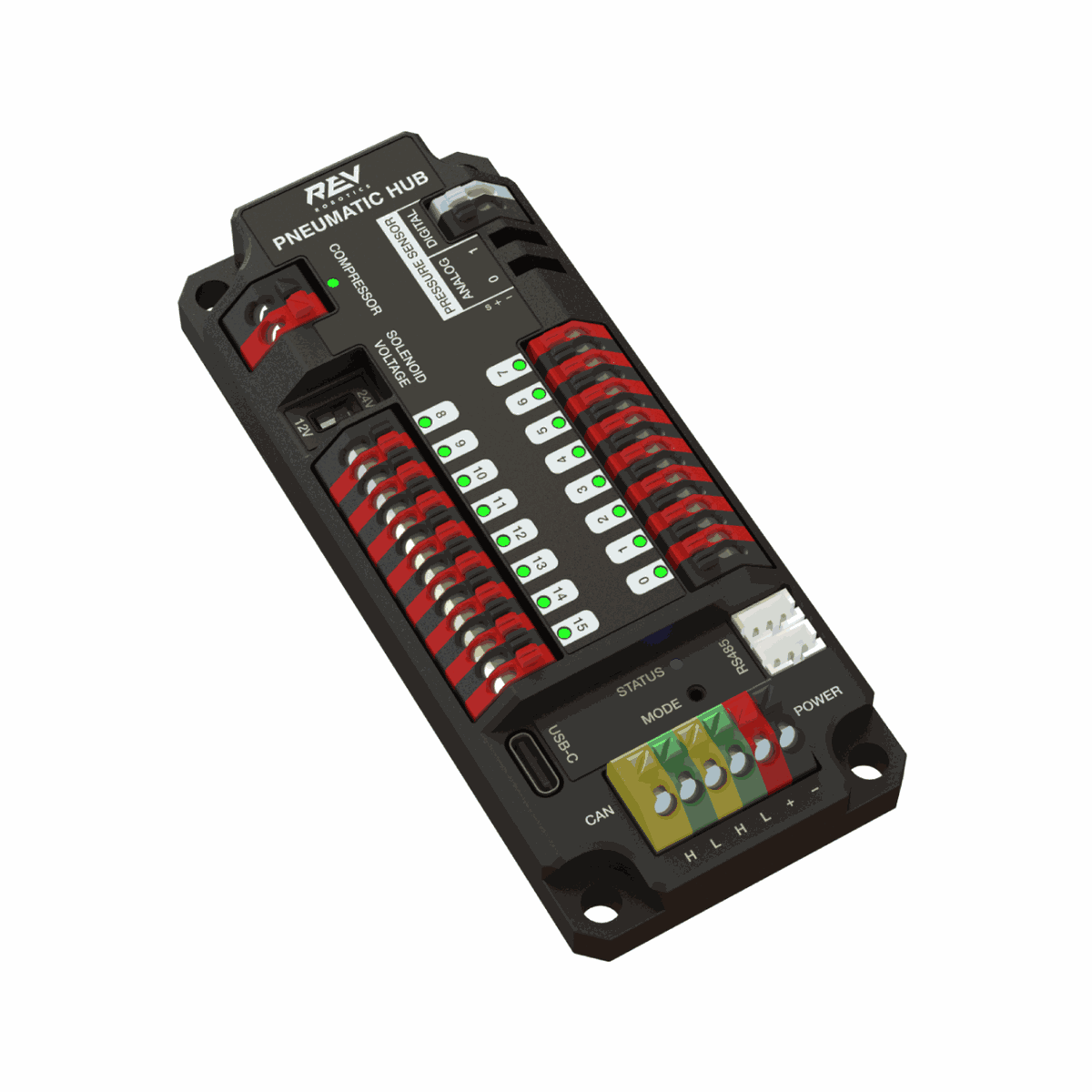

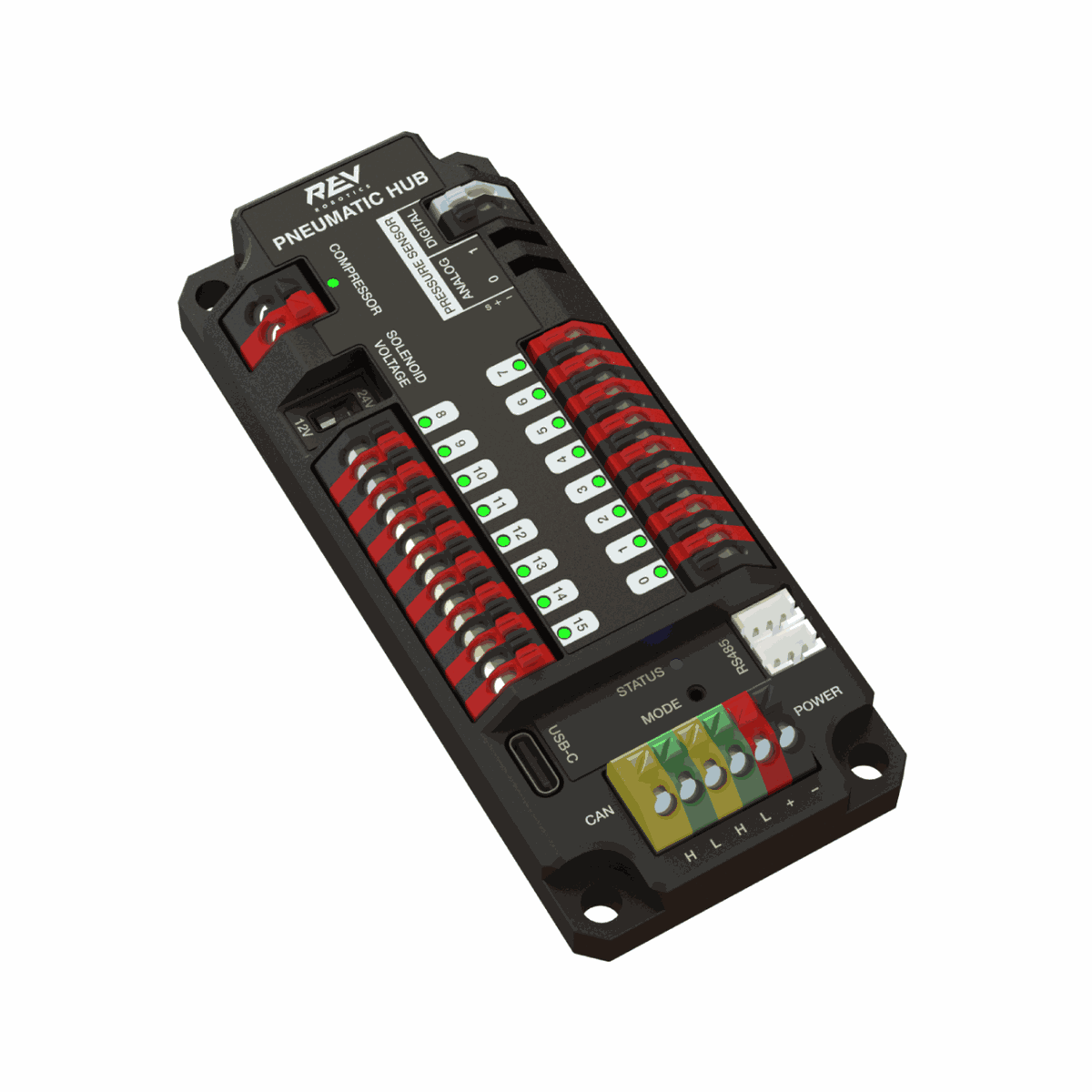

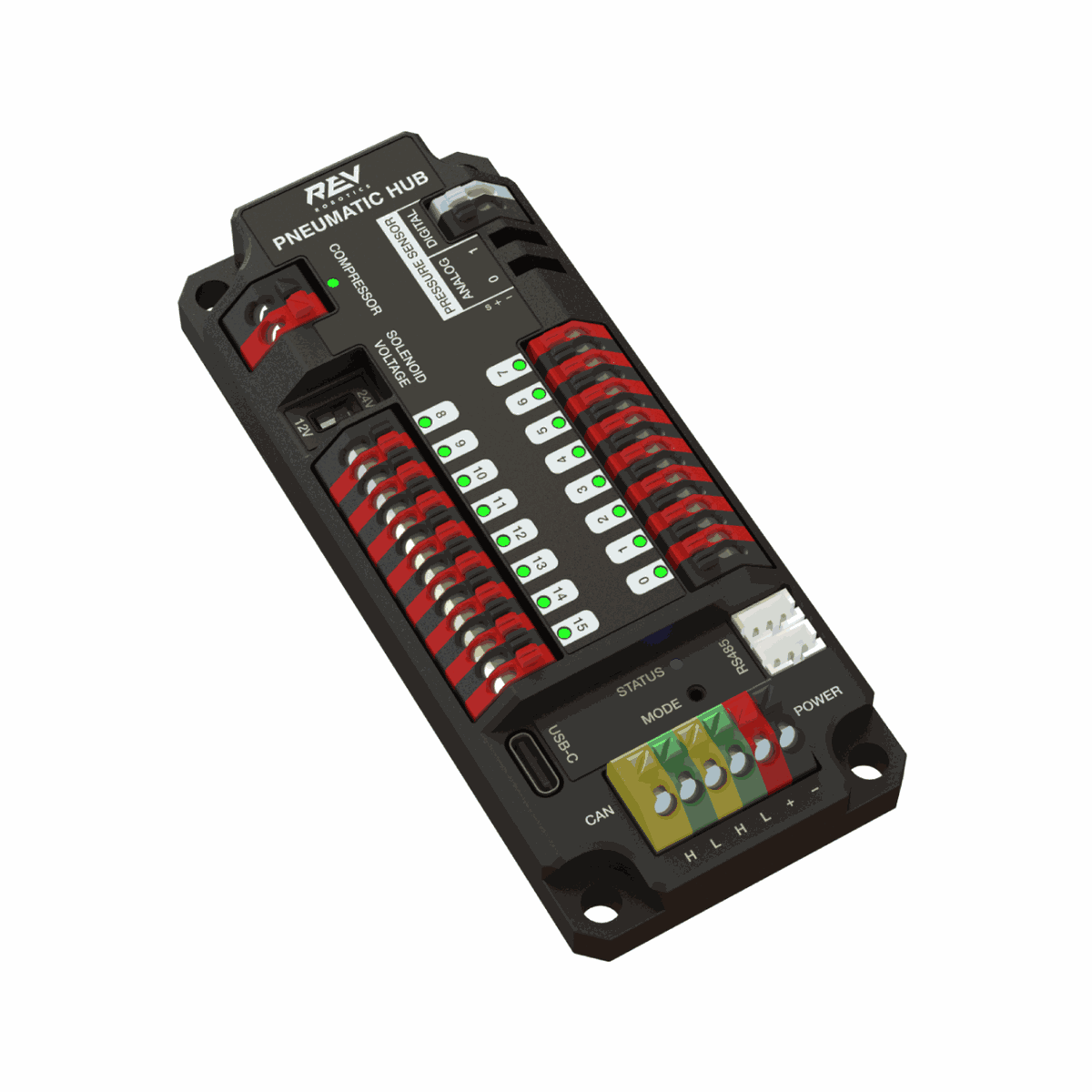

REV Pneumatic Hub

The REV Pneumatic Hub is a standalone module that is capable of switching both 12V and 24V pneumatic solenoid valves. The Pneumatic Hub features 16 solenoid channels which allow for up to 16 single-acting solenoids, 8 double-acting solenoids, or a combination of the two types. The user selectable output voltage is fully regulated, allowing even 12V solenoids to stay active when the robot battery drops as low as 4.75V.
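Because a double-acting solenoid occupies two channels and a single-acting solenoid occupies one, checking whether a planned mix fits is simple arithmetic. A minimal sketch (the `SolenoidChannelBudget` class is hypothetical):

```java
/** Channel-budget check for a 16-channel pneumatic module (hypothetical helper). */
final class SolenoidChannelBudget {
    static final int CHANNELS = 16;

    private SolenoidChannelBudget() {}

    /** Single-acting solenoids use one channel each; double-acting use two. */
    static int channelsUsed(int singleActing, int doubleActing) {
        if (singleActing < 0 || doubleActing < 0) {
            throw new IllegalArgumentException("counts must be non-negative");
        }
        return singleActing + 2 * doubleActing;
    }

    static boolean fits(int singleActing, int doubleActing) {
        return channelsUsed(singleActing, doubleActing) <= CHANNELS;
    }
}
```

For example, 4 single-acting plus 6 double-acting solenoids use exactly 16 channels, while 1 single-acting plus 8 double-acting would need 17.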

Digital and analog pressure sensor ports are built into the device, increasing the flexibility and feedback functionality of the pneumatic system. The USB-C connection on the Hub works with the REV Hardware Client, allowing users to test pneumatic systems without a need for an additional robot controller.
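An analog pressure sensor makes it possible to run the compressor between two thresholds (hysteresis) instead of relying on a single on/off switch point. The sketch below illustrates that control idea. The `CompressorHysteresis` class and its thresholds are hypothetical; the real modules implement their own control in firmware:

```java
/** Illustrative hysteresis control for a compressor with an analog pressure reading. */
final class CompressorHysteresis {
    private final double minPsi;
    private final double maxPsi;
    private boolean running = false;

    CompressorHysteresis(double minPsi, double maxPsi) {
        if (minPsi >= maxPsi) {
            throw new IllegalArgumentException("minPsi must be below maxPsi");
        }
        this.minPsi = minPsi;
        this.maxPsi = maxPsi;
    }

    /** Updates and returns whether the compressor should run. */
    boolean update(boolean robotEnabled, double pressurePsi) {
        if (!robotEnabled) {
            running = false; // never run while disabled
        } else if (pressurePsi < minPsi) {
            running = true; // pressure too low: start filling
        } else if (pressurePsi >= maxPsi) {
            running = false; // target reached: stop
        }
        // Between the thresholds the previous state is kept, which avoids rapid on/off cycling.
        return running;
    }
}
```

With hypothetical thresholds of 80 and 120 psi, the compressor starts below 80 psi, keeps running through 100 psi, stops at 120 psi, and stays off as pressure bleeds back down to 80 psi.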

Motor Controllers

There are a variety of different motor controllers which work with the FRC Control System and are approved for use. These devices are used to provide variable voltage control of the brushed and brushless DC motors used in FRC. They are listed here in order of usage.

Note

Third-party CAN control is not supported by WPILib. See the section on Third-Party CAN Devices for more information.

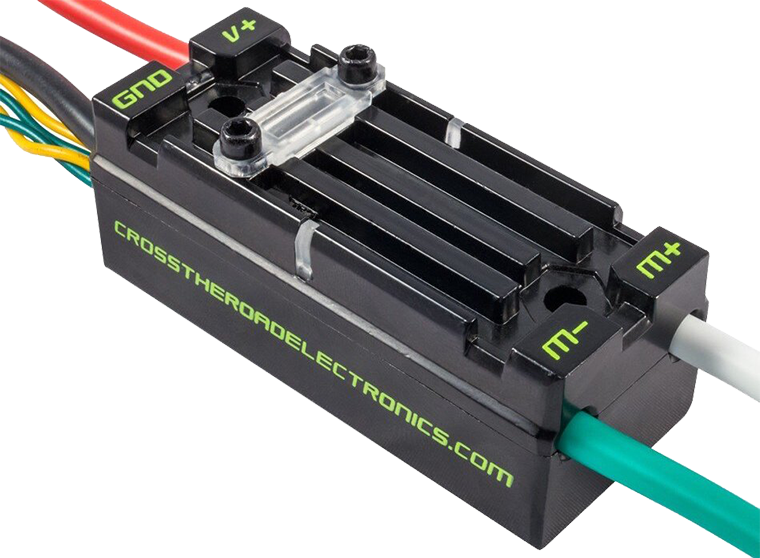

Talon SRX

The Talon SRX Motor Controller is a “smart motor controller” from Cross The Road Electronics/VEX Robotics. The Talon SRX can be controlled over the CAN bus or PWM interface. When using the CAN bus control, this device can take inputs from limit switches and potentiometers, encoders, or similar sensors in order to perform advanced control. For more information see the Talon SRX User’s Guide.

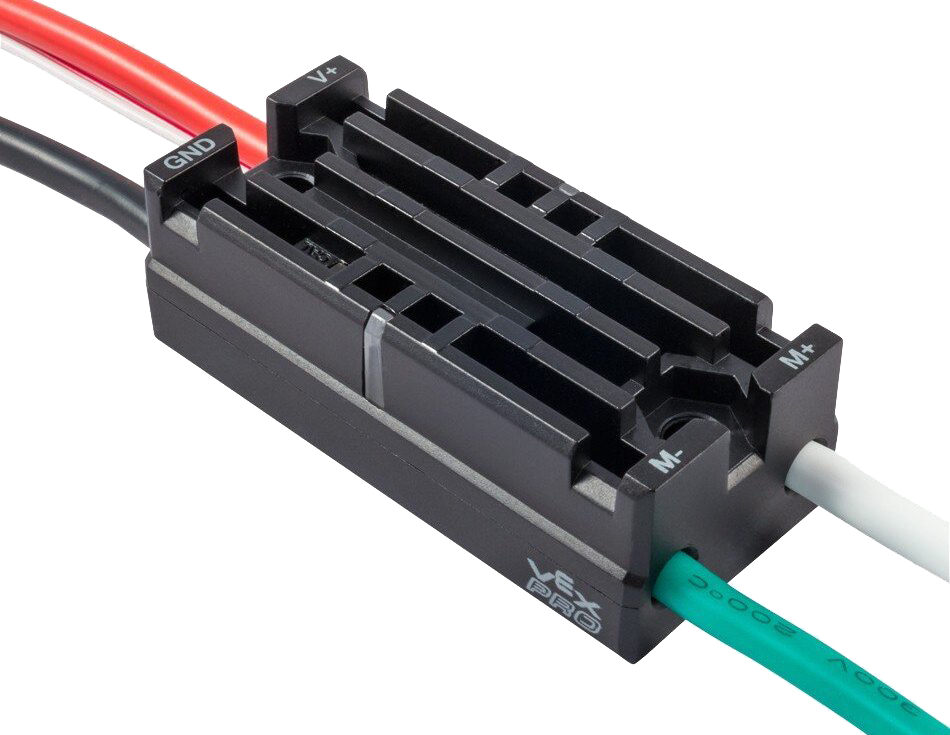

Victor SPX

The Victor SPX Motor Controller is a CAN or PWM controlled motor controller from Cross The Road Electronics/VEX Robotics. The device is connectorized to allow easy connection to the roboRIO PWM connectors or a CAN bus. The case is sealed to prevent debris from entering the controller. For more information, see the Victor SPX User Guide.
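When a Talon SRX or Victor SPX is driven over PWM, the commanded speed is encoded as a pulse width. The sketch below assumes the nominal hobby-style range of 1.0 ms (full reverse), 1.5 ms (neutral), and 2.0 ms (full forward); consult the device's user guide for the exact bounds and deadband. The `PwmPulseSketch` class is hypothetical:

```java
/** Maps a speed in [-1, 1] to a PWM pulse width, assuming a nominal 1.0-2.0 ms range. */
final class PwmPulseSketch {
    static final double MIN_MS = 1.0;     // assumed full reverse
    static final double NEUTRAL_MS = 1.5; // assumed neutral
    static final double MAX_MS = 2.0;     // assumed full forward

    private PwmPulseSketch() {}

    static double pulseWidthMs(double speed) {
        // Clamp out-of-range commands to full output.
        double clamped = Math.max(-1.0, Math.min(1.0, speed));
        // Linear map: -1 -> 1.0 ms, 0 -> 1.5 ms, +1 -> 2.0 ms.
        return NEUTRAL_MS + clamped * (MAX_MS - NEUTRAL_MS);
    }
}
```

Under these assumptions, a half-speed forward command corresponds to a 1.75 ms pulse.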

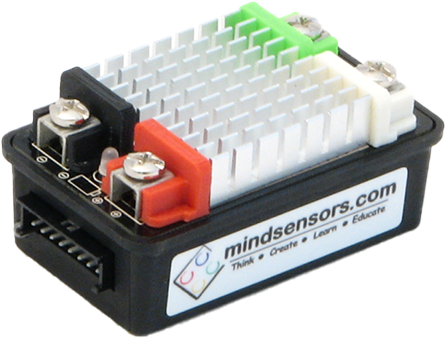

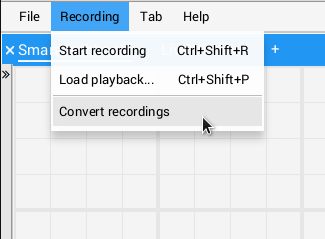

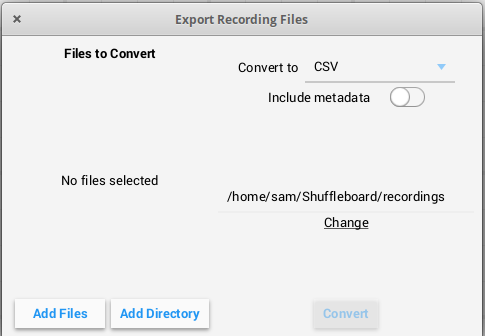

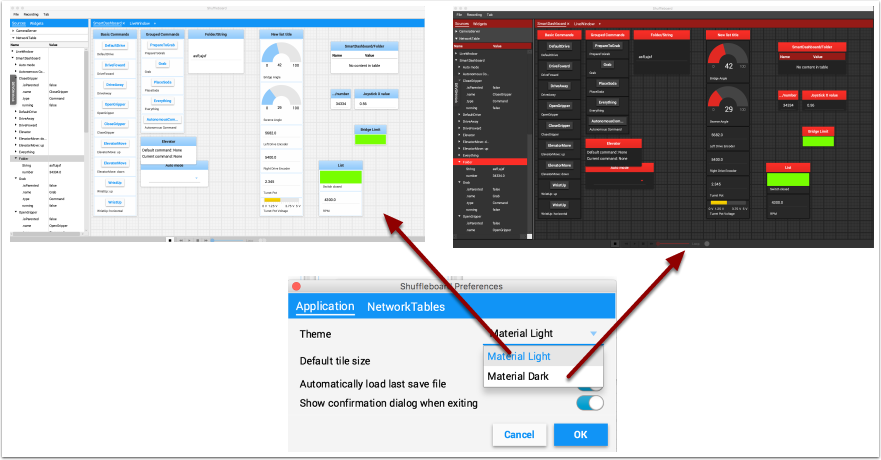

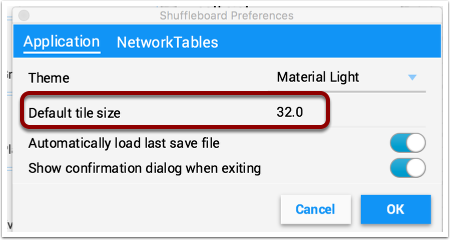

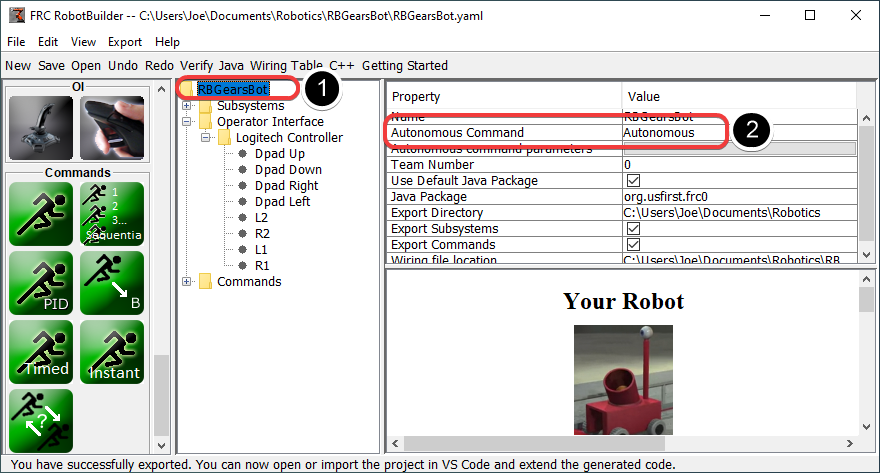

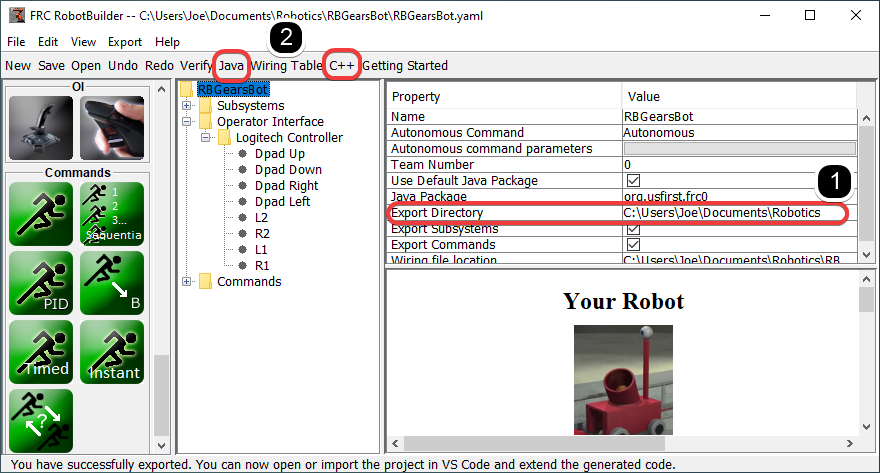

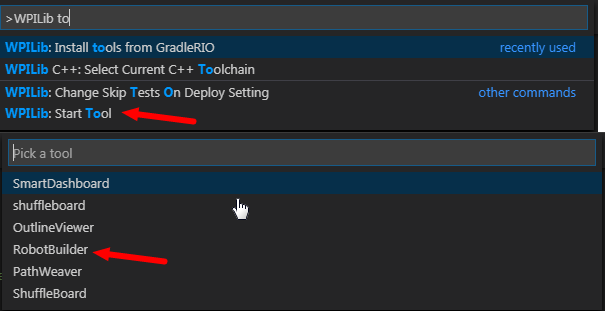

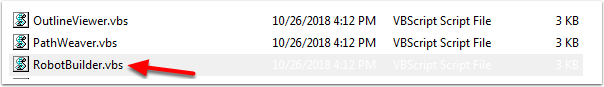

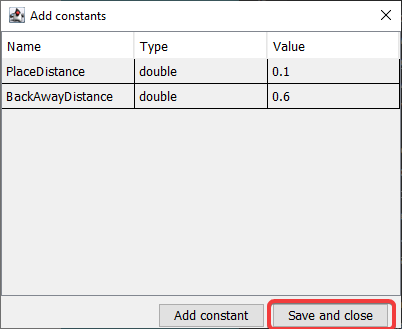

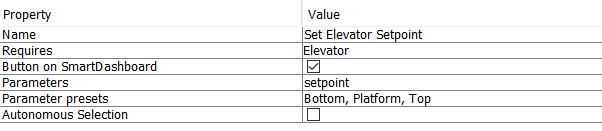

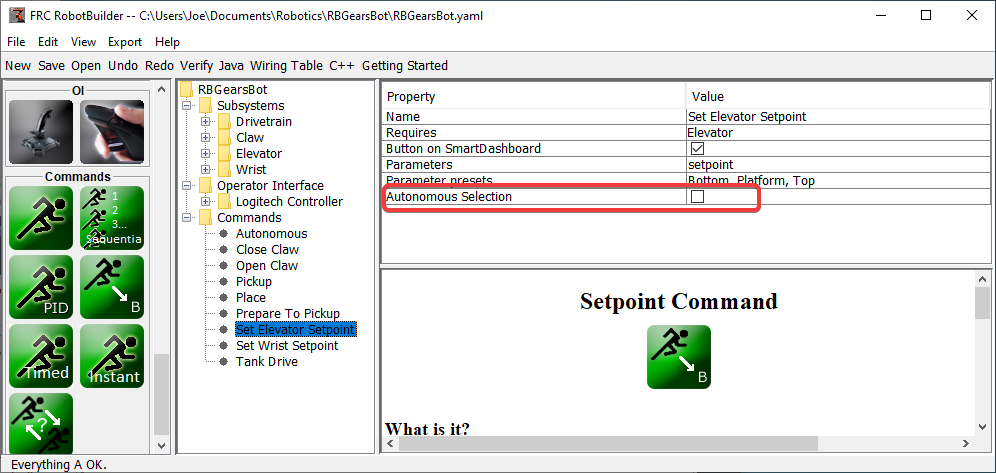

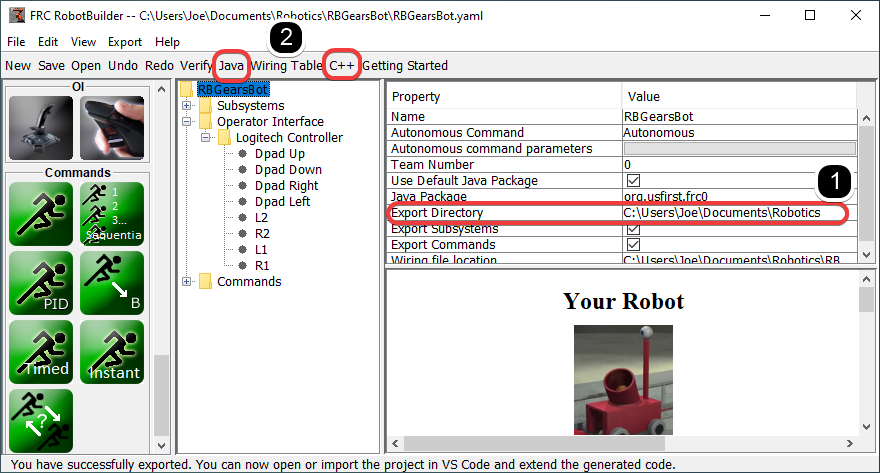

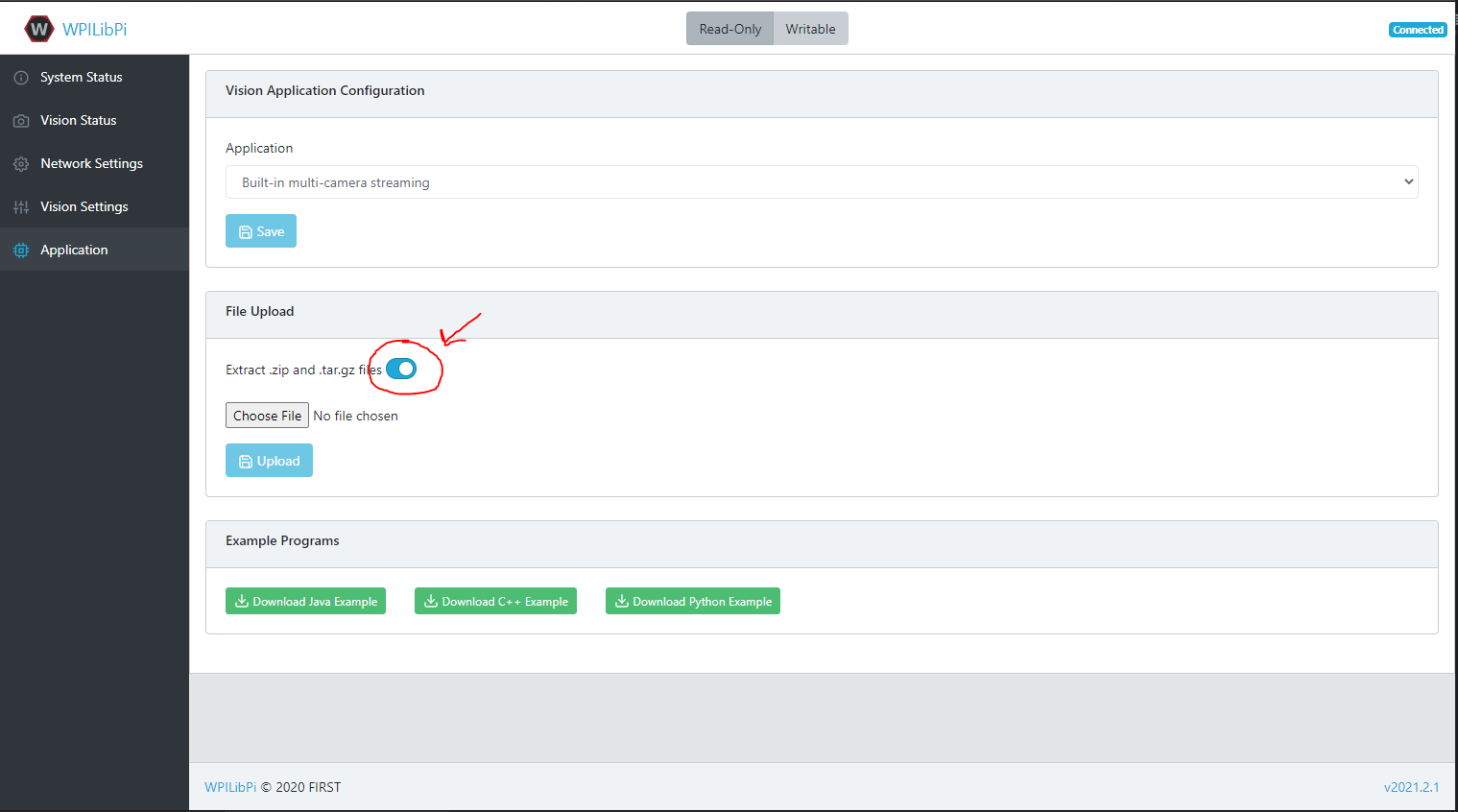

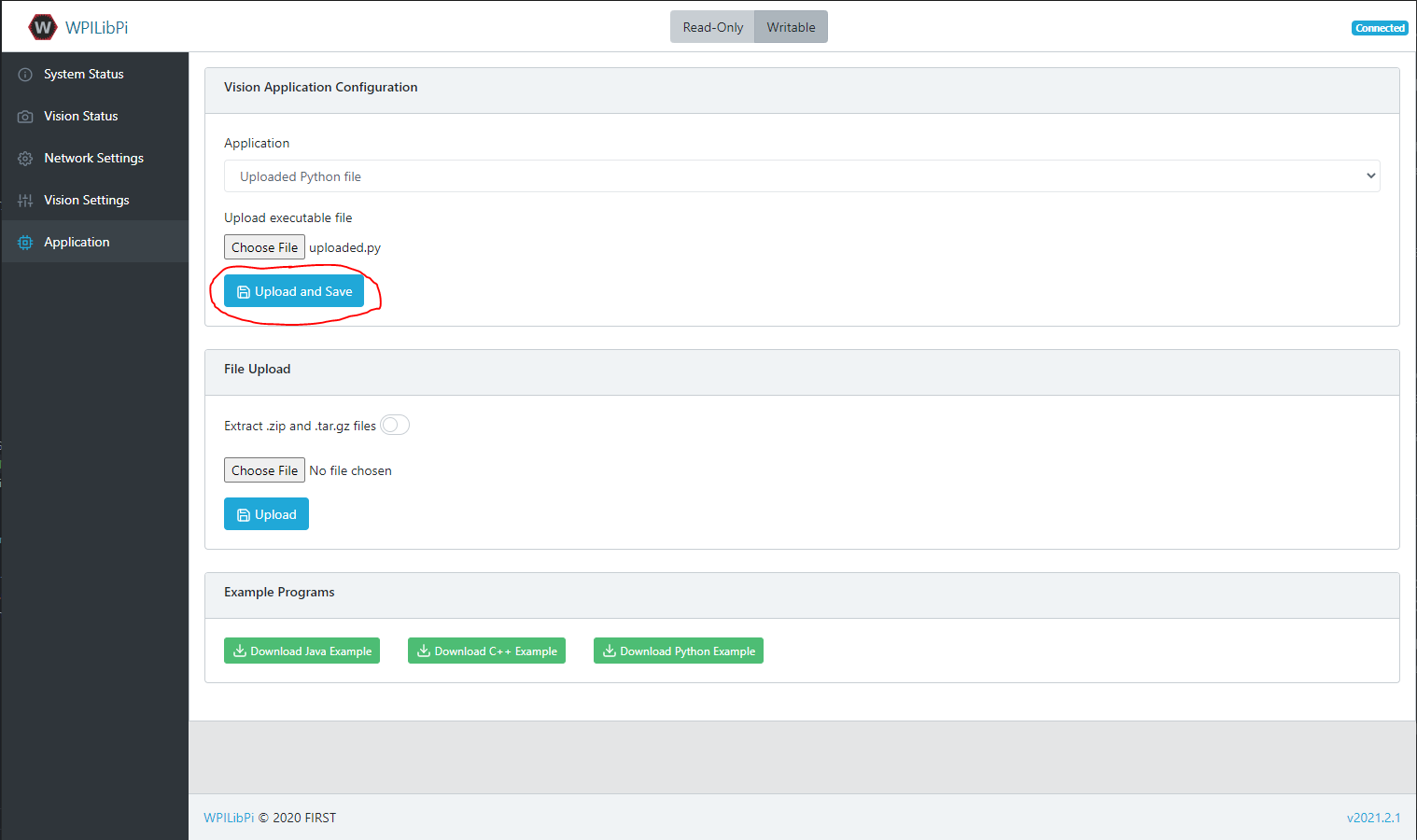

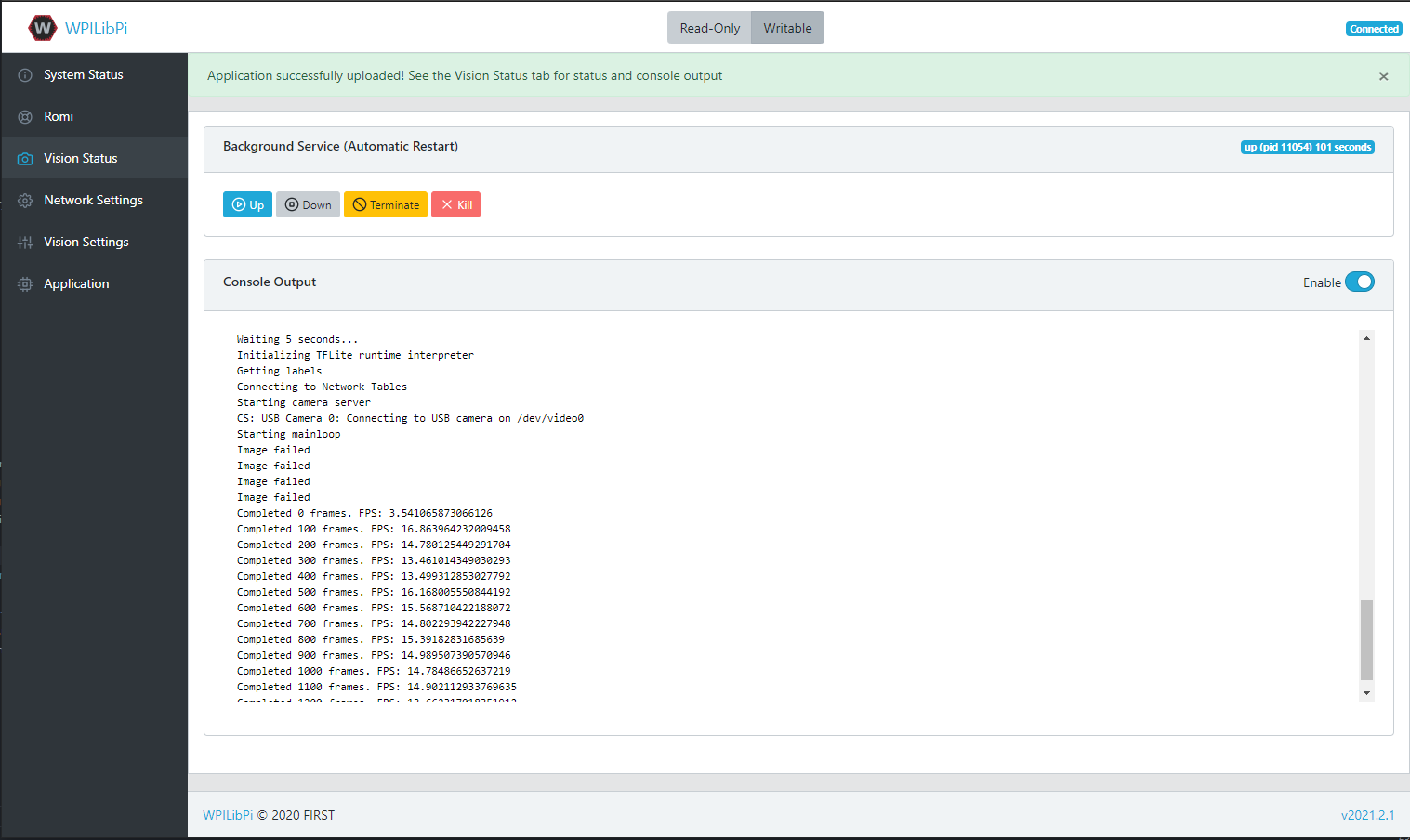

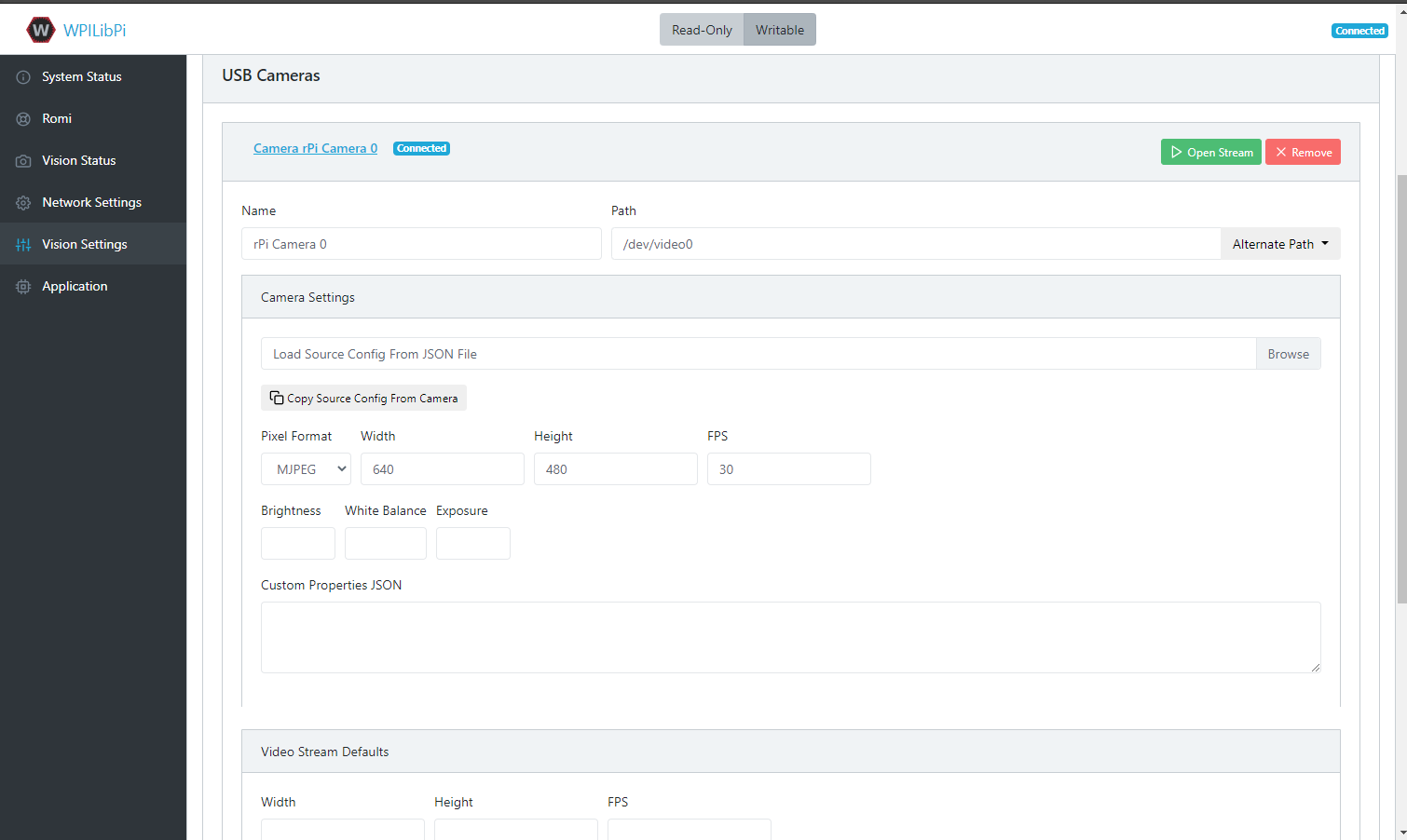

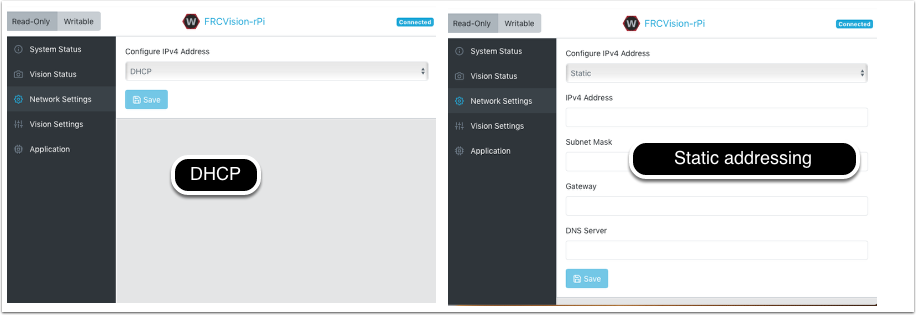

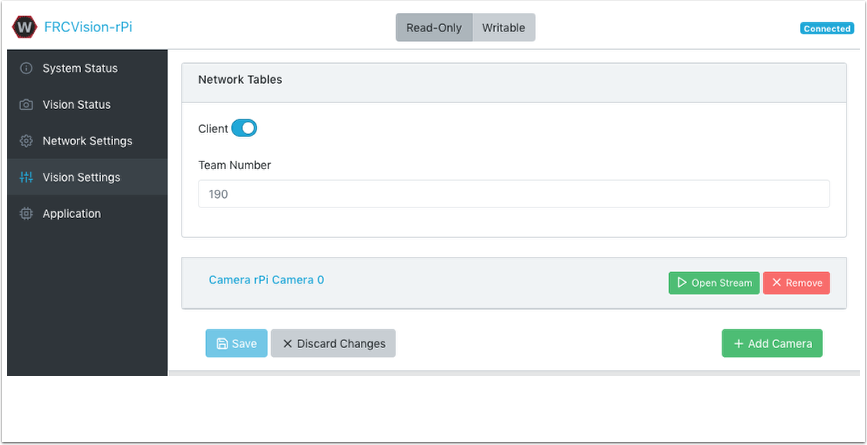

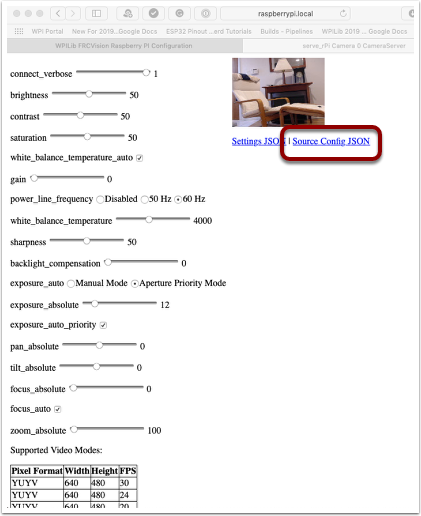

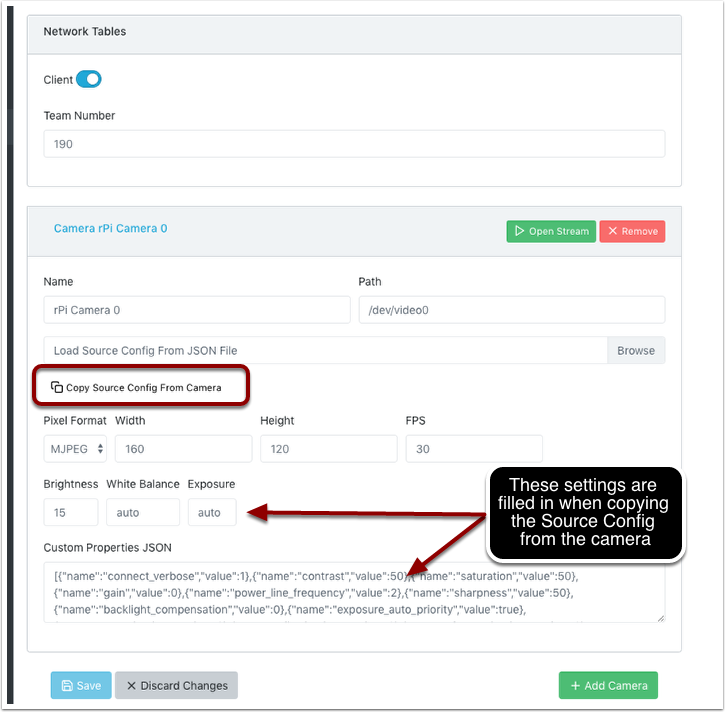

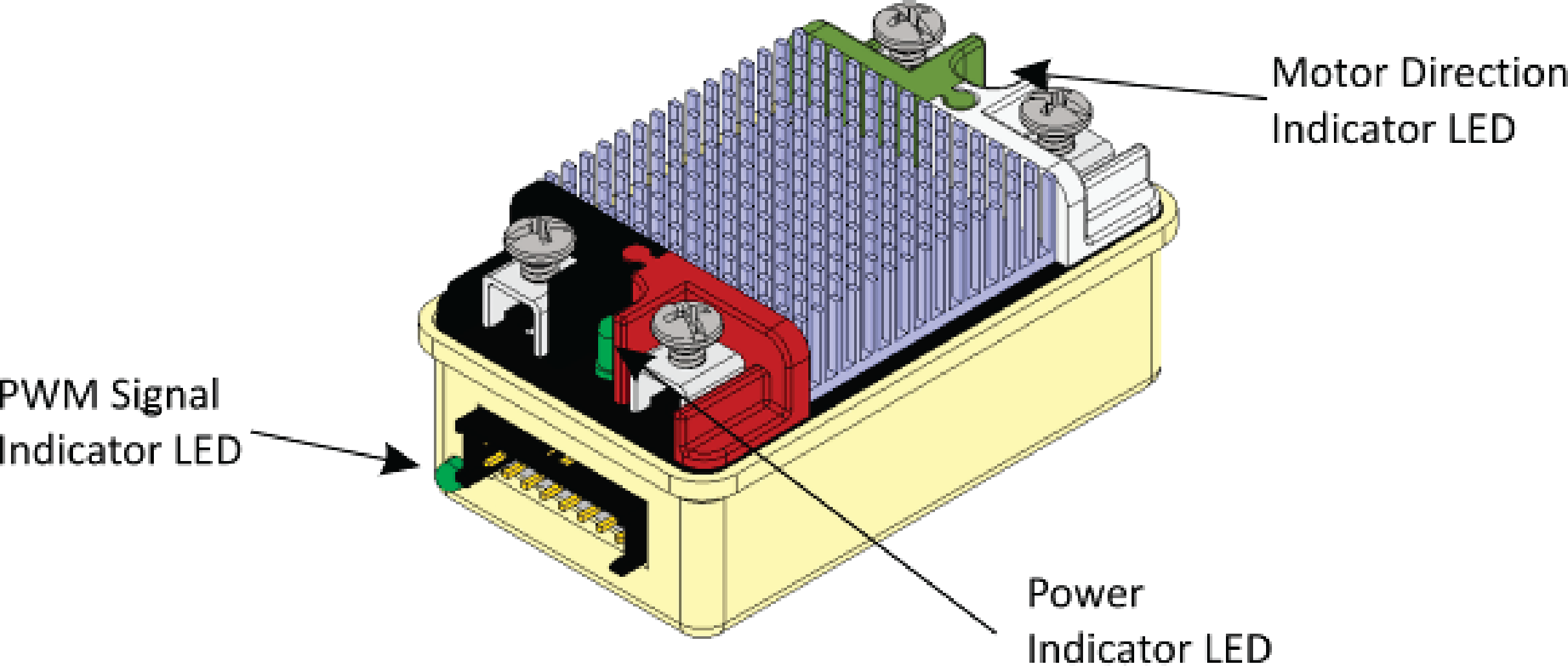

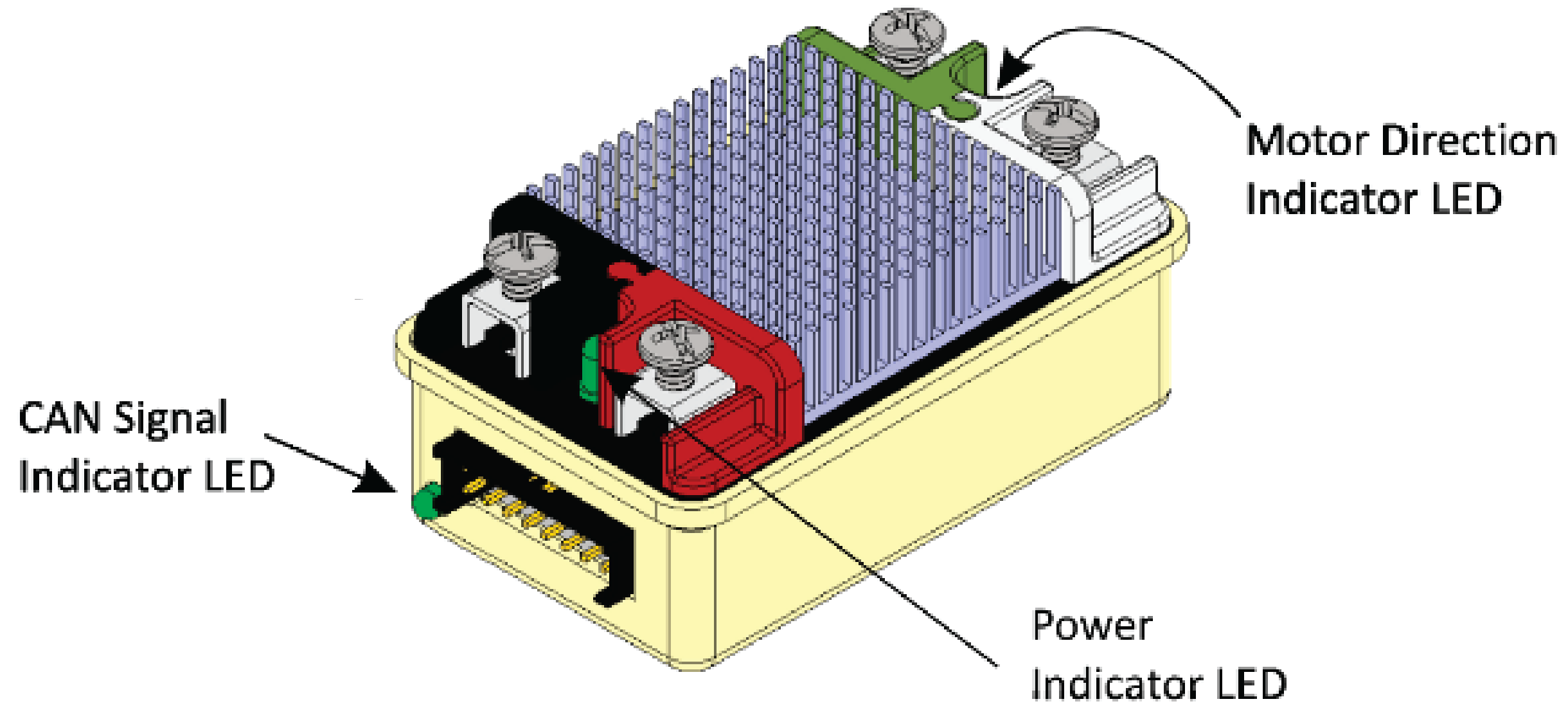

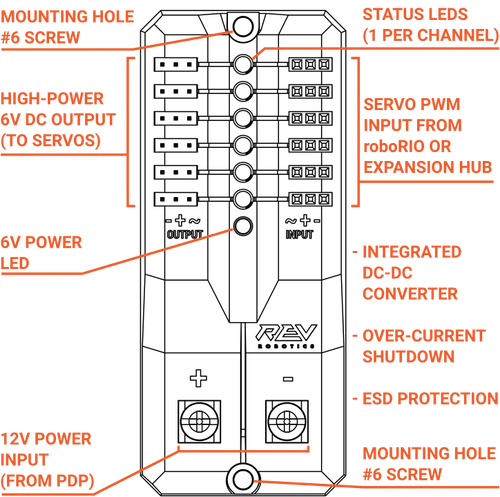

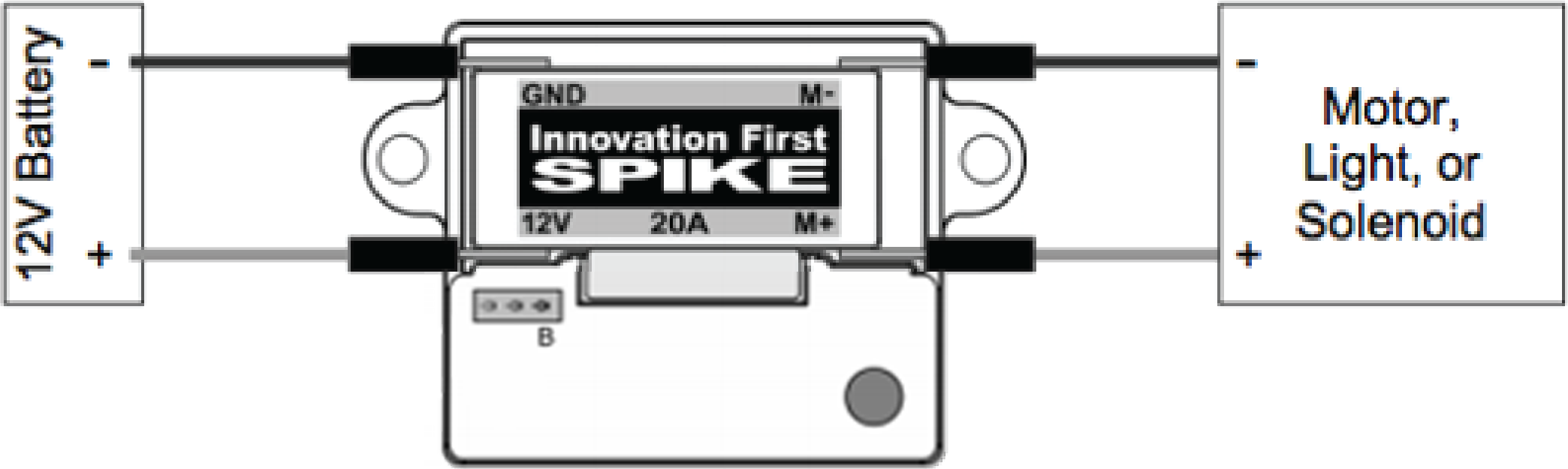

SPARK MAX Motor Controller